Is data preprocessing as important as they say? You might have heard the term “data preprocessing” being thrown around in discussions about data analysis and machine learning. But what exactly is it, and why is it so crucial? Let’s dive in and uncover the answers!

Imagine you’re baking a cake. Before you start mixing ingredients, you need to wash, peel, and chop them. Data preprocessing is like preparing those ingredients. It involves transforming raw data into a clean and organized format that machines can understand and analyze effectively.

Data preprocessing plays a vital role in obtaining accurate and reliable insights from your data. It helps to eliminate inconsistencies, errors, and missing values that can skew your results. By cleaning, normalizing, and transforming data, you ensure that the subsequent analysis and modeling steps are based on high-quality information.

But that’s not all! Data preprocessing also helps to enhance the performance and efficiency of machine learning models. By standardizing data ranges, reducing noise, and handling categorical variables, you set the stage for more accurate predictions and better decision-making.

So, whether you’re a data analyst, a machine learning enthusiast, or simply curious about the world of data, understanding the importance of data preprocessing is key to unlocking the full potential of your data analysis endeavors. Get ready to embark on an exciting journey of discovery and optimization!

Data preprocessing plays a crucial role in extracting meaningful insights from raw data. By cleaning, transforming, and organizing the data, preprocessing enhances the accuracy and reliability of machine learning models. With properly preprocessed data, models can make more accurate predictions and produce better results. Additionally, data preprocessing helps eliminate inconsistencies, missing values, and outliers, ensuring the quality of the data. Overall, data preprocessing is as important as experts claim, as it lays the foundation for successful data analysis and modeling.

Contents

- Is Data Preprocessing as Important as They Say?

- Benefits of Data Preprocessing

- Data Preprocessing Tips

- Conclusion

- Key Takeaways: Is Data Preprocessing as Important as They Say?

- Frequently Asked Questions

  - 1. What is data preprocessing and why is it necessary?

  - 2. What are some common techniques used in data preprocessing?

  - 3. Does data preprocessing affect the performance of machine learning models?

  - 4. Is data preprocessing always necessary for every dataset?

  - 5. What are the potential challenges of data preprocessing?

- Summary

Is Data Preprocessing as Important as They Say?

Data preprocessing is a crucial step in data analysis and machine learning. It involves cleaning, transforming, and organizing raw data to make it suitable for further analysis. While some may wonder about the significance of data preprocessing, it is essential to understand that the quality of the data directly impacts the accuracy and reliability of the results obtained. In this article, we will explore the reasons why data preprocessing is indeed as important as experts claim it to be.

1. Enhancing Data Quality

Data preprocessing plays a vital role in enhancing the quality of the data. Raw data often contains errors, inconsistencies, missing values, and outliers, which can lead to biased or inaccurate results. By applying preprocessing techniques such as data cleaning, missing value imputation, and outlier detection and handling, we can ensure a higher quality of data. This, in turn, improves the robustness and reliability of the subsequent analysis or machine learning models built upon the preprocessed data.

Data cleaning involves techniques like removing duplicate entries, correcting typographical errors, and handling inconsistent data formats. Missing value imputation aims to fill in the gaps left by incomplete data, ensuring that the analysis considers all available information. Outlier detection and handling techniques help identify and address data points that deviate significantly from the expected values, preventing them from unduly influencing the analysis.
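The cleaning steps described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the field names (`name`, `signup`), the two accepted date formats, and the helper `clean_records` are hypothetical and would need adapting to a real dataset.

```python
from datetime import datetime

# Assumed input date formats; real data may need more (or different) ones.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def clean_records(records):
    """Normalize names and dates, then drop records that become duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        # Collapse repeated whitespace and use consistent capitalization.
        name = " ".join(rec["name"].split()).title()
        parsed = None
        for fmt in DATE_FORMATS:
            try:
                parsed = datetime.strptime(rec["signup"].strip(), fmt).date()
                break
            except ValueError:
                continue  # try the next known format
        key = (name, parsed)
        if key in seen:
            continue  # same person and date once formats are normalized
        seen.add(key)
        cleaned.append({"name": name,
                        "signup": parsed.isoformat() if parsed else None})
    return cleaned

raw = [
    {"name": "  alice  smith", "signup": "2023-01-05"},
    {"name": "Alice Smith", "signup": "05/01/2023"},
    {"name": "BOB JONES", "signup": "2023-02-10"},
]
cleaned = clean_records(raw)
```

Note that the first two records only turn out to be duplicates after their formats are normalized, which is why normalization runs before deduplication in this sketch.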

By addressing these issues and ensuring data quality through preprocessing, we can reduce the risk of incorrect conclusions or faulty predictions, leading to more trustworthy and reliable insights.

2. Improving Data Compatibility

When working with real-world datasets, it is common to encounter multiple sources of data with varying formats, structures, and scales. Data preprocessing helps ensure that these diverse datasets can be effectively integrated and analyzed together. By standardizing data formats, resolving inconsistencies, and normalizing data scales, data preprocessing improves data compatibility. This compatibility is essential for performing meaningful analyses, comparisons, and aggregations.

For instance, if we have data from different sources with different measurement units or scales (e.g., temperature in Celsius vs. Fahrenheit), preprocessing can be used to convert all data to a standardized format (e.g., Celsius). This enables accurate comparisons and eliminates any potential biases introduced by differences in measurement units. Similarly, standardizing categorical variables, such as converting different ways of representing gender (e.g., “Male,” “M,” “1”) into a single format (e.g., “Male”), ensures consistency and comparability across the dataset.
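As a rough sketch of the two conversions just described, the snippet below converts temperatures to Celsius and maps different gender spellings to one label. The mapping table is an assumption for illustration (in particular, treating "2" as "Female" is a guess about one possible coding scheme), and the function names are hypothetical.

```python
# Assumed mapping; the codes used in a real dataset must be checked first.
GENDER_MAP = {
    "male": "Male", "m": "Male", "1": "Male",
    "female": "Female", "f": "Female", "2": "Female",
}

def to_celsius(value, unit):
    """Convert a temperature reading to Celsius."""
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32) * 5 / 9
    raise ValueError(f"unknown temperature unit: {unit!r}")

def standardize_gender(raw):
    """Map the known spellings to one label; anything else becomes 'Unknown'."""
    return GENDER_MAP.get(str(raw).strip().lower(), "Unknown")

readings = [(212, "F"), (100, "C"), (32, "F")]
celsius = [to_celsius(v, u) for v, u in readings]
genders = [standardize_gender(g) for g in ["Male", " M ", 1, "n/a"]]
```

Returning "Unknown" for unrecognized values, rather than guessing, keeps unexpected codes visible so they can be reviewed later.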

Data preprocessing techniques like feature scaling and normalization can also be applied to ensure that variables with different ranges or units are put on a common scale. This allows for fair and accurate comparisons between variables during analysis or modeling.
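To make the idea of a common scale concrete, here is a minimal sketch of the two usual approaches: min-max normalization (rescaling to the range 0 to 1) and standardization (rescaling to zero mean and unit standard deviation). The function names and the sample `incomes` values are made up for illustration.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

incomes = [20000, 40000, 60000, 80000, 100000]
normalized = min_max_scale(incomes)
z_scores = standardize(incomes)
```

Min-max scaling is sensitive to extreme values because they define the range, so standardization is often the safer default when outliers are present.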

3. Enhancing Model Performance

Data preprocessing plays a significant role in improving the performance of machine learning models. Preprocessing techniques like feature selection, dimensionality reduction, and data transformation help in reducing noise, eliminating irrelevant or redundant features, and creating more informative representations of the data.

Feature selection techniques identify the most relevant and informative features for the predictive task at hand, reducing the dimensionality of the data and simplifying the model. This speeds up the training process, reduces the risk of overfitting, and improves the generalizability and interpretability of the model.
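One very simple form of feature selection is a redundancy filter: drop columns that never vary, then drop any column that is almost perfectly correlated with one already kept. The sketch below is a hedged illustration of that idea only. It does not rank features by relevance to a prediction target, and the 0.95 threshold, the column names, and the helper names are all assumptions.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length, non-constant lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)

def select_features(columns, corr_threshold=0.95):
    """Return the names of columns that are neither constant nor redundant."""
    kept = {}
    for name, values in columns.items():
        if max(values) == min(values):
            continue  # constant column carries no information
        if any(abs(pearson(values, other)) > corr_threshold
               for other in kept.values()):
            continue  # nearly duplicates a column we already kept
        kept[name] = values
    return list(kept)

columns = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],  # same information as height_cm
    "country_code": [1, 1, 1, 1, 1],              # never varies
    "age": [34, 21, 45, 29, 52],
}
selected = select_features(columns)
```

Because the filter keeps whichever correlated column it sees first, the order of the columns affects which of two redundant features survives.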

Dimensionality reduction techniques, such as principal component analysis (PCA), transform the data into a lower-dimensional space while preserving as much of its variance as possible. This reduces computational cost and also makes the data easier to visualize and understand.

Data transformation techniques, like scaling and normalization, are used to ensure that input variables are appropriately scaled and distributed. This can lead to improved model convergence, faster training times, and more accurate predictions.

4. Handling Missing Values

Missing values are a common occurrence in real-world datasets and can pose challenges during analysis. Data preprocessing techniques include various methods to handle missing values and ensure that they do not adversely affect the analysis.

One approach is to remove data instances or variables with missing values. However, this can lead to a loss of valuable information and may introduce bias. Alternatively, missing values can be imputed, i.e., estimated or filled in with reasonable values based on the other available data. Different imputation techniques, such as mean imputation, regression imputation, and multiple imputation, can be employed to handle missing values effectively.
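The two basic options mentioned above, dropping incomplete rows and mean imputation, might look like this in plain Python. Missing values are represented as `None` here, which is an assumption; the function names and sample data are illustrative only.

```python
from statistics import mean

def drop_missing(rows):
    """Listwise deletion: keep only rows with no missing (None) fields."""
    return [row for row in rows if all(v is not None for v in row)]

def impute_mean(values):
    """Replace missing (None) entries with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]

ages = [20, None, 30, 40, None]
imputed_ages = impute_mean(ages)

rows = [(20, "A"), (None, "B"), (30, None), (40, "C")]
complete_rows = drop_missing(rows)
```

Mean imputation keeps every row but shrinks the column's variance, since all filled-in values sit exactly at the mean. That trade-off is one reason regression or multiple imputation is sometimes preferred.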

By addressing missing values through preprocessing, we can make better use of the available data and avoid potential biases or information loss in the analysis.

5. Addressing Outliers

Outliers are observations that deviate significantly from the majority of the data points and can have a considerable impact on the analysis results. Data preprocessing techniques allow us to handle outliers effectively, ensuring that they do not unduly influence the analysis or skew the results.

One approach is to remove outliers from the dataset. This can be done based on statistical measures such as the z-score, which measures the number of standard deviations an observation deviates from the mean. Another method is to replace outliers with more reasonable or plausible values through techniques like winsorization (replacing extreme values with values at a certain percentile).
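Both ideas can be sketched with the standard library. The cutoff of 3 standard deviations is a common convention rather than a rule, and the `winsorize` helper below uses a simple rank-based definition (clip the lowest and highest `pct` percent of values to their nearest remaining neighbors). Both choices, like the sample readings, are assumptions for illustration.

```python
from statistics import mean, pstdev

def zscore_outliers(values, threshold=3.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]

def winsorize(values, pct=5):
    """Clip the lowest and highest `pct` percent of values (pct < 50)."""
    ordered = sorted(values)
    n = len(ordered)
    k = n * pct // 100  # number of values to clip at each end
    lo, hi = ordered[k], ordered[n - 1 - k]
    return [min(max(v, lo), hi) for v in values]

readings = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11,
            12, 10, 11, 13, 12, 11, 10, 12, 11, 200]
flagged = zscore_outliers(readings)
clipped = winsorize(readings)
```

One caveat: a single extreme value inflates both the mean and the standard deviation, so in very small samples the z-score of even a wild outlier may stay below the threshold.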

By addressing outliers through preprocessing, we ensure that the subsequent analysis or modeling is not unduly influenced by extreme or unusual data points.

6. Dealing with Imbalanced Datasets

Many real-world datasets are imbalanced, meaning that some classes or categories are significantly underrepresented compared to others. This can lead to biased predictions and unfair model performance. Data preprocessing techniques can be used to address this issue and ensure fair and accurate analysis.

One technique is oversampling, where minority-class examples are replicated or synthesized to make the class distribution more balanced. SMOTE (Synthetic Minority Over-sampling Technique) is a widely used variant that generates synthetic minority samples by interpolating between existing ones. Another approach is undersampling, where data from the majority class is randomly removed to achieve a balanced distribution. In either case, resampling should be applied only to the training data, so that evaluation still reflects the real class distribution.
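The simplest version of oversampling, random duplication of minority-class rows, can be sketched as follows. This is not SMOTE, which synthesizes new points instead of copying existing ones. The function name, the fixed seed, and the toy data are assumptions for illustration.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority rows until every class is as large as the biggest."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, count in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))
            out_labels.append(cls)
    return out_rows, out_labels

rows = [[1], [2], [3], [4], [5], [6]]
labels = ["neg", "neg", "neg", "neg", "neg", "pos"]
balanced_rows, balanced_labels = random_oversample(rows, labels)
```

Duplicated rows add no new information, so a model can overfit to them. Resampling is therefore usually limited to the training split.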

By preprocessing imbalanced datasets, we can avoid biases in the results and enable the model to learn patterns and make predictions that are equally applicable to all classes.

7. Ensuring Data Privacy and Security

Data preprocessing also plays a critical role in ensuring data privacy and security. While analyzing or sharing data, it is essential to protect sensitive information and comply with privacy regulations. Preprocessing techniques like data anonymization, aggregation, and encryption can be employed to mitigate privacy risks.

Data anonymization involves removing or obfuscating personally identifiable information (PII) from the dataset, making it much harder to link the data back to specific individuals. Re-identification can still be possible when the remaining fields are combined with other data, so anonymization reduces the risk rather than eliminating it. Aggregation techniques can be used to summarize data at higher levels, ensuring that individual-level details are not disclosed. Encryption methods can be applied to protect data during storage or transmission, preventing unauthorized access.
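As a rough illustration, the sketch below replaces an identifier with a keyed hash and reports only group-level averages. Strictly speaking, keyed hashing is pseudonymization, not full anonymization: anyone holding the key can recompute the mapping. The secret key, field names, and helper names are hypothetical, and a real deployment would need proper key management and a review against the applicable regulations.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (truncated for readability)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def aggregate_mean(records, group_key, value_key):
    """Report only the per-group mean, hiding individual-level values."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[group_key]].append(rec[value_key])
    return {group: mean(vals) for group, vals in groups.items()}

SECRET_KEY = b"example-key-do-not-reuse"  # placeholder; store real keys securely

records = [
    {"email": "a@example.com", "city": "Oslo", "spend": 100},
    {"email": "b@example.com", "city": "Oslo", "spend": 300},
    {"email": "c@example.com", "city": "Lima", "spend": 50},
]
# Drop the raw email and keep only its pseudonym.
safe_records = [
    {"user": pseudonymize(r["email"], SECRET_KEY), "city": r["city"], "spend": r["spend"]}
    for r in records
]
city_spend = aggregate_mean(safe_records, "city", "spend")
```

Note that the "Lima" group contains a single person, so its average exposes that individual's value. Aggregation protects privacy only when groups are large enough.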

By incorporating privacy-enhancing techniques during data preprocessing, we can maintain data security, build trust among data providers, and ensure compliance with privacy regulations.

Benefits of Data Preprocessing

Data preprocessing offers numerous benefits, some of which include:

- Improved data quality and reliability

- Enhanced compatibility and integration of diverse data sources

- Better performance of machine learning models

- Reduced bias and improved fairness in analysis or predictions

- Protection of data privacy and compliance with regulations

- More accurate and meaningful insights

- Efficient and effective data analysis

Data Preprocessing Tips

Here are a few tips to keep in mind when performing data preprocessing:

- Understand the characteristics and limitations of your dataset.

- Document all preprocessing steps to ensure reproducibility.

- Handle missing values carefully, choosing appropriate imputation techniques.

- Be mindful of the potential impact of outliers and choose suitable handling techniques.

- Consider the scalability and computational resources required for preprocessing large datasets.

- Regularly validate and evaluate the effectiveness of preprocessing techniques for your specific analysis.

Conclusion

Data preprocessing is indeed as important as experts claim it to be. It affects data quality, compatibility, and model performance, and it covers handling missing values and outliers, addressing imbalanced datasets, and maintaining data privacy and security. By investing time and effort into data preprocessing techniques, analysts and data scientists can ensure more accurate, reliable, and meaningful results from their data analysis and machine learning endeavors.

Key Takeaways: Is Data Preprocessing as Important as They Say?

- Data preprocessing is crucial for successful data analysis.

- It helps to clean and transform raw data into a usable format.

- Data preprocessing reduces errors and inconsistencies in the data.

- It improves the accuracy and reliability of machine learning models.

- By addressing missing values and outliers, data preprocessing enhances the overall quality of the data.

Frequently Asked Questions

When it comes to data preprocessing, there’s often a lot of talk about its importance. But is it really as crucial as they say? We’ve got the answers to your burning questions about data preprocessing right here.

1. What is data preprocessing and why is it necessary?

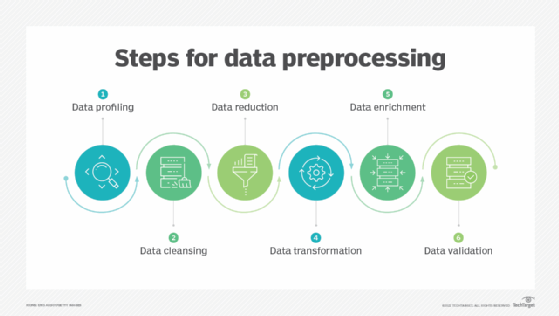

Data preprocessing is the process of transforming raw data into a format that is suitable for analysis. It involves cleaning the data, handling missing values, scaling numerical features, encoding categorical variables, and more. Data preprocessing is necessary because raw data is often messy and contains inconsistencies that can negatively impact the quality of analysis and machine learning models.

Without proper preprocessing, the results obtained from data analysis may be inaccurate or misleading. Data preprocessing helps to ensure that the data is reliable, consistent, and ready for analysis, enabling better decision-making and more accurate predictions.

2. What are some common techniques used in data preprocessing?

There are several techniques commonly used in data preprocessing. Some of the most common ones include:

1. Data cleaning: Removing or handling missing values, outliers, and irrelevant data.

2. Feature scaling: Putting numerical features on a comparable scale, for example by normalization (rescaling to a fixed range such as 0 to 1) or standardization (rescaling to zero mean and unit variance).

3. Encoding categorical variables: Converting categorical variables into numerical representations.

4. Data transformation: Applying mathematical transformations to the data to improve its distribution and meet modeling assumptions.

These techniques, along with others, help to improve the quality and reliability of the data, ensuring more accurate analysis and modeling results.
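Two of the techniques in the list above, encoding categorical variables and applying a mathematical transformation, can be sketched with the standard library alone. One-hot encoding and a log transform are just one common choice for each. The function names and sample values are illustrative assumptions.

```python
import math

def one_hot(values):
    """Return the sorted category list and one 0/1 indicator row per input value."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows

def log_transform(values):
    """Compress a right-skewed, non-negative feature using log(1 + x)."""
    return [math.log1p(v) for v in values]

colors = ["red", "green", "red", "blue"]
categories, encoded = one_hot(colors)

incomes = [0, 9, 99, 999]
logged = log_transform(incomes)
```

Using `log1p` instead of a plain logarithm keeps zero values valid, since log(1 + 0) is 0 while log(0) is undefined.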

3. Does data preprocessing affect the performance of machine learning models?

Absolutely! Data preprocessing has a significant impact on the performance of machine learning models. When the data is preprocessed correctly, it can lead to improved model performance and more accurate predictions.

By cleaning the data, handling missing values, and normalizing or standardizing features, the model can learn patterns and relationships more effectively. Preprocessing techniques like feature selection and dimensionality reduction can also improve model performance by reducing the complexity of the data and eliminating irrelevant or redundant features. This in turn lowers the risk of overfitting, where the model performs well on the training data but fails to generalize to new, unseen data.

4. Is data preprocessing always necessary for every dataset?

In most cases, yes. Data preprocessing is necessary for most datasets to ensure data quality, consistency, and accurate analysis. However, there may be situations where the data is already clean and well-structured, reducing the need for extensive preprocessing.

It’s important to assess the quality of the data and the specific requirements of the analysis or modeling task. If the data is reliable, consistent, and ready for analysis, minimal preprocessing may be required. However, it’s always a good practice to at least perform some basic checks, such as looking for missing values and, for models that are sensitive to feature scale, scaling features, to improve the robustness and accuracy of the analysis.

5. What are the potential challenges of data preprocessing?

Data preprocessing can come with its fair share of challenges. Some common challenges include:

1. Data quality: Dealing with missing values, outliers, and noisy data.

2. Data scalability: Handling large datasets that may require significant computational resources.

3. Choosing the right techniques: Selecting the most appropriate preprocessing techniques for the specific dataset and analysis goal.

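4. Data leakage and overfitting: Ensuring that preprocessing steps do not unintentionally introduce bias, for example by fitting a scaler or imputer on the full dataset instead of the training split alone, which leaks test-set information into the model.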

By being aware of these challenges and utilizing the right techniques and tools, these obstacles can be overcome, leading to more accurate and reliable data preprocessing results.
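One practical safeguard against the last challenge is to learn preprocessing parameters from the training split only and then reuse them unchanged on the test split. The sketch below shows the pattern with a hand-written scaler. The class name `StandardScalerSketch` and the sample data are hypothetical and not taken from any specific library.

```python
from statistics import mean, pstdev

class StandardScalerSketch:
    """Minimal standardizer: parameters are learned in fit() and reused in transform()."""

    def fit(self, values):
        self.mean_ = mean(values)
        self.std_ = pstdev(values) or 1.0  # avoid dividing by zero on constant data
        return self

    def transform(self, values):
        return [(v - self.mean_) / self.std_ for v in values]

train = [10.0, 12.0, 14.0, 16.0]
test = [18.0, 40.0]

# Fit on the training split only, then apply the same parameters to both splits.
scaler = StandardScalerSketch().fit(train)
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)
```

Fitting on the combined data would let the test values shift the mean and standard deviation, so the model would indirectly see information it is later evaluated on.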

Summary

Data preprocessing is really important! It helps make our data clean and ready for analysis.

By handling missing values and outliers and removing redundant information, we can get better results.

It also involves scaling and transforming our data so that everything is on the same scale.

Without preprocessing, our analysis may be inaccurate or biased, and our models may not work well.

So, remember to preprocess your data before diving into analysis!