Calling all curious minds! Today, we’re diving into the fascinating world of feature engineering. What’s the lowdown, you ask? Well, buckle up and get ready to uncover the secrets behind this powerful technique in the field of data science.

You might be wondering, “What’s the big deal about feature engineering?” Well, my friend, it’s like adding a secret ingredient to a recipe that takes it from good to mind-blowingly delicious. In the world of data analysis, feature engineering is all about creating new, meaningful features from existing data, giving our machine learning models the extra edge they need to make accurate predictions.

Now, you might be thinking, “But why do we need to engineer features? Can’t the models figure it out on their own?” Ah, an excellent question! You see, sometimes our raw data needs a little makeover. By transforming and selecting the right variables, we can highlight important patterns, remove noise, and enhance our models’ predictive powers. It’s like giving them a pair of super cool sunglasses to decipher hidden trends.

So, whether you’re a budding data scientist or just someone curious about the workings of machine learning, join us on this feature engineering adventure. Get ready to unlock the full potential of your data and unleash the magic of machine learning like never before! Let’s dive in!

Feature engineering is an essential aspect of data analysis. It involves transforming raw data into meaningful features that can improve the performance of machine learning models. By identifying and selecting the right features, you can enhance predictive accuracy and reduce overfitting. Through techniques like feature scaling, one-hot encoding, and dimensionality reduction, feature engineering empowers you to extract valuable insights from data. It’s a powerful tool in the data scientist’s arsenal, helping to uncover hidden patterns and drive better decision-making.

Contents

- What’s the Lowdown on Feature Engineering?

- Key Takeaways: What’s the lowdown on feature engineering?

- Frequently Asked Questions

- 1. What is feature engineering and why is it important?

- 2. What are some common techniques used in feature engineering?

- 3. How does feature engineering benefit machine learning models?

- 4. What are some challenges in feature engineering?

- 5. Are there any automated feature engineering tools available?


- Summary:

What’s the Lowdown on Feature Engineering?

Welcome to our in-depth article on feature engineering! In the ever-evolving world of data science and machine learning, feature engineering plays a critical role in extracting meaningful insights from raw data. From creating new variables to transforming existing ones, feature engineering is a powerful technique that can enhance the performance of predictive models. In this article, we’ll delve into the details of feature engineering, its benefits, techniques, and tips for success. So, let’s get started!

Understanding Feature Engineering

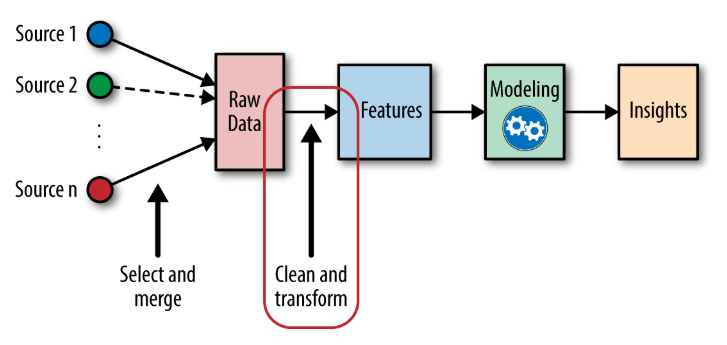

At its core, feature engineering involves creating, transforming, and selecting variables (also known as features) from the raw dataset to improve the performance of machine learning algorithms. It is an iterative process that requires domain knowledge, creativity, and a deep understanding of the data. The goal of feature engineering is to maximize the predictive power of the features while reducing noise and redundancy.

Feature engineering encompasses a wide range of techniques, including:

- Creating new features by combining existing ones

- Transforming variables to meet the assumptions of the model

- Selecting the most relevant features for the model

- Encoding categorical variables

- Normalizing or scaling features

By performing these techniques, data scientists can unlock hidden patterns and relationships within the data, leading to improved model performance and more accurate predictions.
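Here is a minimal sketch of the first technique in the list: deriving new features by combining existing ones. The customer records and column names (`total_spend`, `num_orders`, `days_active`) are hypothetical and only illustrate ratio- and rate-style features.

```python
# Hypothetical customer records; the column names are illustrative only.
customers = [
    {"total_spend": 1200.0, "num_orders": 10, "days_active": 300},
    {"total_spend": 450.0, "num_orders": 3, "days_active": 30},
    {"total_spend": 0.0, "num_orders": 0, "days_active": 5},
]


def add_combined_features(row):
    """Return a copy of `row` with two features derived from existing columns."""
    out = dict(row)
    # Ratio feature: average spend per order (0.0 when there are no orders yet).
    out["avg_order_value"] = (
        row["total_spend"] / row["num_orders"] if row["num_orders"] else 0.0
    )
    # Rate feature: orders per 30 days of activity, so new and long-standing
    # customers can be compared on the same footing.
    out["orders_per_month"] = row["num_orders"] * 30 / row["days_active"]
    return out


engineered = [add_combined_features(r) for r in customers]
for row in engineered:
    print(row["avg_order_value"], row["orders_per_month"])
```

No single raw column describes purchasing habits on its own. The derived ratios make that behavior explicit, which is the kind of domain-driven combination the list describes.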

The Benefits of Feature Engineering

Feature engineering offers several significant benefits in the field of data science. Let’s explore a few key advantages:

- Improved Model Performance: Feature engineering helps in creating meaningful variables that capture the underlying patterns in the data. By leveraging domain knowledge and data insights, engineers can develop features that are highly predictive, resulting in improved model accuracy and performance.

- Reduced Overfitting: Overfitting occurs when a model becomes too complex or too specific to the training data, which leads to poor generalization on unseen data. Feature selection and dimensionality reduction shrink the number of inputs the model can use to fit noise, and this can improve its ability to generalize. (Regularization works toward the same goal, but it constrains the model itself rather than its features.)

- Increased Interpretability: Feature engineering can also enhance the interpretability of machine learning models. By transforming or creating variables that align with domain knowledge, data scientists can provide meaningful insights into, and explanations of, the model's decision-making process.

The Techniques of Feature Engineering

Feature engineering involves a repertoire of techniques that can be applied to different types of data and modeling problems. Let’s explore some essential techniques:

1. Missing Value Imputation

Missing values are a common challenge in real-world datasets. Imputing missing values with appropriate techniques such as mean/median imputation, mode imputation, or regression imputation is a crucial step in feature engineering. Missing value imputation ensures that the data remains complete and usable for subsequent analysis and modeling.
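The following Python sketch implements mean, median, and mode imputation using the standard `statistics` module. `None` represents a missing value, and the helper names `fit_imputer` and `impute` are our own. The fill value is learned once from training data and then reused on new data, so no information from evaluation data leaks into training. Regression imputation is not shown.

```python
from statistics import mean, median, mode


def fit_imputer(values, strategy="median"):
    """Learn a fill value from the observed (non-None) training values."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        return mean(observed)
    if strategy == "median":
        return median(observed)
    if strategy == "mode":  # also works for categorical values such as strings
        return mode(observed)
    raise ValueError(f"unknown strategy: {strategy!r}")


def impute(values, fill_value):
    """Replace every missing entry with the previously learned fill value."""
    return [fill_value if v is None else v for v in values]


train_ages = [25, None, 31, 47, None, 31]
fill = fit_imputer(train_ages, "median")  # median of 25, 31, 31, 47 -> 31.0
print(impute(train_ages, fill))           # [25, 31.0, 31, 47, 31.0, 31]
print(impute([None, 52], fill))           # new data reuses the training median

cities = ["Paris", None, "Lyon", "Paris"]
print(impute(cities, fit_imputer(cities, "mode")))  # ['Paris', 'Paris', 'Lyon', 'Paris']
```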

2. Binning

Binning involves grouping continuous variables into discrete bins or intervals. This technique can help capture non-linear relationships and make the model more resilient to outliers. Binning can be performed using equally spaced intervals (e.g., age ranges) or based on data distribution (e.g., quartiles).
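The two binning strategies can be sketched in plain Python. Equal-width bins divide the observed range into intervals of the same size, while quantile bins place roughly the same number of values in each bin. The function names are our own. `statistics.quantiles` and `bisect.bisect_right` come from the standard library, and as with imputation, the cut points should be learned from training data only.

```python
from bisect import bisect_right
from statistics import quantiles


def equal_width_edges(values, k):
    """Return the k - 1 inner cut points of k equally wide intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    return [lo + width * i for i in range(1, k)]


def quantile_edges(values, k):
    """Return cut points that put roughly the same number of values in each bin."""
    return quantiles(values, n=k, method="inclusive")


def assign_bins(values, edges):
    """Map each value to a bin index 0..len(edges); a value on an edge goes up."""
    return [bisect_right(edges, v) for v in values]


ages = [20, 25, 30, 45, 60, 80]
width_edges = equal_width_edges(ages, 3)   # [40.0, 60.0]
quartile_edges = quantile_edges(ages, 4)   # [26.25, 37.5, 56.25]
print(assign_bins(ages, width_edges))      # [0, 0, 0, 1, 2, 2]
print(assign_bins(ages, quartile_edges))   # [0, 0, 1, 2, 3, 3]
```

With skewed data, equal-width bins can leave some intervals nearly empty. Quantile bins keep the counts balanced, at the cost of intervals with uneven widths.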

3. One-Hot Encoding

One-hot encoding converts a categorical variable into binary (0/1) columns. Each category becomes a separate indicator feature, which lets the model process categorical information numerically. Label encoding maps categories to arbitrary integers (e.g., blue = 0, green = 1, red = 2). One-hot encoding, by contrast, does not impose a spurious order or distance between categories, which could mislead models that treat their inputs as numeric magnitudes.
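A minimal one-hot encoder fits in a few lines of plain Python. The helper names are our own. Mapping an unseen category to an all-zeros row is one common design choice, assumed here for illustration. The last lines show label encoding for contrast.

```python
def fit_categories(values):
    """Collect the category vocabulary in sorted order so the columns are deterministic."""
    return sorted(set(values))


def one_hot(value, categories):
    """Return one 0/1 indicator per known category; an unseen value maps to all zeros."""
    return [1 if value == c else 0 for c in categories]


colors = ["red", "green", "blue", "green"]
categories = fit_categories(colors)  # ['blue', 'green', 'red']
encoded = [one_hot(c, categories) for c in colors]
print(encoded)                       # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
print(one_hot("purple", categories)) # [0, 0, 0]

# Label encoding, for contrast: one integer column that implies blue < green < red.
label_codes = {c: i for i, c in enumerate(categories)}
print([label_codes[c] for c in colors])  # [2, 1, 0, 1]
```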

4. Feature Scaling

Feature scaling is crucial when the variables in the dataset have very different scales or units. Scaling techniques such as standardization (zero mean, unit variance) or min-max normalization (rescaling to the range 0 to 1) put all features on a comparable scale. This prevents variables with larger magnitudes from dominating distance computations and gradient updates, and it typically helps training converge faster. Tree-based models are a notable exception: their splits depend only on the ordering of values, so they are largely insensitive to scaling.
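Both scaling techniques are sketched below in plain Python. Each one learns its parameters from training values and then applies them to any data, the same fit-then-apply pattern used for imputation. The helper names and the guard for constant columns are our own choices. In the example, incomes and ages sit on very different scales before scaling and on identical ones afterwards.

```python
from statistics import mean, pstdev


def fit_standardizer(train):
    """Learn the mean and population standard deviation of the training values."""
    sigma = pstdev(train)
    return mean(train), (sigma if sigma > 0 else 1.0)  # guard against constant columns


def standardize(values, params):
    """Rescale to zero mean and unit variance using the training statistics."""
    mu, sigma = params
    return [(v - mu) / sigma for v in values]


def fit_min_max(train):
    """Learn the minimum and range of the training values."""
    lo, hi = min(train), max(train)
    return lo, ((hi - lo) if hi > lo else 1.0)


def min_max_scale(values, params):
    """Rescale so the training minimum maps to 0 and the training maximum maps to 1."""
    lo, span = params
    return [(v - lo) / span for v in values]


incomes = [20_000, 40_000, 60_000]
ages = [20, 40, 60]
print(min_max_scale(incomes, fit_min_max(incomes)))     # [0.0, 0.5, 1.0]
print(min_max_scale(ages, fit_min_max(ages)))           # [0.0, 0.5, 1.0]
print(standardize(incomes, fit_standardizer(incomes)))  # about [-1.22, 0.0, 1.22]
```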

5. Feature Extraction

Feature extraction involves transforming high-dimensional data into a lower-dimensional space. Techniques such as Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) are commonly used for extracting essential features that capture most of the information while reducing dimensionality. Feature extraction is particularly useful when dealing with datasets that have a large number of variables.
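PCA on many features is normally done with a linear-algebra library (for example, scikit-learn's `PCA` class). To show the idea without dependencies, the sketch below handles only the two-feature case, where the eigenvectors of the 2x2 covariance matrix have a closed form. It reduces two correlated features to a single component. The function name and the sample points are our own, and LDA, which also uses class labels, is not shown. In practice, standardize the features first so that a large-scale feature does not dominate the components.

```python
import math
from statistics import mean


def pca_first_component(points):
    """Project 2-D points onto their first principal component.

    Returns (direction, scores, explained_ratio), where `direction` is the unit
    eigenvector of the covariance matrix with the largest eigenvalue.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = mean(xs), mean(ys)
    n = len(points)
    # Sample covariance matrix [[a, b], [b, c]] of the centred data.
    a = sum((x - mx) ** 2 for x in xs) / (n - 1)
    c = sum((y - my) ** 2 for y in ys) / (n - 1)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (a + c) / 2
    root = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    lam1, lam2 = half_trace + root, half_trace - root
    # Eigenvector for lam1: (b, lam1 - a) solves (A - lam1 * I) v = 0 when b != 0.
    if b != 0:
        vx, vy = b, lam1 - a
    elif a >= c:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    direction = (vx / norm, vy / norm)
    # Scores: coordinate of each centred point along the principal direction.
    scores = [(x - mx) * direction[0] + (y - my) * direction[1] for x, y in zip(xs, ys)]
    total = lam1 + lam2
    explained = lam1 / total if total > 0 else 0.0
    return direction, scores, explained


# Two strongly correlated features collapse into one component with little loss.
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
direction, scores, explained = pca_first_component(points)
print(direction, explained)  # explained is above 0.99 for these points
```

The `scores` list is the new one-dimensional feature. `explained` reports the share of total variance it retains.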

Tips for Successful Feature Engineering

Mastering feature engineering requires practice, experimentation, and an in-depth understanding of the problem domain. Here are a few tips to help you excel in feature engineering:

- Begin with Exploratory Data Analysis: Thoroughly analyze the data to identify patterns, outliers, and relationships between variables. This exploration will guide your feature engineering decisions.

- Collaborate with Domain Experts: Seek guidance from subject matter experts to gain insights into the data and its underlying relationships. Their expertise will help you design meaningful features that align with the problem domain.

- Iterate and Validate: Experiment with different feature engineering techniques and evaluate their impact on the model’s performance using appropriate evaluation methods such as cross-validation. Continuously iterate and refine your feature engineering process based on the results.

- Keep Learning: Stay updated with the latest feature engineering techniques, tools, and research papers. Feature engineering is an evolving field, and continuous learning is essential to stay ahead.
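To put the "Iterate and Validate" tip into practice, the sketch below uses k-fold cross-validation to compare two candidate features, raw `x` and `log(x)`, each feeding a one-feature linear model. The data is synthetic and hypothetical, and the helper names are our own. The fixed transforms here learn nothing from the data. A transform with learned parameters (imputation, scaling, binning) would need to be fit on each training split separately to avoid leakage.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b with a single input feature."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, my - w * mx


def cv_mse(xs, ys, transform, k=5):
    """Mean held-out squared error of a one-feature linear model over k folds.

    Row i is held out in fold i % k. `transform` is a fixed per-value function.
    """
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        test = [i for i in range(n) if i % k == fold]
        w, b = fit_line([transform(xs[i]) for i in train], [ys[i] for i in train])
        sq = [(w * transform(xs[i]) + b - ys[i]) ** 2 for i in test]
        fold_errors.append(sum(sq) / len(sq))
    return sum(fold_errors) / k


# Hypothetical data with a logarithmic relationship between x and y.
xs = list(range(1, 41))
ys = [2 * math.log(x) + 1 for x in xs]

raw_score = cv_mse(xs, ys, transform=lambda x: x)
log_score = cv_mse(xs, ys, transform=math.log)
print(f"raw x: {raw_score:.4f}   log(x): {log_score:.2e}")
```

The lower held-out error is the evidence for keeping the engineered feature. On real data the gap will rarely be this clean.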

With these tips and techniques in mind, you now have a solid foundation for diving into the fascinating world of feature engineering. Remember, the key is to be curious, creative, and persistent in finding the best features to unlock the true potential of your data. Happy engineering!

Key Takeaways: What’s the lowdown on feature engineering?

- Feature engineering is the process of creating new variables or transforming existing ones to improve model performance.

- It involves analyzing and understanding the data to come up with relevant features that capture important patterns and relationships.

- Feature engineering helps in making machine learning models more effective and accurate.

- Techniques such as imputation, scaling, encoding, and creating interaction variables are commonly used in feature engineering.

- Proper feature engineering can significantly enhance the predictive power of machine learning models.

Frequently Asked Questions

Welcome to our Frequently Asked Questions about feature engineering! Here, we'll dive into the basics of what feature engineering is and why it's important in data science and machine learning. Read on to learn more!

1. What is feature engineering and why is it important?

Feature engineering is the process of selecting, transforming, and creating new features from existing data to improve the performance of machine learning models. It involves analyzing the dataset, identifying relevant variables, and creating new features that can better represent the underlying patterns and relationships in the data.

Feature engineering is crucial because the quality and relevance of the features used in a model directly impact its predictive power. Well-engineered features can help models make more accurate predictions, generalize better to new data, and be more robust against noise and outliers in the dataset. It can also help in reducing overfitting, which occurs when a model fits the training data too closely and performs poorly on unseen data.

2. What are some common techniques used in feature engineering?

There are several techniques used in feature engineering, including:

- Missing value imputation: Dealing with missing data by filling in the missing values using strategies such as mean, median, or mode imputation.

- Encoding categorical variables: Transforming categorical variables into numerical representations that can be understood by machine learning models, such as one-hot encoding or label encoding.

- Scaling and normalization: Rescaling numerical features to ensure they have a similar range or distribution, preventing certain features from dominating others.

- Feature construction: Creating new features by combining or transforming existing features, often using domain knowledge or statistical methods.

- Dimensionality reduction: Reducing the number of features while retaining most of the important information, typically achieved through techniques like principal component analysis (PCA).

These techniques, among others, help in effectively preparing the data for machine learning algorithms, improving model performance, and making better predictions.

3. How does feature engineering benefit machine learning models?

Feature engineering plays a crucial role in improving the performance of machine learning models in several ways:

Firstly, it helps in providing more relevant and informative input to the models. By analyzing the data and transforming or creating new features, feature engineering can capture hidden patterns, relationships, and nuances that can enhance the model’s understanding of the data.

Secondly, feature engineering helps reduce the complexity and noise in the dataset. By selecting or extracting the most meaningful features, it eliminates irrelevant or redundant information that would otherwise encourage overfitting and poor generalization.

Lastly, feature engineering enables models to handle different types of data and make better predictions. Through techniques like scaling, encoding, and transformation, it ensures that the data is in the appropriate format and range for the algorithms to process effectively.

4. What are some challenges in feature engineering?

Feature engineering can present several challenges, such as:

- Identifying relevant features: Determining which features are most relevant to the problem at hand can be a complex and time-consuming task, requiring domain knowledge and exploration of the data.

- Dealing with missing data: Handling missing values, whether they come from sparse or incomplete records, requires careful consideration and an appropriate imputation technique.

- Avoiding overfitting: Making sure that new features do not introduce bias or noise that leads to overfitting, where the model becomes too specific to the training data and performs poorly on unseen data.

- Managing high-dimensional data: Handling datasets with a large number of features can be challenging, as it can lead to computational inefficiency and potentially degrade model performance.

Overcoming these challenges requires a combination of data analysis, domain expertise, and creativity to engineer features that effectively represent the underlying patterns and relationships in the data.
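A simple way to approach the first and last challenges is a univariate filter. It scores each feature by its absolute Pearson correlation with the target and keeps those above a threshold. The sketch below does this in plain Python. The column names, data, and the 0.3 threshold are illustrative assumptions. A filter like this detects only linear, one-feature-at-a-time relationships, so it can drop features that matter only in combination with others.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either column is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column carries no linear signal
    return sxy / math.sqrt(sxx * syy)


def select_features(columns, target, min_abs_corr=0.3):
    """Keep features whose |correlation| with the target reaches the threshold,
    ordered from strongest to weakest."""
    scores = {name: abs(pearson(vals, target)) for name, vals in columns.items()}
    kept = [name for name, s in scores.items() if s >= min_abs_corr]
    return sorted(kept, key=lambda name: scores[name], reverse=True)


# Hypothetical housing data: one informative, one constant, one unrelated column.
columns = {
    "sqft": [800, 1000, 1200, 1500, 2000],
    "constant_flag": [1, 1, 1, 1, 1],
    "noise": [2, 5, 1, 4, 3],
}
price = [160, 200, 235, 300, 410]
print(select_features(columns, price))  # ['sqft']
```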

5. Are there any automated feature engineering tools available?

Yes, there are several automated feature engineering tools available that can assist in the feature engineering process. These tools use various algorithms and techniques to automatically generate relevant features from the data.

Some popular automated feature engineering tools include Featuretools (automated feature synthesis across related tables), tsfresh (feature extraction from time series), and autofeat (generation and selection of non-linear feature transformations). Depending on the tool, they can handle tasks such as imputing missing values, encoding categorical variables, creating new features, and more. While they provide a good starting point, domain expertise and manual feature engineering may still be required to fine-tune and improve the generated features for specific problems.


Summary:

Feature engineering is a way to make data better for machine learning. It involves creating new features from existing data to help models understand patterns. Feature engineering can help improve model accuracy and make predictions more valuable in real-world situations. It’s an important step in the machine learning process, and understanding it can lead to better results. So, if you want to take your machine learning skills to the next level, exploring feature engineering is definitely worth it!