Have you ever wondered how machine learning models are tuned to make accurate predictions? Well, a big part of the answer lies in the fascinating world of hyperparameters! So, what’s the role of hyperparameters in model tuning? Let’s find out!

Okay, let’s break it down in simpler terms. Imagine you’re baking a cake and have all the ingredients ready. But to make sure it turns out just right, you need to tweak a few things, like the baking time, the temperature, and the amount of sugar. These tweaks are like hyperparameters in machine learning.

Hyperparameters are like knobs or levers that we can adjust to fine-tune our models. They control how the model learns, and setting them well can noticeably improve its accuracy. Think of it as finding the perfect combination of these settings to create the best possible model.

Now that you have a basic understanding of hyperparameters, get ready to dive deeper into this exciting topic. We’ll explore different types of hyperparameters and how they impact model performance. So, let’s unlock the secrets behind hyperparameters and unleash the true potential of machine learning!

Understanding the Role of Hyperparameters in Model Tuning

When it comes to machine learning models, the performance and accuracy of the final output depend greatly on the choices made during the model building process. One critical aspect of this process is tuning the hyperparameters. Hyperparameters are parameters that are set prior to the start of the learning process and affect the behavior of the model during training. In this article, we will explore the role of hyperparameters in model tuning and understand their significance in achieving optimal results.

What are Hyperparameters?

Hyperparameters are configuration settings that determine how a machine learning model learns and generalizes from the training data. They are different from the model parameters, such as the weights of a neural network, which are learned from the data during the training process. The training algorithm does not learn hyperparameters itself; instead, they are set before training begins. These settings influence the learning algorithm’s behavior and directly impact the model’s performance.

Some common examples of hyperparameters in machine learning algorithms include the learning rate, the number of hidden layers in a neural network, the regularization parameter, and the number of decision trees in a random forest. Each hyperparameter controls a specific aspect of the learning process, such as the speed of convergence, the complexity of the model, or the level of regularization applied. The choice of hyperparameter values greatly affects the model’s performance, and finding the right combination can be crucial for achieving optimal results.
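
To make the split between parameters and hyperparameters concrete, here is a minimal plain-Python sketch that fits a straight line with gradient descent. The function name `fit_line` and the toy data are illustrative assumptions: `learning_rate` and `epochs` are hyperparameters we choose before training, while the slope `w` and intercept `b` are parameters the training loop learns from the data.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b with batch gradient descent on the mean squared error.

    learning_rate and epochs are hyperparameters: we pick them before training.
    w and b are model parameters: training learns them from the data.
    """
    w, b = 0.0, 0.0  # parameters start from an arbitrary initial guess
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current line, then gradients of the MSE w.r.t. w and b
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Gradient-descent update, scaled by the learning-rate hyperparameter
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")
```

With these settings the loop should recover values very close to w = 2 and b = 1. Change `learning_rate` or `epochs` and the learned parameters change with them, even though the data stays the same.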

The Impact of Hyperparameters on Model Performance

Hyperparameters play a crucial role in determining the performance of a machine learning model. Setting them poorly can lead to suboptimal results, such as overfitting or underfitting the data. Overfitting occurs when the model becomes too complex and fits the training data so closely, noise included, that it fails to generalize well to new, unseen data. Underfitting, on the other hand, occurs when the model is too simple to capture the underlying patterns in the data.

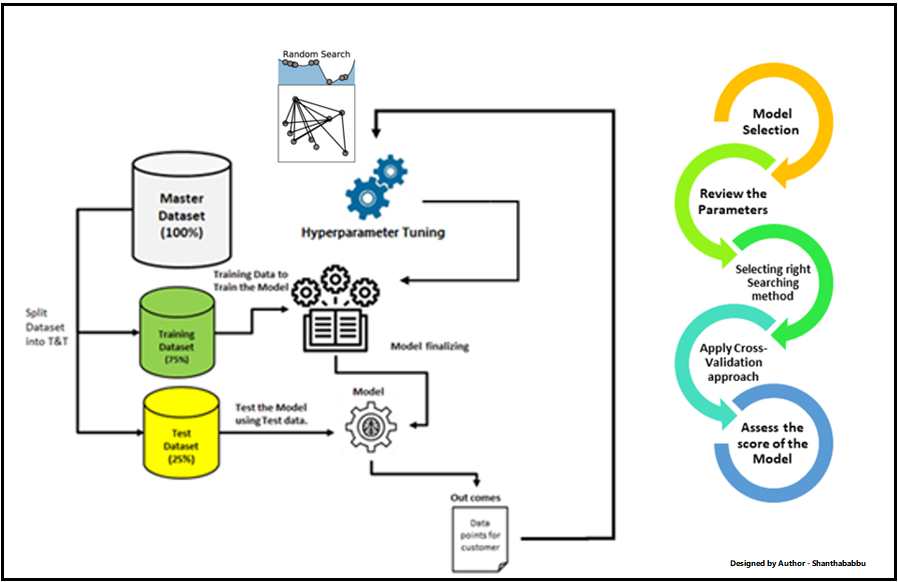

By tuning the hyperparameters, we aim to find the right balance between underfitting and overfitting. This involves adjusting the hyperparameter values to optimize the model’s performance on a validation dataset. The process of tuning hyperparameters is often iterative, involving multiple experiments and evaluations of different parameter settings. It requires a deep understanding of the algorithm and the problem at hand, as well as careful analysis of the model’s performance metrics.
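
The sketch below illustrates both failure modes and validation-based selection using k-nearest-neighbours regression, where k (the number of neighbours averaged) is the hyperparameter. Everything here is an assumption for illustration: the toy data (y = x squared plus Gaussian noise), the candidate values of k, and the 30% validation split. With k = 1 the model reproduces every training target exactly, noise included, so its training error is zero (the overfitting end); with k close to the training-set size it averages almost everything into a single value (the underfitting end).

```python
import random

def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points (1-D kNN)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, rows, k):
    """Mean squared error on `rows` of a k-NN model built from `train`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in rows) / len(rows)

def tune_k(data, candidates, val_fraction=0.3, seed=0):
    """Hold out part of the data and keep the k with the lowest validation error."""
    rows = list(data)
    random.Random(seed).shuffle(rows)  # shuffle before splitting
    n_val = int(len(rows) * val_fraction)
    val, train = rows[:n_val], rows[n_val:]
    # For each k, record (training error, validation error)
    results = {k: (mse(train, train, k), mse(train, val, k)) for k in candidates}
    best_k = min(results, key=lambda k: results[k][1])  # select on validation error only
    return best_k, results

# Hypothetical toy data: y = x**2 plus Gaussian noise
rng = random.Random(42)
data = [(i / 10, (i / 10) ** 2 + rng.gauss(0, 0.5)) for i in range(60)]

best_k, results = tune_k(data, candidates=[1, 3, 5, 9, 15, 41])
for k, (train_err, val_err) in results.items():
    print(f"k={k:2d}  train MSE={train_err:.3f}  validation MSE={val_err:.3f}")
print("selected k:", best_k)
```

The selection looks only at the validation column. In practice you would also keep a separate test set out of the whole tuning loop, so that the final reported score is not biased by the search itself.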

Strategies for Hyperparameter Tuning

There are several strategies and techniques that can be used for hyperparameter tuning. One common approach is grid search, where a predefined set of values is specified for each hyperparameter, and the model is trained and evaluated for every combination of those values. This allows for an exhaustive search of the specified grid but can be computationally expensive: the number of combinations is the product of the number of values tried for each hyperparameter, so it grows very quickly as hyperparameters and values are added.
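
Here is a minimal grid search in plain Python. `train_and_score` stands in for “train a model with these hyperparameters and return its validation score”; the `toy_score` function and the hyperparameter names `learning_rate` and `num_hidden_layers` are hypothetical, chosen only so the example runs on its own.

```python
import itertools

def grid_search(train_and_score, grid):
    """Try every combination of the values in `grid`; return the best one and its score."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    # itertools.product yields the Cartesian product of all the value lists
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_score(params)  # higher is better
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    """Hypothetical stand-in for 'train a model, return its validation score'.
    It peaks at learning_rate = 0.01 and num_hidden_layers = 2."""
    return (-((params["learning_rate"] - 0.01) * 100) ** 2
            - (params["num_hidden_layers"] - 2) ** 2)

grid = {"learning_rate": [0.001, 0.01, 0.1], "num_hidden_layers": [1, 2, 3, 4]}
best, score = grid_search(toy_score, grid)
print(best, score)  # 3 x 4 = 12 combinations were evaluated
```

The loop over `itertools.product` is what makes grid search exhaustive, and also what makes it expensive: adding a third hyperparameter with five values would turn these 12 evaluations into 60.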

Another approach is random search, where random combinations of hyperparameter values are sampled from specified ranges or distributions and evaluated. This method is often more efficient than grid search: for the same number of trials, it tries many more distinct values of each individual hyperparameter, which pays off when only a few of the hyperparameters strongly affect performance. Bayesian optimization and genetic algorithms are other popular techniques that can be used for hyperparameter tuning.
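
A matching random-search sketch, under the same kind of assumptions (a hypothetical `toy_score` and made-up hyperparameter names and ranges). It spends a budget of 12 evaluations, the same as a 3 x 4 grid, but every trial draws a fresh learning rate, so 12 distinct learning rates are tried instead of 3. Sampling the learning rate on a log scale is a common choice for hyperparameters that span several orders of magnitude.

```python
import math
import random

def random_search(train_and_score, n_trials, seed=0):
    """Evaluate n_trials randomly sampled configurations; return the best one and its score."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform sample in [1e-4, 1e-1]: each order of magnitude is equally likely
            "learning_rate": 10 ** rng.uniform(-4, -1),
            # Integer in 1..4 (randint includes both endpoints)
            "num_hidden_layers": rng.randint(1, 4),
        }
        score = train_and_score(params)  # higher is better
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    """Hypothetical validation score, peaking at learning_rate = 0.01 and 2 hidden layers."""
    return (-(math.log10(params["learning_rate"]) + 2) ** 2
            - (params["num_hidden_layers"] - 2) ** 2)

best, score = random_search(toy_score, n_trials=12)
print(best, score)
```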

It is worth noting that hyperparameter tuning is not a one-size-fits-all process. The optimal hyperparameter values can vary depending on the specific dataset and the problem being solved. Therefore, it is important to carefully analyze the results and performance metrics of the different parameter settings to make informed decisions about the best hyperparameter values for a given model.

Key Takeaways: The Role of Hyperparameters in Model Tuning

- Hyperparameters are settings that need to be adjusted to optimize the performance of a machine learning model.

- They control aspects like the learning rate, regularization strength, or the number of hidden layers in a neural network.

- Choosing the right hyperparameter values can greatly impact the model’s accuracy and generalization ability.

- Tuning hyperparameters involves experimenting with different values and evaluating the model’s performance to find the best combination.

- Common techniques for hyperparameter tuning include grid search, random search, and Bayesian optimization.

Frequently Asked Questions

When it comes to model tuning, hyperparameters play a crucial role in determining the performance and accuracy of the model. Understanding the role of hyperparameters is essential for optimizing machine learning models. Here are some common questions and answers related to the role of hyperparameters in model tuning.

1. What are hyperparameters in machine learning?

Hyperparameters are parameters that are set before the learning process begins. They are not learned from the data, but instead, they define the configuration of the learning algorithm. Examples of hyperparameters include the learning rate, batch size, the number of hidden layers in a neural network, or the number of trees in a random forest algorithm.

Choosing the right hyperparameters is crucial as they directly impact the performance of the model. By tuning the hyperparameters, you can find the optimal configuration that helps the model achieve the best accuracy or performance on the given problem.

2. How do hyperparameters affect the model?

Hyperparameters affect the learning process and the model’s performance in several ways. For example, the learning rate controls the size of the step the model takes each time it updates its parameters during training. A learning rate that is too high can make each update overshoot the minimum, so the loss oscillates or even diverges instead of settling, while a learning rate that is too low makes training converge very slowly and can leave the model stuck in a poor local minimum or on a plateau.
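
A minimal sketch of this effect, assuming the simplest possible loss, f(w) = w squared, whose minimum is at w = 0. Each gradient-descent update multiplies w by (1 - 2 * learning_rate), so the learning rate alone decides whether training crawls, converges, oscillates, or diverges.

```python
def gradient_descent(learning_rate, steps=20, w0=1.0):
    """Minimize f(w) = w**2, whose gradient is 2*w, and return w after `steps` updates."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w  # w <- w - learning_rate * f'(w)
    return w

for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning rate {lr}: w after 20 steps = {gradient_descent(lr):.4g}")
```

With 0.01, w is still about 0.67 after 20 steps (too slow); 0.1 brings it close to 0; 0.9 flips the sign of w on every step while still shrinking it (oscillation); and any learning rate above 1 makes |w| grow on every step (divergence). Because this toy loss is convex, it has no local minima, so it does not show the getting-stuck case.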

Similarly, hyperparameters like the regularization parameter in a regression model control the trade-off between fitting the training data closely and preventing overfitting. Well-chosen hyperparameters help the model stay accurate on unseen data, while poor choices can lead to overfitting, underfitting, or a model that fails to train at all (for example, when training diverges).
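
To see the trade-off a regularization parameter controls, here is a sketch using one-dimensional ridge regression without an intercept, chosen as an illustrative example of a regularized regression model. The penalty strength `lam` (λ) is the hyperparameter; the data values are made up.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)**2) + lam * w**2 (1-D ridge regression, no intercept).

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # illustrative data, roughly y = 2x
for lam in (0.0, 1.0, 10.0, 100.0):
    print(f"lambda = {lam:6.1f}: slope = {ridge_slope(xs, ys, lam):.3f}")
```

λ = 0 gives the ordinary least-squares slope (about 1.99 for this data). As λ grows, the slope is pulled toward zero, and by λ = 100 it is below 0.5, far from the roughly 2-to-1 trend in the data: underfitting caused by too much regularization.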

3. How do you tune hyperparameters?

Tuning hyperparameters involves finding the best values for these parameters that optimize the model’s performance. There are several strategies for hyperparameter tuning, including manual tuning, grid search, random search, and more advanced techniques like Bayesian optimization or genetic algorithms.

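In manual tuning, the data scientist chooses values for each hyperparameter by hand, guided by experience and earlier results, and evaluates the model’s performance. Grid search involves defining a grid of hyperparameter values and systematically testing each combination. Random search randomly samples hyperparameter values and evaluates the corresponding models. More advanced techniques use the results of earlier trials to decide what to try next: Bayesian optimization builds a probabilistic model of how the validation score depends on the hyperparameters and uses it to choose promising settings, while genetic algorithms evolve a population of configurations through selection, crossover, and mutation.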
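
Genetic algorithms can be sketched in a few dozen lines. The version below is a simplified illustration, not a reference implementation: each generation keeps the better half of a population of configurations, then refills it with children made by uniform crossover and a small random mutation. The scoring function, hyperparameter names, ranges, and mutation settings are all hypothetical. Bayesian optimization is not shown, since it needs a probabilistic surrogate model (commonly a Gaussian process) and is usually done with a dedicated library.

```python
import math
import random

def toy_score(params):
    """Hypothetical validation score, peaking at learning_rate = 0.01 and 2 hidden layers."""
    return (-(math.log10(params["learning_rate"]) + 2) ** 2
            - (params["num_hidden_layers"] - 2) ** 2)

def random_config(rng):
    return {"learning_rate": 10 ** rng.uniform(-4, -1),
            "num_hidden_layers": rng.randint(1, 4)}

def crossover(a, b, rng):
    # Uniform crossover: the child takes each hyperparameter from a randomly chosen parent
    return {name: (a if rng.random() < 0.5 else b)[name] for name in a}

def mutate(params, rng):
    child = dict(params)
    # Perturb the learning rate in log space, clipped to [1e-4, 1e-1]
    log_lr = math.log10(child["learning_rate"]) + rng.gauss(0, 0.3)
    child["learning_rate"] = 10 ** min(-1.0, max(-4.0, log_lr))
    # With probability 0.3, add or remove one hidden layer, clipped to [1, 4]
    if rng.random() < 0.3:
        layers = child["num_hidden_layers"] + rng.choice([-1, 1])
        child["num_hidden_layers"] = min(4, max(1, layers))
    return child

def genetic_search(score, generations=15, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Selection: the better half survives unchanged and becomes the parents
        population.sort(key=score, reverse=True)
        parents = population[: population_size // 2]
        # Refill the population with mutated offspring of random parent pairs
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(population_size - len(parents))]
        population = parents + children
    return max(population, key=score)

best = genetic_search(toy_score)
print(best, toy_score(best))
```

Because the best configurations always survive into the next generation, the best score in the population can never get worse from one generation to the next.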

4. What are the challenges in hyperparameter tuning?

Hyperparameter tuning can be a challenging task due to several factors. Firstly, the search space for hyperparameters can be large, especially when dealing with complex models with many hyperparameters. Finding the optimal combination of hyperparameters can be time-consuming and computationally expensive.
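
As a rough illustration of how fast the search space grows, suppose (hypothetically) there are $d$ hyperparameters and hyperparameter $i$ has $\lvert V_i \rvert$ candidate values. A full grid search then needs one training run per combination:

$$
N_{\text{runs}} = \prod_{i=1}^{d} \lvert V_i \rvert, \qquad d = 5,\ \lvert V_i \rvert = 4 \;\Rightarrow\; N_{\text{runs}} = 4^5 = 1024
$$

Adding a sixth hyperparameter with four values would raise this to 4,096 runs.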

Secondly, tuning hyperparameters requires a good understanding of the model and its behavior. Identifying which hyperparameters have the most impact and the appropriate range of values to search within requires expertise and experimentation. Finally, hyperparameter tuning is problem-specific, meaning that the optimal configuration for one problem may not be the best for another.

5. Can hyperparameter tuning improve model performance?

Usually, yes! Hyperparameter tuning is an important step in optimizing model performance. By finding a better combination of hyperparameters, you can often significantly improve the accuracy, generalization, and efficiency of your machine learning models.

However, it’s important to note that hyperparameter tuning is not a one-size-fits-all solution. The impact of hyperparameter tuning varies depending on the problem, dataset, and algorithm used. Experimentation and iterative refinement are often required to find the best hyperparameter configuration for each specific scenario.

Summary

So, to wrap things up, hyperparameters are like settings that we choose for our models. They can make a big difference in how well our models perform. But finding the right hyperparameters is like solving a puzzle because it’s not always easy to know which settings will work best. That’s where model tuning comes in. It’s like fine-tuning a musical instrument to get the best sound. By trying out different hyperparameter values and seeing how our model performs, we can find the settings that give us the most accurate results. So, next time you hear about hyperparameters and model tuning, remember that it’s all about finding the best settings for our models to do their magic!