Is there a recipe for deploying Machine Learning models? Well, let’s dive in and find out!

When it comes to Machine Learning, you’ve probably heard about training models and making predictions. But what happens next? How do we take those amazing models and put them to use? That’s where deploying comes in.

In this article, we’ll explore the exciting world of deploying Machine Learning models and discover if there’s a secret recipe to make it happen seamlessly. So, get ready to unleash the power of Machine Learning and join me on this journey!

Contents

- Is there a recipe for deploying Machine Learning models?

- The Importance of Proper Model Deployment

- Common Challenges in Model Deployment

- Key Takeaways: Is there a recipe for deploying Machine Learning models?

- Frequently Asked Questions

- Why is deploying Machine Learning models important?

- What are the steps involved in deploying a Machine Learning model?

- Which tools or frameworks can I use to deploy Machine Learning models?

- What are some best practices for deploying Machine Learning models?

- What are some challenges in deploying Machine Learning models?

- Summary

Is there a recipe for deploying Machine Learning models?

Deploying Machine Learning models is a complex process that requires careful planning, testing, and optimization. While there isn’t a one-size-fits-all recipe for deploying these models, there are some essential steps and considerations that can help guide the process. In this article, we will explore the various aspects of deploying Machine Learning models and provide insights into best practices to ensure successful implementation.

1. Understanding the Problem and Data

Before diving into deploying a Machine Learning model, it is crucial to have a thorough understanding of the problem you are trying to solve and the data you have at your disposal. Start by defining the problem statement and identifying the specific goals you want to achieve. Then, carefully examine the available data, ensuring its quality and relevance. Preprocessing and cleaning the data may be necessary to remove any inconsistencies or outliers that might adversely affect your model’s performance. Additionally, consider whether you have enough labeled data for training and evaluation.

Once you have a clear understanding of the problem and the data, identify the Machine Learning algorithms that are most suitable for the task at hand. Research and experiment with different algorithms to determine which ones yield the best results. Consider factors such as accuracy, speed, interpretability, and robustness when making your decision.

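Furthermore, it is important to choose an evaluation metric and establish a baseline score, such as the score of a simple model that always predicts the most common class. This baseline serves as a benchmark: any candidate model you consider deploying should clearly beat it, and it gives you a reference point for comparing different models or iterations.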
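A baseline can be as simple as a model that always predicts the most frequent class. The dependency-free Python sketch below computes that baseline's accuracy. The spam labels are made-up example data, and in practice a library utility such as scikit-learn's `DummyClassifier` serves the same purpose.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, eval_labels):
    """Accuracy of a trivial model that always predicts the most common training label."""
    majority_label, _ = Counter(train_labels).most_common(1)[0]
    correct = sum(1 for y in eval_labels if y == majority_label)
    return majority_label, correct / len(eval_labels)


# Hypothetical spam labels: 1 = spam, 0 = not spam
train_labels = [0, 0, 0, 1, 0, 1, 0, 0]
eval_labels = [0, 1, 0, 0, 1]

label, acc = majority_baseline_accuracy(train_labels, eval_labels)
print(label, acc)  # 0 0.6
```

If a candidate model cannot beat 60% accuracy here, it has learned nothing useful beyond the class balance.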

2. Model Training and Validation

The next phase in deploying Machine Learning models is training and validation. This involves splitting your labeled data into separate sets: a training set, a validation set, and ideally a held-out test set. The training set is used to fit your model on historical data, the validation set is used to evaluate it and tune its settings during development, and the test set is kept aside for a final, unbiased check before deployment.
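Here is a minimal sketch of a reproducible three-way split using only Python's standard library. The 70/15/15 proportions and the function name are illustrative assumptions, not a rule:

```python
import random


def train_val_test_split(records, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle records reproducibly, then cut them into train, validation and test lists."""
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(records)               # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling assumes the records are independent. For time-ordered data, such as sales or sensor readings, splitting by date usually gives a more honest estimate. In that setup, the validation and test sets cover periods after the training data.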

During the training process, the model learns from the labeled data and adjusts its internal parameters to optimize its predictions. It is important to monitor the model’s performance on the validation set to detect any signs of overfitting or underfitting. Overfitting occurs when a model performs well on the training set but fails to generalize to new, unseen data. Underfitting, on the other hand, is when a model fails to capture the patterns and complexities present in the data, resulting in poor performance on both the training and validation sets.

To detect and mitigate overfitting, techniques such as regularization, cross-validation, and early stopping can be employed. Regularization adds a penalty term to the model’s objective function that grows with the size of its parameters. This discourages overly complex models that fit noise in the training data. Cross-validation divides the training data into several subsets (folds). The model is trained on all but one fold and evaluated on the remaining fold, and this is repeated for each fold. The averaged score is a more reliable estimate of generalization and helps choose settings such as the regularization strength. Early stopping halts training when the model’s performance on the validation set stops improving.
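Early stopping is easy to express in code. The sketch below uses a hypothetical helper that, for clarity, works on a precomputed list of validation losses. It tracks the best epoch and stops after `patience` epochs without improvement:

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has failed to improve by more than `min_delta`
    for `patience` consecutive epochs; the weights from `best_epoch` are kept.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Patience never ran out: training used every epoch
    return best_epoch, len(val_losses) - 1


losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(losses, patience=3))  # (3, 6)
```

In a real training loop, the loss would be computed epoch by epoch and the weights from the best epoch restored. Frameworks expose this directly, for example through Keras's `EarlyStopping` callback with its `patience` and `restore_best_weights` arguments.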

3. Model Evaluation and Selection

Once you have trained and validated multiple models, it’s time to evaluate their performance and select the best one to deploy. Evaluation involves measuring the models’ performance against predefined evaluation metrics, such as accuracy, precision, recall, or F1 score.

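It is important to select metrics that align with your problem statement and goals. For instance, if you are building a model to detect spam emails, precision might be more important than recall. Precision is the proportion of emails flagged as spam that really are spam, while recall is the proportion of all actual spam emails that the model catches. Precision often matters more here because sending a legitimate email to the spam folder is usually more costly than letting an occasional spam message through.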
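To make the two definitions concrete, the following sketch computes precision, recall, and F1 for the spam example from raw labels. The eight labels are invented for illustration. scikit-learn's `precision_score`, `recall_score`, and `f1_score` compute the same quantities.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for the `positive` class (e.g. 1 = spam)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of emails flagged as spam, share truly spam
    recall = tp / (tp + fn) if tp + fn else 0.0     # of all real spam, share that was caught
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labels for 8 emails: 1 = spam, 0 = legitimate
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]

p, r, f = precision_recall_f1(y_true, y_pred)
print(round(p, 3), round(r, 3), round(f, 3))  # 0.667 0.5 0.571
```

Here one legitimate email was wrongly flagged (hurting precision), and two spam emails slipped through (hurting recall).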

Based on the evaluation results, choose the model that performs the best and meets your requirements. Consider factors such as accuracy, interpretability, scalability, and computational requirements when making your decision. It is also important to validate the selected model on unseen data or an independent test set to ensure its performance holds up in real-world scenarios.

4. Model Deployment and Monitoring

Once you have selected a model to deploy, you need to integrate it into your production environment. This typically involves serializing the trained model to a file and exposing it through an interface that production systems can call, such as a REST API or a web service.
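The sketch below shows one way to serialize a model and expose it through an API, under stated assumptions. It pickles a hypothetical `LinearModel` and serves it over HTTP with Python's standard-library `http.server`. The class, the `/predict` route, and the JSON payload shape are all illustrative. Production services typically use a web framework or a dedicated model server. Trained scikit-learn estimators are commonly persisted with `pickle` or `joblib` in the same way.

```python
import json
import pickle
from http.server import BaseHTTPRequestHandler, HTTPServer


class LinearModel:
    """Hypothetical stand-in for a trained model: a weighted sum plus a threshold."""

    def __init__(self, weights, bias, threshold=0.5):
        self.weights, self.bias, self.threshold = weights, bias, threshold

    def predict(self, features):
        score = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return int(score >= self.threshold)


# Training side: serialize the fitted model to bytes (normally written to a file).
# Only unpickle files you trust: loading a pickle can execute arbitrary code.
model_bytes = pickle.dumps(LinearModel(weights=[0.4, 0.3], bias=0.0))

# Serving side: load the model once at startup, not on every request.
MODEL = pickle.loads(model_bytes)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            prediction = MODEL.predict(payload["features"])
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing "features" key, or non-numeric features
            self.send_error(400, 'expected JSON like {"features": [1.0, 2.0]}')
            return
        body = json.dumps({"prediction": prediction}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=8000):
    """Create (but do not start) an HTTP server bound to localhost."""
    return HTTPServer(("127.0.0.1", port), PredictHandler)
```

Calling `make_server(8000).serve_forever()` starts the service. A POST to `http://127.0.0.1:8000/predict` with the body `{"features": [1.0, 0.5]}` should then return `{"prediction": 1}`, since 0.4 × 1.0 + 0.3 × 0.5 = 0.55 is above the 0.5 threshold.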

During the deployment phase, it is crucial to establish robust monitoring and error handling mechanisms to ensure the deployed model’s performance is continuously assessed and any issues or errors are promptly addressed. Monitor the model’s performance on real-time data and track metrics such as accuracy (once ground-truth labels become available), latency, and resource utilization. This allows for timely identification of any degradation in performance or anomalies that may require intervention.
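A lightweight way to start collecting latency and error metrics is to wrap the prediction function. The `PredictionMonitor` class below is a hypothetical, in-process sketch. In production, these numbers would normally be exported to a monitoring system rather than kept in a Python list.

```python
import statistics
import time
from functools import wraps


class PredictionMonitor:
    """Collects per-request latency and error counts for a prediction function."""

    def __init__(self):
        self.latencies_ms = []
        self.errors = 0

    def track(self, predict_fn):
        @wraps(predict_fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return predict_fn(*args, **kwargs)
            except Exception:
                self.errors += 1
                raise  # still surface the error to the caller
            finally:
                # Runs for successful and failed calls alike
                self.latencies_ms.append((time.perf_counter() - start) * 1000)
        return wrapper

    def summary(self):
        n = len(self.latencies_ms)
        if n == 0:
            return {"requests": 0, "errors": 0}
        if n >= 2:
            # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile
            p95 = statistics.quantiles(self.latencies_ms, n=100, method="inclusive")[94]
        else:
            p95 = self.latencies_ms[0]
        return {
            "requests": n,
            "errors": self.errors,
            "error_rate": self.errors / n,
            "p50_ms": statistics.median(self.latencies_ms),
            "p95_ms": p95,
        }


monitor = PredictionMonitor()


@monitor.track
def predict(features):
    # Hypothetical model that rejects empty inputs
    if not features:
        raise ValueError("empty feature vector")
    return sum(features) > 1.0


predict([0.4, 0.9])
try:
    predict([])
except ValueError:
    pass
print(monitor.summary()["requests"], monitor.summary()["errors"])  # 2 1
```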

Deployed Machine Learning models should also be periodically reevaluated and updated to account for changes in the underlying data distribution or business requirements. Regularly test the deployed model with new data and retrain or fine-tune it as needed to maintain optimal performance.
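One common way to detect a change in the underlying data distribution is the Population Stability Index (PSI). PSI compares how a feature's values are spread across bins in the training data versus live traffic. The minimal sketch below assumes equal-width bins taken from the training data's range, and the sample values are invented:

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a live sample of one feature.

    Bin edges come from the reference sample's range; `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]

    exp_shares, act_shares = bin_shares(expected), bin_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_shares, act_shares))


reference = [i / 100 for i in range(100)]           # hypothetical training-time values
live_same = list(reference)                         # live traffic, same distribution
live_shifted = [0.5 + i / 200 for i in range(100)]  # live traffic bunched in the upper range
print(population_stability_index(reference, live_same))           # 0.0
print(population_stability_index(reference, live_shifted) > 0.25)  # True
```

A frequently quoted rule of thumb reads PSI values as follows: below 0.1 is little change, 0.1 to 0.25 is moderate, and above 0.25 is a significant shift worth investigating. These cut-offs are conventions rather than standards, so calibrate them against your own data.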

5. Ethical Considerations and Transparency

When deploying Machine Learning models, it is imperative to consider ethical implications and ensure transparency. This involves addressing issues such as bias, fairness, privacy, and interpretability.

Bias may be present in the data used to train Machine Learning models, and this bias can result in unfair or discriminatory outcomes. It is crucial to assess and mitigate bias at both the data and model levels. This can be done by carefully selecting and preprocessing data, using fairness-aware algorithms, and conducting audits and fairness tests.
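A basic fairness test is to compare how often the model makes a positive decision for each group. The sketch below computes per-group selection rates and their largest difference, often called the demographic parity difference, on invented loan-approval data. This is only one of several fairness criteria. Libraries such as Fairlearn provide tested implementations of this and related metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. 'approve') within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval predictions for applicants in groups "A" and "B"
preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A large gap does not prove discrimination by itself, but it flags where an audit should look more closely.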

Transparency is also essential, especially when deploying models in sensitive domains. Users should have a clear understanding of how the model works, what features it relies on, and how decisions are made. Providing explanations and interpretability can help build trust and acceptance of the deployed models.
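For a linear model, an explanation can be exact: each feature's contribution is its weight times its value. The weights and feature names below are hypothetical. For non-linear models, tools such as SHAP or LIME estimate comparable per-feature contributions.

```python
def explain_linear_prediction(weights, bias, features):
    """Break a linear model's score into per-feature contributions (weight * value)."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Sort so the most influential features (by absolute contribution) come first
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit model with hand-picked weights
weights = {"income": 0.5, "debt_ratio": -1.5, "years_employed": 0.5}
score, ranked = explain_linear_prediction(
    weights, bias=0.25, features={"income": 1.25, "debt_ratio": 0.5, "years_employed": 2.0}
)
print(score)   # 1.125
print(ranked)  # [('years_employed', 1.0), ('debt_ratio', -0.75), ('income', 0.625)]
```

An end user could be told, for example, that employment history raised the score most while the debt ratio lowered it.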

6. Scalability and Infrastructure

As the demand for Machine Learning models grows, scalability and infrastructure become key considerations. Deployed models should be able to handle high volumes of data and concurrent requests efficiently.

Considerations for scalability include choosing appropriate hardware and infrastructure, optimizing the model’s architecture and algorithms for speed and efficiency, and implementing parallel processing techniques when necessary. Utilize cloud-based platforms and services that provide scalable and reliable resources to handle the computational demands of your deployed models.

Furthermore, implement monitoring and auto-scaling mechanisms to automatically adjust resources based on demand. This ensures a smooth and responsive experience for end users, even during peak usage periods.
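Auto-scalers typically add or remove replicas in proportion to how far a load metric is from its target. The sketch below is modeled on the formula documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`. The replica limits and the 10% tolerance band are illustrative defaults. Kubernetes and managed cloud platforms apply this logic for you, so the code only illustrates the idea.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional scaling rule, modeled on the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid needless churn
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to allowed range


# 4 replicas averaging 90% CPU against a 60% target: scale out
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 4 replicas averaging 20% CPU against a 60% target: scale in
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```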

7. Continuous Learning and Iteration

Deploying Machine Learning models is not a one-time process; it requires continuous learning and iteration. As new data becomes available or business requirements evolve, the deployed models need to be updated and improved.

Regularly monitor the performance of deployed models and collect feedback from end users to identify areas for improvement. This feedback can be used to gather new labeled data, fine-tune the models, add new features, or explore more advanced algorithms.
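Feedback can also drive the decision of when to retrain. The hypothetical `RetrainTrigger` below keeps a rolling window recording whether predictions matched the labels users later confirmed. It flags retraining when accuracy falls a set margin below the accuracy measured at deployment time. The window size, margin, and minimum sample count are assumptions to tune for your use case.

```python
from collections import deque


class RetrainTrigger:
    """Tracks accuracy on labeled user feedback and flags when retraining looks warranted."""

    def __init__(self, baseline_accuracy, window=500, max_drop=0.05, min_samples=100):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.min_samples = min_samples
        self.outcomes = deque(maxlen=window)  # rolling window of 1 (correct) / 0 (wrong)

    def record(self, prediction, true_label):
        self.outcomes.append(int(prediction == true_label))

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_retrain(self):
        if len(self.outcomes) < self.min_samples:
            return False  # not enough feedback to judge yet
        return self.rolling_accuracy() < self.baseline - self.max_drop


trigger = RetrainTrigger(baseline_accuracy=0.90, window=200, min_samples=50)
for i in range(100):
    # Hypothetical feedback stream: the model is right 80 times out of 100
    trigger.record(prediction=1, true_label=1 if i % 5 else 0)
print(trigger.rolling_accuracy(), trigger.should_retrain())  # 0.8 True
```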

Deployed models should be seen as living systems that are continuously refined and enhanced to deliver the best possible performance and outcomes.

The Importance of Proper Model Deployment

Proper deployment of Machine Learning models plays a critical role in realizing their potential and ensuring their effectiveness in real-world scenarios. Following the steps outlined above and considering the unique requirements and challenges of your specific use case will greatly increase the chances of successful deployment. Remember to continuously learn and improve, staying agile in response to changing circumstances and new data. With proper planning, monitoring, and optimization, your Machine Learning models can make a significant impact and drive meaningful outcomes.

Common Challenges in Model Deployment

Deploying Machine Learning models involves navigating a range of challenges and considerations. In addition to the steps outlined above, it is important to be aware of and address common challenges that can arise during the deployment process. Here are some key challenges to keep in mind:

1. Data Quality and Availability

One of the biggest challenges in deploying Machine Learning models is ensuring the quality and availability of data. Garbage in, garbage out applies here; if your data is noisy, incomplete, or biased, it will negatively impact your model’s performance. Assess the quality of your data and preprocess it as necessary to improve its reliability and relevance. Additionally, consider whether you have enough labeled data for training and evaluation.
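Basic data-quality checks can be automated so that bad records are caught before training or scoring. The sketch below validates records against a hypothetical schema of types and value ranges. Dedicated libraries such as Great Expectations or pandera offer far richer checks.

```python
def validate_record(record, schema):
    """Return a list of human-readable problems found in one input record.

    `schema` maps field name -> (type, min_value, max_value); None skips a bound.
    """
    problems = []
    for field, (expected_type, lo, hi) in schema.items():
        value = record.get(field)
        if value is None:
            problems.append(f"{field}: missing")
        elif not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}, got {type(value).__name__}")
        elif (lo is not None and value < lo) or (hi is not None and value > hi):
            problems.append(f"{field}: {value} outside [{lo}, {hi}]")
    return problems


# Hypothetical schema for a customer-churn dataset
schema = {"age": (int, 18, 120), "monthly_spend": (float, 0.0, None), "plan": (str, None, None)}
print(validate_record({"age": 230, "monthly_spend": 42.5}, schema))
# ['age: 230 outside [18, 120]', 'plan: missing']
```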

If data availability is an issue, consider data augmentation techniques or explore the possibility of acquiring additional data through external sources or collaborations. However, be mindful of potential ethical and legal implications when using third-party data.

Key Takeaways: Is there a recipe for deploying Machine Learning models?

- Deploying Machine Learning models involves a systematic process.

- Understanding the problem and data is crucial before deployment.

- Data preprocessing and feature engineering are important steps.

- Model selection and training are key aspects of deployment.

- Testing and evaluating the model’s performance is necessary.

Frequently Asked Questions

Looking to deploy your Machine Learning models? Find answers to common questions below!

Why is deploying Machine Learning models important?

Deploying Machine Learning models is essential because it allows you to put your trained models into action and make predictions on new data. By deploying your models, you can leverage their predictive power to solve real-world problems and make informed decisions. Whether it’s predicting customer behavior, analyzing data patterns, or recommending personalized content, deploying Machine Learning models helps you unlock the value of your data.

Moreover, deploying Machine Learning models enables you to automate processes and integrate predictive capabilities into your applications or systems. This empowers you to streamline workflows, improve efficiency, and gain a competitive advantage in today’s data-driven world.

What are the steps involved in deploying a Machine Learning model?

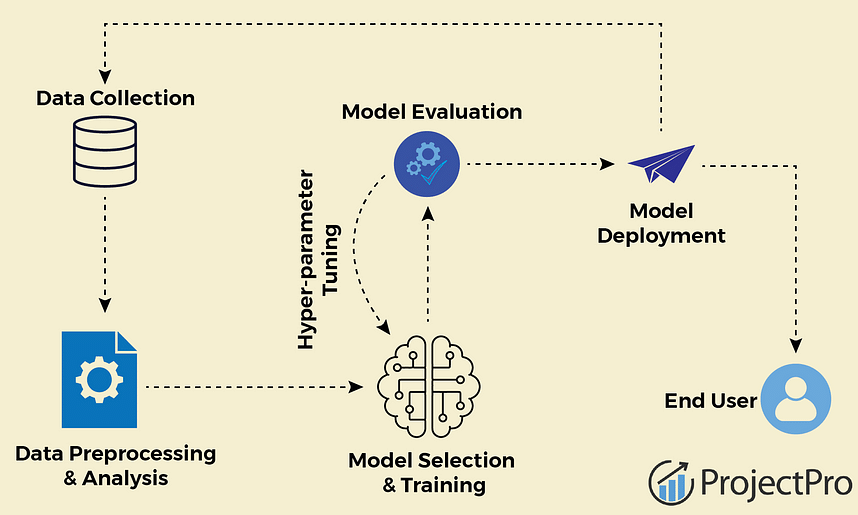

Deploying a Machine Learning model typically involves several key steps. First, you need to prepare your data and preprocess it to ensure it’s in a suitable format for your model to consume. This may involve cleaning the data, transforming features, or performing feature engineering.

Next, you’ll train your model using a suitable algorithm or framework. This involves feeding your prepared data into the model and optimizing its parameters to achieve the best possible performance. Once the model is trained, you’ll evaluate its performance and fine-tune it if necessary.

Finally, the deployment phase involves packaging your trained model, integrating it into your application or system, and making it available for predictions. This may require converting your model into a deployable format, setting up an infrastructure to host and serve the model, and implementing a mechanism to trigger predictions based on incoming data.
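The preprocessing step described above hides a common deployment pitfall. Preprocessing must be fitted on the training data and then applied identically at prediction time. Otherwise, the model sees differently scaled inputs in production, a problem often called training-serving skew. The following is a minimal standard-library sketch of that pattern for a single hypothetical `income` feature. In scikit-learn, a `Pipeline` containing a `StandardScaler` bundles the fitted preprocessing with the model so they are saved and loaded together.

```python
import json
import statistics


def fit_standardizer(column):
    """Learn mean and standard deviation from the *training* data only."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column) or 1.0  # avoid dividing by zero for constant columns
    return {"mean": mean, "std": std}


def standardize(value, params):
    return (value - params["mean"]) / params["std"]


# Training time: fit on training data and save the parameters next to the model.
train_income = [30_000.0, 45_000.0, 60_000.0, 75_000.0]
params = fit_standardizer(train_income)
saved = json.dumps(params)

# Serving time: reload the *same* parameters instead of refitting on live data.
serving_params = json.loads(saved)
print(standardize(52_500.0, serving_params))  # 0.0
```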

Which tools or frameworks can I use to deploy Machine Learning models?

There are several popular tools and frameworks available for deploying Machine Learning models. One commonly used framework is TensorFlow Serving, which provides a flexible and scalable solution for serving models built using TensorFlow. scikit-learn, by contrast, is a training library and does not serve models itself. However, trained scikit-learn estimators can be saved with Python’s pickle module or joblib, or converted to a portable format such as ONNX, and then loaded by your own application or web service.

If you prefer a cloud-based solution, platforms like Google Cloud Vertex AI (the successor to AI Platform), Amazon SageMaker, or Microsoft Azure Machine Learning provide comprehensive tools and infrastructure to deploy and manage Machine Learning models. These platforms offer features such as model monitoring, scaling, and automated deployment pipelines, making it easier to deploy and maintain your models on a larger scale.

What are some best practices for deploying Machine Learning models?

When deploying Machine Learning models, it’s important to consider a few best practices. One key aspect is versioning your models, as this helps track changes and ensures reproducibility. By versioning your models, you can easily roll back to a previous version if needed and maintain a clear history of model improvements.
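Versioning does not have to start with heavy tooling. The `ModelRegistry` class below is a hypothetical in-memory sketch. It stores each serialized model under a version number with a SHA-256 fingerprint and supports rolling back to the previously promoted version. Tools such as the MLflow Model Registry provide a persistent, shared version of the same idea.

```python
import hashlib


class ModelRegistry:
    """Minimal in-memory registry: stores model artifacts by version and tracks which one is live."""

    def __init__(self):
        self.versions = {}  # version number -> {"sha256": ..., "artifact": ..., "notes": ...}
        self.history = []   # versions in the order they were promoted to production

    def register(self, artifact: bytes, notes=""):
        version = len(self.versions) + 1
        digest = hashlib.sha256(artifact).hexdigest()  # fingerprint to detect tampering or mix-ups
        self.versions[version] = {"sha256": digest, "artifact": artifact, "notes": notes}
        return version

    def promote(self, version):
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.history.append(version)

    @property
    def live_version(self):
        return self.history[-1] if self.history else None

    def rollback(self):
        """Return to the previously promoted version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.live_version


registry = ModelRegistry()
v1 = registry.register(b"serialized-model-v1", notes="baseline model")
v2 = registry.register(b"serialized-model-v2", notes="retrained with new features")
registry.promote(v1)
registry.promote(v2)
print(registry.live_version)  # 2
print(registry.rollback())    # 1
```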

Additionally, it’s crucial to monitor your deployed models to ensure their performance remains optimal. This involves setting up monitoring mechanisms to track prediction quality, data drift, and potential issues. Regularly evaluating and retraining your models using fresh data is also recommended to maintain accuracy and adapt to changes in the environment.

What are some challenges in deploying Machine Learning models?

Deploying Machine Learning models can present challenges, and it’s important to be aware of them. One common challenge is the need to handle scalability. As your user base or data volume increases, you need to ensure your deployed models can handle the load efficiently. This may involve optimizing the model’s architecture, leveraging distributed computing, or utilizing cloud-based resources for scalability.

Another challenge is maintaining model performance over time. As data changes, models may become less accurate or lose their predictive power. It’s crucial to establish processes for continuous monitoring, retraining, and updating models to prevent performance degradation and ensure they remain effective in the long run. Additionally, handling data privacy and security concerns is essential when deploying models that deal with sensitive or personal information.

Summary

So, to sum it all up, deploying Machine Learning models is important because it allows us to use the models we create in real-world applications. It involves five main steps: collecting and preprocessing data, training and evaluating the model, converting it into a deployable format, choosing a deployment method, and monitoring the deployed model. Each step has its challenges and considerations, but by following best practices and using appropriate tools, we can successfully deploy Machine Learning models. Remember, it’s all about making our models useful and accessible to solve practical problems!

In conclusion, deploying Machine Learning models is like baking a delicious cake – you need the right ingredients, the right tools, and a systematic process. It may take some practice and experimentation, but with time, you’ll become a master at deploying models and bringing Machine Learning to life in the real world. So, go ahead and start baking those models!