In the exciting world of Deep Learning, have you ever wondered what transfer learning is? Well, let me break it down for you! Transfer learning, in simple terms, is like borrowing knowledge from one task to help us with another task. It’s like using your math skills to solve a science problem or your soccer skills to play basketball.

Here’s the deal: deep learning models learn patterns from data and use them to make predictions. But instead of training a model from scratch for a specific task, we can often reuse the knowledge it gained from a different but related task. It’s like getting a head start!

So, how does transfer learning work? Picture this: Imagine a pre-trained model as a chef who spent years mastering the art of cooking. When faced with a new dish, they don’t start from scratch; they use their culinary expertise and adapt their knowledge to create a delicious new recipe. Similarly, transfer learning allows us to take a pre-trained model, modify it slightly, and use it to solve a different problem. Pretty cool, huh?

Contents

- Understanding Transfer Learning in Deep Learning

- The Process of Transfer Learning

- Fine-tuning and Training

- Conclusion

- Key Takeaways: What is transfer learning in Deep Learning?

- Frequently Asked Questions

- How does transfer learning work in deep learning?

- What are the advantages of using transfer learning in deep learning?

- When is transfer learning useful in deep learning?

- Are there any limitations or challenges in using transfer learning in deep learning?

- How can one implement transfer learning in deep learning?

- Summary

Understanding Transfer Learning in Deep Learning

Welcome to our in-depth article on transfer learning in deep learning. In this guide, we will explore the concept of transfer learning, its applications, and its benefits in the field of deep learning. Transfer learning is a powerful technique that allows us to leverage knowledge gained from one task and apply it to another related task, thereby accelerating the learning process and improving the performance of our models.

What is Transfer Learning?

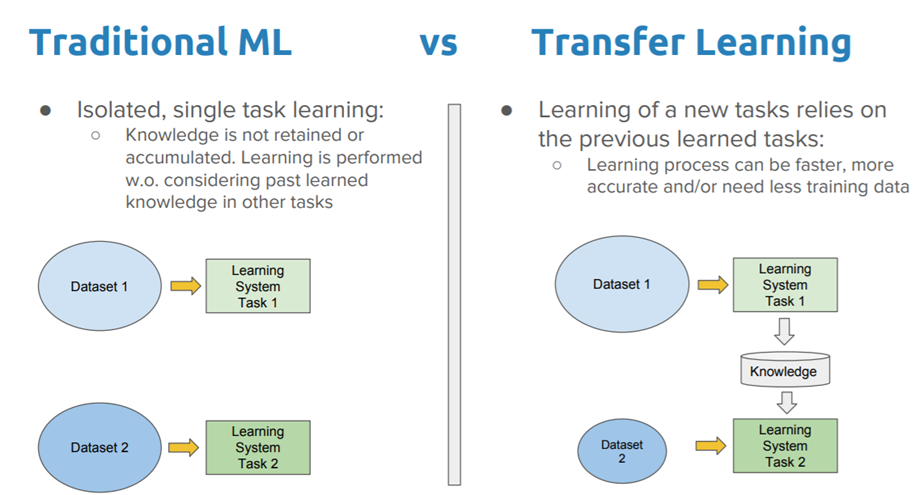

Transfer learning, as the name suggests, involves transferring knowledge learned from one domain or task to another domain or task. In the context of deep learning, transfer learning refers to the practice of taking neural networks that were pre-trained on large datasets and using their learned feature representations as a starting point for training on a new, usually smaller, dataset.

Deep neural networks are incredibly powerful models that can learn complex patterns and representations from vast amounts of data. However, training deep networks from scratch typically requires a large labeled dataset and significant computational resources. This is where transfer learning comes in. By utilizing the knowledge acquired by pre-trained networks, we save both time and computational resources, while leveraging the rich representations learned during pre-training.

Transfer learning allows us to take advantage of the features extracted by models trained on massive datasets like ImageNet, which contains millions of labeled images. Instead of training from scratch, we can fine-tune these pre-trained models on our specific task or dataset, saving us time and resources while still achieving impressive performance.
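A simple form of this reuse is feature extraction: inputs are passed through the frozen pre-trained layers once, and a small classifier is trained on the resulting features. The PyTorch snippet below is a minimal sketch of that idea, not a definitive recipe. The `pretrained_backbone` is a hypothetical stand-in for a real pre-trained network, and the image size and class count are arbitrary placeholders chosen so the example runs without downloading weights.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pre-trained feature extractor.
# In practice this would be a real network with pre-trained weights.
pretrained_backbone = nn.Sequential(
    nn.Flatten(),
    nn.Linear(3 * 8 * 8, 128),
    nn.ReLU(),
)
pretrained_backbone.eval()  # inference mode: no dropout or batch-norm updates

# Placeholder batch of 16 tiny RGB "images" (8x8 pixels).
images = torch.randn(16, 3, 8, 8)

# no_grad() skips gradient tracking, so the backbone is used as-is
# and its outputs can be cached and reused across epochs.
with torch.no_grad():
    features = pretrained_backbone(images)

# Only this small classifier would be trained on the new task (4 classes here).
classifier = nn.Linear(features.shape[1], 4)
logits = classifier(features)
```

Because the features are computed without gradients, they can be stored once and reused, which keeps training on the new task cheap.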

Applications of Transfer Learning

Transfer learning has found widespread applications in various domains, including computer vision, natural language processing, and speech recognition. Let’s take a closer look at some of the specific applications:

- Image Classification: One of the most common applications of transfer learning is image classification. Pre-trained convolutional neural networks, such as VGG16, ResNet, and Inception, have been extensively trained on large-scale image classification tasks. By utilizing these pre-trained models, we can quickly adapt them to new image classification tasks with smaller datasets.

- Object Detection: Object detection is another popular area where transfer learning has been highly effective. Detectors such as Faster R-CNN and YOLO are commonly released with weights pre-trained on large datasets annotated with bounding boxes, such as COCO. By starting from these weights, we can reach strong detection performance on a new set of object classes while reducing training time.

- Text Classification: In natural language processing, transfer learning has been successfully applied to text classification tasks. Pre-trained models, such as BERT and GPT, trained on huge text corpora, can be fine-tuned for specific text classification tasks like sentiment analysis, spam detection, and topic classification.

- Speech Recognition: Transfer learning has also shown promise in speech recognition tasks. Models pre-trained on large speech datasets, such as those used for automatic speech recognition (ASR) or wake word detection, can be fine-tuned to improve performance on a specific speech recognition task.

These are just a few examples of the many applications of transfer learning in deep learning. Its versatility and effectiveness make it a valuable technique in various domains.

The Benefits of Transfer Learning

Transfer learning offers several key benefits that make it a favored approach in deep learning:

- Improved Performance: By starting with pre-trained models, which have already learned rich feature representations, we can achieve better performance on our specific task, even with limited labeled data.

- Reduced Training Time: Training a deep neural network from scratch can be time-consuming, especially when dealing with complex models and large datasets. By utilizing pre-trained models and fine-tuning them, we significantly reduce the time required for training.

- Computational Resource Savings: Training deep neural networks from scratch often requires substantial computational resources. With transfer learning, we reuse the computation already spent on pre-training and pay only for the much cheaper adaptation step, saving both time and money.

- Overcoming Data Limitations: In many real-world scenarios, acquiring a substantial amount of labeled data may be challenging or expensive. Transfer learning allows us to overcome this limitation by adapting models trained on large datasets to our specific task, even with limited labeled data available.

- Generalization: Pre-trained models that have been trained on diverse and extensive datasets tend to have better generalization capabilities. Transfer learning helps to transfer this general knowledge and improve the performance of models on new, unseen data.

These benefits make transfer learning a valuable technique in deep learning, enabling us to achieve impressive results while overcoming challenges associated with limited data and computational resources.

The Process of Transfer Learning

Now that we have a good understanding of what transfer learning is and its applications, let’s dive into the process of implementing transfer learning in practice. Successfully applying transfer learning involves the following steps:

Step 1: Selecting a Pre-trained Model

The first step is to choose an appropriate pre-trained model that has been trained on a large dataset related to your specific task. The choice of model will depend on the nature of your problem, the available resources, and the complexity of the task at hand. Popular pre-trained models for computer vision tasks include VGG16, ResNet, and Inception, while BERT and GPT are commonly used pre-trained models for natural language processing tasks.

Step 2: Removing the Output Layer

Once you have selected a pre-trained model, the next step is to remove the output layer. The output layer of the pre-trained model is responsible for predicting the labels/categories of the original dataset it was trained on. Since our task is different, we need to remove this layer and replace it with a new output layer that matches the number of classes/categories in our specific task.

Step 3: Freezing the Layers

After replacing the output layer, we typically freeze the remaining layers of the pre-trained model. Freezing a layer means that its weights are not updated during training, so only the new output layer learns at first. This ensures that the representations learned during pre-training are retained, and the frozen layers act as a fixed feature extractor.
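The three steps above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions: `adapt_for_new_task` and `TinyNet` are hypothetical names, and `TinyNet` is only a stand-in for a real pre-trained model so the snippet runs without downloading weights. With torchvision, Step 1 would typically be something like `resnet18(weights=ResNet18_Weights.DEFAULT)`, whose output layer is stored in the attribute `fc`.

```python
import torch
from torch import nn


def adapt_for_new_task(model: nn.Module, head_name: str, num_classes: int) -> nn.Module:
    """Freeze a pre-trained model and swap its output layer for a new one.

    Assumes the output layer is an nn.Linear stored under `head_name`
    (an assumption of this sketch; torchvision ResNets use "fc").
    """
    # Step 3: freeze every existing parameter so pre-trained weights are kept.
    # Done first so that the new layer added below stays trainable.
    for param in model.parameters():
        param.requires_grad = False

    # Step 2: replace the old output layer with one sized for the new task.
    old_head = getattr(model, head_name)
    new_head = nn.Linear(old_head.in_features, num_classes)
    setattr(model, head_name, new_head)  # new parameters require gradients by default
    return model


class TinyNet(nn.Module):
    """Stand-in for Step 1: a 'pre-trained' model with a 1000-class output layer."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Linear(32, 64), nn.ReLU(),
            nn.Linear(64, 16), nn.ReLU(),
        )
        self.fc = nn.Linear(16, 1000)

    def forward(self, x):
        return self.fc(self.features(x))


model = adapt_for_new_task(TinyNet(), head_name="fc", num_classes=5)

# Only the parameters of the new output layer remain trainable.
trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['fc.weight', 'fc.bias']
```

Passing only the trainable parameters to the optimizer, for example `torch.optim.Adam(model.fc.parameters())`, then trains the new output layer while the frozen layers stay fixed.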

Fine-tuning and Training

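After the above steps, we are ready for fine-tuning and training on our specific task. We can either train only the new output layer while the pre-trained layers stay frozen, or selectively unfreeze some of the later layers (or the entire model) to allow their weights to be updated, usually with a small learning rate. Later layers are the usual candidates because earlier layers tend to capture generic features, such as edges and textures in images, while later layers are more specific to the original task. The decision to freeze or unfreeze layers depends on the availability of data, computational resources, and the complexity of the task.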
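One way to act on this choice is to keep the first pre-trained block frozen, unfreeze the block closest to the output, and give that block a smaller learning rate than the new output layer. The sketch below assumes a model split into hypothetical `early`, `late`, and `head` parts. The layer sizes and learning rates are illustrative placeholders, not recommended values.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pre-trained network with a freshly added head.
model = nn.ModuleDict({
    "early": nn.Sequential(nn.Linear(32, 64), nn.ReLU()),  # generic features
    "late": nn.Sequential(nn.Linear(64, 16), nn.ReLU()),   # more task-specific features
    "head": nn.Linear(16, 5),                              # new output layer
})


def set_trainable(module: nn.Module, trainable: bool) -> None:
    """Freeze (False) or unfreeze (True) all parameters of a module."""
    for param in module.parameters():
        param.requires_grad = trainable


set_trainable(model["early"], False)  # stays frozen
set_trainable(model["late"], True)    # fine-tuned gently
set_trainable(model["head"], True)    # trained from scratch

# Per-group learning rates: small for pre-trained weights, larger for the new head.
optimizer = torch.optim.SGD(
    [
        {"params": model["late"].parameters(), "lr": 1e-4},
        {"params": model["head"].parameters(), "lr": 1e-2},
    ],
    momentum=0.9,
)


def forward(x: torch.Tensor) -> torch.Tensor:
    return model["head"](model["late"](model["early"](x)))


# One update step on random placeholder data.
x, y = torch.randn(8, 32), torch.randint(0, 5, (8,))
early_before = model["early"][0].weight.clone()
loss = nn.functional.cross_entropy(forward(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

With little data, leaving more layers frozen reduces the risk of overfitting. With more data and compute, unfreezing more of the network usually becomes worthwhile.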

Conclusion

Transfer learning is a powerful technique that allows us to leverage knowledge gained from one task and apply it to another related task in deep learning. It offers several benefits, including improved performance, reduced training time, and the ability to overcome data limitations. By utilizing pre-trained models and fine-tuning them, we can achieve impressive results even with limited labeled data. Whether in image classification, object detection, text classification, or speech recognition, transfer learning has proven to be a valuable approach in various domains.

Key Takeaways: What is transfer learning in Deep Learning?

- Transfer learning is a technique in deep learning where a pre-trained model is used to solve a new, similar task.

- It allows the knowledge learned from one task to be applied to another, saving time and resources.

- Transfer learning can be especially useful when there is limited labeled data available for the new task.

- By leveraging the knowledge encoded in a pre-trained model, transfer learning enables more efficient and accurate learning.

- It can be compared to how humans learn by building upon knowledge gained from previous experiences.

Frequently Asked Questions

Transfer learning is a concept in deep learning where knowledge gained from one task is applied to a different but related task. It involves taking a pre-trained model and using it as a starting point for a new model, allowing the new model to learn from the already learned knowledge. This saves time and computational resources and can lead to improved performance in the new task.

How does transfer learning work in deep learning?

In transfer learning, a pre-trained model is used as a starting point instead of training a model from scratch. The pre-trained model has already been trained on a large dataset and has learned general features. These features can be transferred to a new model, which can then be fine-tuned on a smaller, specific dataset for the new task.

This transfer of knowledge allows the new model to benefit from the learned representations and generalizations made by the pre-trained model. It saves time and resources as the new model does not need to start from scratch and can learn from the already learned features.

What are the advantages of using transfer learning in deep learning?

The use of transfer learning in deep learning has several advantages. Firstly, it saves time and computational resources as the new model does not need to be trained from scratch. It can leverage the learned knowledge from the pre-trained model and build upon it for the new task.

Secondly, transfer learning can lead to improved performance. The pre-trained model has learned general features from a large dataset, which can be transferable to the new task. This can help the new model learn faster and achieve better results, especially when the new task has limited data.

When is transfer learning useful in deep learning?

Transfer learning is particularly useful in deep learning when there is limited data available for the new task. Deep learning models typically require a large amount of data to generalize well. However, in many real-world scenarios, gathering a large dataset for a specific task can be challenging or time-consuming.

In such cases, transfer learning can be beneficial as it enables us to leverage the knowledge learned from a larger dataset through a pre-trained model. This allows the new model to make use of the already learned features and generalize better, even with limited data.

Are there any limitations or challenges in using transfer learning in deep learning?

While transfer learning has many advantages, there are also some limitations and challenges to consider. One challenge is that the pre-trained model needs to be suitable for the new task. If the pre-trained model has learned features that are not relevant to the new task, it may not provide significant benefits.

Another limitation is that transfer learning relies on the assumption that the new task is related to the original task the pre-trained model was trained on. If the tasks are too different, the transfer of knowledge may not be effective, and training the model from scratch may be more suitable.

How can one implement transfer learning in deep learning?

To implement transfer learning in deep learning, the process usually involves the following steps. First, select a pre-trained model that is suitable for the new task. Next, remove the final layers of the pre-trained model, which are task-specific, and add new layers for the new task.

It is important to freeze the weights of the pre-trained layers initially and only train the new layers. Otherwise, the large updates caused by the randomly initialized new layers can disrupt the pre-trained features. Once the new layers have been trained, some or all of the pre-trained layers can be unfrozen and fine-tuned, typically with a low learning rate, to further enhance performance if necessary.
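The two-phase schedule described above might look like the following in PyTorch. This is a sketch on synthetic data: the `backbone`, the random inputs and labels, the step counts, and the learning rates are all placeholders chosen so the snippet runs quickly, not tuned values.

```python
import torch
from torch import nn

torch.manual_seed(0)

backbone = nn.Sequential(nn.Linear(20, 32), nn.ReLU())  # stand-in for pre-trained layers
head = nn.Linear(32, 3)                                  # new task-specific layer
model = nn.Sequential(backbone, head)

# Synthetic placeholder dataset: 64 examples, 3 classes.
inputs = torch.randn(64, 20)
labels = torch.randint(0, 3, (64,))


def train(params, lr: float, steps: int) -> float:
    """Run a few full-batch gradient steps, updating only the given parameters."""
    optimizer = torch.optim.Adam(params, lr=lr)
    for _ in range(steps):
        loss = nn.functional.cross_entropy(model(inputs), labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    return loss.item()


# Phase 1: freeze the pre-trained layers and train only the new head.
for p in backbone.parameters():
    p.requires_grad = False
backbone_before = backbone[0].weight.clone()
loss_phase1 = train(head.parameters(), lr=1e-2, steps=50)
backbone_after_phase1 = backbone[0].weight.clone()

# Phase 2: unfreeze everything and fine-tune with a much smaller learning rate.
for p in backbone.parameters():
    p.requires_grad = True
loss_phase2 = train(model.parameters(), lr=1e-4, steps=50)
```

The pre-trained weights are untouched during the first phase and move only slightly during the second, which is the intended effect of the small learning rate.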

Summary

Transfer learning in deep learning is all about using knowledge from one task to help with another. It’s like using what you’ve already learned to make new learning easier. This can save time and resources when training deep learning models.

By reusing pre-trained models or pre-trained layers, transfer learning allows us to leverage existing knowledge. We can adapt these models to new tasks by fine-tuning them or extracting features. With transfer learning, we can improve accuracy and performance even with limited data. It’s a powerful technique that makes deep learning more efficient and effective.