Do you ever wonder how computers are able to understand and process human language? Well, that’s where the magic of deep learning comes into play. In this article, we’ll explore the fascinating world of natural language processing and unravel the secrets of how deep learning is used in this field!

So, what exactly is deep learning? It’s a branch of machine learning, which is itself part of artificial intelligence, that trains neural networks with many layers to spot patterns in data and make predictions from them. These networks are loosely inspired by the human brain, but they learn from examples rather than understanding language the way people do.

Now, let’s dive into how deep learning is utilized in natural language processing (NLP). NLP is all about teaching computers to process and interpret human language, so that they can analyze and respond to text or speech. Deep learning algorithms play a crucial role in this process: they learn from vast amounts of text and produce language models that capture how words are actually used.

In this article, we’ll discover the specific techniques of deep learning, like recurrent neural networks and transformers, that are used in NLP applications. We’ll explore how these algorithms are trained to perform tasks like text classification, sentiment analysis, language translation, and even chatbot interactions. So, fasten your seatbelts and get ready to unravel the wonders of deep learning in natural language processing!

Contents

- How is Deep Learning used in Natural Language Processing?

- Deep Learning techniques for Natural Language Processing

- Leveraging Deep Learning for NLP Advancements

- Key Takeaways: How is Deep Learning used in natural language processing?

- Frequently Asked Questions

- 1. How does deep learning help in understanding the meaning of text?

- 2. How does deep learning assist in sentiment analysis?

- 3. How is deep learning used in chatbots and virtual assistants?

- 4. How does deep learning help in text generation?

- 5. How is deep learning used in speech recognition?

- Summary

How is Deep Learning used in Natural Language Processing?

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. Deep learning, on the other hand, is a branch of machine learning that uses neural networks to analyze and process complex data. In recent years, deep learning has revolutionized NLP, enabling computers to understand and generate human language with remarkable accuracy. In this article, we will explore how deep learning is used in natural language processing and its impact on various applications.

1. Sentiment Analysis and Text Classification

Deep learning is widely used for sentiment analysis and text classification. Text classification assigns a piece of text to one of a set of predefined categories, such as spam or not spam; sentiment analysis is the special case where the categories describe the sentiment or emotion expressed, such as positive, negative, or neutral. Deep learning models, particularly recurrent neural networks (RNNs) and convolutional neural networks (CNNs), have shown impressive results here because they can take word order and context into account rather than treating words in isolation. These models learn patterns and relationships from labeled examples, which lets them classify new text into the right category. Typical applications include social media sentiment tracking, customer feedback analysis, and personalized marketing campaigns.

Benefits:

– Deep learning models can handle large volumes of text data, making sentiment analysis and text classification feasible at scale.

– The ability of deep learning models to capture nuances and contextual information improves the accuracy of sentiment analysis compared to traditional methods.

– These models can be retrained or fine-tuned on new data, which lets them keep up with changing trends and evolving language patterns.
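
To make the idea of learning from labeled examples concrete, here is a minimal sketch of a sentiment classifier. It is deliberately far simpler than the RNNs and CNNs mentioned above: a single-layer network (logistic regression) over word counts, trained with gradient descent. The four training sentences and all function names are made up for illustration.

```python
import math

# Tiny hand-written training set: 1 = positive, 0 = negative (illustrative only).
TRAIN = [
    ("great product love it", 1),
    ("love this great service", 1),
    ("terrible product hate it", 0),
    ("hate this awful service", 0),
]

def featurize(text, vocab):
    """Bag-of-words vector: how often each vocabulary word occurs in the text."""
    words = text.split()
    return [float(words.count(w)) for w in vocab]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=200, lr=0.5):
    """Fit one weight per word plus a bias with stochastic gradient descent."""
    vocab = sorted({w for text, _ in examples for w in text.split()})
    weights = [0.0] * len(vocab)
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = featurize(text, vocab)
            pred = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            error = pred - label          # gradient of the log loss w.r.t. the score
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return vocab, weights, bias

def predict(text, vocab, weights, bias):
    """Probability that the text is positive; unseen words are ignored."""
    x = featurize(text, vocab)
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

vocab, weights, bias = train(TRAIN)
print(predict("love it great", vocab, weights, bias))   # above 0.5: positive
print(predict("hate awful", vocab, weights, bias))      # below 0.5: negative
```

A deep model replaces the word counts with learned representations and stacks more layers, but the loop of predicting, measuring the error, and nudging the weights is the same.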

2. Machine Translation

Deep learning has significantly improved machine translation systems. Previously, statistical approaches were used for machine translation, but they struggled with sentence structure and context. Deep learning models with an encoder-decoder architecture, first built from recurrent neural networks and more recently from transformers, have overcome many of these limitations. The encoder reads the whole source sentence and turns it into a numerical representation; the decoder then generates the translation one word (or sub-word) at a time, with each choice depending on that representation and on the words already produced. Because the sentence is handled as a whole rather than translated piece by piece, word order and context carry over much better. Deep learning-based translation systems, such as Google Translate, have achieved impressive results, especially for widely spoken languages.

Benefits:

– Deep learning models have improved translation accuracy, making machine translation more viable for businesses and individuals.

– These models can handle multiple languages and adapt to different language structures, enabling accurate translations across various language pairs.

– Deep learning-based translation systems continue to improve with more data, allowing for continuous enhancement in translation quality.
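
The encoder-decoder flow can be sketched as a short inference loop. This is a minimal illustration, not how any real translation system is implemented: `greedy_decode`, `toy_encode`, `toy_decode_step`, and the one-entry phrase table are all hypothetical stand-ins, and a trained model would replace the lookup with neural networks that score every word in the vocabulary.

```python
def greedy_decode(encode, decode_step, source_tokens, max_len=20):
    """Generic encoder-decoder inference loop with greedy token selection.

    encode:      maps the source tokens to a context object
    decode_step: maps (context, tokens_so_far) to a dict of next-token scores
    """
    context = encode(source_tokens)          # read the whole source first
    output = ["<s>"]                         # start-of-sentence marker
    for _ in range(max_len):
        scores = decode_step(context, output)
        next_token = max(scores, key=scores.get)   # greedy: take the best score
        if next_token == "</s>":             # end-of-sentence marker
            break
        output.append(next_token)
    return output[1:]                        # drop the start marker

# Stand-in "model": a phrase table instead of trained networks (assumption).
TOY_PHRASES = {("the", "black", "cat"): ["le", "chat", "noir"]}

def toy_encode(source_tokens):
    return TOY_PHRASES[tuple(source_tokens)]

def toy_decode_step(context, tokens_so_far):
    position = len(tokens_so_far) - 1        # words emitted so far, not counting <s>
    if position < len(context):
        return {context[position]: 1.0, "</s>": 0.0}
    return {"</s>": 1.0}

print(greedy_decode(toy_encode, toy_decode_step, ["the", "black", "cat"]))
# prints ['le', 'chat', 'noir']
```

Note that the output order ("chat noir") differs from the source order ("black cat"), which is only possible because the whole sentence is read before any word is produced.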

3. Speech Recognition

Deep learning has revolutionized the field of speech recognition. Speech recognition technology converts spoken words into written text, and deep learning models have significantly improved its accuracy. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are commonly used for speech recognition tasks. CNNs pick out local patterns in the acoustic features of speech, such as how energy is spread across frequencies over short time windows, while RNNs model how those features unfold over time and help predict the most likely sequence of words for the audio input. Voice assistants like Apple’s Siri and Google Assistant rely on deep learning-based speech recognition, which has improved the user experience and made voice-controlled interfaces a reality.

Benefits:

– Deep learning models have improved speech recognition accuracy, making voice assistants and speech-to-text systems more reliable and efficient.

– These models can handle variations in speech patterns, accents, and background noise, enabling robust speech recognition in real-world scenarios.

– Deep learning-based speech recognition technology has enhanced accessibility for individuals with disabilities, allowing them to interact with digital devices using their voice.

4. Question Answering and Chatbots

Deep learning plays a crucial role in question-answering systems and chatbots. These systems are designed to understand user queries and provide accurate and relevant responses. Deep learning models, such as transformers and other deep neural networks, are used to process and analyze the input question, match it with relevant information, and generate appropriate answers. These models can learn from large corpora of text data and capture the semantic meaning and context of the questions, enabling chatbots to provide context-aware responses. Chatbots powered by deep learning are used in customer support, virtual assistants, and information retrieval systems.

Benefits:

– Deep learning models enable accurate and context-aware responses in question-answering systems and chatbots, enhancing user experience.

– These models can handle variations in question phrasing and understand the user’s intent, leading to more accurate and relevant answers.

– Deep learning-based chatbots can learn and improve over time with more user interactions, resulting in better performance and user satisfaction.

5. Text Generation

Deep learning has made significant strides in text generation tasks, such as language modeling, story generation, and poetry generation. Most text generators are autoregressive language models built on recurrent neural networks (RNNs) or, more recently, transformers: they predict the next word given the words so far and repeat that step to build up a passage. Generative adversarial networks (GANs) have also been explored for text, although they are harder to train on discrete words than on images. Text generation models are trained on large volumes of text data, learning the patterns and structures of sentences. These models can then generate new text that resembles human-written content. Deep learning-based text generation is used in creative applications, content generation, and automated writing systems.

Benefits:

– Deep learning models have improved the naturalness and creativity of text generation, allowing for more engaging and human-like content creation.

– These models can learn from diverse text sources, enabling them to generate text in various styles, genres, and tones.

– Deep learning-based text generation systems can assist authors, marketers, and content creators in generating high-quality content efficiently.
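
One step that most neural text generators share is turning the model's scores for each candidate word into a probability distribution and sampling from it. The sketch below shows that step with temperature scaling, a common way to trade predictability for variety. The scores are invented; in a real system they would come from a trained network, and `sample_next_token` is a hypothetical helper name.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, rng=random):
    """Sample one token from unnormalized scores after temperature scaling.

    logits: dict mapping candidate token -> score from the model
    Lower temperature sharpens the distribution; higher temperature flattens it.
    Returns the sampled token and the probabilities that were used.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    top = max(scaled)                             # subtract the max for stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]             # softmax
    token = rng.choices(tokens, weights=probs, k=1)[0]
    return token, dict(zip(tokens, probs))

# Made-up scores for the word following "The cat sat on the".
scores = {"mat": 2.0, "sofa": 1.0, "moon": -1.0}
token, probs = sample_next_token(scores, temperature=0.7)
print(token, probs)
```

Generating a full passage means calling a function like this in a loop, appending each sampled word to the context and asking the model for fresh scores.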

6. Named Entity Recognition

Deep learning is also utilized in named entity recognition (NER) tasks. NER involves identifying and classifying named entities, such as person names, organization names, locations, and dates, in a given text. Deep learning models, particularly recurrent neural networks (RNNs) and transformers, have shown remarkable performance in NER by learning to recognize patterns and context. These models can understand the relationships between words and identify specific entities in text data. Named entity recognition is applied in various domains, including information extraction, entity linking, and data mining.

Benefits:

– Deep learning-based models have significantly improved the accuracy and speed of named entity recognition, enabling efficient processing of large volumes of text.

– These models can handle variations in named entity expressions, such as different writing styles or abbreviations, leading to accurate entity identification.

– Deep learning-based named entity recognition systems can adapt to new entity types and continuously improve with additional labeled data.
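
NER models usually predict one label per word, and a small post-processing step turns those labels into entities. The sketch below assumes the widely used BIO tagging convention (`B-` begins an entity, `I-` continues it, `O` is outside any entity), which the article does not name explicitly. The tags are written by hand here; in practice they would be the output of a trained model.

```python
def bio_to_spans(tokens, tags):
    """Group per-token BIO tags into (entity_text, entity_type) pairs.

    An I- tag that does not continue an open entity of the same type
    is treated as outside any entity.
    """
    spans, current, current_type = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if current:                                   # close the previous entity
                spans.append((" ".join(current), current_type))
            current, current_type = [token], tag[2:]
        elif tag.startswith("I-") and current and tag[2:] == current_type:
            current.append(token)                         # extend the open entity
        else:
            if current:
                spans.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:                                           # entity at end of sentence
        spans.append((" ".join(current), current_type))
    return spans

tokens = "Ada Lovelace visited London in 1843".split()
tags = ["B-PER", "I-PER", "O", "B-LOC", "O", "B-DATE"]
print(bio_to_spans(tokens, tags))
# prints [('Ada Lovelace', 'PER'), ('London', 'LOC'), ('1843', 'DATE')]
```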

7. Semantic Analysis and Topic Modeling

Deep learning techniques are also employed in semantic analysis and topic modeling. Semantic analysis involves understanding the meaning and intent behind a piece of text, while topic modeling aims to identify the main themes or topics present in a collection of documents. Deep learning models such as sentence and document encoders, along with neural variants of topic models, learn the semantic relationships between words, sentences, and documents. These models are trained on large text datasets and can then help identify the sentiment, subject, or topic discussed in a document. Semantic analysis and topic modeling find applications in social media monitoring, content recommendation, and market research.

Benefits:

– Deep learning models enable accurate semantic analysis and topic modeling by capturing the contextual information and relationships within texts.

– These models can handle ambiguous or complex language constructions, leading to more accurate and nuanced analysis.

– Deep learning-based semantic analysis and topic modeling systems can scale to handle large and diverse datasets, providing valuable insights for businesses and researchers.

Deep Learning techniques for Natural Language Processing

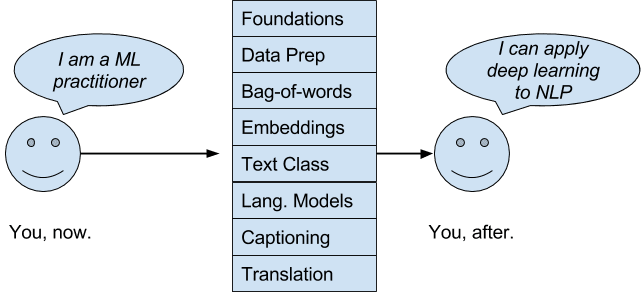

During the past decade, several deep learning techniques have emerged as powerful tools for natural language processing (NLP). These techniques leverage the capabilities of neural networks to analyze and process language data, enabling computers to understand, generate, and manipulate human language with increasing accuracy. Let’s explore some of the key deep learning techniques commonly used in NLP applications.

1. Word Embeddings

Word embeddings represent words as dense vectors of real numbers, typically a few hundred dimensions, arranged so that words with similar meanings sit close to each other. Methods such as Word2Vec and GloVe learn these vectors from large text corpora: Word2Vec trains a shallow neural network to predict words from their neighbors, while GloVe fits vectors to word co-occurrence statistics. The resulting embeddings capture semantic relationships between words, giving downstream models a useful measure of word similarity. Each word gets a single vector regardless of the sentence it appears in, so these embeddings do not by themselves distinguish different senses of the same word. Word embeddings find application in various NLP tasks, such as sentiment analysis, text classification, and information retrieval.
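
"Close to each other" is usually measured with cosine similarity. Here is a minimal sketch using three hand-written vectors; the numbers are invented for illustration, whereas real embeddings are learned from a corpus and have many more dimensions.

```python
import math

# Toy 3-dimensional embeddings, written by hand for illustration only.
EMBEDDINGS = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, embeddings=EMBEDDINGS):
    """Return the other vocabulary word whose vector is closest to `word`."""
    target = embeddings[word]
    candidates = [w for w in embeddings if w != word]
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))

print(most_similar("king"))   # prints queen
```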

2. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a type of neural network architecture designed to process sequential data, such as natural language. An RNN reads a sequence one element at a time and carries a hidden state from step to step, which lets it capture dependencies and context across a sequence of words. This makes RNNs a natural fit for tasks such as language modeling, machine translation, and speech recognition. However, traditional RNNs suffer from vanishing or exploding gradients, which hamper their ability to learn long-term dependencies. To address this, variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) were developed; their gating mechanisms mitigate the vanishing-gradient problem and improve the performance of RNNs in NLP tasks.
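
The way an RNN carries memory forward can be shown in a few lines. This is a sketch of the forward pass of a plain (vanilla) RNN with a tanh activation, not an LSTM or GRU; the weights are hand-picked for the example, whereas a real network would learn them during training.

```python
import math

def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at each step.

    inputs: list of input vectors, one per time step
    w_xh:   input-to-hidden weights, shape [hidden][input]
    w_hh:   hidden-to-hidden weights, shape [hidden][hidden]
    b_h:    hidden bias, length hidden
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size                  # initial hidden state
    states = []
    for x in inputs:
        new_h = []
        for i in range(hidden_size):
            total = b_h[i]
            total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
            total += sum(w_hh[i][k] * h[k] for k in range(hidden_size))
            new_h.append(math.tanh(total))
        h = new_h                            # the state carries context forward
        states.append(h)
    return states

# One hidden unit, one input feature, hand-picked weights.
states = rnn_forward(inputs=[[1.0], [0.0]], w_xh=[[1.0]], w_hh=[[0.5]], b_h=[0.0])
print(states)
```

The second input is zero, yet the second hidden state is not: it still reflects the first input through the recurrent weight `w_hh`. That carried-over signal is the "memory" described above.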

3. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are widely known for their success in image recognition tasks. However, CNNs can also be applied to text data by treating the text as a one-dimensional signal. CNNs use filters to extract local features from the text, capturing patterns such as n-grams or phrases. These filters slide across the text, generating feature maps that are usually pooled (for example, by keeping the maximum value of each map) and then fed into fully connected layers for classification or regression tasks. CNNs have shown excellent performance in tasks such as sentiment analysis, text categorization, and document classification.
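
To show what "sliding a filter across the text" means, here is a minimal sketch of a single convolutional filter followed by ReLU and max-over-time pooling. The two-dimensional token vectors and the filter weights are invented so the arithmetic is easy to follow; a real model learns many filters over much larger embeddings.

```python
def conv1d_max_pool(token_vectors, filt, bias=0.0):
    """Slide one filter over a sequence of token vectors, apply ReLU,
    and keep the strongest response (max-over-time pooling).

    token_vectors: list of embedding vectors, one per token
    filt:          filter weights, shape [window][embedding_dim]
    Returns the feature map and its maximum value.
    """
    window = len(filt)
    if len(token_vectors) < window:
        raise ValueError("sequence is shorter than the filter window")
    feature_map = []
    for start in range(len(token_vectors) - window + 1):
        total = bias
        for offset in range(window):
            vec = token_vectors[start + offset]
            total += sum(w * x for w, x in zip(filt[offset], vec))
        feature_map.append(max(0.0, total))   # ReLU
    return feature_map, max(feature_map)

# Four tokens with 2-dimensional vectors; the filter spans two tokens (a bigram)
# and responds most when a [0, 1] token is followed by a [1, 0] token.
tokens = [[1, 0], [0, 1], [1, 0], [0, 0]]
bigram_filter = [[0, 1], [1, 0]]
print(conv1d_max_pool(tokens, bigram_filter))   # prints ([0.0, 2.0, 0.0], 2.0)
```

Pooling keeps only the value 2.0, so the classifier learns that the pattern occurred somewhere in the text without caring exactly where.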

4. Transformers

Transformers are a deep learning architecture that has gained attention for its exceptional performance in language tasks. Well-known models such as BERT and GPT are built on it. Transformers use attention mechanisms, specifically self-attention, to learn the relationships between words in a sentence or document. The attention mechanism allows the model to focus on relevant parts of the input text and capture long-range dependencies, making Transformers highly effective in tasks such as machine translation, text summarization, and question answering. Transformers have become the state of the art on many NLP benchmarks and have significantly advanced the field of natural language processing.
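
At the heart of the attention mechanism is a small calculation known as scaled dot-product attention: each query is compared with every key, the scores are turned into weights with a softmax, and the output is the weighted average of the values. The sketch below implements just that calculation in plain Python with invented vectors; a full Transformer adds learned projections, multiple heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)                              # subtract the max for stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors.

    The weight on position j is softmax over j of (q . k_j) / sqrt(d_k),
    where d_k is the length of a key vector.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# One query that points in the same direction as the first key.
outputs, weights = scaled_dot_product_attention(
    queries=[[10.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
print(weights[0])   # almost all of the weight lands on the first position
```

Because every position can attend to every other position in one step, distance in the sentence does not weaken the connection, which is how attention captures long-range dependencies.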

5. Autoencoders

Autoencoders are unsupervised learning models that aim to learn efficient representations of the input data. An autoencoder has two parts: an encoder that compresses the input into a compact code, and a decoder that tries to reconstruct the original input from that code. In the context of NLP, autoencoders can be used for tasks such as text generation and document reconstruction. When trained on a large corpus of text, an autoencoder learns to encode high-dimensional text inputs into low-dimensional representations, often referred to as embeddings. These embeddings capture the essence of the input data and can be used for various downstream tasks, including semantic similarity, clustering, and topic modeling.

6. Generative Models

Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), are deep learning models that can generate new data samples resembling the training data. In the context of NLP, generative models have been used for tasks such as text generation, dialog systems, and chatbots. A GAN trains a generator network to produce text samples and a discriminator network to tell them apart from real text, with each network improving against the other; applying this to text is harder than to images because words are discrete. VAEs, on the other hand, learn a probabilistic representation of the input text data and can generate new samples based on this learned distribution.

Leveraging Deep Learning for NLP Advancements

Deep learning has revolutionized the field of natural language processing, enabling computers to understand, generate, and manipulate human language with unprecedented accuracy. Across tasks such as sentiment analysis, machine translation, speech recognition, question answering, text generation, named entity recognition, and semantic analysis, deep learning is transforming various applications and industries. The continuous advancements in deep learning algorithms and models, coupled with the availability of large datasets, have propelled NLP to new heights.

As deep learning continues to evolve, it holds immense potential for further advancements in natural language processing. Ongoing research focuses on improving model efficiency, interpretability, and fine-tuning techniques for specialized domains. Additionally, the integration of deep learning with other AI techniques, such as reinforcement learning and graph neural networks, opens up new possibilities for NLP applications.

To leverage the power of deep learning in NLP, it is crucial to have access to large amounts of data, labeled for supervised tasks and unlabeled for pretraining, along with computational resources capable of training complex models. As the technology matures and becomes more accessible, we can expect to see even more innovative solutions and breakthroughs in the field of natural language processing, empowering computers to communicate and understand human language more effectively than ever before.

Key Takeaways: How is Deep Learning used in natural language processing?

- Deep learning is a technique used to teach computers how to understand and process human language.

- It uses neural networks, which are loosely inspired by the brain, to analyze and interpret text and speech.

- Deep learning algorithms can improve over time by learning from large amounts of data.

- With deep learning, computers can perform tasks such as language translation, sentiment analysis, and question answering.

- Deep learning models have achieved impressive results in various natural language processing applications.

Frequently Asked Questions

When it comes to natural language processing, deep learning plays a crucial role. Deep learning involves training neural networks to learn and understand patterns in data, and it has proven to be highly effective in processing and understanding text. Here are some commonly asked questions about how deep learning is used in natural language processing:

1. How does deep learning help in understanding the meaning of text?

Deep learning models, like recurrent neural networks (RNNs) and transformer models, are designed to understand the context and semantics of text. By analyzing large amounts of text data, these models can learn to identify patterns and relationships within the text. This allows them to accurately interpret the meaning of words, phrases, and entire sentences.

For example, in machine translation applications, deep learning models can learn to recognize the corresponding words and phrases between different languages by analyzing large bilingual datasets. This helps in accurately translating text from one language to another, taking into account the context and nuances of the language.

2. How does deep learning assist in sentiment analysis?

Sentiment analysis is the process of determining the sentiment or emotion behind a given piece of text. Deep learning models can be trained to classify text data into different sentiment categories, such as positive, negative, or neutral. These models learn to recognize patterns in the text that are indicative of different emotions.

For example, a deep learning model can analyze customer reviews to determine whether they are positive or negative, helping businesses understand customer satisfaction levels. By training on large datasets of labeled customer feedback, the model can learn to accurately classify new reviews and provide valuable insights to businesses.

3. How is deep learning used in chatbots and virtual assistants?

Chatbots and virtual assistants rely on natural language processing techniques to understand and generate human-like responses. Deep learning models are used to train these systems to understand user queries and provide relevant responses.

By analyzing large amounts of conversational data, deep learning models can learn to generate contextually appropriate responses based on the input they receive. They can understand the intent behind user queries, extract relevant information, and generate accurate responses. This enables chatbots and virtual assistants to have more natural and effective interactions with users.

4. How does deep learning help in text generation?

Deep learning models such as recurrent neural networks (RNNs) and transformers are widely used for text generation tasks, and generative adversarial networks (GANs) have been explored as well. These models can learn the patterns and structures of a given text dataset and generate new, coherent text based on that training.

For example, deep learning models have been used to generate human-like text in applications like creative writing and automated article generation. By training on large amounts of text data, the models can learn to mimic the style and structure of the input text, allowing them to generate new text that is contextually relevant and coherent.

5. How is deep learning used in speech recognition?

Deep learning models, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have been extremely successful in speech recognition tasks. They can learn to convert spoken language into written text, enabling applications like voice assistants and transcription services.

By training on large speech datasets, deep learning models can learn to recognize and interpret speech patterns. They can identify phonetic and linguistic features in the audio signals, and map them to corresponding words or phrases. This allows for accurate and efficient speech recognition, even in noisy or challenging environments.

Summary

Deep learning is a powerful technology used in natural language processing (NLP). It helps computers understand and generate human language by analyzing vast amounts of data. By using deep neural networks, NLP systems can accurately classify text, extract information, and even generate human-like language. Deep learning has revolutionized the field of NLP and is used in various applications, such as voice assistants, language translation, and sentiment analysis.

However, deep learning in NLP also faces challenges. It requires a large amount of labeled data for training, which can be time-consuming and expensive to acquire. Additionally, deep learning models can sometimes struggle with understanding context and sarcasm in language. Despite these challenges, researchers are constantly working to improve deep learning techniques for NLP, making it an exciting and rapidly evolving field. With its ability to help computers understand and interact with human language, deep learning is transforming how we communicate and interact with technology.