Are there any limitations to Deep Learning? You’ve probably heard about the amazing capabilities of deep learning algorithms in tasks like image recognition and natural language processing. But, like any powerful tool, there are certain limitations that come along with it.

Now, you might be wondering, what are these limitations and why should I care? Well, understanding the limitations of deep learning can help us better grasp its potential and avoid unrealistic expectations. So, let’s dive in and discover the boundaries of this exciting technology!

In this article, we’ll look at where deep learning falls short. We’ll discuss why its models are hard to interpret, why they need large amounts of training data and computing power, how they can overfit or be fooled by small changes to their input, and how biases in the data can end up embedded in the algorithms. So, buckle up, and get ready to explore the boundaries of deep learning!

Are there any limitations to Deep Learning?

Deep learning has revolutionized the field of artificial intelligence, enabling machines to learn patterns from data rather than follow hand-written rules. This technology has shown immense promise in various domains, from image recognition to natural language processing. However, like any other technology, deep learning has its limitations. In this article, we will explore the constraints and challenges associated with deep learning algorithms, shedding light on the areas where improvements are needed.

1. Lack of Interpretability

Deep learning models, particularly deep neural networks, are known for their black-box nature. While they can provide accurate predictions, understanding the reasoning behind these predictions can be challenging. Unlike simpler models such as linear regression or decision trees, whose coefficients or rules can be inspected directly, a deep network spreads its decision across many layers of learned parameters, making it difficult for users to trust its decisions or identify potential biases. This limitation is a significant concern in critical domains such as healthcare and finance, where explainability is crucial. Researchers are actively working on developing methods to enhance the interpretability of deep learning models, but this remains an ongoing challenge.

2. Large Data Requirements

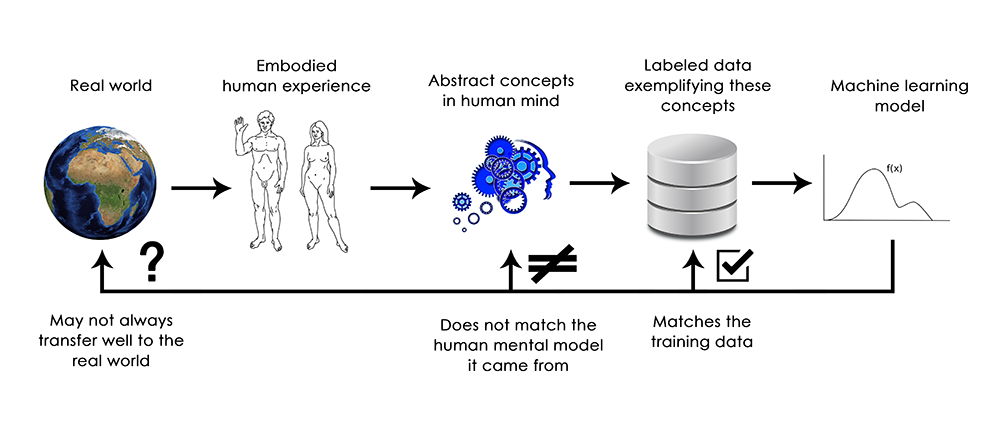

Deep learning models excel when they have access to vast amounts of data. They learn to recognize patterns and make accurate predictions by processing enormous datasets. However, this reliance on extensive data can be a limitation, especially in domains where data is scarce or expensive to collect. For example, in medical research, deep learning algorithms may struggle to perform well on rare diseases due to limited available data. This limitation highlights the need for alternative approaches or strategies to overcome the data requirements of deep learning models.

3. High Computational Power

Deep learning models are computationally demanding, often requiring powerful hardware or specialized processors such as GPUs. Training deep neural networks can be time-consuming and resource-intensive, especially for complex models with numerous layers. This limitation poses challenges for organizations or individuals with limited access to high-performance computing resources. Addressing this limitation involves developing more efficient algorithms, exploring hardware advancements, and optimizing deep learning frameworks to reduce computational requirements.
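To get a feel for how quickly cost grows with depth and width, here is a rough back-of-the-envelope sketch. It counts parameters and multiply-add operations for fully connected layers only, and the layer sizes are hypothetical examples rather than any particular published model.

```python
def dense_layer_cost(n_in, n_out):
    """Return (parameters, multiply-adds per example) for one fully connected layer."""
    params = n_in * n_out + n_out   # weights plus one bias per output unit
    macs = n_in * n_out             # one multiply-add per weight
    return params, macs


def mlp_cost(layer_sizes):
    """Sum the cost over consecutive layer pairs, e.g. [784, 128, 10]."""
    total_params, total_macs = 0, 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        params, macs = dense_layer_cost(n_in, n_out)
        total_params += params
        total_macs += macs
    return total_params, total_macs


# Hypothetical layer sizes, chosen only for illustration.
shallow = mlp_cost([784, 128, 10])
deep = mlp_cost([784, 1024, 1024, 1024, 10])

print("shallow:", shallow)  # (101770, 101632)
print("deep:   ", deep)     # (2913290, 2910208)
```

In this toy comparison the deeper, wider network needs roughly 29 times as many parameters and multiply-adds per example, before even counting the backward pass or the number of training steps.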

4. Overfitting and Generalization

Overfitting is a common challenge in machine learning, including deep learning. Deep learning algorithms can memorize the training data, resulting in poor performance on unseen data. This occurs when the model becomes overly complex and fails to generalize well. Mitigating overfitting requires techniques such as regularization, dropout, and early stopping, but it remains an ongoing concern in deep learning. Improving generalization capabilities is crucial to ensure deep learning models perform well in real-world scenarios and handle unseen or noisy data effectively.
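Of the techniques mentioned above, early stopping is the easiest to illustrate. The sketch below is a minimal version, assuming you already record one validation loss per epoch; the function name, the `patience` and `min_delta` parameters, and the loss values are all made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    `min_delta` for `patience` epochs in a row.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Never triggered: training ran to the last recorded epoch.
    return len(val_losses) - 1, best_epoch


# Made-up validation curve: improves, then starts to rise (overfitting).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(stop_epoch, best_epoch)  # 6 3
```

In practice you would also keep a copy of the model weights from `best_epoch` and restore them after stopping.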

5. Lack of Robustness

Deep learning models can be sensitive to slight variations or perturbations in the input data. Adversarial attacks, where small modifications are made to the input to fool the model, highlight the lack of robustness in deep learning algorithms. These vulnerabilities raise concerns in areas such as cybersecurity or autonomous vehicles, where even minor manipulations could have significant consequences. Developing techniques to enhance robustness and resilience to adversarial attacks is an active area of research in the deep learning community.
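To make the idea of an adversarial perturbation concrete, here is a small sketch of the fast gradient sign method applied to a logistic regression classifier, used as a simple stand-in for a deep network. The weights, input, and `epsilon` are hypothetical values picked so the effect is easy to see.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Move every input feature by +/- epsilon in the direction that raises the loss.

    For cross-entropy loss on a sigmoid output, dL/dx_i = (p - y) * w_i.
    """
    p = predict(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical "trained" model and input, for illustration only.
weights = [2.0, -3.0, 1.5, -1.0]
bias = 0.0
x = [0.2, 0.0, 0.1, 0.05]
y_true = 1

p_clean = predict(weights, bias, x)
x_adv = fgsm(weights, bias, x, y_true, epsilon=0.1)
p_adv = predict(weights, bias, x_adv)

print(f"clean input:     p(class 1) = {p_clean:.3f}")
print(f"perturbed input: p(class 1) = {p_adv:.3f}")
```

No feature moves by more than 0.1, yet the predicted probability crosses the 0.5 decision threshold. The same mechanism applies to deep networks, where inputs have far more features to nudge.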

6. Data Bias and Ethical Considerations

Deep learning models are highly dependent on the quality and diversity of the training data. If the training dataset is biased, reflecting societal or cultural biases, the model can perpetuate or amplify these biases. This raises ethical concerns, as biased models can lead to unfair or discriminatory outcomes. Ensuring fairness and addressing biases in deep learning algorithms require careful data curation, diverse representation in training data, and algorithmic interventions. The development of frameworks and guidelines for ethical AI practices is essential to mitigate these limitations.
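One simple way to check a model for the kind of bias described above is to compare how often it gives a positive prediction to each group. The sketch below computes that gap, often called the demographic parity difference. The predictions and group labels are invented, and a real audit would typically look at several fairness metrics rather than this one alone.

```python
def positive_rate(predictions):
    """Fraction of predictions equal to 1."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return (gap, per-group rates), where gap is the largest difference
    in positive-prediction rate between any two groups."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: positive_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Invented model outputs (1 = approved) for two hypothetical groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["a"] * 5 + ["b"] * 5

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)
print(f"gap = {gap:.2f}")
```

A gap near zero means both groups receive positive predictions at similar rates; a large gap is a signal to look more closely at the training data and the model.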

7. Limited Transfer Learning

Transfer learning, the ability to reuse knowledge learned on one task for another, is a powerful capability in machine learning. Deep learning models have shown considerable success here, but the benefit has limits: it tends to shrink as the new task or its data moves further away from what the model was originally trained on. Pretrained deep learning models can also require substantial fine-tuning to adapt to new tasks, which can be time-consuming and resource-intensive. Improving transfer learning capabilities in deep learning could accelerate model development and deployment across different domains.

Addressing the Limitations of Deep Learning

1. Enhanced Interpretability

Researchers are actively working on developing methods to enhance the interpretability of deep learning models. Techniques such as attention mechanisms, layer-wise relevance propagation, and visual explanations are being explored to provide insights into the decision-making process of deep neural networks. By gaining a deeper understanding of how these models arrive at their predictions, users can trust the results and identify potential biases, leading to more transparent and accountable AI systems.
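As a small illustration of what an explanation method does, the sketch below uses occlusion: it blanks out one input feature at a time and records how much the model output changes. This is a simpler perturbation-based stand-in, not an implementation of attention or layer-wise relevance propagation, and `toy_model` with its weights is a hypothetical placeholder for a trained network.

```python
def occlusion_attribution(model, x, baseline=0.0):
    """Score each feature by how much the output drops when it is replaced
    with `baseline`. Positive scores mean the feature pushed the output up."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        scores.append(reference - model(occluded))
    return scores


def toy_model(x):
    # Hypothetical stand-in for a trained network: ReLU of a weighted sum.
    weights = [0.5, -2.0, 3.0]
    return max(0.0, sum(w * xi for w, xi in zip(weights, x)))


x = [1.0, 0.5, 1.0]
scores = occlusion_attribution(toy_model, x)
most_important = max(range(len(scores)), key=lambda i: abs(scores[i]))

print(scores)          # [0.5, -1.0, 2.5]
print(most_important)  # 2
```

For images, the same idea is applied to patches of pixels, and the scores are drawn as a heatmap over the input.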

2. Data Augmentation and Semi-Supervised Learning

To mitigate the limitations posed by large data requirements, researchers are exploring techniques such as data augmentation and semi-supervised learning. Data augmentation creates additional training samples, typically by applying transformations that preserve the label (such as flips, crops, or added noise) to existing data, or by generating synthetic samples, which increases the diversity and size of the training dataset. Semi-supervised learning leverages both labeled and unlabeled data, making more efficient use of available resources. These approaches can help when data is scarce and reduce the dependency on massive labeled datasets.
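Here is a minimal sketch of data augmentation on a tiny image stored as a list of pixel rows. The two transformations (a horizontal flip and a one-pixel shift) and the helper names are illustrative choices; real pipelines usually apply random transformations on the fly with an image library.

```python
def horizontal_flip(image):
    """Mirror each row of a 2D image (list of rows)."""
    return [row[::-1] for row in image]


def shift_right(image, pixels, fill=0):
    """Shift the image right by `pixels` columns (0 <= pixels <= width),
    padding the left edge with `fill`."""
    shifted = []
    for row in image:
        keep = len(row) - pixels
        shifted.append([fill] * pixels + row[:keep])
    return shifted


def augment(dataset):
    """Return the original samples plus a flipped and a shifted copy of each.
    Labels are reused unchanged, so the transformations must not alter
    what the image depicts."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((horizontal_flip(image), label))
        augmented.append((shift_right(image, 1), label))
    return augmented


image = [[1, 2, 3],
         [4, 5, 6]]
dataset = [(image, "cat")]
augmented = augment(dataset)

print(len(augmented))  # 3
for img, label in augmented:
    print(label, img)
```

Whether a transformation is safe depends on the task: a flipped cat is still a cat, but a flipped handwritten digit or line of text may no longer match its label.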

3. Model Compression and Efficient Architectures

To address the computational power constraints of deep learning, techniques such as model compression and efficient architectures are being developed. Model compression involves reducing the size and complexity of deep neural networks while preserving their performance. This enables running deep learning models on resource-constrained devices or systems. Efficient networks, such as MobileNet and EfficientNet, have also been introduced, specifically designed for deployment on devices with limited computational capabilities.
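Quantization is one common form of model compression: weights stored as 32-bit floats are mapped to 8-bit integers plus a single scale factor, cutting storage roughly fourfold. The sketch below shows a simple symmetric scheme on a handful of made-up weights. It is meant to illustrate the idea, not to mirror how any particular framework implements it.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.5, -1.27, 0.003, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)  # [50, -127, 0, 100]
print(f"scale = {scale:.4f}, max error = {max_error:.4f}")
```

The rounding error per weight is at most half the scale factor. Note how the very small weight collapses to zero, which is one reason compressed models are re-evaluated, and sometimes fine-tuned, after compression.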

Conclusion

While deep learning has achieved remarkable success in various domains, it is important to acknowledge its limitations. From interpretability challenges to data requirements and computational constraints, deep learning algorithms are not without their shortcomings. However, ongoing research and advancements in the field are addressing these limitations, paving the way for more robust, interpretable, and efficient deep learning models. By understanding and overcoming these limitations, we can harness the full potential of deep learning and continue to drive advancements in artificial intelligence.

Key Takeaways: Are there any limitations to Deep Learning?

- Deep learning has high computational requirements, which can be a limitation for resource-constrained devices.

- Training deep learning models requires a large amount of labeled data, which can be challenging to obtain.

- Deep learning models are often seen as black boxes, making it difficult to understand how they arrive at their predictions.

- Deep learning models may suffer from overfitting, where they become too specialized to the training data and perform poorly on new, unseen data.

- Deep learning is not suitable for all types of problems and may not always be the best approach compared to traditional machine learning methods.

Frequently Asked Questions

When it comes to deep learning, many people wonder about its limitations. In this section, we’ll address some common questions regarding the limitations of deep learning and provide insightful answers.

Can deep learning solve all kinds of problems?

While deep learning is incredibly powerful, it does have its limitations. Deep learning excels at tasks such as image and speech recognition, natural language processing, and recommendation systems. However, it may not be suitable for all types of problems. For example, tasks that require reasoning and logical thinking may be better suited to other approaches.

Furthermore, deep learning typically requires a large amount of labeled data for training. In scenarios where data is scarce or of poor quality, deep learning may not perform as well. Therefore, it’s important to consider the nature of the problem and the available data when deciding whether deep learning is the right approach.

Can deep learning models explain their decisions?

One limitation of deep learning is its lack of explainability. Most deep learning models operate as black boxes, making it difficult to understand and interpret their decision-making processes. While they can make accurate predictions, they often struggle to provide explanations for these predictions.

This lack of transparency can be an issue, especially in critical applications like healthcare or finance, where understanding the reasoning behind a decision is essential. Researchers are actively working on developing techniques for explaining deep learning models, but currently, explainability remains a challenge in the field.

Is deep learning prone to overfitting?

Overfitting is a common concern in deep learning. Overfitting occurs when a model becomes too complex and starts fitting the training data too closely. As a result, the model struggles to generalize well to unseen data, leading to poor performance.

To mitigate overfitting, techniques such as regularization and dropout are commonly employed. Regularization introduces penalties for complex models, encouraging simpler and more generalizable representations. Dropout randomly drops out a fraction of the neurons during training, preventing the model from relying too heavily on specific features or patterns.
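Both ideas fit in a few lines. The sketch below shows "inverted" dropout, which rescales the surviving activations so their expected value stays the same, and an L2 penalty, one common choice of regularization term. The function names and the `rate` and `strength` values are assumptions for illustration; deep learning frameworks provide their own versions.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: during training, zero each activation with
    probability `rate` (0 <= rate < 1) and scale survivors by 1 / (1 - rate).
    At inference time the activations pass through unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, strength):
    """Regularization term added to the loss: strength * sum of squared weights."""
    return strength * sum(w * w for w in weights)


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 10000
dropped = dropout(activations, rate=0.5, rng=rng)

zeroed = sum(1 for a in dropped if a == 0.0)
mean = sum(dropped) / len(dropped)
print(f"zeroed {zeroed} of {len(dropped)}, mean activation = {mean:.3f}")
print(f"L2 penalty: {l2_penalty([1.0, -2.0], strength=0.01)}")
```

About half of the activations are zeroed and the rest are doubled, so the average stays close to 1.0, which is why no rescaling is needed when dropout is switched off at inference time.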

Does deep learning require a lot of computational resources?

Yes, deep learning typically demands significant computational resources. Deep neural networks consist of numerous layers and parameters, which require substantial computational power to train. Training deep learning models can be computationally intensive and time-consuming, especially when dealing with large datasets.

However, advancements in hardware, such as specialized graphics processing units (GPUs) and tensor processing units (TPUs), have made deep learning more accessible. These hardware accelerators are specifically designed to handle the computational demands of deep learning, allowing for faster training times and more efficient model deployment.

Are there any ethical concerns associated with deep learning?

Deep learning raises several ethical concerns. One major concern is bias in the data used to train deep learning models. If the training data is biased, the model may learn and perpetuate those biases, leading to unfair or discriminatory outcomes in decision-making processes.

Additionally, deep learning models can be vulnerable to adversarial attacks, where malicious actors purposely manipulate input data to deceive the model. This can have serious consequences, especially in sensitive applications like autonomous vehicles or cybersecurity.

Addressing these ethical concerns requires careful data collection, diverse and representative datasets, rigorous testing, and continuous monitoring of deep learning models to ensure fair and unbiased results.

Summary

Deep learning is a powerful technology, but it does have some limitations. One limitation is the need for large amounts of data to achieve accurate results. Another limitation is the lack of transparency in how deep learning models make decisions. Despite these limitations, deep learning continues to make significant advancements and has the potential to revolutionize various industries. It’s essential to understand these limitations and work towards addressing them for the continued improvement of deep learning technology.