Can you explain the concept of backpropagation? Well, imagine you’re playing a game of telephone. You whisper a message to your friend, who then passes it along to the next person, and so on. But sometimes, the message gets distorted along the way, and the final person receives a completely different message than what you initially said.

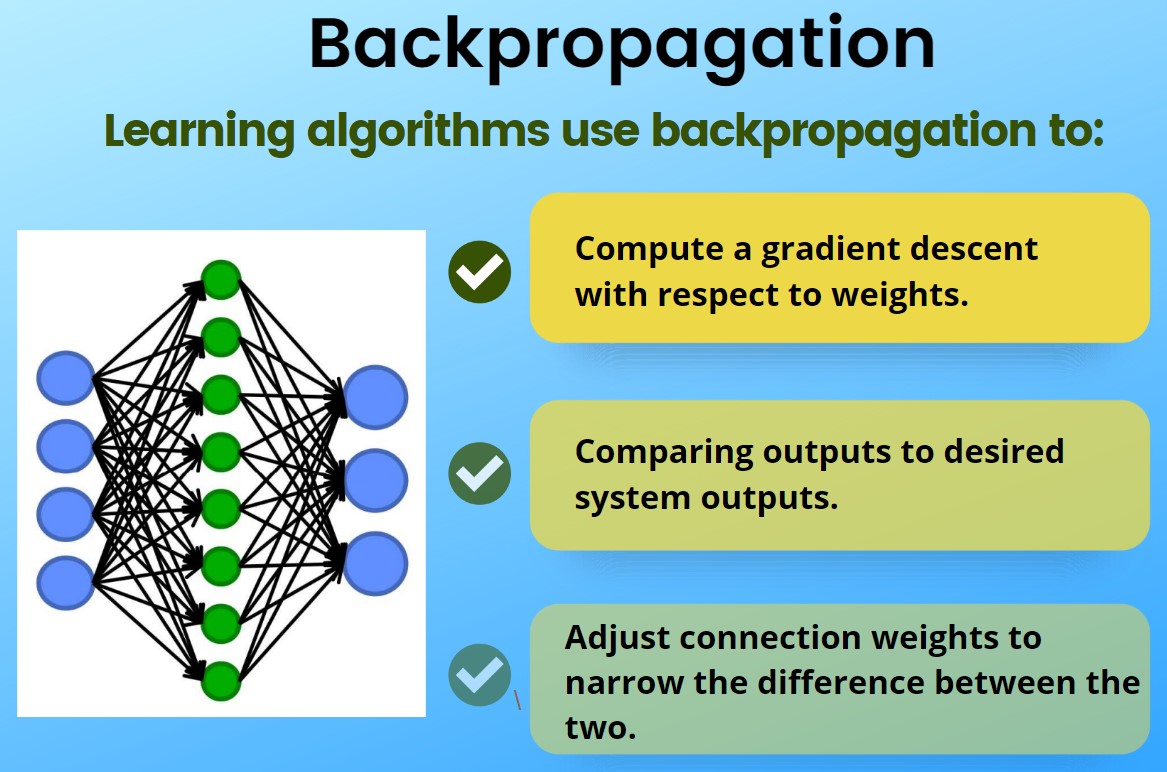

Backpropagation is like playing the telephone game with a mathematical twist. It’s a method used in artificial neural networks to fine-tune the weights and biases of the neurons, so the model can learn from its mistakes and improve its accuracy.

Think of it as a feedback loop that helps the network adjust its calculations based on the difference between its predictions and the actual outcomes of the data it’s given. By going backward through the layers of the network and updating the weights and biases, backpropagation helps the model get closer and closer to making accurate predictions.

So, are you ready to dive deeper into the fascinating world of backpropagation? Let’s unravel the mysteries together and discover how this concept powers the learning process of neural networks.

Backpropagation is a fundamental concept in machine learning. It involves the process of iteratively adjusting the weights of a neural network to minimize the error in its predictions. By propagating the error backwards through the network, the weights are updated to improve the accuracy of the model. This concept forms the basis of deep learning and enables the training of complex neural networks. Understanding backpropagation is crucial for anyone interested in machine learning and artificial intelligence.

Contents

- Demystifying Backpropagation: Unveiling the Hidden Algorithm Behind Neural Networks

- The Birth of Backpropagation: A Revolutionary Breakthrough

- Common Challenges and Pitfalls in Backpropagation

- Backpropagation: Empowering Neural Networks to Shape Our Future

- Applications of Backpropagation: From Healthcare to Finance

- Tips for Understanding and Implementing Backpropagation

- The Future of Backpropagation: Advancements on the Horizon

- Key Takeaways: Explaining the Concept of Backpropagation

- Frequently Asked Questions

- 1. How does backpropagation work?

- 2. Why is backpropagation important in neural networks?

- 3. Can backpropagation be used with any neural network architecture?

- 4. What are the challenges of backpropagation?

- 5. Are there any alternatives to backpropagation?

- Summary

Demystifying Backpropagation: Unveiling the Hidden Algorithm Behind Neural Networks

Backpropagation is a fundamental concept in the field of artificial intelligence and machine learning. Understanding it is crucial for grasping the inner workings of neural networks, the backbone of many AI applications. In this article, we will delve deep into the concept of backpropagation, exploring its origins, its mechanics, and its significance in training neural networks. So, let’s embark on a journey through the intricate world of backpropagation and unravel its secrets.

The Birth of Backpropagation: A Revolutionary Breakthrough

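Backpropagation, short for “backward propagation of errors,” has its roots in the 1970s, when the underlying idea was first described and proposed as a way to train artificial neural networks. It became widely known in 1986, when Rumelhart, Hinton, and Williams showed that it could train multi-layer networks to learn useful internal representations. This made it practical to train networks with hidden layers and reshaped the field of machine learning.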

The concept of backpropagation is rooted in the idea of error correction. When training a neural network, the goal is to minimize the difference between the predicted output and the actual output. Backpropagation allows the network to adjust its internal parameters, known as weights and biases, by propagating the error backwards from the output layer to the input layer. This iterative process fine-tunes the network’s connections, enabling it to make more accurate predictions over time.

The Mechanics of Backpropagation: Unleashing the Power of Gradients

To understand how backpropagation works, let’s dive into its mechanics. The process can be divided into two main steps: forward propagation and backward propagation.

During forward propagation, the input data is fed into the neural network, and each neuron performs a weighted sum of its inputs, followed by the application of an activation function. This process is repeated layer by layer until the output is obtained. The output is then compared to the desired output, and the error is calculated using a loss function.
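The forward pass described above can be sketched in a few lines of NumPy. This is only an illustration under assumptions that are not in the article: one hidden layer, a sigmoid activation, a squared-error loss, and made-up random data. The names `forward` and `half_mse_loss` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Forward pass through one hidden layer and one output layer."""
    z1 = x @ W1 + b1      # weighted sum in the hidden layer
    a1 = sigmoid(z1)      # hidden activations
    z2 = a1 @ W2 + b2     # weighted sum in the output layer
    y_hat = sigmoid(z2)   # network prediction
    return z1, a1, z2, y_hat

def half_mse_loss(y_hat, y):
    """Half the mean squared error (the 0.5 simplifies the derivative later)."""
    return 0.5 * np.mean((y_hat - y) ** 2)

# Made-up data and randomly initialized parameters (assumed shapes)
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))                          # 4 examples, 3 features
y = rng.integers(0, 2, size=(4, 1)).astype(float)    # 4 binary targets
W1, b1 = rng.normal(size=(3, 5)) * 0.5, np.zeros(5)  # 3 inputs -> 5 hidden units
W2, b2 = rng.normal(size=(5, 1)) * 0.5, np.zeros(1)  # 5 hidden units -> 1 output

_, _, _, y_hat = forward(x, W1, b1, W2, b2)
loss = half_mse_loss(y_hat, y)
```

The intermediate values `z1`, `a1`, and `z2` are returned because the backward pass reuses them.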

In backward propagation, the error is propagated back through the network to update the weights and biases. This is where gradients come into play. Gradients represent the rate of change of the error with respect to each weight and bias in the network. By using the chain rule of calculus, the error is backpropagated layer by layer, and the gradients are computed to determine how much each weight and bias should be adjusted.
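To make the chain rule concrete, here is a minimal sketch of the backward pass for a small network. The setup is assumed for illustration: one hidden layer, sigmoid activations, and a squared-error loss. The function name `loss_and_grads` is hypothetical. The last few lines compare one analytic gradient against a finite-difference estimate, which is a common way to sanity-check a hand-written backward pass.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(x, y, W1, b1, W2, b2):
    """Return the loss and its gradients for a one-hidden-layer network."""
    n = x.shape[0]
    # Forward pass (activations are kept for reuse in the backward pass)
    a1 = sigmoid(x @ W1 + b1)
    y_hat = sigmoid(a1 @ W2 + b2)
    loss = 0.5 * np.sum((y_hat - y) ** 2) / n

    # Backward pass: chain rule applied from the output toward the input
    delta2 = (y_hat - y) * y_hat * (1 - y_hat) / n   # dL/dz2
    dW2 = a1.T @ delta2
    db2 = delta2.sum(axis=0)
    delta1 = (delta2 @ W2.T) * a1 * (1 - a1)         # dL/dz1
    dW1 = x.T @ delta1
    db1 = delta1.sum(axis=0)
    return loss, (dW1, db1, dW2, db2)

# Made-up data and parameters (assumed shapes)
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))
y = rng.integers(0, 2, size=(4, 1)).astype(float)
W1, b1 = rng.normal(size=(3, 5)) * 0.5, np.zeros(5)
W2, b2 = rng.normal(size=(5, 1)) * 0.5, np.zeros(1)

loss, (dW1, db1, dW2, db2) = loss_and_grads(x, y, W1, b1, W2, b2)

# Finite-difference check of a single weight, W1[0, 0]
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (loss_and_grads(x, y, W1_plus, b1, W2, b2)[0]
           - loss_and_grads(x, y, W1_minus, b1, W2, b2)[0]) / (2 * eps)
analytic = dW1[0, 0]
```

If the backward pass is correct, `numeric` and `analytic` should agree to several decimal places. Note that `delta1` is built from `delta2`: each layer's error signal is computed from the layer after it, which is where the “backward” in the name comes from.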

Backpropagation Unveiled: Unraveling the Black Box of Neural Networks

Neural networks are often referred to as black boxes since their decision-making process can be challenging to interpret. However, backpropagation gives us a peek inside this black box by providing insight into how the network learns.

The gradients computed during the backward pass tell us how sensitive the error is to each weight and bias. By examining them, we can see which parts of the network a given mistake depends on most. This information can be used to debug the network, tune its performance, and get a rough sense of the patterns it has learned, although gradients alone do not fully explain a network’s decisions.

Backpropagation has made it possible to train neural networks with multiple layers, known as deep neural networks. These architectures have demonstrated remarkable performance in various domains, including image recognition, natural language processing, and even autonomous driving. On some specific tasks, systems trained this way now match or exceed human performance.

Common Challenges and Pitfalls in Backpropagation

In the journey of understanding and implementing backpropagation, there are several challenges and pitfalls that one may encounter. Let’s explore some of the common obstacles faced in the world of backpropagation and how to overcome them.

Challenge #1: Vanishing and Exploding Gradients

One of the primary difficulties in training neural networks using backpropagation is the issue of vanishing and exploding gradients. The gradients can become extremely small or exceptionally large as they are backpropagated through the layers, making the learning process unstable.
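A quick back-of-the-envelope calculation shows why gradients can vanish. The sketch below assumes sigmoid activations and, as a deliberate simplification, ignores the weights entirely: it only multiplies together the largest possible sigmoid derivative, once per layer.

```python
import numpy as np

def sigmoid_prime(z):
    """Derivative of the logistic sigmoid."""
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

# The derivative peaks at z = 0, where it equals 0.25
max_slope = sigmoid_prime(0.0)

# Best-case shrink factor after passing back through `depth` sigmoid layers
for depth in (1, 5, 10, 20):
    print(depth, max_slope ** depth)
```

Even in this best case, the factor shrinks geometrically with depth. In a real network the weights also enter the product, which is why large weights can push the gradients the other way and make them explode.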

To address this challenge, various techniques have been developed, such as weight initialization strategies, gradient clipping, and using activation functions that alleviate the vanishing gradients problem, such as the rectified linear unit (ReLU).
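As one example of these techniques, gradient clipping can be sketched as follows. This version rescales all gradients together so that their combined L2 norm stays below a threshold. The function name `clip_by_global_norm` and the threshold value are assumptions chosen for illustration.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    # Scale is 1.0 (no change) when the gradients are already small enough
    scale = min(1.0, max_norm / (total_norm + 1e-12))
    return [g * scale for g in grads], total_norm

# Made-up gradients whose combined norm is sqrt(9 + 16 + 144) = 13
grads = [np.array([3.0, 4.0]), np.array([[12.0]])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = np.sqrt(sum(np.sum(g ** 2) for g in clipped))
```

Because every array is multiplied by the same factor, the direction of the overall update is preserved and only its length is capped.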

Challenge #2: Overfitting and Underfitting

Overfitting and underfitting are common problems in machine learning, including when training neural networks with backpropagation. Overfitting occurs when the network learns the training data too well, resulting in poor generalization to unseen data. Underfitting, on the other hand, happens when the network fails to capture the underlying patterns in the data.

To combat overfitting, techniques like regularization, dropout, and early stopping can be employed. These methods prevent the network from memorizing the training data and help it generalize to new examples. To address underfitting, increasing the size or complexity of the network may be necessary.
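Early stopping is the simplest of these to show in code. The sketch below assumes you already record a validation loss after each epoch. The helper `early_stopping_epoch` and the `patience` parameter are hypothetical names, and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    # Never triggered: training ran through every recorded epoch
    return len(val_losses) - 1

# Made-up validation losses: improvement stalls after epoch 3
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.60]
stop_at = early_stopping_epoch(val_losses, patience=3)  # 6
```

In practice you would also keep a copy of the weights from the best epoch (epoch 3 here) and restore them once training stops.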

Challenge #3: Computational Complexity and Training Time

Training neural networks using backpropagation can be computationally intensive and time-consuming, especially when dealing with large datasets and complex architectures. The amount of data, the number of layers, and the network’s size all contribute to the computational complexity and training time.

To mitigate these challenges, techniques like mini-batch gradient descent and parallel computing can significantly speed up training. Additionally, hardware acceleration using GPUs or specialized chips like TPUs can dramatically reduce training time.
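Mini-batch gradient descent starts with splitting the data into small shuffled chunks, so that each weight update uses only a handful of examples instead of the whole dataset. Here is a minimal sketch of that splitting step. The helper name `iterate_minibatches` and the toy data are assumptions.

```python
import numpy as np

def iterate_minibatches(X, y, batch_size, rng):
    """Yield shuffled (X_batch, y_batch) pairs that cover the dataset once."""
    indices = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        batch = indices[start:start + batch_size]
        yield X[batch], y[batch]

rng = np.random.default_rng(0)
X = np.arange(20, dtype=float).reshape(10, 2)   # 10 made-up examples
y = np.arange(10)                               # one label per example

# One pass over the data (one epoch) in batches of 4, 4, and 2 examples
batches = list(iterate_minibatches(X, y, batch_size=4, rng=rng))
```

Each batch would then go through one forward pass, one backward pass, and one weight update. The last batch is smaller when the dataset size is not a multiple of the batch size.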

Backpropagation: Empowering Neural Networks to Shape Our Future

Backpropagation has emerged as a foundational concept in the field of artificial intelligence and machine learning, driving advancements that have transformed our world. By understanding the mechanics of backpropagation, overcoming its challenges, and harnessing its power, we have unlocked the potential of neural networks to tackle complex problems and pave the way for a future powered by intelligent machines.

Applications of Backpropagation: From Healthcare to Finance

Backpropagation has found applications across various domains, revolutionizing industries and enabling unprecedented advancements. Here are some notable areas where backpropagation has made a significant impact:

Healthcare

Backpropagation has played a crucial role in medical imaging, disease diagnosis, and drug discovery. Neural networks trained using backpropagation have demonstrated exceptional performance in tasks like identifying cancerous cells from medical images, predicting the progression of diseases, and designing new drugs.

Finance

In the world of finance, backpropagation is used in stock market prediction, fraud detection, and algorithmic trading. Neural networks trained with backpropagation can analyze complex financial data and identify patterns that inform forecasts about stock prices, market trends, and trading opportunities, though such forecasts remain uncertain.

Natural Language Processing

Backpropagation has powered significant advancements in natural language processing, enabling machines to understand and generate human-like language. From language translation to sentiment analysis and chatbots, neural networks trained with backpropagation have become integral to processing vast amounts of text data and extracting meaningful insights.

Tips for Understanding and Implementing Backpropagation

Here are some tips to help you grasp and utilize the power of backpropagation effectively:

1. Start with the basics: Familiarize yourself with the fundamentals of neural networks and their architecture before diving into backpropagation.

2. Break it down: Understand the step-by-step mechanics of forward and backward propagation to get a clear picture of the algorithm.

3. Experiment with small networks: Start with simple neural networks to practice implementing backpropagation and observe its effects on learning.

4. Visualize the process: Use visual aids, such as diagrams or animations, to visualize the flow of data and gradients during backpropagation.

5. Make use of resources: Take advantage of online tutorials, courses, and forums that offer practical exercises and examples to hone your skills.

6. Stay up-to-date: Follow the latest research and advancements in the field of backpropagation to stay informed about new techniques and best practices.

By following these tips and continuously learning and experimenting, you’ll be well on your way to mastering backpropagation and unlocking the immense potential of neural networks.

The Future of Backpropagation: Advancements on the Horizon

As technology continues to evolve, so does the field of backpropagation. Researchers and engineers are constantly exploring new techniques and methodologies to enhance the performance and efficiency of backpropagation.

One direction of advancement is the exploration of alternative optimization algorithms, such as gradient-free methods or biologically inspired algorithms. These approaches aim to overcome the limitations and challenges of traditional backpropagation, opening up new possibilities for training neural networks.

Another area of focus is the development of explainability techniques for neural networks trained with backpropagation. Understanding and interpreting the decisions made by these complex models is crucial for building trust and transparency. Researchers are actively exploring methods to shed light on the intricate decision-making process of neural networks.

In addition, the use of specialized hardware, such as neuromorphic chips, holds promise in accelerating the training and inference processes of neural networks trained with backpropagation. These chips are designed to mimic the structure and functionality of the human brain, offering potential improvements in efficiency and performance.

As the field of backpropagation continues to advance, we can expect even more remarkable breakthroughs that will shape the future of AI and machine learning. By staying curious and engaged in this ever-evolving field, we can make significant contributions and unlock the true potential of backpropagation.

Wrap-Up: Backpropagation Unveiled: From Theory to Practice

Backpropagation is a cornerstone of modern artificial intelligence and machine learning. It empowers neural networks to learn from data, make accurate predictions, and solve complex problems. By understanding its mechanics, overcoming challenges, and applying best practices, we can harness the power of backpropagation to shape the future of technology and unlock new possibilities. So, embrace the algorithm behind the magic, and let the world of backpropagation unfold before you.

Key Takeaways: Explaining the Concept of Backpropagation

- Backpropagation is a method used to train neural networks.

- It involves calculating the error between predicted and actual outputs.

- Neural networks adjust their weights based on this error.

- Backpropagation helps neural networks learn and improve over time.

- It’s like a feedback loop that helps the network get better at making correct predictions.

Frequently Asked Questions

Welcome to our FAQ section on the concept of backpropagation. Below, you’ll find answers to common questions related to this neural network training algorithm. Let’s dive in!

1. How does backpropagation work?

Backpropagation is a key component of training artificial neural networks. It involves the calculation of gradients that help adjust the weights and biases of the network. The process consists of two main steps: forward propagation and backward propagation.

During forward propagation, the input data is passed through the network, and each neuron performs a mathematical operation to produce an output. The output is then compared with the desired output to calculate an error value. In backward propagation, this error value is used to adjust the weights and biases of each neuron, aiming to minimize the overall error. This process is repeated for multiple iterations until the network learns to make accurate predictions.
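Putting the two steps together, a full training loop might look like the sketch below. Everything specific here is an assumption for illustration: the XOR toy dataset, a hidden layer of four sigmoid units, a squared-error loss, plain gradient descent, and an untuned learning rate.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# XOR: a tiny dataset that a network without a hidden layer cannot fit
X = np.array([[0., 0.], [0., 1.], [1., 0.], [1., 1.]])
y = np.array([[0.], [1.], [1.], [0.]])

rng = np.random.default_rng(42)
W1, b1 = rng.normal(size=(2, 4)), np.zeros(4)
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)
learning_rate = 0.5   # assumed value, not tuned

losses = []
for step in range(2000):
    # Forward propagation
    a1 = sigmoid(X @ W1 + b1)
    y_hat = sigmoid(a1 @ W2 + b2)
    losses.append(0.5 * np.mean((y_hat - y) ** 2))

    # Backward propagation (chain rule, output layer first)
    delta2 = (y_hat - y) * y_hat * (1 - y_hat) / len(X)
    delta1 = (delta2 @ W2.T) * a1 * (1 - a1)

    # Gradient descent update: step each parameter against its gradient
    W2 -= learning_rate * (a1.T @ delta2)
    b2 -= learning_rate * delta2.sum(axis=0)
    W1 -= learning_rate * (X.T @ delta1)
    b1 -= learning_rate * delta1.sum(axis=0)
```

Plotting `losses` should show the error going down over the iterations. How close it gets to zero depends on the initialization and the learning rate, so treat this as a starting point rather than a tuned recipe.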

2. Why is backpropagation important in neural networks?

Backpropagation is crucial because it enables neural networks to learn from data and improve their performance over time. By adjusting the weights and biases of the network based on the calculated gradients, backpropagation allows the network to minimize errors and make more accurate predictions.

Without backpropagation, neural networks would not be able to adapt and refine their internal parameters, making them ineffective in complex tasks such as image recognition, natural language processing, or playing games. Backpropagation provides the mechanism for fine-tuning the network’s parameters, enabling it to learn patterns and make more accurate predictions with each iteration.
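The adjustment itself is usually a gradient descent step. Written out, with symbols that are assumed here rather than taken from the article ($L$ for the loss, $w$ for a weight, $b$ for a bias, and $\eta$ for the learning rate), it looks like this:

```latex
w \leftarrow w - \eta \, \frac{\partial L}{\partial w},
\qquad
b \leftarrow b - \eta \, \frac{\partial L}{\partial b}
```

Backpropagation supplies the two partial derivatives, and the update rule decides how far to move along them. A larger $\eta$ means bigger steps, which can speed up learning but can also overshoot.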

3. Can backpropagation be used with any neural network architecture?

Yes, backpropagation can be used with a wide range of neural network architectures. It is a general-purpose algorithm that applies to any network built from differentiable operations, including feedforward neural networks, where information flows in only one direction from the input to the output.

Some architectures need an adapted form of it rather than a different algorithm. Recurrent neural networks (RNNs) are usually trained with backpropagation through time (BPTT), which is backpropagation applied to the network unrolled across its time steps. In reinforcement learning, policy gradient methods change how the training signal is defined, but when the policy is a neural network, its gradients are still typically computed with backpropagation.

4. What are the challenges of backpropagation?

Backpropagation is a powerful algorithm, but it does come with some challenges. One challenge is that the gradient-based training it supports can get stuck in local optima, or slow down on plateaus and at saddle points, so the network converges to a suboptimal solution instead of finding the global minimum of the error function.

Another challenge is the issue of vanishing or exploding gradients. Deep neural networks with many layers can suffer from gradients that become either too small or too large, leading to learning difficulties. Techniques like careful weight initialization, activation functions such as ReLU, and gradient clipping are often employed to mitigate these issues.

5. Are there any alternatives to backpropagation?

Yes, there are alternative training algorithms to backpropagation. One well-known family is genetic algorithms, where the network’s parameters are optimized using principles inspired by evolutionary biology, such as selection, mutation, and crossover. These methods do not need gradients at all.

Other approaches often mentioned alongside these include unsupervised learning algorithms like clustering and self-organizing maps, and reinforcement learning methods that use a trial-and-error approach to learning. These are different learning settings rather than drop-in replacements, and many modern unsupervised and reinforcement learning systems still rely on backpropagation to compute gradients. Each approach has its own strengths and weaknesses, and the choice of algorithm depends on the specific problem and available data.
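To give a feel for gradient-free training, here is a deliberately stripped-down sketch. It is not a full genetic algorithm: there is no population and no crossover, only mutation and selection on a single candidate (sometimes called random hill climbing). The model is a made-up linear one rather than a neural network, to keep the example short.

```python
import numpy as np

def loss(w, X, y):
    """Mean squared error of a linear model that predicts X @ w."""
    return np.mean((X @ w - y) ** 2)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_w = np.array([1.0, -2.0, 0.5])   # made-up target weights
y = X @ true_w

w = np.zeros(3)
initial = best = loss(w, X, y)
for generation in range(500):
    # Mutation: randomly perturb the current weights
    candidate = w + rng.normal(scale=0.1, size=3)
    candidate_loss = loss(candidate, X, y)
    # Selection: keep the candidate only if it lowers the loss
    if candidate_loss < best:
        w, best = candidate, candidate_loss
```

No derivative is computed anywhere, which is the appeal of this family of methods. The trade-off is that random search tends to scale poorly as the number of parameters grows, which is one reason backpropagation remains the default for large networks.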

Summary

In this article, we learned about backpropagation, which is how neural networks learn from their mistakes. Backpropagation works by adjusting the weights of connections between neurons based on the error they produce. This allows the network to get better at making predictions over time.

Backpropagation involves two main steps: forward propagation and backward propagation. In forward propagation, the neural network makes predictions and calculates the error. In backward propagation, the network uses the error to update the weights and improve its predictions. This process is repeated many times until the network becomes accurate in its predictions.

By understanding backpropagation, we can see how neural networks learn and improve their performance. It’s like learning from our own mistakes to get better at something. Backpropagation is an essential concept in artificial intelligence and machine learning, helping us create intelligent systems that can make accurate predictions and solve complex problems.