Deep learning powers much of modern artificial intelligence, but training deep networks comes with its own difficulties. Today, we’re going to explore one of the best known: the vanishing gradient problem.

Now, you might be wondering, what on earth is a vanishing gradient problem? Well, let me break it down for you in simple terms. Imagine you’re training a neural network to recognize objects in images, like a cat or a dog. The network learns from example images, adjusting its internal parameters to get better and better at the task. But here’s the catch: sometimes, during the learning process, the network gets stuck.

You see, when training a deep neural network with many layers, the error signal is propagated backward through each layer so that the weights can be adjusted. The issue arises when the gradients, which indicate how much the weights should change, become extremely small as they propagate backward toward the earliest layers. This vanishing gradient problem makes it difficult for the network to learn effectively, and it often leads to slower or even unsuccessful training.

So, why does the vanishing gradient problem occur, and what can we do about it? Let’s dive in!

Contents

- Understanding the Vanishing Gradient Problem in Deep Learning

- The Basics of Deep Learning

- The Significance of Residual Networks in Deep Learning

- Challenges with Residual Networks and Potential Solutions

- Conclusion

- Key Takeaways: Explaining the Vanishing Gradient Problem in Deep Learning

- Frequently Asked Questions

- Q: Why does the vanishing gradient problem happen in deep learning?

- Q: How does the vanishing gradient problem affect deep learning performance?

- Q: Are there any solutions to address the vanishing gradient problem?

- Q: Does the vanishing gradient problem affect all deep learning architectures equally?

- Q: How does the vanishing gradient problem relate to the exploding gradient problem?

- Summary

Understanding the Vanishing Gradient Problem in Deep Learning

Deep learning has revolutionized various fields, from natural language processing to computer vision. However, it faces certain challenges that can hinder its performance and convergence. One such challenge is the vanishing gradient problem. In this article, we will delve into the concept of the vanishing gradient problem, its causes, and its impact on deep learning models. We will also explore some techniques used to mitigate this problem and improve model training and performance.

The Basics of Deep Learning

Before we dive into the vanishing gradient problem, let’s establish a foundational understanding of deep learning. Deep learning is a subset of machine learning that relies on artificial neural networks inspired by the human brain. These networks consist of interconnected layers of nodes, or neurons, that process and transform data as it passes through the network. Each node applies an activation function to the input it receives and passes the transformed output to the next layer. This hierarchical structure allows deep learning models to learn complex patterns and representations from large amounts of data.

Deep neural networks are typically trained with stochastic gradient descent (SGD) or one of its variants. During training, the model parameters are updated iteratively to minimize a predefined loss function. Each update relies on gradients, computed by backpropagation, which indicate the direction and magnitude of the change needed to reduce the loss. If those gradients are inaccurate or too small to be useful, training slows down or stalls.
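To make the update rule concrete, here is a minimal sketch of plain (non-stochastic) gradient descent on a toy one-parameter loss. The quadratic loss, the learning rate, and the function names are illustrative assumptions, not part of any particular library.

```python
def loss(w):
    # Toy quadratic loss with its minimum at w = 3.0 (an illustrative choice).
    return (w - 3.0) ** 2


def grad(w):
    # Analytic derivative of the loss with respect to w.
    return 2.0 * (w - 3.0)


def gradient_descent(w0, learning_rate=0.1, steps=50):
    # Repeatedly step against the gradient; a smaller gradient means a smaller step.
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


w_final = gradient_descent(w0=0.0)
print(w_final, loss(w_final))
```

Because the step size is proportional to the gradient, a parameter whose gradient is close to zero barely moves. That is the mechanism behind the problem discussed in this article.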

The Vanishing Gradient Problem: Causes and Effects

The vanishing gradient problem refers to the phenomenon where the gradients used to update the parameters of earlier layers in a deep neural network become extremely small during the backward propagation process. When this occurs, the model struggles to learn and adjust the parameters effectively, leading to slow convergence and reduced performance.

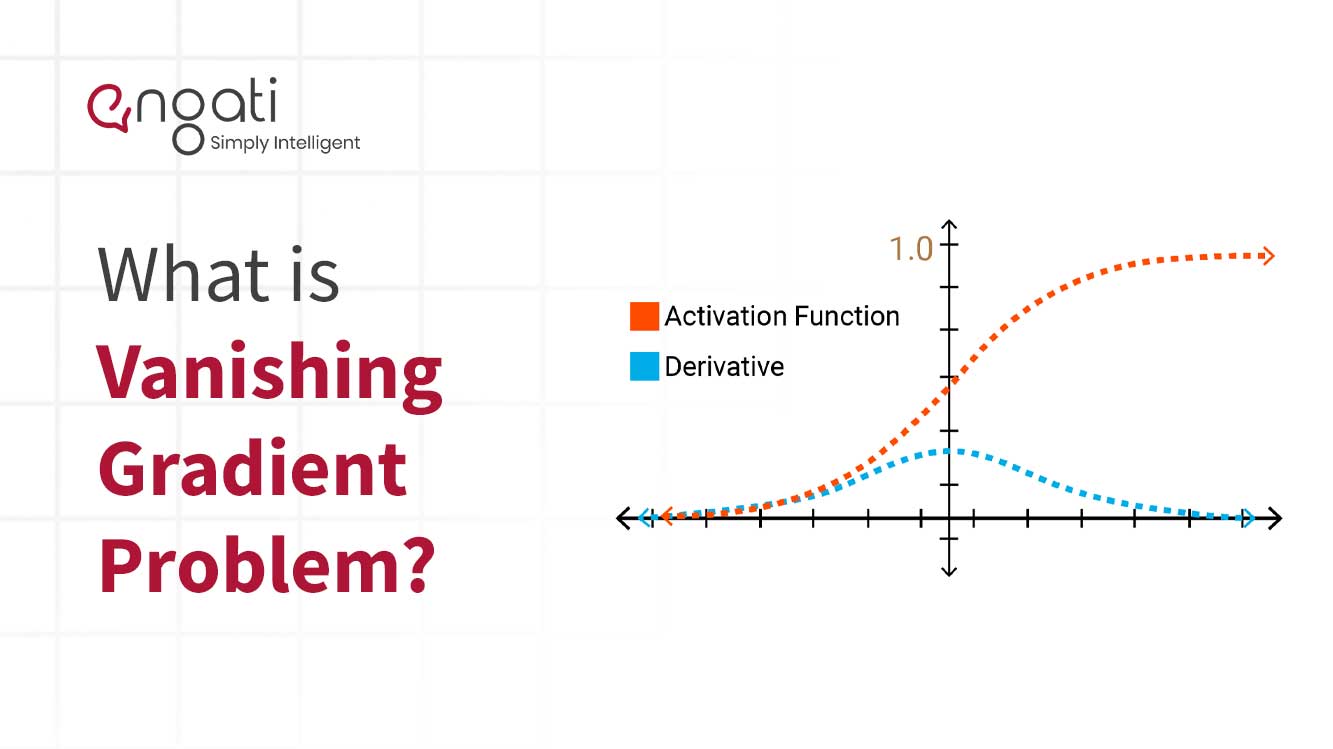

One of the main causes of the vanishing gradient problem is the choice of activation functions. Activation functions introduce non-linearity into the neural network, allowing it to learn complex mappings between inputs and outputs. However, certain activation functions, such as the sigmoid and hyperbolic tangent functions, saturate: for inputs of large magnitude, their derivative is close to zero. The sigmoid’s derivative never exceeds 0.25, even at its steepest point. Because backpropagation multiplies these derivatives together layer by layer, the gradient can shrink exponentially with depth, hence the term “vanishing” gradients.
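The shrinkage is easy to see numerically. The sketch below multiplies sigmoid derivatives across ten layers; it deliberately ignores the weight matrices, which also scale the gradient in a real network, and the helper names are made up for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)), which peaks at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def gradient_scale(pre_activations):
    # Multiply the local derivatives met on the way back through the layers.
    scale = 1.0
    for z in pre_activations:
        scale *= sigmoid_derivative(z)
    return scale


best_case = gradient_scale([0.0] * 10)  # every unit at its steepest point
saturated = gradient_scale([4.0] * 10)  # every unit pushed toward saturation
print(best_case, saturated)
```

Even in the best case the factor after ten layers is 0.25 to the tenth power, a bit under one millionth. With saturated units it is many orders of magnitude smaller.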

The vanishing gradient problem can greatly impact deep learning models. In the context of recurrent neural networks (RNNs), which process sequential data, vanishing gradients can prevent the model from effectively capturing long-term dependencies. In deep feedforward neural networks, the problem can limit the learning capacity of earlier layers, causing information loss and hindering the training process.
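For the recurrent case, a one-unit RNN is enough to show why long-term dependencies are hard to learn. The sketch assumes the hypothetical recurrence h_t = tanh(w * h_{t-1} + x_t) with a scalar weight w; real RNNs use weight matrices, but the product structure is the same.

```python
import math


def rnn_gradient_wrt_initial_state(w, inputs, h0=0.0):
    # Scalar RNN: h_t = tanh(w * h_{t-1} + x_t).
    # By the chain rule, d h_T / d h_0 is the product over t of w * (1 - h_t ** 2).
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)
    return grad


short_seq = rnn_gradient_wrt_initial_state(w=0.5, inputs=[0.1] * 5)
long_seq = rnn_gradient_wrt_initial_state(w=0.5, inputs=[0.1] * 50)
print(short_seq, long_seq)
```

With w = 0.5, every time step multiplies the gradient by at most 0.5, so the influence of the initial state on the final state all but disappears over 50 steps.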

Techniques to Mitigate the Vanishing Gradient Problem

Researchers and practitioners have devised several techniques to mitigate the vanishing gradient problem and improve the training of deep learning models. Here, we will discuss some of the commonly used techniques:

- Weight Initialization: Proper initialization of the network weights can help alleviate the vanishing gradient problem. Techniques like Xavier initialization and He initialization scale the initial weights according to the number of inputs (and, for Xavier, outputs) of each layer, so that activations are less likely to start in saturated regions and gradients stay within a reasonable range as they pass through the layers.

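- Activation Functions: Choosing activation functions wisely can have a significant impact on the occurrence of the vanishing gradient problem. Rectified Linear Units (ReLUs) and variants like leaky ReLU and parametric ReLU are popular choices because their derivative is 1 for positive inputs, so they do not saturate on that side and gradients flow more easily. A plain ReLU has zero gradient for negative inputs, which the leaky and parametric variants address with a small non-zero slope.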

- Gradient Clipping: Gradient clipping caps the magnitude of gradients during backpropagation. It targets the opposite failure, exploding gradients, rather than vanishing ones: constraining gradients to a predefined threshold keeps updates stable, but it does not enlarge gradients that are already too small.
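As a rough illustration of the last item, here is a sketch of clipping by L2 norm, one common form of gradient clipping. The function name and the threshold are assumptions for this example; deep learning frameworks ship their own clipping utilities.

```python
import math


def clip_by_norm(gradients, max_norm):
    # Rescale the whole gradient vector if its L2 norm exceeds max_norm.
    norm = math.sqrt(sum(g * g for g in gradients))
    if norm <= max_norm or norm == 0.0:
        return list(gradients)
    scale = max_norm / norm
    return [g * scale for g in gradients]


large = clip_by_norm([30.0, 40.0], max_norm=5.0)  # norm 50 is scaled down to 5
tiny = clip_by_norm([1e-8, 2e-8], max_norm=5.0)   # already small: left unchanged
print(large, tiny)
```

Note that the tiny gradient passes through untouched. Clipping bounds gradients from above, so it guards against exploding gradients and does nothing to revive vanished ones.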

Other techniques to mitigate the vanishing gradient problem include using skip connections and adopting gated recurrent architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). Adaptive optimization algorithms like AdaGrad and Adam rescale updates per parameter, which can partly compensate for small gradient magnitudes.

The Significance of Residual Networks in Deep Learning

Residual Networks, also known as ResNets, have emerged as a breakthrough architecture to address the vanishing gradient problem and enable the training of very deep neural networks. Introduced by researchers at Microsoft Research in 2015, ResNets utilize skip connections that enable the free flow of gradients through the network, bypassing several layers. These skip connections allow the model to learn residual mappings, making it easier to optimize deep networks by avoiding the vanishing gradient problem.
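A one-dimensional sketch shows why the skip connection helps. If a plain block computes y = f(x), the backward signal is scaled by f'(x); if a residual block computes y = x + f(x), it is scaled by 1 + f'(x). The per-block derivative of 0.01 below is a hypothetical value chosen to make the contrast obvious.

```python
def plain_chain_gradient(local_derivatives):
    # Plain stack y = f(x): the backward signal is the product of f'(x) terms.
    grad = 1.0
    for d in local_derivatives:
        grad *= d
    return grad


def residual_chain_gradient(local_derivatives):
    # Residual stack y = x + f(x): each block contributes (1 + f'(x)),
    # so the identity path carries gradient even when f'(x) is tiny.
    grad = 1.0
    for d in local_derivatives:
        grad *= 1.0 + d
    return grad


derivs = [0.01] * 20  # hypothetical small per-block derivatives
plain = plain_chain_gradient(derivs)
residual = residual_chain_gradient(derivs)
print(plain, residual)
```

Over 20 blocks the plain product collapses to a number vanishingly close to zero, while the residual product stays near 1.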

Challenges with Residual Networks and Potential Solutions

While ResNets have overcome the vanishing gradient problem to a large extent, very deep networks still face certain challenges. One notable challenge is the degradation problem, where deeper networks perform worse than shallower ones due to increased difficulty in optimization. Residual connections were introduced largely to counter this effect, though they do not remove every optimization difficulty at extreme depth. Researchers have proposed several solutions to tackle this challenge.

Conclusion

In conclusion, the vanishing gradient problem poses a significant obstacle in training deep neural networks. It hampers the flow of gradients and reduces the learning capacity of earlier layers, leading to slow convergence and degraded performance. However, through careful weight initialization, non-saturating activation functions, gated recurrent units, and the skip connections of Residual Networks, researchers have been able to mitigate it, while gradient clipping handles the related problem of exploding gradients. By understanding the causes and effects of this problem and applying the appropriate techniques, we can further improve the training and performance of deep learning models.

Key Takeaways: Explaining the Vanishing Gradient Problem in Deep Learning

- The vanishing gradient problem occurs in deep learning when gradients become extremely small during the training process.

- This problem hinders the ability of neural networks to learn and make accurate predictions.

- As gradients become small, the updates to the weights in the network become negligible.

- This issue is more prevalent in deep architectures with many layers and can lead to poor convergence or slow learning.

- To mitigate the vanishing gradient problem, use careful weight initialization, non-saturating activation functions such as ReLU, and skip connections; gradient clipping addresses the related exploding gradient problem.

Frequently Asked Questions

The vanishing gradient problem is a common issue in deep learning. It occurs when the gradient (rate of change) of the loss function becomes extremely small as it propagates through layers of a neural network. This can negatively impact the network’s ability to learn and make accurate predictions.

Q: Why does the vanishing gradient problem happen in deep learning?

The vanishing gradient problem occurs due to the specific activation functions and architectures used in deep learning models. When these functions have small derivatives over their input range, the gradients can diminish exponentially as they backpropagate through layers, leading to vanishing gradients. This happens particularly with sigmoid and hyperbolic tangent activation functions.

For example, if the derivative of the activation function is close to zero, the gradient will become smaller and smaller with each layer, making it difficult for the network to update the weights effectively and learn meaningful representations. As a result, the earlier layers of the network may not receive sufficient updates, limiting their ability to contribute meaningfully to the learning process.
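The layer-by-layer shrinkage can be written out with the chain rule. The notation here is assumed for illustration only: layer l computes z^(l) = W^(l) a^(l-1) + b^(l) and a^(l) = σ(z^(l)), there are L layers, and the loss is written as a calligraphic L.

```latex
\frac{\partial \mathcal{L}}{\partial a^{(l-1)}}
  = \left(W^{(l)}\right)^{\top}
    \operatorname{diag}\!\left(\sigma'\!\left(z^{(l)}\right)\right)
    \frac{\partial \mathcal{L}}{\partial a^{(l)}}
\qquad\Longrightarrow\qquad
\left\lVert \frac{\partial \mathcal{L}}{\partial a^{(1)}} \right\rVert
  \le \left( \prod_{l=2}^{L} \left\lVert W^{(l)} \right\rVert \,
      \max_{i} \left| \sigma'\!\left(z^{(l)}_{i}\right) \right| \right)
      \left\lVert \frac{\partial \mathcal{L}}{\partial a^{(L)}} \right\rVert
```

For the sigmoid, σ' is at most 1/4, so unless the weight norms are large enough to compensate, each additional layer shrinks the bound by a constant factor and the gradient reaching the first layers decays exponentially with depth.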

Q: How does the vanishing gradient problem affect deep learning performance?

The vanishing gradient problem can have a detrimental effect on the performance of deep learning models. When the gradients become very small, the weights of the earlier layers in the network are updated much slower compared to the later layers. This leads to slower convergence and makes it difficult for the model to learn complex patterns and relationships within the data.

In extreme cases, the gradients can become so small that the model effectively stops learning, resulting in a plateau in performance. This can prevent the model from reaching its full potential accuracy, and in some cases, it may not be able to learn at all. Because the later layers keep updating while the earlier ones barely change, the network may also settle on a poor solution built on nearly random early features.

Q: Are there any solutions to address the vanishing gradient problem?

There are several techniques that can be used to address the vanishing gradient problem in deep learning:

1. Initialization methods: Using appropriate weight initialization techniques, such as Xavier or He initialization, can help alleviate the vanishing gradient problem. These methods scale the initial weights to the size of each layer so that the variance of activations and gradients stays roughly constant from layer to layer, instead of shrinking or growing.

2. Non-saturating activation functions: Replacing the sigmoid or hyperbolic tangent activation functions with alternatives like ReLU (Rectified Linear Unit) can mitigate the vanishing gradient problem. ReLU has a derivative of exactly 1 for positive inputs (and 0 for negative inputs), so gradients passing through active units are not scaled down.
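The initialization schemes in the first item come down to choosing a standard deviation from the layer size. The sketch below uses the commonly cited normal-distribution variants, Xavier with variance 2 / (fan_in + fan_out) and He with variance 2 / fan_in; the function names and the layer sizes are assumptions made for this example.

```python
import math
import random


def xavier_std(fan_in, fan_out):
    # Glorot/Xavier normal initialization: variance 2 / (fan_in + fan_out).
    return math.sqrt(2.0 / (fan_in + fan_out))


def he_std(fan_in):
    # He normal initialization: variance 2 / fan_in, commonly paired with ReLU.
    return math.sqrt(2.0 / fan_in)


def init_weights(fan_in, fan_out, std, seed=0):
    # Draw a fan_out x fan_in weight matrix from a zero-mean Gaussian.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


weights = init_weights(256, 128, he_std(256))
print(xavier_std(256, 128), he_std(256), len(weights), len(weights[0]))
```

Wider layers get smaller initial weights, which keeps the sum over many inputs from pushing units into saturation at the start of training.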

Q: Does the vanishing gradient problem affect all deep learning architectures equally?

The vanishing gradient problem tends to be more pronounced wherever gradients must travel a long path: deep feedforward networks with many layers, and recurrent neural networks (RNNs), which are effectively deep along the time dimension when unrolled over long sequences. Convolutional neural networks (CNNs) are not immune. Very deep CNNs can suffer from it too, which is one reason architectures with skip connections such as ResNets were developed.

However, it’s important to note that even though some architectures are less prone to the vanishing gradient problem, they may still face challenges related to exploding gradients, where the gradients become excessively large and cause instability in the training process.

Q: How does the vanishing gradient problem relate to the exploding gradient problem?

The vanishing gradient problem and the exploding gradient problem are two sides of the same coin. While the vanishing gradient problem refers to gradients becoming extremely small, the exploding gradient problem occurs when gradients become extremely large during the backpropagation process in deep learning models.

The exploding gradient problem can cause the weights to update by a large magnitude, leading to instability and preventing the model from converging to a good solution. Both the vanishing and exploding gradient problems can hinder the learning process and impact the performance of deep learning models. Gradient clipping and weight regularization help against exploding gradients, while careful weight initialization and architectural choices such as skip connections or gating help with both issues.

Summary

The vanishing gradient problem happens when the gradient, which tells the model how much to adjust its weights, becomes extremely small by the time it reaches the early layers of a deep network. Those layers then barely update, which can lead to slower training, poor performance, and even the model not learning at all.

To overcome this problem, researchers have developed different techniques. One solution is to use activation functions like ReLU, which help prevent the gradient from vanishing. Other approaches involve initializing the weights of the model with schemes such as Xavier or He initialization, adding skip connections, or using gated recurrent layers like LSTM, which help the model capture long-term dependencies and improve its training.

In conclusion, the vanishing gradient problem is a challenge in deep learning that affects how models learn from their mistakes. By understanding and applying techniques to mitigate this issue, researchers can build more effective and accurate deep learning models.