Welcome! Have you ever wondered how to interpret feature importance scores? You’re in the right place. In this article, we’ll look at what these scores measure, how common methods compute them, and how to read them without over-interpreting them. Let’s dive right in!

Picture this: you’ve trained a machine learning model, and it’s performing pretty well. But now you want to know which features are contributing the most to its predictions. That’s where feature importance scores come into play. These scores help us understand the relative importance of different features in influencing the model’s decisions. Pretty cool, huh?

So, how can we interpret these feature importance scores? Don’t worry, it’s not as complicated as it sounds! We’ll walk you through various methods and techniques to make sense of these scores, whether you’re dealing with decision trees, random forests, or other machine learning algorithms. By the end of this article, you’ll have a solid understanding of how to interpret those intriguing feature importance scores. Let’s get started!

Feature importance scores reveal the relative impact of variables on a model’s predictions. To interpret these scores, follow these steps:

- Identify the feature importance scores for each variable in your model.

- Rank the variables by their scores, from highest to lowest.

- Focus on the top-ranked features, as they have the greatest influence.

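- Check whether your scores carry a direction at all. Impurity-based and permutation scores only measure how much a feature matters, not whether it pushes predictions up or down; signed quantities such as linear-model coefficients do indicate direction.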

- Consider the context of your specific model and domain knowledge to make informed decisions based on the feature importance scores.
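The ranking steps above can be sketched in a few lines of Python. The feature names and scores here are made-up values for illustration only; in practice they would come from your own model.

```python
# Hypothetical importance scores (illustrative values, not from a real model).
scores = {"age": 0.12, "income": 0.45, "tenure": 0.30, "region": 0.08, "clicks": 0.05}

# Rank features from most to least important.
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Track the cumulative share of total importance to see where the ranking tails off.
total = sum(scores.values())
cumulative = 0.0
for name, score in ranked:
    cumulative += score
    print(f"{name:<8} {score:.2f}  cumulative share: {cumulative / total:.0%}")
```

The cumulative share is one simple way to decide how many top-ranked features deserve a closer look.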

Contents

- How can I interpret feature importance scores?

- Different Methods for Interpreting Feature Importance Scores

- Benefits of Interpreting Feature Importance Scores

- Tips for Interpreting Feature Importance Scores

- Conclusion:

- Key Takeaways: How can I interpret feature importance scores?

- Frequently Asked Questions

- 1. What are feature importance scores and why are they important?

- 2. How are feature importance scores calculated?

- 3. How can I interpret feature importance scores in a random forest model?

- 4. Can feature importance scores be used to determine causation?

- 5. How can I visualize feature importance scores?

- How to find Feature Importance in your model

- Summary

How can I interpret feature importance scores?

Feature importance scores help you understand which factors contribute most to a particular outcome or prediction. Whether you’re analyzing data for a machine learning model or trying to uncover the key drivers behind a business metric, interpreting these scores can provide valuable insights. In this article, we will explore different methods and techniques for making sense of these scores, from understanding the underlying algorithms to using visualization techniques, so you can make informed decisions based on them.

Different Methods for Interpreting Feature Importance Scores

When it comes to interpreting feature importance scores, there are several methods and techniques you can use. Each method offers its own unique way of understanding and analyzing the importance of features. Let’s take a closer look at some of these methods:

1. Permutation Importance

Permutation importance is a popular method that measures the decrease in a model’s performance when the values of a specific feature are randomly shuffled. Shuffling breaks the link between that feature and the target, so the size of the drop shows how much the model relies on the feature. By comparing performance before and after shuffling, you can determine the importance of each feature.

To calculate permutation importance, you need to follow these steps:

- Fit your model on the training data and record the baseline performance metric (e.g., accuracy, AUC), ideally on a held-out validation set.

- Randomly shuffle the values of the feature you want to evaluate, leaving the other features unchanged.

- Use the shuffled dataset to make predictions and calculate the performance metric.

- Calculate the decrease in performance compared to the baseline metric.

- Repeat the shuffle, predict, and compare steps multiple times and average the results to get a robust measure of feature importance.

By comparing the decrease in performance for different features, you can determine which features have the greatest impact on the model’s predictions.
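Here is a minimal sketch of that procedure using only NumPy. The synthetic data, the least-squares model, and the choice of R² as the metric are all assumptions made for illustration; the same loop works with any fitted model and any metric.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): y depends strongly on
# feature 0, weakly on feature 1, and not at all on feature 2.
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(scale=0.1, size=500)
X_train, X_test = X[:400], X[400:]
y_train, y_test = y[:400], y[400:]

# Fit a simple model: ordinary least squares without an intercept
# (the synthetic data is centered around zero).
coef, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

def r2(X_eval, y_eval):
    """Coefficient of determination of the fitted model on the given data."""
    residual = y_eval - X_eval @ coef
    return 1.0 - np.sum(residual**2) / np.sum((y_eval - y_eval.mean()) ** 2)

# Baseline score on held-out data.
baseline = r2(X_test, y_test)

def permutation_importance_manual(n_repeats=10):
    importances = np.zeros(X_test.shape[1])
    for j in range(X_test.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_shuffled = X_test.copy()
            # Shuffle one column only, leaving the others untouched.
            X_shuffled[:, j] = rng.permutation(X_shuffled[:, j])
            # Drop in score relative to the baseline.
            drops.append(baseline - r2(X_shuffled, y_test))
        # Average over repeats for a more stable estimate.
        importances[j] = np.mean(drops)
    return importances

importances = permutation_importance_manual()
for j, value in enumerate(importances):
    print(f"feature {j}: mean drop in R^2 = {value:.3f}")
```

If you use scikit-learn, `sklearn.inspection.permutation_importance` implements the same idea for any fitted estimator, so you rarely need to write the loop yourself.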

2. Tree-based Methods

Tree-based models, such as Random Forests and Gradient Boosting Machines (GBMs), provide intrinsic feature importance measures. These models create a hierarchy of decision rules that split the data based on different features. The importance of a feature is then calculated based on how much each feature contributes to the reduction in impurity or the improvement in the objective function.

You can access the feature importance scores from tree-based models with the help of libraries like scikit-learn in Python. These scores can provide valuable insights into the contribution of each feature and help you prioritize your focus when analyzing and interpreting the results.
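As a rough sketch of what that looks like in scikit-learn, the example below fits a random forest on synthetic data and reads its `feature_importances_` attribute. The dataset and the `feature_0` … `feature_5` names are placeholders; only the first three features are informative by construction.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data: with shuffle=False the first 3 columns are the informative ones.
X, y = make_classification(
    n_samples=500, n_features=6, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)
feature_names = [f"feature_{i}" for i in range(X.shape[1])]  # placeholder names

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances: non-negative and normalized to sum to 1.
ranked = sorted(
    zip(feature_names, model.feature_importances_),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Because the scores are normalized, each one can be read as a share of the total impurity reduction rather than as an absolute quantity.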

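Tree-based methods can also work with both numerical and categorical features, but how categories are handled depends on the library. Scikit-learn’s tree models expect numeric input, so categorical variables are usually encoded first, for example with one-hot encoding, which turns each category into its own binary column. Some gradient boosting libraries handle categorical columns natively. Keep in mind that with one-hot encoding, the importance of a categorical variable is split across its binary columns.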
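In scikit-learn specifically, the encoding is an explicit preprocessing step. The sketch below one-hot encodes a column with pandas and then sums the importances of the resulting binary columns back into a single score. The `color` and `size` columns are invented for this example.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Hypothetical data: the label depends only on the categorical "color" column.
df = pd.DataFrame({
    "color": ["red", "blue", "green"] * 40,
    "size": rng.normal(size=120),
})
y = (df["color"] == "red").astype(int)

# One-hot encode the categorical column into binary indicator columns.
X = pd.get_dummies(df, columns=["color"])

model = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)
importances = pd.Series(model.feature_importances_, index=X.columns)

# The importance of "color" is spread over its indicator columns,
# so sum them to compare against the other original features.
color_columns = [c for c in X.columns if c.startswith("color_")]
color_total = importances[color_columns].sum()

print(importances.round(3))
print(f"color (summed): {color_total:.3f}")
```

Summing is a simple convention, not the only one; it is mainly useful so that a many-category feature is not judged by its individual indicator columns alone.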

3. Correlation Analysis

Correlation analysis examines the relationship between each feature and the target variable directly, without fitting a model. By calculating the correlation coefficient between each feature and the target, you can see how closely related they are and flag features that are likely to be influential.

The correlation coefficient, commonly denoted as r, ranges from -1 to 1. A positive value indicates a positive correlation, meaning that as the feature increases, the target variable also tends to increase. Conversely, a negative value indicates a negative correlation, where as one variable increases, the other tends to decrease. A value near 0 indicates little or no linear relationship.

Keep in mind that correlation does not imply causation. It simply measures the strength and direction of the relationship between variables. However, by combining correlation analysis with other methods, you can gain a deeper understanding of feature importance.
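To make this concrete, here is a small sketch that computes Pearson’s r between each feature and the target with NumPy. The data is synthetic, and the names `x_pos`, `x_neg`, and `x_noise` are invented to show a positive, a negative, and a near-zero correlation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000

# Synthetic features (assumed for illustration).
x_pos = rng.normal(size=n)    # pushes the target up
x_neg = rng.normal(size=n)    # pushes the target down
x_noise = rng.normal(size=n)  # unrelated to the target
target = 2.0 * x_pos - 1.0 * x_neg + rng.normal(scale=0.5, size=n)

features = {"x_pos": x_pos, "x_neg": x_neg, "x_noise": x_noise}

# np.corrcoef returns a 2x2 matrix; the off-diagonal entry is Pearson's r.
correlations = {
    name: np.corrcoef(values, target)[0, 1] for name, values in features.items()
}

# Rank by absolute value so strong negative correlations are not overlooked.
for name, r in sorted(correlations.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: r = {r:+.2f}")
```

Pearson’s r only captures linear relationships, so a feature with a strong but nonlinear effect can still show a coefficient close to zero.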

Benefits of Interpreting Feature Importance Scores

Interpreting feature importance scores offers numerous benefits in various fields. Here are some of the advantages:

1. Identifying Key Contributors

Feature importance scores allow you to identify the key contributors to a specific outcome or prediction. By understanding which features are most influential, you can focus your efforts and resources on optimizing or improving those specific factors. This targeted approach can lead to more efficient decision-making and resource allocation.

2. Explaining Model Predictions

Feature importance scores help you explain and understand the predictions made by your models. When presenting the results to stakeholders or non-technical audiences, it’s essential to have a clear understanding of which features played a significant role in the predictions. This can build trust and credibility in your models and ensure their effective use in decision-making processes.

3. Guiding Feature Selection

Feature importance scores can guide feature selection processes by highlighting the most informative features for a given task. By focusing on the most important features, you can simplify your models, reduce computational complexity, and improve interpretability. This is particularly important when dealing with high-dimensional datasets where feature selection is crucial.
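One possible way to do this in scikit-learn is `SelectFromModel`, which keeps the features whose importance clears a threshold. The sketch below uses synthetic data and the mean importance as the cutoff; both are illustrative choices rather than recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel

# Synthetic data: 10 features, of which only the first 3 are informative.
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)

# Keep features whose importance is at least the mean importance.
selector = SelectFromModel(
    RandomForestClassifier(n_estimators=100, random_state=0),
    threshold="mean",
)
selector.fit(X, y)

kept = selector.get_support(indices=True)  # column indices that survive
X_reduced = selector.transform(X)          # data restricted to those columns

print(f"kept columns: {kept.tolist()}")
print(f"shape before: {X.shape}, after: {X_reduced.shape}")
```

It is worth re-checking model performance on held-out data after dropping features, since a low score does not guarantee a feature is safe to remove.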

Overall, interpreting feature importance scores helps you gain insights into the underlying factors that drive predictions or outcomes. It enables you to make informed decisions, optimize models, and communicate results effectively.

Tips for Interpreting Feature Importance Scores

While interpreting feature importance scores, here are some tips to keep in mind:

1. Consider the Context

Interpreting feature importance scores should always be done in the context of the specific problem or task at hand. The relevance and importance of features can vary depending on the domain or industry you are working in. Consider the broader context and the specific problem you are trying to solve to ensure the interpretation is meaningful and actionable.

2. Validate Results

Interpreting feature importance scores is not a one-time process. It’s important to validate and verify the results using different methods or alternative models. Different algorithms may provide different feature rankings, and it’s essential to understand the reasons behind these discrepancies. Validation ensures the reliability and robustness of the interpreted scores.
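One simple validation check is to compute importance in two different ways and see whether the rankings agree. The sketch below compares a random forest’s impurity-based scores with permutation importance on held-out data, using Spearman’s rank correlation as the agreement measure. The data is synthetic, and the choice of agreement measure is an assumption.

```python
from scipy.stats import spearmanr
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

X, y = make_classification(
    n_samples=600, n_features=8, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)

# Method 1: impurity-based scores computed from the training process.
impurity_scores = model.feature_importances_

# Method 2: permutation importance measured on held-out data.
perm = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)
perm_scores = perm.importances_mean

# Spearman's rho compares the two rankings (1.0 means identical order).
rho, _ = spearmanr(impurity_scores, perm_scores)
print(f"rank agreement between methods: rho = {rho:.2f}")
```

A low rho is not necessarily a bug. It is a prompt to look at which features moved and why, for example correlated features or features the model memorized during training.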

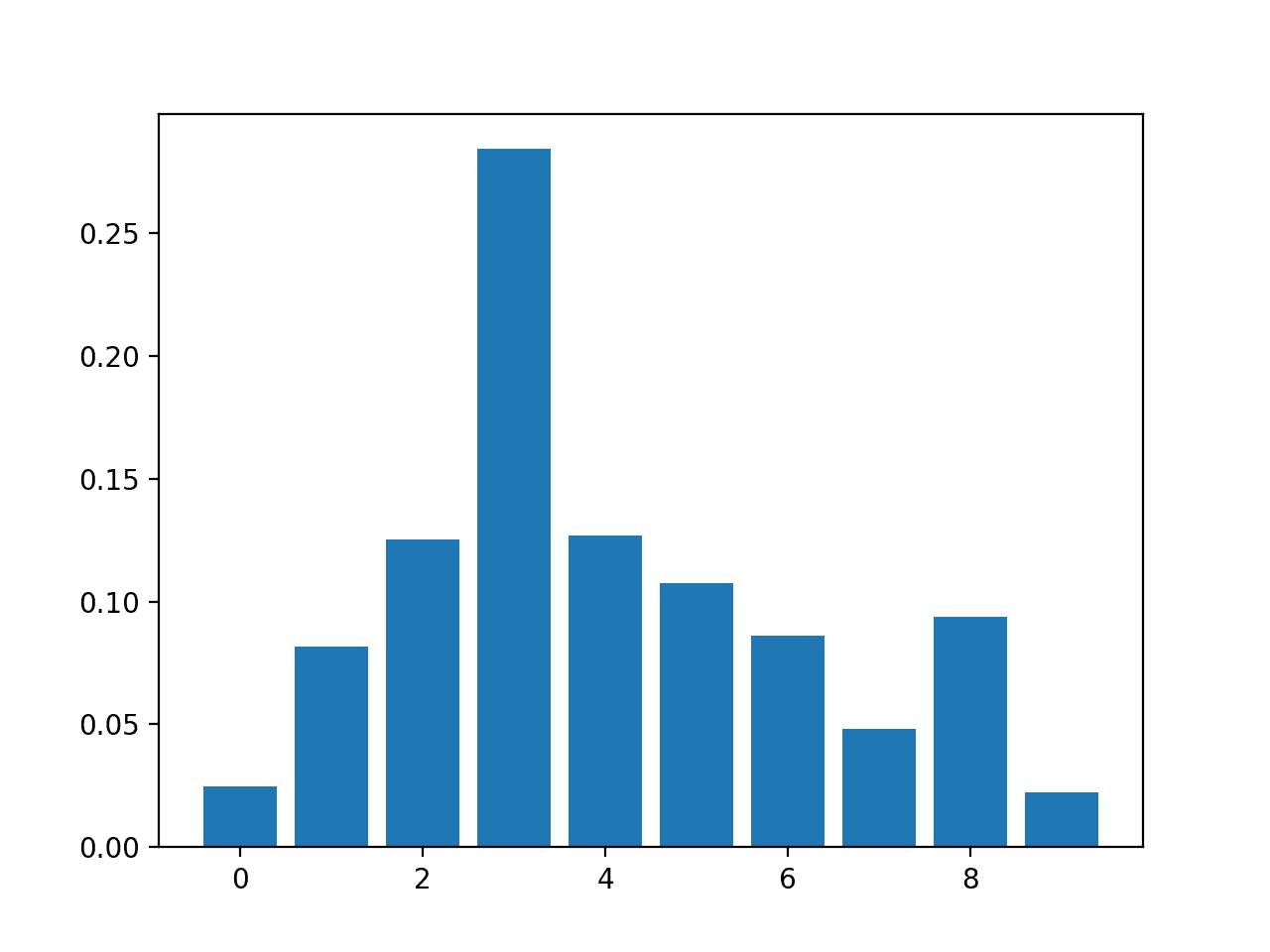

3. Visualize the Results

Visualizing feature importance scores can provide a clearer understanding of the relationships between features and their impact on the target variable. Use bar plots, heatmaps, or other visualizations to present the results in a more intuitive and easily digestible format. This can aid in the interpretation process and facilitate communication with stakeholders.

By following these tips, you can enhance your interpretation of feature importance scores and unlock the full potential of your analyses and models.

Conclusion:

Interpreting feature importance scores is a crucial step in understanding the factors that contribute to predictions or outcomes. By utilizing methods like permutation importance, tree-based methods, and correlation analysis, you can extract valuable insights from these scores. The benefits of interpreting feature importance scores are numerous, including identifying key contributors, explaining model predictions, and guiding feature selection. By considering the context, validating the results, and visualizing the scores, you can enhance your understanding and make informed decisions based on these important metrics.

Key Takeaways: How can I interpret feature importance scores?

- Feature importance scores tell us how much each feature contributes to a model’s predictions.

- High feature importance means the feature has a strong influence on the model’s output.

- Low or zero feature importance suggests the feature has little impact on the predictions.

- Understanding feature importance helps identify key drivers and prioritize feature selection.

- Interpreting feature importance depends on the specific model used and the context of the problem.

Frequently Asked Questions

Here are some commonly asked questions about interpreting feature importance scores and their answers:

1. What are feature importance scores and why are they important?

Feature importance scores are numerical representations of how much a feature contributes to the predictions made by a machine learning model. They help us understand which features are most influential in making predictions. These scores are essential because they guide us in feature selection, model optimization, and identifying the most relevant variables.

By interpreting feature importance scores, we can identify the key factors that drive the predictions and gain insights into the relationships between the input variables and the target variable. This understanding allows us to make informed decisions, improve our models, and gain valuable insights into the underlying data.

2. How are feature importance scores calculated?

Feature importance scores can be calculated using various techniques, depending on the machine learning algorithm used. Some common methods include permutation importance, mean decrease in impurity for tree-based models, and coefficient weights in linear models.

For example, permutation importance involves randomizing the values of one feature while keeping the others unchanged and observing the impact on the model’s performance. The larger the drop in performance, the more important the feature is considered to be. Each algorithm may have its own specific calculations for determining feature importance scores.
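Coefficient weights deserve a quick illustration, because they are only comparable when the features share a scale. The sketch below fits a least-squares model on standardized synthetic features; the data and its coefficients are invented for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic features on very different scales (assumed for illustration).
X = rng.normal(size=(500, 3)) * np.array([1.0, 10.0, 100.0])
y = 2.0 * X[:, 0] - 0.3 * X[:, 1] + rng.normal(scale=0.5, size=500)

# Standardize each feature to zero mean and unit variance so that
# coefficient magnitudes can be compared across features.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

# Add an intercept column and solve ordinary least squares.
design = np.column_stack([np.ones(len(X_std)), X_std])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
weights = coef[1:]  # drop the intercept

for j, w in enumerate(weights):
    print(f"feature {j}: standardized weight = {w:+.2f}")
```

Here feature 1 ends up with the largest magnitude even though its raw coefficient (-0.3) is smaller than feature 0’s (2.0), because it varies over a wider range. The sign of each weight also tells you the direction of the effect, which impurity-based and permutation scores do not.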

3. How can I interpret feature importance scores in a random forest model?

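In a random forest model, feature importance scores are typically calculated as the mean decrease in impurity, also known as Gini importance when the Gini index is the splitting criterion. These scores indicate how much, on average across all trees, splits on each feature reduce the impurity of the nodes they divide. Because they are computed from the training data, they can overstate features with many distinct values, so it is worth cross-checking them with permutation importance.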

To interpret these scores, you can start by identifying the features with the highest importance scores. These are the features that have the most significant impact on the model’s predictions. You can then analyze the relationships between these important features and the target variable to understand the patterns or correlations that exist.
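When reading random forest scores, it can also help to see how much they vary from tree to tree. The sketch below, on synthetic data, collects each tree’s importances from the fitted forest’s `estimators_` list and reports the mean and standard deviation per feature.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(
    n_samples=500, n_features=6, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)
model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# One row of importances per tree in the forest.
per_tree = np.array([tree.feature_importances_ for tree in model.estimators_])
mean_importance = per_tree.mean(axis=0)
std_importance = per_tree.std(axis=0)

# Print features from most to least important, with their spread across trees.
for i in np.argsort(mean_importance)[::-1]:
    print(f"feature_{i}: {mean_importance[i]:.3f} +/- {std_importance[i]:.3f}")
```

A large spread relative to the mean suggests the ranking between two neighboring features should not be taken too literally.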

4. Can feature importance scores be used to determine causation?

No, feature importance scores cannot be directly used to establish causation between variables. They only provide insights into the relationship between features and predictions. Correlation does not imply causation, and other factors may be at play that influence the predictive power of a feature.

However, feature importance scores can be a starting point for further investigation and hypothesis generation. They can help identify potential influential factors that warrant deeper analysis to uncover causal relationships through experimental design or domain knowledge.

5. How can I visualize feature importance scores?

There are several ways to visualize feature importance scores. One common method is to create a bar plot or a horizontal bar chart where the features are listed on the y-axis, and the importance scores are represented on the x-axis. This allows for quick comparison and identification of the most important features.

Additionally, some libraries and tools provide advanced visualization techniques, such as tree-based plots or heatmaps, that give a more detailed understanding of the relationships between features. These visualizations can aid in communicating the importance of features to stakeholders and make the interpretation process more accessible and insightful.
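The horizontal bar chart described above can be sketched with matplotlib as follows. The feature names and scores are made-up values; swap in the scores from your own model.

```python
import matplotlib.pyplot as plt

# Hypothetical importance scores (illustrative values only).
scores = {"income": 0.45, "tenure": 0.30, "age": 0.12, "region": 0.08, "clicks": 0.05}

# Sort ascending so the most important feature ends up at the top of the chart.
ranked = sorted(scores.items(), key=lambda item: item[1])
names = [name for name, _ in ranked]
values = [value for _, value in ranked]

fig, ax = plt.subplots(figsize=(6, 3))
bars = ax.barh(names, values)  # features on the y-axis, scores on the x-axis
ax.set_xlabel("Importance score")
ax.set_title("Feature importance (hypothetical values)")
fig.tight_layout()
```

Call `plt.show()` to display the chart interactively or `fig.savefig("feature_importance.png")` to write it to a file.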

How to find Feature Importance in your model

Summary

Feature importance scores tell us which factors are most influential in a model’s predictions. They help us understand which variables have the strongest impact on the outcome. These scores can be calculated in different ways, such as impurity-based scores from tree models, permutation importance, or coefficient weights in linear models.

It’s important to interpret feature importance scores based on the context and the model used. High scores indicate high importance, while low scores mean the feature has less impact. However, importance is not causation: a highly ranked feature may simply be correlated with the real cause rather than being a direct cause itself.

To interpret feature importance, look for patterns or trends, compare scores between features, and consider domain knowledge. Remember, feature importance is just one piece of the puzzle, and it’s essential to assess other factors before making conclusions or decisions based solely on these scores.