Imagine a world where machines can understand and respond to our voices just like humans do. Sounds fascinating, right? Well, that’s exactly what deep learning is helping us achieve in the field of speech recognition. So, how does deep learning improve speech recognition? Let’s dive in and find out!

When it comes to understanding spoken language, deep learning algorithms are like the superheroes of the tech world. They use artificial neural networks, which are loosely inspired by how the human brain processes information. By training on huge amounts of recorded speech, these algorithms learn the patterns and features they need to transcribe and interpret spoken words accurately.

Thanks to deep learning, speech recognition technology has come a long way from the early days of robotic and often inaccurate interpretations. Now, machines can recognize speech with impressive accuracy, making it easier for us to interact with devices through voice commands and even helping those with physical disabilities communicate more effectively. With deep learning at its core, speech recognition has become more intuitive and responsive than ever before.

Contents

- How Does Deep Learning Improve Speech Recognition?

- Key Takeaways: How Deep Learning Improves Speech Recognition

- Frequently Asked Questions

- 1. How does deep learning improve speech recognition accuracy?

- 2. Can deep learning improve speech recognition in noisy environments?

- 3. How does deep learning handle different accents and dialects in speech recognition?

- 4. Can deep learning improve real-time speech recognition?

- 5. How does deep learning improve speech recognition for low-resource languages?

- Summary

How Does Deep Learning Improve Speech Recognition?

Deep learning is changing the field of speech recognition by enabling more accurate and efficient systems. Neural networks can learn intricate patterns from large amounts of speech data without those patterns being specified by hand. This allows for improved accuracy, better noise robustness, and enhanced natural language understanding in speech recognition technology. In this article, we will explore the various ways in which deep learning improves speech recognition and the benefits it brings.

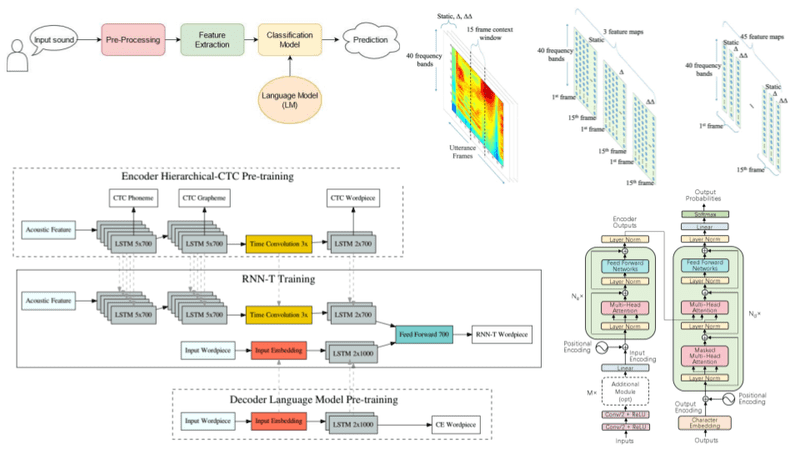

1. Neural Networks in Speech Recognition

Neural networks form the foundation of deep learning and have been instrumental in advancing speech recognition technology. Deep neural networks (DNNs) are particularly effective in speech recognition tasks because they stack multiple layers, and each layer learns a representation built on the one below it. DNNs are trained on large amounts of labeled speech data, which lets them learn complex features from audio signals (or from spectral features computed from them) instead of relying on hand-designed ones. This significantly improves accuracy and reduces errors in speech recognition systems.
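To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a small feed-forward network. Everything in it is an assumption for illustration: the layer sizes, the 13-value input frame, the four output classes, and the random (untrained) weights. A real acoustic model would learn its weights from labeled speech.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Non-linearity applied between layers."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(rng, n_in, n_out):
    """Random weights and zero biases (stand-ins for trained parameters)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(frame, layers):
    """Pass one feature frame through the hidden layers, then a softmax output."""
    hidden = frame
    for weights, biases in layers[:-1]:
        hidden = relu(dense(hidden, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(hidden, weights, biases))

rng = random.Random(0)
# Hypothetical sizes: 13 input features, two hidden layers, 4 phoneme classes.
layer_sizes = [13, 16, 16, 4]
layers = [init_layer(rng, n_in, n_out)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
frame = [rng.uniform(-1.0, 1.0) for _ in range(13)]
phoneme_probs = forward(frame, layers)
print(phoneme_probs)
```

Because the weights are random, the probabilities printed here carry no meaning. The point is only the shape of the computation: each layer transforms the output of the previous one.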

One of the key advantages of using neural networks in speech recognition is their ability to capture contextual information. Traditional speech recognition systems relied heavily on handcrafted features and statistical models. However, with deep learning, neural networks can take advantage of the sequential structure of speech data, allowing for context-aware processing. This contextual understanding enhances the accuracy and fluency of speech recognition systems by considering the dependencies between words and phrases.

2. Convolutional Neural Networks (CNNs) for Acoustic Modeling

Convolutional Neural Networks (CNNs) have emerged as a powerful tool for acoustic modeling in speech recognition. These networks are particularly effective at learning local patterns within speech signals, making them well-suited for tasks such as phoneme recognition and speech feature extraction. CNNs operate by convolving filters across the input data, capturing important features at various scales. These extracted features are then passed through fully connected layers for classification.
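As a rough illustration of what "convolving a filter across the input" means, the sketch below slides a single filter along the time axis of a tiny feature sequence. The input values and the hand-written `onset_kernel` are made up for this example. In a trained CNN the filter weights are learned, and there are many filters per layer.

```python
def conv1d(frames, kernel):
    """Slide one filter over time ("valid" mode, stride 1).

    frames: list of T feature vectors, each of length D.
    kernel: list of K weight vectors, each of length D.
    Returns T - K + 1 activations, one per window position.
    """
    width = len(kernel)
    outputs = []
    for start in range(len(frames) - width + 1):
        window = frames[start:start + width]
        activation = sum(w * x
                         for k_row, f_row in zip(kernel, window)
                         for w, x in zip(k_row, f_row))
        outputs.append(activation)
    return outputs

# Toy input: 6 frames with 2 features each (values are made up).
frames = [[0.0, 0.0], [0.0, 0.0], [1.0, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
# A filter that responds to a rise in energy from one frame to the next.
onset_kernel = [[-1.0, -1.0], [1.0, 1.0]]
feature_map = conv1d(frames, onset_kernel)
print(feature_map)  # [0.0, 2.0, 0.0, -2.0, 0.0]
```

The filter fires (value 2.0) where the signal switches on and gives a negative response where it switches off, which is the kind of local pattern the paragraph above refers to.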

One advantage of using CNNs for acoustic modeling is that convolutional layers are not tied to a fixed input length. Because the same filters slide along the time axis, they can be applied to audio segments of any duration and produce a feature map whose length follows the input. A pooling step over time (or per-frame outputs) is still needed before any fully connected layer, since those layers expect a fixed-size input. This flexibility lets one model handle short and long utterances alike, which helps accuracy and robustness.
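One common way to get a fixed-size representation from inputs of different lengths is to pool each filter's output over time. The sketch below assumes global max pooling and three hand-picked filters. Both choices are illustrative, and the article itself does not say which pooling scheme a given system uses.

```python
def conv1d_scalar(signal, kernel):
    """Slide a filter over a 1-D signal ("valid" mode, stride 1)."""
    width = len(kernel)
    return [sum(k * s for k, s in zip(kernel, signal[i:i + width]))
            for i in range(len(signal) - width + 1)]

def global_max_pool(feature_maps):
    """Keep the strongest response of each filter, regardless of where it occurred."""
    return [max(feature_map) for feature_map in feature_maps]

def embed(signal, kernels):
    """Map a signal of any length (at least the widest kernel) to len(kernels) values."""
    return global_max_pool([conv1d_scalar(signal, kernel) for kernel in kernels])

# Hypothetical filters; a trained network would learn these weights.
kernels = [[1.0, -1.0], [0.5, 0.5], [1.0, 0.0, -1.0]]
short_utt = [0.0, 1.0, 0.0, 2.0]
long_utt = [0.0, 1.0, 0.0, 2.0, 1.0, 3.0, 0.0, 0.0, 1.0]

print(len(embed(short_utt, kernels)), len(embed(long_utt, kernels)))
```

Both calls return three values, one per filter, so a fixed-size classifier can follow no matter how long the utterance was.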

3. Recurrent Neural Networks (RNNs) for Language Modeling

Recurrent Neural Networks (RNNs) play a crucial role in language modeling, a fundamental component of speech recognition. RNNs are designed to handle sequential data by maintaining an internal state or memory that is updated at every step, allowing them to carry information forward from earlier inputs. Plain RNNs tend to lose this information over long spans, which is why gated variants such as LSTMs and GRUs are commonly used to capture long-term dependencies. This capability is particularly valuable in language modeling, where the context of previous words is crucial in determining the likelihood of the next word.

By using RNNs, deep learning models can analyze context and predict the most likely next word given the words that came before it in a sentence. This allows for more accurate and natural language understanding in speech recognition systems. RNNs are also capable of handling variable-length sequences, making them highly adaptable to different speech inputs. With their ability to capture temporal dependencies and model complex language structures, RNNs greatly enhance the performance of speech recognition systems.
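The "internal state" of an RNN can be sketched in a few lines. The example below implements a plain (Elman-style) recurrent update with random, untrained weights. The sizes and the toy input sequences are assumptions for illustration, and a practical language model would more likely use a gated cell such as an LSTM or GRU.

```python
import math
import random

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One recurrent update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    return [math.tanh(sum(w * xi for w, xi in zip(w_xh[j], x))
                      + sum(w * hi for w, hi in zip(w_hh[j], h_prev))
                      + b_h[j])
            for j in range(len(b_h))]

def run_rnn(sequence, w_xh, w_hh, b_h):
    """Feed a sequence of any length through the cell and return the final state."""
    h = [0.0] * len(b_h)  # the memory starts empty
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
    return h

rng = random.Random(0)
input_size, hidden_size = 3, 5  # hypothetical sizes
w_xh = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
w_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

# Two toy sequences of different lengths, e.g. word vectors for two sentences.
short_seq = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
long_seq = short_seq + [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

print(run_rnn(short_seq, w_xh, w_hh, b_h))
print(run_rnn(long_seq, w_xh, w_hh, b_h))
```

Both sequences end in a state of the same size, even though one is longer. That state is what a language model would feed into an output layer to score candidate next words.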

4. Benefits of Deep Learning in Speech Recognition

Deep learning has brought several significant benefits to the field of speech recognition. Firstly, it has significantly improved the accuracy of speech recognition systems, leading to more reliable and precise results. Deep learning models are capable of learning intricate patterns and representations from large amounts of data, enabling them to discriminate between different sounds and phonemes with high accuracy.
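The article does not name a metric, but accuracy in speech recognition is usually reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's output into the reference transcript, divided by the number of reference words. The sketch below is one straightforward way to compute it, and the example sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] = edits needed to turn the first j hypothesis words
    # into the reference words processed so far.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1       # reference word missing from hypothesis
            insertion = current[j - 1] + 1   # extra word in hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)

reference = "turn on the kitchen lights"
hypothesis = "turn on kitchen light"
print(word_error_rate(reference, hypothesis))  # 0.4
```

Here the hypothesis drops "the" and gets "lights" wrong, so 2 errors over 5 reference words gives a WER of 0.4. Lower is better, and 0.0 means a perfect transcript.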

Secondly, deep learning has greatly enhanced the noise robustness of speech recognition systems. Traditional systems were often sensitive to background noise, resulting in decreased performance in real-world environments. With deep learning techniques, acoustic models can be trained on noisy input so that they learn to discount unwanted disturbances, which improves recognition in challenging acoustic conditions.

Finally, deep learning allows for better natural language understanding in speech recognition. The sequential and contextual information captured by neural networks enables systems to understand and interpret speech in a manner closer to human comprehension. This results in more fluent and contextually accurate transcriptions, improving the overall user experience.

Overall, deep learning has revolutionized the field of speech recognition by significantly improving accuracy, noise robustness, and natural language understanding. Through the use of neural networks, including DNNs, CNNs, and RNNs, deep learning algorithms have brought tremendous advancements to speech recognition technology, making it more efficient and effective in various applications.

Key Takeaways: How Deep Learning Improves Speech Recognition

- 1. Deep learning uses neural networks to process human speech and improve speech recognition technology.

- 2. It allows computers to learn patterns and features from large amounts of speech data.

- 3. Deep learning algorithms can learn to separate speech from background noise, improving accuracy.

- 4. It helps in understanding different accents, dialects, and speech variations, making speech recognition more inclusive.

- 5. Speech recognition systems keep improving as deep learning models are retrained on new data and feedback.

Frequently Asked Questions

Welcome to our FAQ section, where we explore the ways in which deep learning enhances speech recognition. Dive into these questions to learn more about this fascinating topic!

1. How does deep learning improve speech recognition accuracy?

Deep learning improves speech recognition accuracy by utilizing artificial neural networks that can analyze vast amounts of data and identify patterns. Traditional speech recognition systems relied on handcrafted features and statistical models such as hidden Markov models, whereas deep learning lets the system learn its features directly from large datasets and make more accurate predictions.

Deep learning models, such as deep neural networks, use multiple hidden layers to process audio input and extract relevant features. These models can automatically learn to differentiate between different speech sounds, accents, and languages, resulting in improved accuracy and better overall performance.

2. Can deep learning improve speech recognition in noisy environments?

Yes, deep learning can improve speech recognition in noisy environments. In traditional systems, background noise often poses a challenge, causing errors in speech recognition. Deep learning models can be trained on data containing various levels of background noise, enabling them to better adapt and filter out unwanted noise during the recognition process.

By learning to separate speech signals from background noise, deep learning models can enhance the accuracy of speech recognition even in challenging acoustic environments. They can also be combined with noise reduction, signal enhancement, and adaptive filtering to further improve recognition performance in noisy conditions.
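Training data "containing various levels of background noise" is often produced by mixing clean recordings with noise at a chosen signal-to-noise ratio (SNR). The sketch below shows one way to do that. The sine wave standing in for speech, the Gaussian noise, and the function name `mix_at_snr` are all assumptions for illustration.

```python
import math
import random

def power(signal):
    """Mean squared amplitude of a signal."""
    return sum(s * s for s in signal) / len(signal)

def mix_at_snr(speech, noise, snr_db):
    """Scale the noise so speech power / noise power matches snr_db, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]
    target_noise_power = power(speech) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / power(noise))
    return [s + scale * n for s, n in zip(speech, noise)]

rng = random.Random(0)
# Stand-ins for real recordings: a 440 Hz tone at 16 kHz and Gaussian noise.
speech = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]

# One clean utterance becomes several training examples at different noise levels.
noisy_versions = {snr: mix_at_snr(speech, noise, snr) for snr in (20, 10, 0)}
print(sorted(noisy_versions))
```

A lower SNR means louder noise relative to the speech, so the 0 dB version is the hardest of the three. Training on a range of levels like this is what lets a model cope with conditions it was not recorded in.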

3. How does deep learning handle different accents and dialects in speech recognition?

Deep learning handles different accents and dialects better than earlier approaches, provided the training data covers them. Traditional systems often struggled with recognizing speech from individuals with diverse accents, making it challenging to accurately transcribe their words. Deep learning models can be trained on diverse datasets that include a wide range of accents and dialects, allowing them to better understand and interpret different speech patterns.

Deep learning algorithms can automatically learn and extract relevant accent-specific features, allowing for better adaptation and recognition of speech from various regions and languages. By leveraging the power of neural networks, deep learning significantly improves the accuracy of speech recognition across different accents, dialects, and languages.

4. Can deep learning improve real-time speech recognition?

Yes, deep learning can improve real-time speech recognition. Real-time speech recognition requires fast and efficient processing to transcribe spoken words without significant delays. Deep learning models map well onto parallel hardware such as GPUs, and with streaming-friendly architectures and optimized inference they can achieve near real-time speech recognition with high accuracy.

By leveraging techniques such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), deep learning algorithms can process audio input in real-time, making immediate predictions and generating accurate transcriptions. This enables applications like voice assistants, transcription services, and voice-controlled systems to provide prompt and accurate responses in real-world scenarios.
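Processing audio "in real-time" usually means working on small chunks as they arrive instead of waiting for the whole recording. The sketch below covers only the buffering side of that: it turns incoming chunks into overlapping frames that a model could consume one by one. The function name `stream_frames`, the chunk sizes, and the frame and hop lengths are hypothetical. Real systems often use frames of about 25 ms with a 10 ms hop.

```python
def stream_frames(chunks, frame_len, hop):
    """Yield overlapping frames as soon as enough samples have arrived."""
    buffer = []
    for chunk in chunks:
        buffer.extend(chunk)
        # Emit every frame that is already complete, keeping the overlap.
        while len(buffer) >= frame_len:
            yield buffer[:frame_len]
            del buffer[:hop]

# Ten samples arriving in three uneven chunks, as they might from a microphone.
samples = list(range(10))
chunks = [samples[0:3], samples[3:7], samples[7:10]]

streamed = list(stream_frames(chunks, frame_len=4, hop=2))
offline = [samples[i:i + 4] for i in range(0, len(samples) - 4 + 1, 2)]
print(streamed == offline)
```

The streamed frames match what you would get from slicing the full recording afterwards, but each one becomes available as soon as its last sample arrives. That is what allows a recognizer to start producing output before the speaker has finished.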

5. How does deep learning improve speech recognition for low-resource languages?

Deep learning can improve speech recognition for low-resource languages by leveraging transfer learning and data augmentation techniques. Transfer learning allows models trained on resource-rich languages to be fine-tuned for low-resource languages, significantly reducing the data requirements for training. This approach enables deep learning models to achieve good performance even with limited labeled data for low-resource languages.

Data augmentation techniques, such as speed perturbation, add variability to the existing limited dataset, making the model more robust and adaptable to different speech characteristics. By combining transfer learning and data augmentation, deep learning can bridge the gap and enhance speech recognition accuracy for low-resource languages, opening up new possibilities for these languages in various applications.
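Speed perturbation can be sketched as simple resampling: the same recording is played slightly faster or slower to create extra training examples. The version below uses linear interpolation and is only an illustration. The function name `speed_perturb` is made up, and production toolkits use higher-quality resampling. Note that this simple approach changes pitch along with tempo.

```python
def speed_perturb(samples, factor):
    """Resample by linear interpolation; factor > 1 speeds up (shorter output)."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    if len(samples) < 2:
        return list(samples)
    last = len(samples) - 1
    output = []
    i = 0
    while i * factor <= last:
        position = i * factor              # where to read in the original signal
        left = int(position)
        right = min(left + 1, last)
        frac = position - left             # how far between the two neighbors
        output.append((1 - frac) * samples[left] + frac * samples[right])
        i += 1
    return output

utterance = [0.0, 1.0, 2.0, 3.0, 4.0]  # toy waveform
faster = speed_perturb(utterance, 2.0)
slower = speed_perturb(utterance, 0.5)
print(len(utterance), len(faster), len(slower))
```

In practice the factors are kept close to 1 (values such as 0.9 and 1.1 are a common choice) so the speech still sounds natural. Each perturbed copy is added to the training set alongside the original.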

Summary

Deep learning is a powerful technology that helps improve speech recognition systems. By using artificial neural networks, deep learning allows computers to learn and understand human speech better. This means that speech recognition software can become more accurate and reliable over time.

With deep learning, computers can analyze vast amounts of data and learn patterns in human speech. This leads to better accuracy in recognizing words and understanding context. Deep learning also helps address common challenges in speech recognition, such as dealing with background noise and different accents.

In conclusion, deep learning is a game-changer for speech recognition. It enables computers to understand and transcribe human speech with improved accuracy and reliability, making our interactions with technology more natural and seamless. Exciting advancements in artificial intelligence continue to make speech recognition systems even smarter and more efficient.