In the vast forest of machine learning algorithms, there’s one type that stands out for its ability to keep things fresh – random forests. But you might be wondering, “How do random forests keep things fresh?” Well, my young friend, allow me to enlighten you.

Imagine you’re in a forest, surrounded by countless trees. Each tree represents a decision tree model. Random forests bring together multiple decision trees to create a diverse and robust ensemble.

But what sets random forests apart is their ingenious way of injecting freshness into the mix. They introduce randomness in two places: each tree is trained on a random sample of the training data, and each split within a tree considers only a random subset of the features. This ensures that each tree sees only a part of the full picture, which reduces overfitting and encourages diverse perspectives.

So, dear reader, the secret to how random forests keep things fresh lies in their ability to embrace randomness and diversity. By combining the wisdom of many trees, they create a powerful algorithm that can handle complex problems and make accurate predictions.

Shall we delve deeper into the magical world of random forests and uncover more of their secrets? Let’s journey together and explore the wonders that await us!

Contents

- How do Random Forests Keep Things Fresh?

- The Basics of Random Forests

- Key Takeaways: Exploring How Random Forests Keep Things Fresh

- Frequently Asked Questions

- 1. How does a random forest algorithm maintain freshness in its predictions?

- 2. Can you explain how random forests prevent overfitting and maintain freshness?

- 3. How does the random selection of features in random forests contribute to freshness?

- 4. How does the technique of bootstrapping contribute to the freshness of random forests?

- 5. Can random forests adapt to changes in the input data and maintain freshness?

- Summary

How do Random Forests Keep Things Fresh?

Random forests, a popular machine learning algorithm, have gained significant traction in recent years. These algorithms have proven to be highly effective in various fields, including data analysis, image recognition, and fraud detection. But what exactly is a random forest, and how does it keep things fresh? In this article, we will delve deep into the intricacies of random forests, exploring their inner workings and understanding why they are considered a powerful tool in the realm of machine learning.

The Basics of Random Forests

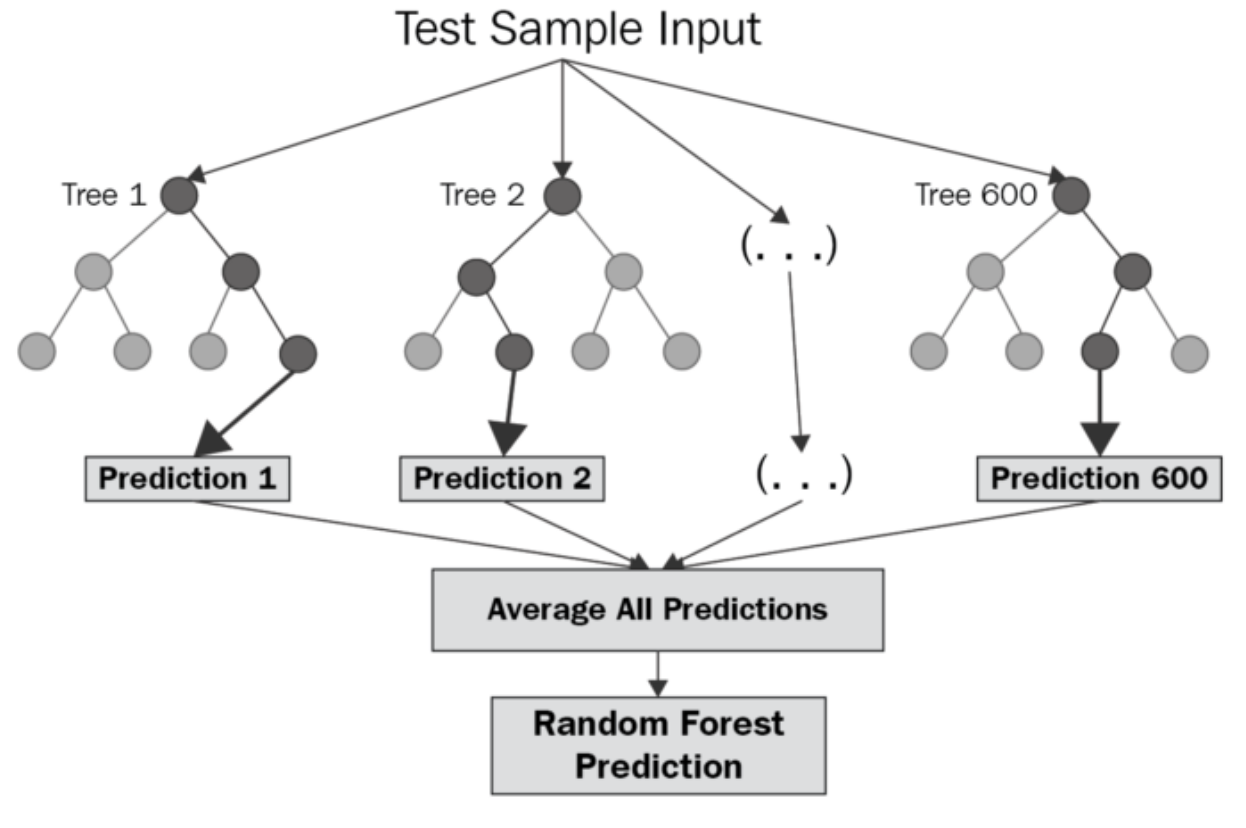

Random forests are a type of ensemble learning algorithm, which means they combine the predictions of multiple decision trees to produce a more accurate final prediction. Each decision tree is trained on a bootstrap sample of the training data (rows drawn at random with replacement), and each split inside a tree considers only a random subset of the features. This randomness injected into the training process is what sets random forests apart from a single decision tree or from plain bagging of trees.

The randomization of the data and features helps prevent overfitting, a common issue in machine learning where the model learns the training data too well and fails to generalize to new, unseen data. By using random subsets, each decision tree in the random forest learns different aspects of the data, reducing the chances of overfitting and improving the overall predictive power of the ensemble.

The Power of Randomness

Randomness plays a crucial role in the success of random forests. By injecting randomness in various stages of the algorithm, random forests are able to keep things fresh and maintain a high level of accuracy.

Firstly, each decision tree is built from its own random sample of the training data. The sample is usually a bootstrap sample: rows are drawn at random with replacement until the sample is as large as the original dataset, so some rows appear more than once and others are left out. As a result, each tree in the forest sees a different set of training instances, and no single pattern or outlier influences every tree. Averaging over trees trained this way reduces the variance of the model and improves its ability to generalize to unseen data.
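As a rough illustration of the sampling step described above, here is a minimal sketch in plain Python. The helper names `bootstrap_indices` and `out_of_bag` are made up for this example and do not come from any library; the sketch assumes the common choice of drawing as many rows as the dataset contains.

```python
import random

def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement (one bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]

def out_of_bag(n_rows, sampled):
    """Rows that were never drawn for this tree."""
    drawn = set(sampled)
    return [i for i in range(n_rows) if i not in drawn]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n_rows = 10
sample = bootstrap_indices(n_rows, rng)
oob = out_of_bag(n_rows, sample)

print("in-bag rows:", sorted(sample))   # duplicates are expected
print("out-of-bag rows:", oob)          # rows this tree never saw
```

The rows a tree never saw are often called its out-of-bag rows, and they can be used to estimate that tree's error without a separate validation set.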

In addition to random sampling of the training data, random forests also employ random feature selection. Rather than evaluating every available feature, each split in a tree considers only a randomly chosen subset of features, and the subset is redrawn for the next split. This stops a few strong features from dominating every tree, so the trees capture diverse patterns and are less correlated with one another. The result is a more robust model that is less prone to overfitting.
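The feature-selection step can be sketched as follows. This assumes the usual formulation in which a new subset is drawn at every split, and it uses the square root of the feature count as the subset size, which is a common default for classification rather than a fixed rule. The function name `candidate_features` is hypothetical.

```python
import math
import random

def candidate_features(n_features, rng, max_features=None):
    """Pick the feature indices that one split is allowed to consider."""
    if max_features is None:
        # Assumed default: square root of the number of features.
        max_features = max(1, int(math.sqrt(n_features)))
    # Sample without replacement so no feature is listed twice.
    return rng.sample(range(n_features), max_features)

rng = random.Random(42)
n_features = 16
for split in range(3):
    picked = sorted(candidate_features(n_features, rng))
    print("split", split, "considers features", picked)
```

A smaller subset makes the trees less alike but weakens each individual tree, so the subset size is usually treated as a tuning parameter.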

The last ingredient is aggregation, and this step involves no randomness at all. During the prediction phase, each tree in the random forest independently produces a prediction. The final prediction is then determined by combining the predictions from all the trees, typically by majority vote for classification or by averaging for regression. Because the errors of individual trees partly cancel out, this aggregation is what turns many noisy trees into an accurate and robust model.
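A minimal sketch of the two aggregation rules, using only the standard library. The per-tree predictions below are invented for illustration; in a real forest they would come from the trained trees.

```python
from collections import Counter
from statistics import mean

def majority_vote(labels):
    """Classification: return the label predicted by the most trees."""
    return Counter(labels).most_common(1)[0][0]

def average(values):
    """Regression: return the mean of the per-tree predictions."""
    return mean(values)

tree_labels = ["spam", "ham", "spam", "spam", "ham"]  # made-up votes
tree_values = [2.0, 3.0, 4.0]                         # made-up outputs

print(majority_vote(tree_labels))  # spam (3 of 5 votes)
print(average(tree_values))        # 3.0
```

In this sketch a tie goes to whichever label was seen first. Libraries may instead average per-class probabilities across trees, which handles ties more gracefully.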

The Benefits of Random Forests

Random forests offer numerous benefits that make them a compelling choice for many machine learning tasks. Here are some key advantages of using random forests:

1. High Accuracy: Random forests often achieve high accuracy with little tuning. By combining multiple decision trees, each trained on a different sample of the data and different feature subsets, they can capture intricate patterns that a single tree would miss.

2. Robustness: The randomness injected into random forests provides robustness against noise and outliers in the data. Each tree in the forest learns from a slightly different perspective, reducing the impact of individual noisy instances or features.

3. Feature Importance: Random forests can also provide insights into feature importance. By measuring how much each feature contributes to the model's performance, for example by checking how much accuracy drops when that feature's values are shuffled, random forests can help identify the most influential features in the dataset.

4. Efficiency: Despite their complexity, random forests can be trained efficiently on large datasets. Because each tree is built independently of the others, training parallelizes well across CPU cores, which often makes it faster than algorithms that must fit their components one after another.

5. Interpretability: A single decision tree is easy to read, but a forest of hundreds of trees is not. Random forests are still more transparent than many other complex models: individual trees can be inspected, and feature importance scores summarize what drives the predictions.
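One way to measure feature importance is permutation importance: shuffle the values of one feature and see how much accuracy drops. The sketch below assumes a trained model is available as a `predict` function; `toy_predict` is a hypothetical stand-in that only looks at feature 0, and the data is randomly generated for illustration.

```python
import random

def accuracy(predict, rows, labels):
    """Fraction of rows for which the prediction matches the label."""
    hits = sum(predict(r) == y for r, y in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(predict, rows, labels, feature, rng):
    """Drop in accuracy after shuffling the values of one feature."""
    baseline = accuracy(predict, rows, labels)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    # Rebuild each row with the shuffled value in place of the original.
    shuffled = [r[:feature] + [v] + r[feature + 1:]
                for r, v in zip(rows, column)]
    return baseline - accuracy(predict, shuffled, labels)

# Hypothetical stand-in for a trained forest: it only uses feature 0.
def toy_predict(row):
    return int(row[0] > 0.5)

rng = random.Random(0)
rows = [[rng.random(), rng.random()] for _ in range(200)]
labels = [toy_predict(r) for r in rows]

for f in range(2):
    score = permutation_importance(toy_predict, rows, labels, f, rng)
    print("feature", f, "importance:", score)
```

Shuffling feature 1 changes nothing because the stand-in model ignores it, while shuffling feature 0 hurts accuracy noticeably. Many libraries also report an impurity-based importance computed during training, which is a different measure.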

In conclusion, random forests are powerful machine learning algorithms that harness the power of randomness to achieve high accuracy and robustness. By training decision trees on random samples of the data and random subsets of the features, random forests keep things fresh and perform well on many kinds of datasets. Their accuracy, robustness, and feature importance insights make them a valuable tool in the field of machine learning. Whether you’re tackling fraud detection or image recognition, random forests can provide fresh and reliable predictions.

Key Takeaways: Exploring How Random Forests Keep Things Fresh

- Random forests are a powerful type of machine learning algorithm.

- They consist of multiple decision trees working together as a team.

- Each decision tree is trained on a random sample of the training data and considers a random subset of features at each split.

- The randomness helps prevent overfitting and keeps the algorithm adaptable.

- By taking a vote from all the trees (or averaging their outputs for regression), random forests make predictions with high accuracy.

Frequently Asked Questions

Welcome to our FAQ section on how random forests keep things fresh! Here, we have addressed some common questions related to the topic.

1. How does a random forest algorithm maintain freshness in its predictions?

The random forest algorithm maintains freshness in its predictions by using a technique called bootstrapping. It draws multiple random samples from the original dataset, with replacement, and uses each sample to build a different decision tree. These decision trees are then combined to make predictions. Because every tree is trained on a different sample, the trees differ from one another, and that variety is what keeps the combined prediction fresh.

In addition to bootstrapping, random forests also use a technique called feature randomization. This means that at each split in the decision tree, a random subset of features is considered for splitting instead of all the features. This makes the trees even more different from one another and helps keep the predictions fresh by reducing overfitting.

2. Can you explain how random forests prevent overfitting and maintain freshness?

Random forests prevent overfitting by combining multiple decision trees and incorporating randomness. Overfitting occurs when a model learns patterns from the training data that are specific to that data but may not generalize well to new data. By building multiple decision trees with different subsets of the data and using random feature selection, random forests introduce variability into the model, which helps prevent overfitting.

The diversity introduced by bootstrapped data and feature randomization is what allows random forests to maintain freshness in their predictions. An individual deep tree tends to overfit, but different trees overfit in different ways, so their errors partly cancel when the predictions are combined. Since the final prediction is based on an ensemble of trees with different samples of data and features, the model is less likely to get stuck in patterns specific to the training data and provides fresher predictions.

3. How does the random selection of features in random forests contribute to freshness?

The random selection of features in random forests contributes to freshness by reducing the correlation between the decision trees. By considering only a random subset of features at each split, the algorithm ensures that different decision trees focus on different aspects of the data. This randomness prevents the trees from becoming too similar to each other and promotes diversity in the predictions.

With this diversity, random forests can capture a wider range of patterns and make predictions that are less biased towards a specific set of features. This variation in the predictions helps keep them fresh because the model does not rely heavily on one particular set of features, but instead considers different aspects of the data to make more robust predictions.
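The benefit of lower correlation can be made precise with a standard result about averages. As a simplifying assumption, suppose the forest averages $B$ trees whose predictions each have variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are introduced here for illustration). The variance of the averaged prediction is then:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Adding more trees shrinks only the second term. The first term stays no matter how large $B$ becomes, which is why reducing $\rho$ through random feature selection matters as much as growing a bigger forest.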

4. How does the technique of bootstrapping contribute to the freshness of random forests?

Bootstrapping is a technique used in random forests that involves creating multiple subsets of the original dataset by randomly sampling the data with replacement. These subsets are then used to build different decision trees. The bootstrapping process introduces variability into the training process because each decision tree is trained on a different subset of the data.

This variability contributes to freshness because the predictions of the random forest are based on an ensemble of decision trees that have been trained on different subsets. Each tree captures different aspects of the data, and combining them leads to a more diverse set of predictions. As a result, the random forest is less likely to get stuck in specific patterns and can adapt to new or unseen data, thus keeping the predictions fresh.
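A short calculation shows how different the bootstrap samples actually are. Assuming each sample draws $n$ rows with replacement from a dataset of $n$ rows, the probability that a particular row is never drawn is:

$$
\left(1 - \frac{1}{n}\right)^{n} \;\longrightarrow\; e^{-1} \approx 0.368 \quad \text{as } n \to \infty
$$

So for a reasonably large dataset each tree sees roughly 63% of the distinct rows, and the remaining 37% or so are left out of that tree's training sample.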

5. Can random forests adapt to changes in the input data and maintain freshness?

Yes, with one caveat: a trained random forest does not update itself. In the standard algorithm the trees are fixed once they are built, so adapting to new data means retraining, typically on the existing data combined with the new data. Because every tree is built independently, retraining is straightforward, and some implementations also allow newly trained trees to be added to an existing ensemble.

Retraining on updated data lets the model capture new patterns or changes in the relationships between the features and the target variable. Retraining regularly as new data arrives is what keeps the random forest's predictions up-to-date and fresh.

Summary

Random forests are a cool way to make predictions and keep things fresh. They work by combining the knowledge of many different decision trees. Each tree makes its own prediction, and then the final result is determined by voting for classification or by averaging for regression.

The great thing about random forests is that they can handle large datasets and are less likely to overfit than a single decision tree. They work with both numerical and categorical features, and some implementations can also deal with missing data directly, although others require missing values to be filled in and categories to be encoded first. With their versatility and accuracy, random forests are a popular choice for many machine learning tasks.