So, you’re curious about how you train a neural network, huh? Well, you’ve come to the right place! Neural networks are like super-smart computer brains that can learn from examples and make decisions on their own. But just like any brain, they need training to become really good at what they do. In this article, we’ll dive into the fascinating world of neural networks and uncover the secrets of training them to perform amazing feats!

Now, you might be wondering, what exactly is a neural network and why does it need training? Think of a neural network as a virtual brain made up of interconnected nodes called neurons. These neurons work together to process information and make predictions. But here’s the catch – when a neural network is first created, it doesn’t know anything. It’s like a blank slate waiting to be filled with knowledge. That’s where training comes in!

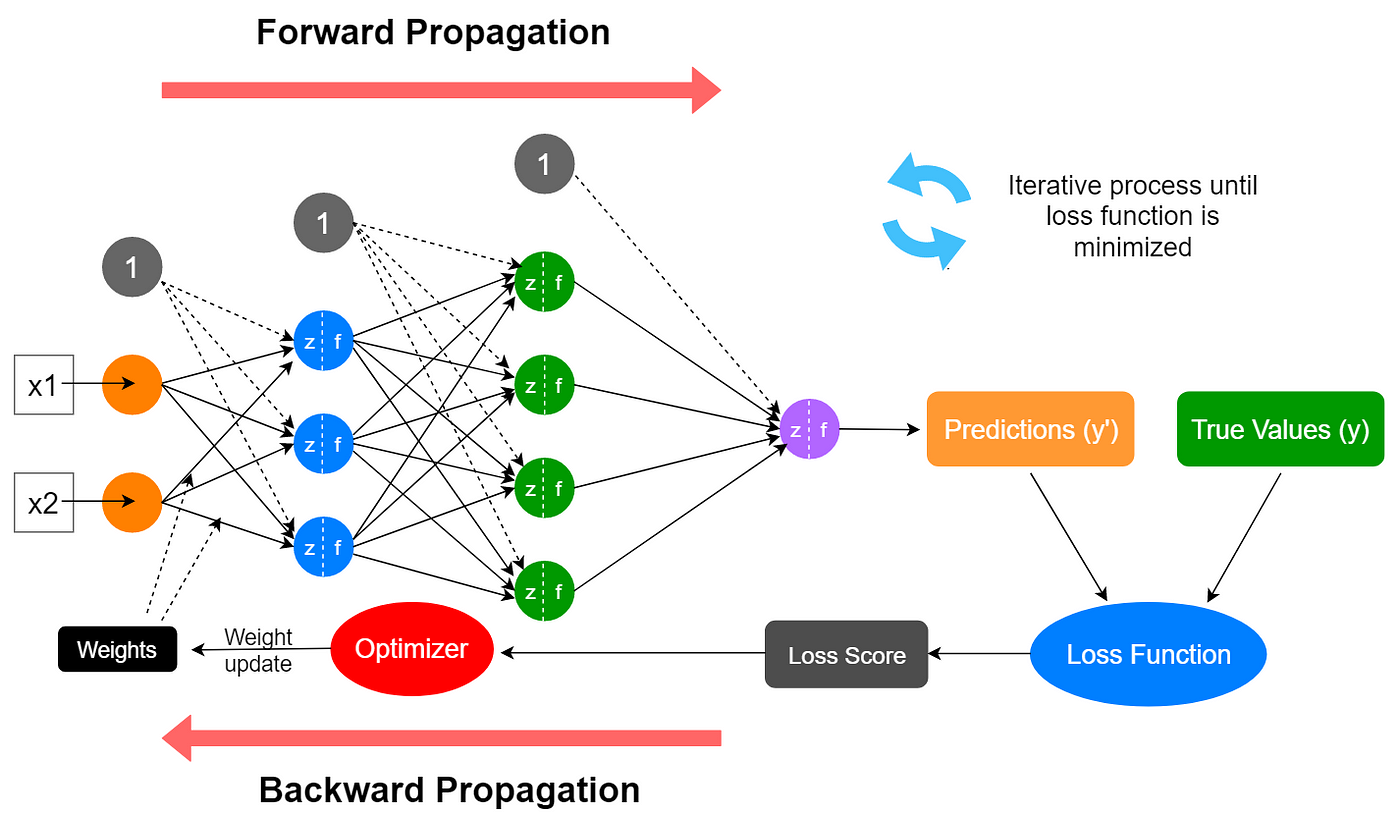

Training a neural network involves feeding it lots and lots of data, just like when you learn something new by studying examples. More (and more varied) data generally helps it perform better on examples it has never seen. In a step called “forward propagation,” the network makes predictions, and a loss function measures how far they are from the correct answers. When it gets something wrong, a process called “backpropagation” works out how much each internal connection contributed to the error, and those connections are adjusted to reduce it, kind of like correcting its mistakes.

So, my eager learner, get ready to embark on a thrilling journey into the world of neural network training. We’ll uncover the inner workings of this incredible technology and explore the step-by-step process of turning a brainchild into a powerhouse of intelligence. Strap in, because it’s time to uncover the secrets of training a neural network!

1. Prepare your dataset by organizing and cleaning the data.

2. Split your dataset into training and testing sets.

3. Choose a suitable neural network architecture for your task.

4. Initialize the weights and biases of the neural network.

5. Implement the forward propagation algorithm to compute the output.

6. Calculate the loss function to measure the network’s performance.

7. Use backpropagation to compute the gradients of the loss, then update the weights with an optimizer (such as gradient descent) to minimize the loss.

8. Repeat steps 5-7 for multiple epochs to improve the network’s accuracy.
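To make steps 4 through 8 concrete, here is a minimal sketch in plain Python (no libraries). It trains a single sigmoid neuron, the smallest possible network, to reproduce the logical AND function. The dataset, learning rate, epoch count, and the names `train` and `predict` are illustrative choices, not a recommended setup. Because there are only four examples, the sketch skips the train/test split from step 2.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Step 1 (toy version): a tiny labeled dataset for the logical AND function.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

def train(data, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Step 4: initialize weights with small random values and the bias at zero.
    w = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    b = 0.0
    for _ in range(epochs):                       # Step 8: repeat for many epochs
        for x, y in data:
            # Step 5: forward propagation.
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Step 6: binary cross-entropy loss. For a sigmoid output, its gradient
            # with respect to the pre-activation z simplifies to (p - y).
            dz = p - y
            # Step 7: gradient descent update using the backpropagated gradient.
            w[0] -= lr * dz * x[0]
            w[1] -= lr * dz * x[1]
            b -= lr * dz
    return w, b

def predict(w, b, x):
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0

w, b = train(DATA)
print([predict(w, b, x) for x, _ in DATA])
```

Real networks stack many such neurons in layers, but the loop keeps the same shape: forward pass, loss, gradients, update, repeat.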

Contents

- How Do You Train a Neural Network, You Ask?

- Advancements in Neural Network Training

- Training Neural Networks for Specific Tasks

- Key Takeaways: How do you train a neural network, you ask?

- Frequently Asked Questions

- Training a Neural Network: Everything You Need to Know

- 1. How does training a neural network work?

- 2. What is the role of the loss function in training a neural network?

- 3. How do you prevent overfitting during neural network training?

- 4. How long does it take to train a neural network?

- 5. Can a pre-trained neural network be used instead of training from scratch?

- Summary

How Do You Train a Neural Network, You Ask?

Neural networks are a fundamental component of artificial intelligence and machine learning algorithms. These complex systems are designed to recognize patterns and make predictions based on large sets of data. However, training a neural network is no simple task. It requires careful preparation, data preprocessing, and fine-tuning to achieve optimal results. In this article, we will explore the process of training a neural network in detail, providing you with the knowledge and tools to embark on this fascinating journey.

1. Understanding the Basics of Neural Networks

Before diving into the training process, it’s crucial to grasp the basics of how neural networks work. A neural network consists of layers of interconnected nodes, known as neurons. Each neuron receives inputs, computes a weighted sum of them plus a bias, and passes the result through an activation function (such as a sigmoid or ReLU) to produce an output value. The weights determine how strongly each input influences the neuron, and the bias shifts the point at which it activates. During training, these weights and biases are adjusted to optimize the network’s performance.
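A neuron and a layer of neurons each take only a few lines of code. This is a minimal sketch in plain Python. The weights, the bias, and the choice of sigmoid and ReLU activations are illustrative assumptions. Real libraries compute whole layers at once with matrix operations rather than Python loops.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def dense_layer(inputs, weight_rows, biases, activation=sigmoid):
    """A fully connected layer: several neurons that all read the same inputs,
    each with its own row of weights and its own bias."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

x = [1.0, 2.0]
print(neuron(x, [0.5, -0.25], 0.0))   # z = 0.5 - 0.5 + 0.0 = 0.0, sigmoid(0.0) = 0.5
print(dense_layer(x, [[1.0, 1.0], [-1.0, 0.5]], [-1.0, 0.0], activation=relu))  # [2.0, 0.0]
```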

Training a neural network involves two fundamental steps: feedforward and backpropagation. In the feedforward step, input data is passed through the network, and the output is computed. This output is then compared to the expected output, and an error metric (the loss) is calculated. In the backpropagation step, the chain rule is used to compute how much each weight and bias contributed to that error (its gradient). An optimization algorithm such as gradient descent then uses these gradients to adjust the weights and biases. This process is repeated iteratively until the network reaches a satisfactory level of accuracy.
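Backpropagation is the chain rule applied layer by layer. The sketch below uses a deliberately tiny network so that every gradient can be written out by hand: one input, one tanh hidden unit, and one linear output, trained with a squared-error loss. The parameter values and function names are hypothetical. It also includes a finite-difference check, a common way to confirm hand-derived gradients like these.

```python
import math

def forward(params, x):
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)      # hidden activation
    y_hat = w2 * h + b2             # linear output
    return h, y_hat

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(params, x, y):
    """Gradients of the loss with respect to (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y              # dL/dy_hat
    d_w2 = d_yhat * h
    d_b2 = d_yhat
    d_h = d_yhat * w2               # error passed backward to the hidden unit
    d_z1 = d_h * (1.0 - h * h)      # tanh'(z) = 1 - tanh(z)^2
    d_w1 = d_z1 * x
    d_b1 = d_z1
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used only to sanity-check backprop."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads

def sgd_step(params, grads, lr):
    """Plain gradient descent: move each parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

params = [0.3, -0.1, 0.8, 0.2]    # arbitrary starting values
x, y = 1.5, 0.4
before = loss(params, x, y)
after = loss(sgd_step(params, backprop(params, x, y), lr=0.1), x, y)
print(before, after)             # the loss should go down after one step
```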

2. Preparing Your Data

Data preparation is a crucial aspect of training a neural network. High-quality and well-structured data can significantly impact the performance of your network. Start by cleaning your data, removing any inconsistencies or outliers that could introduce biases. Then, split your data into training, validation, and testing sets.
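A shuffled three-way split takes only a few lines. This sketch assumes the examples fit in memory and that a simple random split is appropriate. The 70/15/15 proportions, the seed, and the function name are illustrative. For time series or grouped data (for example, several images of the same patient), a random split can leak information between sets, so a chronological or grouped split is safer.

```python
import random

def train_val_test_split(examples, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle, then carve off test and validation portions; the rest is training data."""
    items = list(examples)
    random.Random(seed).shuffle(items)   # shuffle so each split is a random sample
    n = len(items)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))   # 70 15 15
```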

The training set is used to adjust the network’s weights and biases, while the validation set helps you monitor the network’s progress, tune hyperparameters, and detect overfitting. Finally, the testing set is used to evaluate the network’s performance on unseen data. All three sets should reflect the same distribution as the data the network will face in practice (for example, with similar class proportions). Otherwise, validation and test results will be biased. Additionally, consider normalizing or standardizing your data to improve convergence during training, and compute the required statistics on the training set only.
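Standardization rescales a feature to zero mean and unit variance. The statistics must come from the training set only and then be reused, unchanged, on the validation and test sets. Computing them on all the data would leak information from the held-out sets. Here is a minimal sketch for a single numeric feature, using Python’s `statistics` module; the data values are made up.

```python
import statistics

def fit_standardizer(train_column):
    """Compute mean and standard deviation from the TRAINING data only."""
    mean = statistics.fmean(train_column)
    std = statistics.pstdev(train_column)
    return mean, (std if std > 0 else 1.0)   # avoid dividing by zero for a constant feature

def standardize(column, mean, std):
    """Apply previously fitted statistics; never refit on validation or test data."""
    return [(v - mean) / std for v in column]

train_col = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
test_col = [5.0, 13.0]
mean, std = fit_standardizer(train_col)   # mean 5.0, population std 2.0
print(standardize(test_col, mean, std))   # [0.0, 4.0]
```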

3. Choosing the Right Network Architecture

The architecture of your neural network plays a crucial role in its performance. It determines the number of layers, the number of neurons in each layer, and the connections between them. Choosing the right architecture depends on the complexity and nature of your data. Deep neural networks with multiple hidden layers are suitable for complex tasks, while shallow networks with fewer layers might be sufficient for simpler problems.
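A quick way to compare candidate architectures is to count their trainable parameters. The count drives memory use and computation, and roughly tracks how easily a network can overfit. A fully connected layer with n_in inputs and n_out neurons has n_in * n_out weights plus n_out biases. The layer sizes below are hypothetical examples for a problem with 784 inputs (such as 28x28-pixel images) and 10 output classes.

```python
def count_dense_parameters(layer_sizes):
    """Total weights + biases in a fully connected network.
    layer_sizes lists the width of each layer, input layer first."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

shallow = [784, 128, 10]
deeper = [784, 512, 256, 128, 10]
print(count_dense_parameters(shallow))   # 101770
print(count_dense_parameters(deeper))    # 567434
```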

Experiment with different architectures, adjusting the number of layers, neurons, and activation functions to find the best fit for your task. Keep in mind that larger networks can be computationally expensive and more prone to overfitting, so regularize your network by applying techniques like dropout or L1/L2 regularization when necessary.
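L1 and L2 regularization add a penalty on the size of the weights to the loss being minimized. This is a minimal sketch. It assumes the penalty strengths `l1` and `l2` are hyperparameters you tune, and that biases are left unpenalized, which is a common but not universal convention.

```python
def l1_penalty(weights, lam):
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    return lam * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Total objective = data loss + optional L1 and L2 penalties on the weights."""
    return data_loss + l1_penalty(weights, l1) + l2_penalty(weights, l2)

def l2_gradient(weights, lam):
    # d/dw of lam * w^2 is 2 * lam * w. With plain gradient descent this shrinks
    # every weight toward zero by a fixed fraction each step, which is why L2
    # regularization is also called weight decay.
    return [2.0 * lam * w for w in weights]

weights = [0.5, -1.0, 2.0]
print(regularized_loss(0.3, weights, l2=0.01))   # 0.3 + 0.01 * 5.25
```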

4. Training Parameters and Techniques

When training a neural network, several parameters and techniques need to be considered to optimize performance. The learning rate determines the step size at which the network adjusts its weights and biases during backpropagation. A high learning rate may result in overshooting the optimal weights, while a low learning rate can lead to slow convergence. Experiment with different learning rates to find the sweet spot.
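The effect of the learning rate is easy to see on a one-dimensional toy problem. This sketch runs plain gradient descent on f(w) = w**2, whose gradient is 2w, so each step multiplies w by (1 - 2 * lr). The three learning rates are chosen only to illustrate the three regimes. The exact thresholds are specific to this function; for it, any learning rate above 1 diverges.

```python
def gradient_descent(lr, steps=50, w0=5.0):
    """Minimize f(w) = w**2 (minimum at w = 0) with a fixed learning rate."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w
        w -= lr * grad
    return w

for lr in (0.001, 0.1, 1.1):
    # 0.001: too small, w barely moves away from 5.0
    # 0.1:   converges close to 0
    # 1.1:   overshoots the minimum on every step and diverges
    print(lr, gradient_descent(lr))
```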

Batch size refers to the number of training samples presented to the network before weight updates occur. Large batch sizes can speed up training, but smaller batch sizes may provide better generalization. Another crucial parameter is the number of epochs, which defines the number of times the entire training set is presented to the network. Too few epochs can result in underfitting, while too many epochs can lead to overfitting.
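Batch size, epochs, and the number of weight updates are linked by simple arithmetic: each epoch performs one update per mini-batch. A minimal sketch follows; the 1,000 placeholder examples and the batch size of 32 are arbitrary.

```python
import math
import random

def minibatches(examples, batch_size, seed=None):
    """Yield shuffled mini-batches; one full pass over all of them is one epoch."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def updates_per_epoch(n_examples, batch_size):
    # One weight update per batch; the last batch may be smaller than the rest.
    return math.ceil(n_examples / batch_size)

data = list(range(1000))
batches = list(minibatches(data, batch_size=32, seed=0))
print(len(batches), updates_per_epoch(1000, 32), len(batches[-1]))   # 32 32 8
```

With 10 epochs, these settings give 320 weight updates. Halving the batch size to 16 roughly doubles that, which is one reason smaller batches often make each epoch slower.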

Lastly, techniques like early stopping, which halts training when the validation error starts to increase, and learning rate decay, which gradually reduces the learning rate over time, can be valuable in improving network performance and preventing overfitting.
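Both techniques need only a few lines of logic. The sketch below uses a “patience” rule for early stopping, which stops after a fixed number of epochs without improvement. For the learning rate it uses exponential decay. These are common variants, not the only ones, and the validation-loss curve is invented for illustration. In practice you would also keep a copy of the weights from the best epoch and restore them after stopping.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch): stop once the validation loss has not
    improved for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

def exponential_decay(initial_lr, decay_rate, epoch):
    """The learning rate shrinks by a constant factor every epoch."""
    return initial_lr * decay_rate ** epoch

# Illustrative validation curve: it improves, then starts rising (overfitting).
curve = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(curve))                                   # (6, 3)
print([round(exponential_decay(0.1, 0.5, e), 4) for e in range(4)])  # [0.1, 0.05, 0.025, 0.0125]
```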

5. Evaluating and Fine-Tuning Your Network

Once your network has been trained, it’s crucial to evaluate its performance and make any necessary adjustments. Start by assessing metrics such as accuracy, precision, recall, and F1-score to measure how well your network is performing. If the results are not satisfactory, consider revisiting your data, network architecture, or training parameters.
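For binary classification, these metrics come from counting true positives, false positives, and false negatives. This minimal sketch assumes labels 1 (positive) and 0 (negative), and the example labels are made up. Libraries such as scikit-learn provide equivalent, well-tested functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0   # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0      # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
```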

Fine-tuning your network involves adjusting its hyperparameters or introducing regularization techniques to improve performance. You can experiment with different optimization algorithms, activation functions, regularization methods, or network architectures until you achieve the desired results. It’s crucial to strike a balance between optimization and overfitting, as fine-tuning too much can result in a network that performs well on the training data but fails to generalize to unseen data.
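A systematic way to try hyperparameter combinations is a grid search scored on the validation set, with the test set kept untouched until the very end. In this minimal sketch, `evaluate` is a hypothetical callable that would train a model with the given hyperparameters and return its validation loss. Here it is replaced by a toy formula so the example runs on its own, and the grid values are illustrative.

```python
import itertools

def grid_search(evaluate, grid):
    """Try every combination in `grid`; keep the one with the lowest validation loss."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = evaluate(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

# Toy stand-in for "train, then measure validation loss"; not a real model.
def toy_validation_loss(learning_rate, dropout):
    return (learning_rate - 0.01) ** 2 * 1e4 + (dropout - 0.3) ** 2

grid = {"learning_rate": [0.1, 0.01, 0.001], "dropout": [0.0, 0.3, 0.5]}
print(grid_search(toy_validation_loss, grid))   # ({'learning_rate': 0.01, 'dropout': 0.3}, 0.0)
```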

6. Keeping Up with Latest Advances and Best Practices

The field of neural networks is continuously evolving, with new techniques and best practices emerging regularly. As a neural network practitioner, it’s essential to stay updated with the latest research and advancements in the field. Follow reputable journals, attend conferences and workshops, and engage with the online machine learning community to stay on top of current trends.

Additionally, be proactive in experimenting with new architectures, optimization algorithms, and regularization techniques to push the boundaries of your network’s performance. Participate in Kaggle competitions or other data science challenges to gain practical experience and learn from the achievements and techniques of others.

With a solid understanding of neural network fundamentals, data preparation, network architecture, training techniques, and continuous learning, you’ll be well-equipped to tackle the challenges of training neural networks effectively.

Advancements in Neural Network Training

Neural network training has come a long way since its inception. Over the years, researchers and practitioners have developed numerous advancements and techniques to enhance the training process and improve network performance. Let’s explore some of the notable advancements that have revolutionized neural network training.

**1. Transfer Learning:** Transfer learning allows you to leverage pre-trained models and transfer their knowledge to new, related tasks. Instead of training a network from scratch, you can take advantage of the features learned by a model on a large-scale dataset. This technique saves time and computational resources while achieving good performance on new tasks with limited data.
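In its simplest form, transfer learning keeps a pre-trained feature extractor frozen and trains only a new output layer on your task. This toy sketch uses a made-up “pre-trained” layer (`PRETRAINED`) in place of a real model’s learned weights. It trains a logistic-regression head on four hypothetical examples. Full fine-tuning would additionally unfreeze some or all of the pre-trained layers, usually with a small learning rate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Stand-in for a pre-trained feature extractor. In a real project these weights
# would come from a model trained on a large dataset; here they are made up.
PRETRAINED = [[1.0, 1.0], [1.0, -1.0]]

def extract_features(x):
    """Frozen layer of ReLU units: reads PRETRAINED but never updates it."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED]

def train_head(data, epochs=2000, lr=0.1):
    """Train only a new logistic-regression output layer on the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            p = sigmoid(w[0] * f[0] + w[1] * f[1] + b)
            g = p - y                     # cross-entropy gradient at the output
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict(w, b, x):
    f = extract_features(x)
    return 1 if sigmoid(w[0] * f[0] + w[1] * f[1] + b) >= 0.5 else 0

# Hypothetical new task: label 1 when the first value is larger than the second.
TASK_DATA = [([2.0, 1.0], 1), ([1.0, 2.0], 0), ([3.0, 0.0], 1), ([0.0, 3.0], 0)]
w, b = train_head(TASK_DATA)
print([predict(w, b, x) for x, _ in TASK_DATA])
```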

**2. Batch Normalization:** Batch normalization is a technique that normalizes a layer’s inputs using the mean and variance computed over each mini-batch, then applies a learned scale and shift. It was originally proposed as a way to reduce “internal covariate shift.” In practice it stabilizes and accelerates training, allows higher learning rates, and reduces the impact of vanishing or exploding gradients. Batch normalization also has a mild regularizing effect, which can reduce the need for other regularization techniques like dropout.
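Here is a minimal sketch of the forward computation for one feature over a mini-batch. It subtracts the batch mean, divides by the batch standard deviation (with a small `eps` for numerical safety), then applies the learnable scale `gamma` and shift `beta`. The values are made up. A full implementation would also track running averages of the mean and variance for use at inference time, when there may be no batch to normalize over.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then apply a learned scale and shift."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n
    normalized = [(v - mean) / math.sqrt(var + eps) for v in batch]
    return [gamma * v + beta for v in normalized]

activations = [2.0, 4.0, 6.0, 8.0]
out = batch_norm(activations)
print([round(v, 3) for v in out])   # roughly zero mean, unit variance
```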

**3. Adaptive Learning Rates:** Adam (Adaptive Moment Estimation) is an optimization algorithm that adapts the learning rate for each parameter individually. It combines per-parameter adaptive step sizes with momentum, which often leads to fast convergence with relatively little tuning. Adam has become a popular choice for neural network training due to its robustness and ease of use.
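The Adam update keeps two running averages per parameter. One tracks the gradients (momentum) and the other tracks their squares, which scale the step size; both are bias-corrected because they start at zero. This minimal sketch handles a single scalar parameter. `beta1`, `beta2`, and `eps` use commonly cited defaults, while the learning rate of 0.1 and the toy objective (w - 3)**2 are assumptions for this example. With many parameters, the same update is applied element-wise.

```python
import math

def adam_minimize(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function given its gradient, using the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g          # running mean of gradients (momentum)
        v = beta2 * v + (1 - beta2) * g * g      # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)             # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)   # per-parameter adaptive step
    return w

# Minimize f(w) = (w - 3)**2, whose gradient is 2 * (w - 3).
print(adam_minimize(lambda w: 2 * (w - 3), w0=0.0))   # close to 3
```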

**4. Generative Adversarial Networks (GANs):** GANs are a class of neural networks that consist of two components: a generator and a discriminator. The generator tries to produce realistic data, while the discriminator tries to distinguish between real and generated data. Through an adversarial training process, both components improve their performance iteratively. GANs have revolutionized tasks like image synthesis and style transfer, pushing the boundaries of what neural networks can achieve.
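GAN training alternates between two objectives, both computed from the discriminator’s outputs, which are probabilities that a sample is real. The sketch below shows one common formulation: binary cross-entropy for the discriminator and the “non-saturating” loss for the generator. It applies them to hypothetical discriminator outputs and leaves out the networks themselves and the gradient steps on each loss.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(real) toward 1 and D(fake) toward 0.
    d_real and d_fake are lists of D's output probabilities."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: it falls as D rates G's samples as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that is winning: confident on real data, rejects the fakes.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))   # low: D is doing well
print(generator_loss([0.1, 0.05]))                    # high: G needs to improve
```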

**5. Regularization Techniques:** Regularization techniques like dropout, weight decay, and early stopping have played a significant role in enhancing the training of neural networks. Dropout randomly deactivates a fraction of neurons during training, preventing overfitting and improving network generalization. Weight decay adds a penalty to the loss function based on the weights, encouraging the network to learn simpler and more general representations. Early stopping halts the training process when the validation error starts to increase, preventing overfitting and enhancing network performance.
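Dropout is usually implemented in its “inverted” form. During training, each activation is zeroed with probability `p` and the survivors are scaled by 1 / (1 - p), so nothing needs to change at inference time. Here is a minimal sketch on a plain list of activations; the drop rate and inputs are illustrative.

```python
import random

def dropout(activations, p, training, rng=random):
    """Inverted dropout: during training, zero each unit with probability p and
    scale the survivors by 1 / (1 - p) so the expected activation is unchanged.
    At inference time (training=False) the input passes through untouched."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
out = dropout([1.0] * 10000, p=0.5, training=True, rng=rng)
print(sum(out) / len(out))   # close to 1.0 on average
```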

**6. Reinforcement Learning:** Reinforcement learning involves training a neural network to make decisions based on rewards or punishments received from its environment. This technique has been applied to complex tasks like game playing, autonomous navigation, and robotics. By employing concepts from psychology and neuroscience, reinforcement learning has advanced the field of intelligent systems and expanded the capabilities of neural networks.

Training Neural Networks for Specific Tasks

Neural networks can be trained for a wide variety of tasks, from image recognition to language translation. Let’s explore how the training process differs for different applications:

**1. Image Classification:** To train a neural network for image classification, start by gathering a large labeled dataset of images. Preprocess the images by resizing them to a consistent size and normalizing the pixel values. Choose an architecture suitable for image classification, such as convolutional neural networks (CNNs). Train the network by presenting the images as inputs and their corresponding labels as targets. Fine-tune the network using techniques like data augmentation, regularization, and hyperparameter tuning.
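Two of these steps are easy to show on a tiny image stored as nested lists of 8-bit grayscale values (a hypothetical 2x3 example). The first is scaling pixels to [0, 1]. The second is a horizontal flip, one of the simplest data-augmentation transforms. Resizing is normally done with an image library and is omitted here. Flips only make sense when mirroring does not change the label: a mirrored cat is still a cat, but a mirrored digit may not be the same digit.

```python
def normalize_pixels(image):
    """Scale 8-bit pixel values (0-255) to the range [0, 1]."""
    return [[px / 255.0 for px in row] for row in image]

def horizontal_flip(image):
    """Data augmentation: mirror each row left-to-right."""
    return [list(reversed(row)) for row in image]

img = [[0, 128, 255],
       [64, 32, 16]]
print(normalize_pixels(img))
print(horizontal_flip(img))   # [[255, 128, 0], [16, 32, 64]]
```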

**2. Natural Language Processing (NLP):** NLP tasks, such as sentiment analysis or text classification, require a different approach. Preprocess the text data by tokenizing it, optionally removing stopwords (with care, since words such as “not” can matter for sentiment), and converting the tokens into numerical representations like word embeddings or one-hot encodings. Choose an architecture suitable for NLP, such as recurrent neural networks (RNNs) or transformers. Train the network by presenting the textual inputs and their corresponding labels or target outputs. Use techniques like pretrained word embeddings, attention mechanisms, and language-model pretraining to enhance network performance.
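Here is a minimal sketch of the first preprocessing steps in plain Python. It covers lowercase tokenization with a regular expression, a tiny hypothetical stopword list, a vocabulary that maps each word to an integer id, and one-hot encoding. “not” is deliberately left off the stopword list, since dropping negations can flip the meaning in sentiment analysis. In practice the integer ids would usually feed an embedding layer, and modern transformer models use subword tokenizers instead.

```python
import re

# Tiny illustrative stopword list; real projects use larger, task-specific lists.
STOPWORDS = {"the", "a", "an", "is", "was", "and"}

def tokenize(text):
    """Lowercase and split into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def build_vocab(texts):
    """Assign each new non-stopword token the next integer id."""
    vocab = {}
    for text in texts:
        for tok in tokenize(text):
            if tok not in STOPWORDS and tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    """Map tokens to ids; unknown words are simply skipped in this sketch."""
    return [vocab[t] for t in tokenize(text) if t in vocab]

def one_hot(index, size):
    vec = [0] * size
    vec[index] = 1
    return vec

corpus = ["The movie was great", "The plot is not great"]
vocab = build_vocab(corpus)
print(vocab)                               # {'movie': 0, 'great': 1, 'plot': 2, 'not': 3}
print(encode("not a great movie", vocab))  # [3, 1, 0]
```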

**3. Object Detection:** Object detection involves identifying and localizing objects within an image. This task requires bounding box annotations in addition to image labels. To train a neural network for object detection, use a dataset with bounding box annotations and corresponding labels. Choose an architecture suitable for object detection, such as a combination of CNNs and region proposal networks (RPNs). Train the network by presenting the images as inputs and their corresponding bounding box coordinates and labels as targets. Fine-tune the network using techniques like anchor box optimization and multi-scale training, and apply non-maximum suppression to its raw predictions to remove duplicate detections of the same object.
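Two building blocks of object detection are intersection over union (IoU) and greedy non-maximum suppression (NMS). IoU measures how much two boxes overlap, and NMS uses it to remove duplicate detections of the same object. This minimal sketch assumes boxes given as (x1, y1, x2, y2) corner coordinates. The boxes, scores, and 0.5 threshold are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it
    by more than the threshold, and repeat. Returns indices of kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))   # [0, 2]: box 1 duplicates box 0
```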

**4. Generative Modeling:** Generative models aim to generate new data that resembles a given training dataset. They are commonly used for tasks like image synthesis, text generation, and music composition. To train a generative model, choose an appropriate architecture such as generative adversarial networks (GANs) or variational autoencoders (VAEs). Prepare a dataset of the desired data type (e.g., images or text) and train the network to generate outputs that resemble the training data. Use techniques like Wasserstein distance, latent space interpolation, and label conditioning to improve the quality and diversity of generated samples.
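Wasserstein distance measures how much “mass” must move to turn one distribution into another. For two equal-size samples of one-dimensional values, it has a simple closed form: sort both samples and average the absolute differences. The sketch below uses invented “real” and “generated” samples to show the distance shrinking as the generated data gets closer. Wasserstein GANs do not compute it this way; for high-dimensional data they estimate it with a trained critic network.

```python
def wasserstein_1d(samples_a, samples_b):
    """Wasserstein-1 (earth mover's) distance between two equal-size 1-D samples:
    sort both and average the absolute differences of matched values."""
    if len(samples_a) != len(samples_b):
        raise ValueError("this simple version needs equal-size samples")
    a, b = sorted(samples_a), sorted(samples_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

real = [0.0, 1.0, 2.0, 3.0]
generated_early = [5.0, 5.5, 6.0, 6.5]
generated_late = [0.2, 1.1, 1.9, 3.2]
print(wasserstein_1d(real, generated_early))   # 4.25
print(wasserstein_1d(real, generated_late))    # much smaller
```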

**5. Time Series Analysis:** Time series analysis involves predicting future values based on historical data points. To train a neural network for time series analysis, preprocess the data by splitting it into sequential input-output pairs. Choose an architecture suitable for sequential data, such as recurrent neural networks (RNNs) or long short-term memory (LSTM) networks. Train the network by presenting the input sequences and their corresponding target output sequences. Use techniques like sliding window prediction, stateful training, and attention mechanisms to improve prediction accuracy.
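Splitting a series into input-output pairs is usually done with a sliding window. This minimal sketch assumes a univariate series, a window of `input_len` past values, and a target of the next `horizon` values; the numbers are made up. When creating validation and test sets for time series, split chronologically rather than randomly so the model is never evaluated on data that comes before its training data.

```python
def sliding_windows(series, input_len, horizon=1):
    """Turn a series into (input window, target) pairs for supervised training."""
    pairs = []
    for start in range(len(series) - input_len - horizon + 1):
        x = series[start:start + input_len]
        y = series[start + input_len:start + input_len + horizon]
        pairs.append((x, y))
    return pairs

series = [10, 11, 12, 13, 14, 15]
for x, y in sliding_windows(series, input_len=3):
    print(x, "->", y)   # [10, 11, 12] -> [13], then [11, 12, 13] -> [14], ...
```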

**6. Reinforcement Learning:** Training neural networks with reinforcement learning involves an agent interacting with an environment and learning a policy that maximizes the cumulative reward. Preprocess the environment state into a suitable representation, such as images or state vectors. Design a reward function that provides feedback to the agent based on its actions. Choose an architecture suitable for reinforcement learning, such as deep Q-networks (DQNs) or policy gradient models. Train the network by iteratively optimizing the policy using techniques like Q-learning, policy gradients, or actor-critic methods.
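Deep Q-networks build on the Q-learning update rule, with a neural network in place of a lookup table. To show the rule itself, here is a minimal tabular Q-learning sketch on a hypothetical five-state corridor. The agent starts at the left end and earns a reward of 1 for reaching the right end. The environment and the hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions.

```python
import random

def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning. Actions: 0 = move left, 1 = move right.
    Reaching the last state gives reward 1 and ends the episode."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: usually take the best-known action (ties broken
            # randomly), but explore a random action with probability epsilon.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[s])
                a = rng.choice([act for act in (0, 1) if q[s][act] == best])
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # Target: reward plus discounted value of the best next action
            # (no future value once the episode has ended).
            target = r if s_next == goal else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])   # temporal-difference update
            s = s_next
    return q

q = q_learning_corridor()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(len(q) - 1)]
print(policy)   # the learned greedy policy should move right in every state
```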

By understanding the nuances of training neural networks for specific tasks, you can tailor the process to achieve optimal performance and tackle a wide range of real-world challenges.

Key Takeaways: How do you train a neural network, you ask?

- Neural networks are trained with gradient descent, using backpropagation to compute the gradients.

- During training, the network adjusts the weights and biases of its individual neurons.

- The training data is fed to the network multiple times to improve its accuracy.

- Regularization techniques can be used to prevent overfitting and improve generalization.

- Training a neural network requires patience and experimentation to find the optimal settings.

Frequently Asked Questions

Training a Neural Network: Everything You Need to Know

Neural networks are an integral part of modern technology, powering applications such as image recognition and speech synthesis. If you’re curious about how these networks are trained, we’ve put together some frequently asked questions to help demystify the process. Read on to learn more!

1. How does training a neural network work?

Training a neural network involves feeding it with a large dataset and adjusting its internal parameters, called weights and biases, to minimize the difference between the network’s predictions and the desired outputs. The process consists of forward propagation, where data passes through the network’s layers, and backward propagation, where the network adjusts the weights based on the calculated errors.

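During training, the network learns to recognize patterns and make accurate predictions by iteratively adjusting the weights and fine-tuning its internal representation. This process typically continues until performance on held-out validation data stops improving or reaches a satisfactory level. Accuracy on the training data alone can be misleading, because an overfitted network can score well there and still fail on new data.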

2. What is the role of the loss function in training a neural network?

The loss function is a critical component in training a neural network. It quantifies the discrepancy between the predicted outputs and the actual desired outputs. By evaluating the loss, the network can determine how well it is performing and make adjustments to improve its predictions.

The choice of loss function depends on the specific problem being solved. For example, in classification tasks, where the goal is to assign input data to predefined categories, the cross-entropy loss function is commonly used. In regression tasks, where the goal is to predict a continuous value, the mean squared error (MSE) loss function is often used. By minimizing the loss function, the network can improve its predictive accuracy.
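Both losses are short formulas. This minimal sketch assumes the classifier already outputs a probability for each class (for example, from a softmax layer). The example numbers are made up, and the small `eps` guards against taking the log of zero.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Regression loss: average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(true_class, probabilities, eps=1e-12):
    """Classification loss for one example: -log(probability of the correct class)."""
    return -math.log(max(probabilities[true_class], eps))

print(mean_squared_error([3.0, 5.0], [2.5, 5.0]))   # 0.125
print(cross_entropy(0, [0.7, 0.2, 0.1]))             # about 0.357
print(cross_entropy(0, [0.1, 0.2, 0.7]))             # about 2.303: confident and wrong costs more
```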

3. How do you prevent overfitting during neural network training?

Overfitting occurs when a neural network becomes too specialized in the training data and fails to generalize well to unseen examples. To prevent overfitting, several techniques can be employed. One common approach is to use regularization techniques, such as L1 or L2 regularization, which add a penalty term to the loss function to constrain the complexity of the network.

Another technique is to incorporate dropout layers. Dropout randomly deactivates a fraction of the neurons during each training iteration, forcing the network to learn more robust and generalizable features. Additionally, techniques like early stopping and data augmentation can also help combat overfitting by terminating training early or artificially expanding the training dataset, respectively.

4. How long does it take to train a neural network?

The time required to train a neural network can vary greatly depending on the size and complexity of the network, as well as the amount and quality of the training data. Smaller networks with fewer layers and parameters tend to train faster than larger, more complex networks.

Training time can range from minutes for simpler tasks to several hours, days, or even weeks for more complex problems. Additionally, specialized hardware such as GPUs or TPUs can significantly speed up training by performing the underlying matrix operations in parallel.

5. Can a pre-trained neural network be used instead of training from scratch?

Yes, pre-trained neural networks can be a valuable tool, especially when working with limited data or limited computational resources. By using a pre-trained network, you can leverage the knowledge captured by the network from a previous task and apply it to a similar problem.

Transfer learning is a technique that involves using a pre-trained network as a starting point and fine-tuning it on a new, related task. By reusing the learned features and weights, the network can often achieve better performance in less time compared to training from scratch. This is particularly useful in scenarios where obtaining a large amount of labeled data may be impractical or time-consuming.

Summary

So, training a neural network is basically teaching a computer how to do something. You start with a bunch of examples, tell the computer what the right answer is for each example, and let it figure out how to get to the right answer on its own. This is done by adjusting the connections between artificial neurons based on the feedback it receives.

The process involves repeatedly running examples through the network, using a loss function to measure how well the network is doing, and using an optimizer to update the network’s parameters. It takes time and patience, but with practice and more data, a neural network can become really good at what it’s trained for. So, if you’re interested in training your own neural network, go ahead and give it a try!