When it comes to tackling the challenges of AI scalability, OpenAI is one of the organizations leading the way. So how exactly does OpenAI address these issues? Let’s find out! From investments in hardware to careful model design and distributed training, OpenAI has developed strategies that help AI systems grow and adapt effectively. In this article, we’ll explore the main ways OpenAI addresses challenges related to AI scalability. So buckle up and get ready to dive into the world of cutting-edge technology!

Contents

- How OpenAI Overcomes Challenges in AI Scalability

- Advancements in AI Scalability Achieved by OpenAI

- Conclusion

- Key Takeaways: How does OpenAI address challenges related to AI scalability?

- Frequently Asked Questions

- 1. How does OpenAI ensure scalability in AI models?

- 2. How does OpenAI handle the computational challenges in AI scalability?

- 3. What measures does OpenAI take to address the limitations of AI scalability?

- 4. How does OpenAI ensure the reliability and stability of scalable AI systems?

- 5. How does OpenAI address the ethical considerations in scalable AI development?

- Summary

How OpenAI Overcomes Challenges in AI Scalability

Artificial Intelligence (AI) has become an integral part of our lives, transforming industries and driving innovation. As AI advances, one of the critical challenges that arise is scalability. How can AI systems handle increasing amounts of data, more complex computations, and the growing demands of users? OpenAI, one of the leading research organizations in the field of AI, has taken significant steps to address these challenges and push the boundaries of AI scalability. In this article, we will explore how OpenAI tackles the obstacles related to AI scalability so that AI systems can keep up with the demands of the future.

1. Scaling Hardware Infrastructure

One of the primary challenges in AI scalability is the need for substantial computational power. OpenAI addresses this challenge by continuously scaling its hardware infrastructure. The organization invests in high-performance computing resources, including powerful GPUs and specialized hardware, to support the development and deployment of AI models. By expanding its hardware capabilities, OpenAI ensures that AI systems can process vast amounts of data and perform complex computations in a timely manner.

Furthermore, OpenAI collaborates with technology partners to benefit from their hardware advancements. Most notably, Microsoft has built Azure-hosted supercomputers for OpenAI’s training workloads. This collaboration lets OpenAI draw on the latest innovations in hardware and infrastructure, and through these efforts it can develop scalable solutions that keep pace with the increasing demands of AI applications.

One notable example of OpenAI’s reliance on cutting-edge hardware is its relationship with chipmaker Nvidia. OpenAI trains and runs its models on Nvidia GPUs, which are essential for training and serving complex AI models, and this access to state-of-the-art accelerators supplies much of the computational power OpenAI needs to address the scalability challenges in AI.

2. Building Efficient and Parallelizable Models

Another vital aspect of AI scalability is the design of models that can efficiently process and parallelize computations. OpenAI invests significant resources into research and development to create models that are not only powerful but also scalable. The organization focuses on designing architectures that can handle large-scale datasets and distribute computations across multiple devices in a parallelized manner.

Parallelization divides a task into smaller subtasks that can be executed simultaneously on different processors, significantly reducing the wall-clock time required for computation. OpenAI incorporates parallel processing techniques into its models and training systems, which lets it meet the computational demands of complex AI tasks instead of waiting on a single processor to do all the work.
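As a minimal sketch of this divide-and-combine pattern, the Python below splits one computation into independent chunks, runs them concurrently with the standard library’s `ThreadPoolExecutor`, and then combines the partial results. The function names are illustrative and not taken from any OpenAI codebase. Because CPython threads do not speed up pure-Python arithmetic, real training systems apply the same pattern across processes, GPUs, or machines instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_parts):
    """Split `data` into `n_parts` contiguous chunks of near-equal size."""
    size, rem = divmod(len(data), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """One independent subtask: it needs only its own slice of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    """Divide the task, run the subtasks concurrently, then combine the results."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)  # combine ("reduce") step


print(parallel_sum_of_squares(list(range(1, 1001))))  # 333833500
```

The combine step only works because each subtask is independent; tasks whose pieces depend on each other need extra communication between workers.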

In addition to designing efficient and parallelizable models, OpenAI also emphasizes the importance of model compression and optimization. Techniques such as quantization (storing weights at lower numerical precision) and distillation (training a smaller model to imitate a larger one) reduce a model’s memory footprint and computational cost while aiming to preserve most of its accuracy. This optimization is crucial for scalability, because a leaner model can serve more users and handle more requests on the same hardware without overwhelming computational resources.
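To make the compression idea concrete, here is a small sketch of symmetric 8-bit quantization, one common compression technique (not necessarily the one OpenAI uses). Each float weight is mapped to an integer in [-127, 127] using a single shared scale factor. For float32 weights this would cut storage from 4 bytes to 1 byte per weight, at the cost of a rounding error of at most half the scale. The weight values are made up for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: one scale maps floats onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


weights = [0.52, -1.27, 0.003, 0.9, -0.31]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(max_error, 4))
```

Production schemes often use per-channel scales or calibration data to shrink the error further; a single per-tensor scale keeps the sketch short.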

3. Embracing Distributed Computing

A significant challenge in AI scalability is the ability to distribute computations across multiple devices and systems effectively. OpenAI tackles this challenge by leveraging distributed computing techniques. By splitting computations across multiple devices, such as GPUs or CPUs, OpenAI can process large amounts of data and execute complex AI models more efficiently.

OpenAI has used distributed computing frameworks such as TensorFlow and, after standardizing on it in 2020, PyTorch, both of which can spread computations across many processing units. These frameworks enable OpenAI to scale AI systems horizontally, adding more devices to share the work as the workload increases. By leveraging distributed computing, OpenAI can meet the growing demands of scalability without sacrificing performance.
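The core of data-parallel training can be sketched in a few lines. In this simplified, assumed setup, each simulated worker holds a full copy of a one-parameter linear model and computes the mean-squared-error gradient on its own shard of the batch. An averaging step stands in for the all-reduce that frameworks such as PyTorch’s `DistributedDataParallel` perform across GPUs. With equal-sized shards, the averaged gradient equals the full-batch gradient, so adding workers changes how fast a step runs but not what it computes.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the average."""
    return sum(values) / len(values)


def data_parallel_step(w, xs, ys, n_workers, lr=0.01):
    """One SGD step in which each worker sees only its shard of the batch.

    Assumes len(xs) is divisible by n_workers so all shards have equal size.
    """
    shard = len(xs) // n_workers
    local_grads = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(n_workers)
    ]
    g = all_reduce_mean(local_grads)  # synchronize gradients across workers
    return w - lr * g, g


xs = [float(i) for i in range(1, 9)]
ys = [3.0 * x for x in xs]  # synthetic data generated with true weight 3.0
w = 0.0
for _ in range(50):
    w, _ = data_parallel_step(w, xs, ys, n_workers=4)
print(round(w, 4))  # converges to the true weight, 3.0
```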

Furthermore, OpenAI makes use of distributed data storage and processing, such as cloud-based solutions. Storing and processing data on distributed systems improves scalability because capacity can grow by adding nodes, and different parts of a dataset can be processed in parallel. OpenAI’s embrace of distributed computing technologies enables it to harness the power of multiple devices and systems, effectively addressing the scalability challenges in AI.
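A common way to spread data across storage nodes is hash partitioning. The sketch below, with hypothetical record keys and shard counts, assigns each record to a shard using `zlib.crc32`, a stable hash. Python’s built-in `hash()` is randomized per process for strings, so different nodes using it could disagree about where a record lives.

```python
import zlib
from collections import defaultdict


def shard_for(key: str, n_shards: int) -> int:
    """Stable placement: every node computes the same shard for the same key."""
    return zlib.crc32(key.encode("utf-8")) % n_shards


def partition(records, n_shards):
    """Group (key, value) records by shard; each group could live on its own node."""
    shards = defaultdict(list)
    for key, value in records:
        shards[shard_for(key, n_shards)].append((key, value))
    return dict(shards)


records = [(f"doc-{i}", f"text {i}") for i in range(10)]
shards = partition(records, n_shards=3)
for shard_id in sorted(shards):
    print(shard_id, [key for key, _ in shards[shard_id]])
```

One design caveat: with plain modulo placement, changing the number of shards moves most keys to new shards, which is why larger systems often use consistent hashing instead.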

4. Collaborating with the AI Community

OpenAI recognizes that solving the challenges of AI scalability requires collaboration and knowledge sharing within the AI community, so it actively collaborates with leading researchers and organizations. By fostering collaboration, OpenAI can share insights, exchange ideas, and jointly develop solutions that drive AI scalability forward.

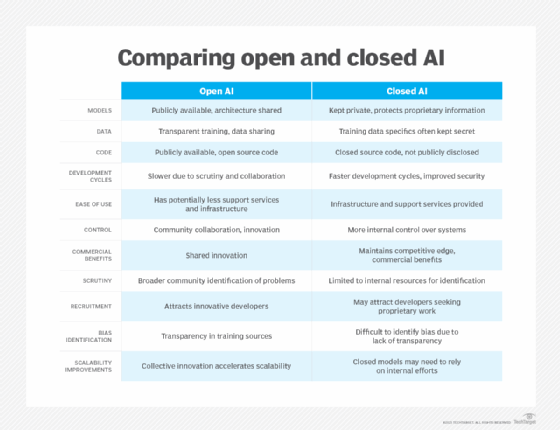

OpenAI publishes many of its research findings and, for some projects, shares code implementations, allowing the wider AI community to benefit from its advancements. By making this work open and accessible, OpenAI encourages collaboration and accelerates progress in AI scalability. This collaborative approach means the challenges related to AI scalability are tackled from many angles, with various perspectives and expertise contributing to innovative solutions.

Moreover, OpenAI actively participates in conferences, workshops, and forums focused on AI scalability, where researchers and practitioners discuss and share their experiences. These gatherings provide valuable opportunities to learn from others, explore new approaches, and establish partnerships. OpenAI’s commitment to collaboration strengthens the collective efforts in addressing the challenges of AI scalability and paves the way for further advancements in the field.

Advancements in AI Scalability Achieved by OpenAI

OpenAI’s efforts to address the challenges of AI scalability have resulted in significant advancements and breakthroughs in the field. The organization’s contributions have pushed the boundaries of what is achievable in AI and have paved the way for future developments. Here are three prominent advancements OpenAI has achieved in AI scalability:

1. Efficient Language Models

OpenAI has developed language models that can process and generate text after training on vast amounts of textual data. These models, such as GPT-3 (Generative Pre-trained Transformer 3, with 175 billion parameters), demonstrate impressive language generation capabilities and show that performance keeps improving as models and datasets grow. By optimizing the design and computational requirements of language models, OpenAI allows AI systems to process and generate text at a large scale, opening up possibilities for various applications.
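To give a sense of the scale involved, a widely used rule of thumb estimates training compute as roughly 6 FLOPs per parameter per training token. Plugging in GPT-3’s 175 billion parameters and the roughly 300 billion training tokens reported in the GPT-3 paper gives about 3.15 × 10^23 FLOPs. The GPU-time conversion uses a hypothetical 312 TFLOP/s peak per GPU and an assumed 40% utilization; both are illustrative inputs, not figures OpenAI has reported for its own training runs.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule of thumb: about 6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


def gpu_days(total_flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Convert a FLOP budget into GPU-days at an assumed sustained utilization."""
    seconds = total_flops / (peak_flops_per_s * utilization)
    return seconds / 86_400  # seconds per day


# GPT-3 scale: 175B parameters, ~300B training tokens.
compute = training_flops(175e9, 300e9)
print(f"{compute:.2e} FLOPs")
# Hypothetical hardware: 312 TFLOP/s peak per GPU, assumed 40% utilization.
print(f"{gpu_days(compute, 312e12, 0.40):,.0f} GPU-days")
```

Under those assumptions the estimate comes to roughly 29,000 GPU-days, which is why runs of this size are spread across thousands of GPUs working in parallel.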

This advancement has significant implications for natural language processing tasks, such as chatbots, translation services, and content generation. OpenAI’s language models can handle the complexity and scale of these tasks, often producing accurate and contextually appropriate responses. When served on sufficient hardware, these models respond quickly enough for interactive use, making them applicable in scenarios that require rapid processing and decision-making.

OpenAI’s efficient language models serve as a testament to the organization’s dedication to achieving scalability without compromising performance. By addressing the challenges related to language understanding and generation in large-scale models, OpenAI has made significant strides in enhancing AI scalability.

2. Reinforcement Learning at Scale

OpenAI has also made notable advancements in the area of reinforcement learning at scale. Reinforcement learning is a method of training AI agents to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties. Scaling reinforcement learning to handle complex tasks has been a challenge, primarily due to the computational demands it imposes.
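The interaction loop described above can be illustrated with an epsilon-greedy multi-armed bandit, one of the simplest reinforcement learning settings. In this hypothetical example, the agent repeatedly picks one of three actions, receives a noisy reward from the environment (a negative value acts as a penalty), and updates a running estimate of each action’s value. Most of the time it exploits the best-looking action, and occasionally it explores a random one.

```python
import random


def run_bandit(arm_rewards, steps=1_000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit.

    arm_rewards: mean reward of each action (negative means a penalty on average).
    """
    rng = random.Random(seed)
    n_arms = len(arm_rewards)
    estimates = [0.0] * n_arms  # running average reward observed per action
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = arm_rewards[arm] + rng.gauss(0, 0.1)  # environment feedback
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
    return estimates, counts


estimates, counts = run_bandit([-0.5, 0.2, 0.8])
print([round(e, 2) for e in estimates], counts)
```

Real tasks replace the three fixed actions with long sequences of decisions in a rich environment, which is where the computational cost described above comes from.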

To overcome this challenge, OpenAI developed methods that let reinforcement learning algorithms make use of massive amounts of compute. Through distributed computing and optimization techniques, OpenAI can scale reinforcement learning to complex tasks that were previously considered computationally infeasible.
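Large-scale reinforcement learning systems commonly separate many actors, which interact with copies of the environment to generate experience, from a learner that trains on the pooled data. The sketch below shows only the experience-collection half of that pattern, using a hypothetical toy environment (a random walk on a number line) and a fixed policy. The learner update is omitted, and threads stand in for the many machines a real system would use.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def rollout(actor_id, p_right, steps, seed):
    """One actor interacts with a toy environment (a random walk on a number line)
    and records (actor_id, state, action, reward) tuples."""
    rng = random.Random(seed)
    state, trajectory = 0, []
    for _ in range(steps):
        action = 1 if rng.random() < p_right else -1  # fixed stochastic policy
        state += action
        reward = 1.0 if state > 0 else -0.1  # reward for staying right of the origin
        trajectory.append((actor_id, state, action, reward))
    return trajectory


def collect_batch(n_actors, steps, p_right=0.7):
    """Run many actors concurrently and pool their experience for a learner."""
    with ThreadPoolExecutor(max_workers=n_actors) as pool:
        futures = [pool.submit(rollout, i, p_right, steps, i) for i in range(n_actors)]
        trajectories = [f.result() for f in futures]
    return [step for traj in trajectories for step in traj]


batch = collect_batch(n_actors=8, steps=100)
mean_reward = sum(step[3] for step in batch) / len(batch)
print(len(batch), round(mean_reward, 3))
```

Adding actors grows the batch of experience per training step roughly linearly, which is the main lever such systems use to turn more hardware into faster learning.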

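These advancements in reinforcement learning at scale have enabled OpenAI to achieve remarkable results in domains such as gaming, where OpenAI Five defeated the reigning Dota 2 world champions in 2019, and robotics, where a robotic hand trained in simulation learned the dexterous manipulation needed to solve a Rubik’s Cube. OpenAI’s breakthroughs in this area demonstrate the organization’s commitment to pushing the boundaries of AI scalability and its potential applications.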

3. Democratizing AI

OpenAI’s efforts in addressing AI scalability challenges go beyond technological advancements. The organization is committed to making AI accessible and inclusive. OpenAI aims to democratize AI by providing tools, resources, and frameworks that enable developers, researchers, and organizations to harness the power of AI at scale.

OpenAI has developed libraries and APIs that allow developers to easily integrate AI models into their applications and services. These tools abstract the complexities of AI scalability, enabling developers to focus on the application logic while leveraging OpenAI’s scalable models. By providing user-friendly and accessible interfaces, OpenAI empowers a broader range of users to utilize AI capabilities and contribute to the advancements in AI scalability.
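As a rough illustration of what such an API call looks like without any SDK, the sketch below builds a request to OpenAI’s Chat Completions endpoint using only the standard library, and retries with exponential backoff when the API answers HTTP 429 (rate limited). The model name `gpt-4o-mini`, the retry limits, and the helper names are example assumptions; in practice the official `openai` Python package handles these details for you.

```python
import json
import os
import time
import urllib.error
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-4o-mini", api_key=None):
    """Build (but do not send) a Chat Completions request."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )


def backoff_delays(max_retries=5, base=1.0, cap=30.0):
    """Exponential backoff schedule in seconds: 1, 2, 4, ... capped at `cap`."""
    return [min(cap, base * 2 ** i) for i in range(max_retries)]


def send_with_retry(req, max_retries=5):
    """Send the request, waiting and retrying whenever the server returns 429."""
    for delay in backoff_delays(max_retries) + [None]:
        try:
            with urllib.request.urlopen(req) as resp:
                return json.loads(resp.read())
        except urllib.error.HTTPError as err:
            if err.code != 429 or delay is None:  # other errors, or out of retries
                raise
            time.sleep(delay)


# Usage (needs network access and a valid OPENAI_API_KEY):
# reply = send_with_retry(build_chat_request("Say hello in one word."))
# print(reply["choices"][0]["message"]["content"])
```

Backing off on rate-limit responses is how a client cooperates with a shared, heavily loaded service instead of adding to the load.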

Furthermore, OpenAI actively supports educational initiatives, providing resources and courses to educate and train individuals in AI. By equipping individuals with the knowledge and skills to work with AI technologies, OpenAI helps foster a more inclusive and diverse AI community. This commitment to democratizing AI not only addresses the existing challenges of scalability but also ensures that future developments in AI are driven by a diverse range of perspectives and insights.

Conclusion

In conclusion, OpenAI has taken significant steps to address the challenges related to AI scalability. Through scaling hardware infrastructure, building efficient and parallelizable models, embracing distributed computing, and collaborating with the AI community, OpenAI has achieved remarkable advancements in the field of AI scalability. These advancements have resulted in efficient language models, reinforcement learning at scale, and the democratization of AI.

OpenAI’s commitment to pushing the boundaries of AI scalability and making AI accessible sets a precedent for the future of AI research and development. By addressing the challenges related to AI scalability, OpenAI paves the way for more powerful, efficient, and scalable AI systems that can handle the complexities of the future.

Key Takeaways: How does OpenAI address challenges related to AI scalability?

- OpenAI uses distributed computing to scale AI models across multiple machines.

- They employ techniques like model parallelism and data parallelism to make AI systems more scalable.

- OpenAI focuses on developing efficient algorithms that optimize resource utilization for better scalability.

- They invest in research and development to improve scalability and performance of AI models.

- OpenAI collaborates with the tech community to address challenges and share best practices in scaling AI technologies.

Frequently Asked Questions

In order to address challenges related to AI scalability, OpenAI employs various strategies and approaches. Here are some commonly asked questions regarding how OpenAI tackles these challenges:

1. How does OpenAI ensure scalability in AI models?

OpenAI addresses scalability in AI models by focusing on both hardware and software improvements. They use distributed computing systems, such as clusters of GPUs, to train large-scale models efficiently. On the software side, OpenAI builds on frameworks such as PyTorch for distributed training and has released its own open-source tools, like the Triton language for writing efficient GPU code. These advancements in hardware and software enable OpenAI to scale AI models to handle larger datasets and more complex tasks.

Moreover, OpenAI also invests in research and development to improve the efficiency of AI algorithms, reducing the computational requirements for training and deploying models. By continuously optimizing both hardware and software aspects, OpenAI ensures scalability in AI models.

2. How does OpenAI handle the computational challenges in AI scalability?

OpenAI tackles computational challenges by leveraging cloud computing resources. They rely on powerful cloud infrastructure, most notably from their partner Microsoft Azure, to distribute the computational workload across many machines. This allows OpenAI to efficiently process large amounts of data and perform the complex computations required for training and running AI models.

Additionally, OpenAI employs techniques such as model parallelism and data parallelism. Model parallelism splits a model that is too large for a single device into parts (for example, groups of layers or slices of individual weight matrices) placed on different devices, which work together on each input. Data parallelism gives every device a full copy of the model and a different slice of each training batch, then averages the resulting gradients so all copies stay in sync. These strategies help in effectively handling the computational challenges and achieving scalable AI solutions.
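Model parallelism can be sketched with a single layer. In this illustrative example, in the style of the tensor (intra-layer) parallelism popularized by NVIDIA’s Megatron-LM, a weight matrix is split by output columns across two simulated devices. Each device multiplies the input by its own slice, and concatenating the partial outputs reproduces the full result. Real systems do this on GPUs with communication libraries; plain lists are used here only for clarity.

```python
def matmul(x, w):
    """Row vector x (length d_in) times matrix w (d_in rows, d_out columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, n_devices):
    """Give each simulated device a contiguous block of output columns.
    Assumes d_out is divisible by n_devices."""
    block = len(w[0]) // n_devices
    return [[row[k * block:(k + 1) * block] for row in w] for k in range(n_devices)]


def model_parallel_matmul(x, w, n_devices):
    """Each device holds only its slice of w and computes only its slice of the
    output; concatenating the slices (an all-gather) reproduces x @ w."""
    shards = split_columns(w, n_devices)
    partial_outputs = [matmul(x, shard) for shard in shards]  # would run in parallel
    return [v for part in partial_outputs for v in part]


x = [1.0, 2.0, 3.0]
w = [[1, 0, 2, -1],
     [0, 1, 1, 0],
     [1, 1, 0, 2]]
print(model_parallel_matmul(x, w, n_devices=2))  # [4.0, 5.0, 4.0, 5.0]
```

Each device stores only half of the weights, which is the point: a layer too large for one device's memory can still be computed across several.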

3. What measures does OpenAI take to address the limitations of AI scalability?

OpenAI takes several measures to address the limitations of AI scalability. They invest in research and development to improve the efficiency and effectiveness of AI algorithms, enabling better scalability. OpenAI also collaborates with the research community and industry partners to share knowledge and insights, fostering the development of scalable AI solutions.

Furthermore, OpenAI has released open-source libraries and tools, such as the tiktoken tokenizer and the OpenAI Gym reinforcement learning toolkit, that others can integrate into their own systems. Sharing tools this way makes it easier to build scalable AI solutions into existing workflows or applications. By adopting a collaborative and open approach, OpenAI works to address the limitations of AI scalability.

4. How does OpenAI ensure the reliability and stability of scalable AI systems?

OpenAI prioritizes the reliability and stability of scalable AI systems through rigorous testing and validation procedures. They conduct extensive testing to identify and fix potential issues or vulnerabilities in their AI models and systems before deployment. OpenAI also emphasizes the use of best practices in software engineering, such as version control, automated testing, and continuous integration, to ensure the stability and reliability of their software pipelines.

In addition, OpenAI actively collects feedback from users and the research community to gather insights and improve the performance and robustness of their scalable AI systems. By continuously monitoring and refining their models and systems, OpenAI aims to provide reliable and stable AI solutions at scale.

5. How does OpenAI address the ethical considerations in scalable AI development?

OpenAI places a strong emphasis on ethical considerations in scalable AI development. They prioritize the principles of transparency, fairness, and accountability in their AI systems. OpenAI actively engages in responsible AI research and works to design and train its models and systems to reduce biases and discriminatory behavior.

Moreover, OpenAI seeks outside input through partnerships and collaborations with organizations and experts in AI ethics. They also encourage public discourse and solicit feedback from a wide range of stakeholders, including users and the general public, to address ethical concerns in their AI development processes. By taking these measures, OpenAI strives to develop and deploy scalable AI systems that are ethically sound and beneficial to society.

Summary

OpenAI faces challenges in scaling AI since training models requires a lot of computing power and resources. They use techniques like model parallelism and data parallelism to distribute the workload and train models more efficiently. By breaking down the process and utilizing multiple resources, OpenAI can tackle large-scale AI projects.

OpenAI also develops new techniques to improve AI scalability, such as algorithms that reduce the computational cost of training models, and methods to distribute a model’s parameters across multiple machines. These innovations enable OpenAI to handle complex AI tasks while making the most of available resources. Through their scalability efforts, OpenAI aims to create AI technologies that can benefit everyone.