Do you ever wonder if artificial intelligence has its own biases? That’s a question many people have been asking about Chat GPT.

Chat GPT, a powerful language model, has the ability to generate human-like responses in conversational settings. But here’s the thing: it learns from vast amounts of training data. And that training data has biases of its own.

So, the big question is: Is Chat GPT influenced by the biases in its training data? Let’s dig deeper to find out!

To answer that question, it helps to look at how Chat GPT is trained. It learns from a vast amount of online text, which inevitably includes biases. OpenAI, the organization behind Chat GPT, acknowledges this issue and is actively working on reducing biases in AI systems. Chat GPT can be a useful tool, but it’s essential to approach its responses critically and be aware of potential biases.

Is Chat GPT influenced by the biases in its training data?

Welcome to our in-depth exploration of the question: Is Chat GPT influenced by the biases in its training data? Chat GPT is an advanced language model developed by OpenAI that uses deep learning techniques to generate human-like text. While it has been praised for its ability to engage in conversational interactions with users, concerns have been raised about the potential biases present in its training data and the impact this may have on its responses. In this article, we will delve into this topic, examining the origins of Chat GPT’s training data, the potential biases, and the implications for users.

Overview of Chat GPT

Before we dive into the question of biases in Chat GPT’s training data, let’s first understand what this remarkable language model is capable of. Trained on a vast corpus of text from the internet, Chat GPT is designed to generate text that is coherent and contextually relevant. It can engage in conversations, answer questions, provide explanations, and even tell jokes.

Through its sophisticated learning algorithms, Chat GPT picks up patterns and structures in the training data, allowing it to generate responses that mimic human conversation. However, as powerful as this AI is, it is essential to consider the potential biases that may originate from the data it was trained on.

Training Data Bias: Origins and Concerns

One of the primary concerns about Chat GPT relates to the biases that may be present in its training data. The training process involved exposing the AI model to a wide variety of text sources from the internet. This extensive dataset could inadvertently include content that reflects societal biases, prejudices, and discriminatory language.

This raises the question: if Chat GPT has learned from biased data, will it not perpetuate and reinforce those biases in its responses? Studies have shown that language models can exhibit biased behavior due to the biases present in their training data. Users may encounter biased responses that echo the prejudices embedded in the training corpus, potentially leading to harmful or discriminatory outcomes.
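To make the mechanism concrete, here is a minimal sketch in Python. It is not how Chat GPT works internally; it is a toy co-occurrence counter, and the corpus, word lists, and function names are all hypothetical, chosen only to show how a skew in training text can resurface in a model’s predictions.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus. The skew is deliberate and purely illustrative.
CORPUS = [
    "the nurse said she was tired",
    "the nurse said she would help",
    "the nurse said he was late",
    "the engineer said he was busy",
    "the engineer said he would call",
    "the engineer said she was ready",
]

PRONOUNS = {"he", "she"}
OCCUPATIONS = {"nurse", "engineer"}


def train(corpus):
    """Count which pronouns share a sentence with each occupation."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for occupation in OCCUPATIONS.intersection(words):
            for word in words:
                if word in PRONOUNS:
                    counts[occupation][word] += 1
    return counts


def pronoun_probabilities(counts, occupation):
    """Turn raw counts for one occupation into relative frequencies."""
    total = sum(counts[occupation].values())
    return {p: n / total for p, n in counts[occupation].items()}


def predict(counts, occupation):
    """Return the pronoun seen most often with the occupation."""
    return counts[occupation].most_common(1)[0][0]


counts = train(CORPUS)
for occupation in sorted(OCCUPATIONS):
    print(occupation, pronoun_probabilities(counts, occupation),
          "->", predict(counts, occupation))
```

The toy model always answers "he" for engineer and "she" for nurse, even though the corpus contains counterexamples. Nothing in the code is prejudiced; the skew comes entirely from the data, which is the concern raised above.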

Understanding the origins and concerns surrounding training data bias is vital for promoting transparency, fairness, and responsible AI development. Addressing these concerns requires a comprehensive examination of the training data sources and the processes employed by OpenAI to mitigate potential biases.

The Origins of Chat GPT’s Training Data

The training data used for Chat GPT is sourced from a wide range of publicly available text found on the internet. The model learns from books, websites, and other text-based sources to develop an understanding of the nuances and complexities of human language. While this approach allows for exposure to a vast array of information, the diverse nature of the training data carries both advantages and risks.

On the one hand, the internet offers a wealth of information that enables Chat GPT to generate rich, contextually appropriate responses. It learns by observing and analyzing patterns in language usage across various domains and topics, resulting in an impressive capacity to generate human-like text.

On the other hand, the open nature of the internet means that the training data can incorporate biased, offensive, or otherwise problematic content. This can include material that perpetuates stereotypes, discriminates against certain groups, or promotes misinformation. The challenge for developers is to strike a balance between leveraging the vastness of the internet and minimizing the potential harm posed by biased content.

Biases in Chat GPT’s Responses and Their Implications

Despite the efforts made by OpenAI to address potential biases during the AI model’s development, some biases may still persist in Chat GPT’s responses. When faced with prompts that touch upon sensitive or controversial topics, the model may inadvertently produce biased or inappropriate responses derived from the patterns it learned during training.

While OpenAI has implemented measures to mitigate explicit biases and offensive content, they acknowledge that the model’s responses may still not meet the desired standards of fairness and inclusivity. This highlights the ongoing challenge of ensuring unbiased AI systems and the need for continuous improvement and transparency in their development.

The implications of biases in Chat GPT’s responses are significant. Inaccurate or biased information generated by the model can spread misinformation or perpetuate prejudices. It can reinforce existing social biases and contribute to discrimination, particularly when interacting with vulnerable populations. Recognizing and mitigating these biases is crucial for building AI systems that positively impact society.

Key Takeaways: Is Chat GPT influenced by the biases in its training data?

- Chat GPT, an advanced AI language model, can be influenced by biases present in its training data.

- Biased training data may result in Chat GPT generating biased or discriminatory responses.

- Efforts are being made to mitigate biases by giving human reviewers clearer instructions during fine-tuning and by investing in research on bias reduction.

- Continual monitoring and updates are crucial to ensure Chat GPT learns from diverse perspectives and avoids reinforcing existing biases.

- Community feedback plays an important role in identifying and addressing biases in Chat GPT.

Frequently Asked Questions

Welcome to our FAQ section where we answer your most pressing questions about Chat GPT and its training data biases. Here, we address concerns and provide insights regarding the influence of biases in Chat GPT’s training data.

Q1: How is Chat GPT trained and does it have biases?

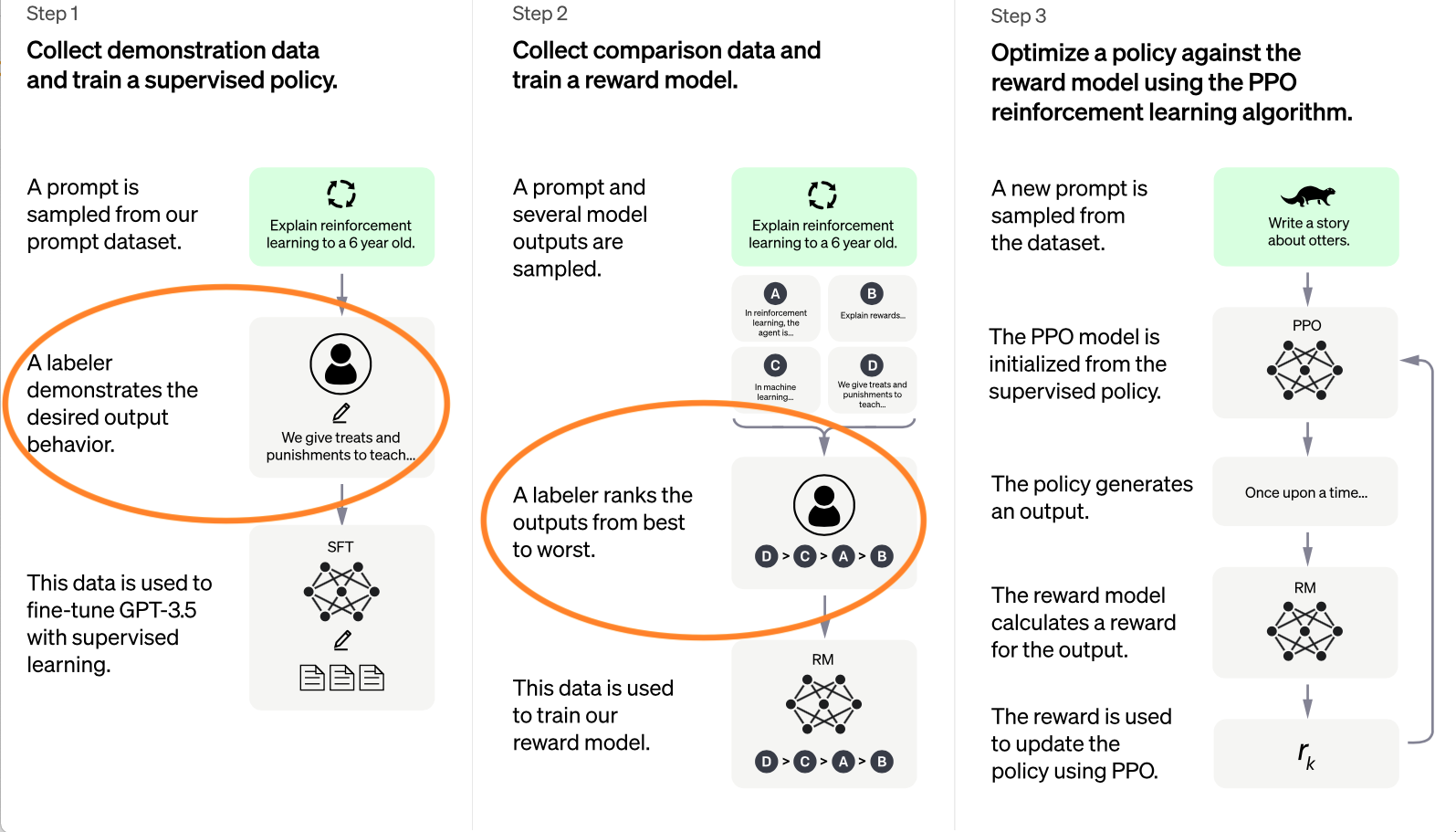

Chat GPT goes through a two-step training process. First, it is pretrained on a large corpus of internet text, which exposes it to a wide range of knowledge. During this phase, the model can learn biases present in that text. The second step is fine-tuning, where human reviewers follow guidelines intended to make the model more useful and safe and to reduce potential biases. However, biases can still emerge: fine-tuning does not necessarily undo everything learned during pretraining, and guidelines cannot anticipate every prompt.

To address these biases, OpenAI is working diligently to improve its guidelines and provide clearer instructions to reviewers. They are also investing in research and engineering to reduce biases and make the fine-tuning process more understandable and controllable.
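The two-step idea can be sketched with a toy counting model in Python. This is an illustration under strong simplifying assumptions, not OpenAI’s method: the data, the `weight` parameter, and the function names are hypothetical, and a real fine-tuning run updates neural network weights rather than counts.

```python
from collections import Counter


def pretrain(corpus):
    """Step 1: count (occupation, pronoun) pairs found in raw text."""
    counts = Counter()
    for occupation, pronoun in corpus:
        counts[(occupation, pronoun)] += 1
    return counts


def fine_tune(counts, curated, weight):
    """Step 2: add reviewer-curated pairs, each counted `weight` times."""
    updated = Counter(counts)
    for occupation, pronoun in curated:
        updated[(occupation, pronoun)] += weight
    return updated


def share(counts, occupation, pronoun):
    """Fraction of an occupation's examples that use the given pronoun."""
    total = sum(n for (occ, _), n in counts.items() if occ == occupation)
    return counts[(occupation, pronoun)] / total


# Hypothetical data: raw text is skewed 9 to 1, curated data is balanced.
raw = [("nurse", "she")] * 9 + [("nurse", "he")] * 1
curated = [("nurse", "she"), ("nurse", "he")]

base = pretrain(raw)
tuned = fine_tune(base, curated, weight=5)

print(share(base, "nurse", "she"))             # 0.9
print(round(share(tuned, "nurse", "she"), 2))  # 0.7
```

In this sketch the balanced second step pulls the skew from 0.9 down to 0.7 but does not remove it, which mirrors the point above that biases can persist after fine-tuning.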

Q2: What kind of biases might be present in Chat GPT?

Biases that could be present in Chat GPT include those related to race, gender, religion, politics, and other societal factors. Since the model is trained on internet text, which may contain biased content, it can inadvertently learn and reproduce those biases. Bias can also arise from potential limitations in the training and fine-tuning processes.

OpenAI recognizes the importance of mitigating these biases and is actively working to address them. They are committed to reducing both glaring and subtle biases to produce a fairer and less biased AI model.

Q3: How does OpenAI address biases in Chat GPT’s training data?

To combat biases, OpenAI is focused on providing clearer instructions to human reviewers during the fine-tuning process. They are working on sharing aggregated demographic information about reviewers to address any potential biases that may arise. OpenAI aims to improve the clarity of guidelines and ensure that reviewers understand their role in reducing biases within the model.

OpenAI also encourages transparency and welcomes external input in order to help them identify and address biases effectively. By involving the public, they strive to create a more inclusive and fair AI system.

Q4: What actions can users take to reduce biases in Chat GPT?

OpenAI encourages users to provide feedback that helps identify and reduce biases in Chat GPT. Users can report instances where the model responds to prompts in biased ways by using the feedback options in the Chat GPT interface. By highlighting these issues, users contribute to the collaborative effort to improve the model’s performance and reduce biases.

OpenAI also relies on the collective intelligence of its user base to guide its research on reducing biases and making the fine-tuning process more transparent. By giving feedback, users can play an active role in shaping a less biased AI model.
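One way a user might look for responses worth reporting is to send the same prompt with only a group term swapped and compare the answers. The Python sketch below illustrates that idea. Everything in it is an assumption made for illustration: `fake_model` is a hypothetical stand-in with canned replies, not a real Chat GPT call, and the template and group names are invented.

```python
from itertools import combinations


def counterfactual_prompts(template, groups):
    """Fill the same template once per group term."""
    return {group: template.format(group=group) for group in groups}


def normalize(response, group):
    """Mask the group term so only the rest of the answer is compared."""
    return response.lower().replace(group.lower(), "<group>")


def differing_pairs(template, groups, ask):
    """Return pairs of groups whose masked answers are not identical."""
    prompts = counterfactual_prompts(template, groups)
    answers = {g: normalize(ask(p), g) for g, p in prompts.items()}
    return [(a, b) for a, b in combinations(groups, 2)
            if answers[a] != answers[b]]


def fake_model(prompt):
    """Hypothetical stand-in for a chatbot; replies are canned."""
    if "grandmother" in prompt:
        return "A grandmother might enjoy knitting."
    if "grandfather" in prompt:
        return "A grandfather might enjoy woodworking."
    return "A teenager might enjoy woodworking."


groups = ["grandmother", "grandfather", "teenager"]
flagged = differing_pairs("Suggest a hobby for a {group}.", groups, fake_model)
print(flagged)
# [('grandmother', 'grandfather'), ('grandmother', 'teenager')]
```

A difference between answers is not proof of bias, since some differences are appropriate. The flagged pairs are only candidates for a human to review before deciding whether to submit feedback.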

Q5: How does OpenAI plan on making Chat GPT’s biases more understandable and controllable?

OpenAI is committed to making the fine-tuning process more transparent and controllable. They aim to provide clearer instructions to reviewers by refining their guidelines and addressing potential pitfalls related to biases. By doing so, they hope to minimize any unintended amplification of biases present in the training data.

OpenAI is also investing in research to improve the model’s default behavior and to let users customize the AI’s responses within certain bounds. This approach is intended to give users more control over the AI system, making it a more useful and responsible tool. OpenAI welcomes public input on defining these bounds so that decisions about the AI’s behavior are made collectively.

Summary

Chat GPT, a language model developed by OpenAI, relies on training data from the internet. However, this data can sometimes contain biases that are passed on to the model. Biases can lead the model to provide inappropriate or incorrect responses, which raises concerns about the system’s reliability. OpenAI is working on improving Chat GPT by reducing both glaring and subtle biases in its responses, but challenges remain. It is important for users to be aware of these biases and use the system with caution.

Despite efforts to enhance the accuracy and fairness of Chat GPT, biases cannot be completely eliminated. OpenAI acknowledges the need for community input and external auditing to address this issue. Users are encouraged to provide feedback on problematic outputs and contribute to ongoing research in order to make the system more reliable and less biased. While progress is being made, it is crucial for users to engage critically with AI systems and consider their limitations.