Have you ever wondered if there’s a way to make machine learning models even more powerful? Well, buckle up, because today we’re going to explore the exciting world of gradient boosting. So, is gradient boosting like a turbocharger for models? Let’s find out!

Imagine you have a model that predicts whether it’s going to rain tomorrow. It’s pretty accurate, but you want to take it to the next level. That’s where gradient boosting comes in. It’s like strapping a turbocharger onto your model, giving it an extra boost of performance!

But wait, what exactly is gradient boosting? Well, it’s a technique that combines multiple weak models to create a stronger and more accurate one. It’s like getting a team together to tackle a problem, where each new member concentrates on whatever the team is still getting wrong. Each model is trained on the errors left by the models before it, and their collective efforts lead to strong results.

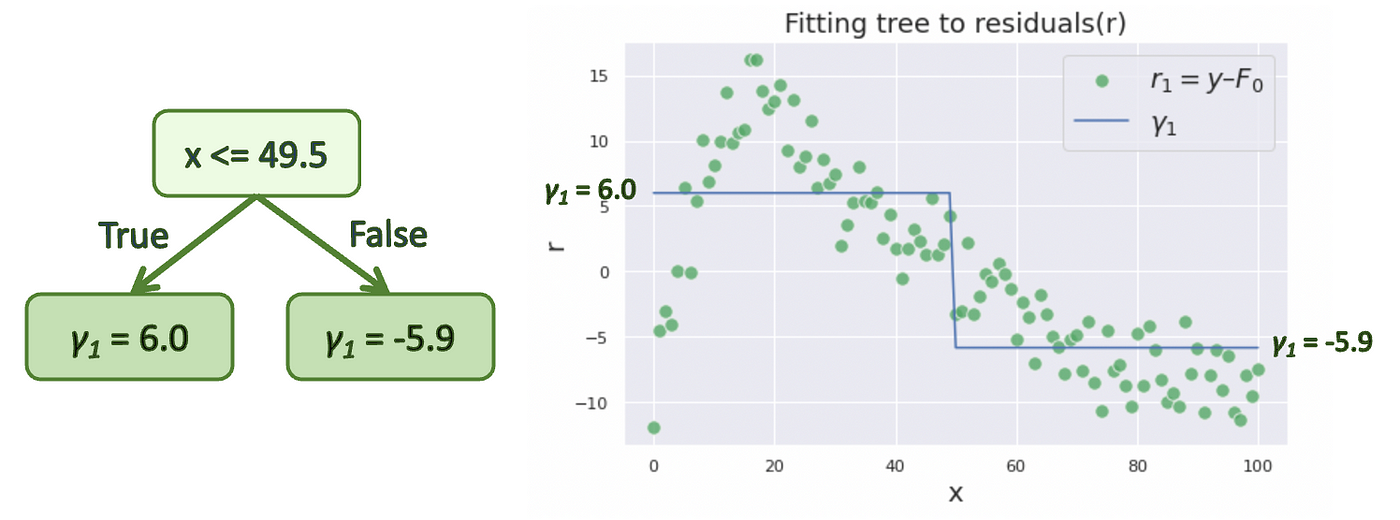

So, why is gradient boosting so effective? It’s all about learning from mistakes. Each weak model takes a shot at predicting the outcome but isn’t perfect. Gradient boosting uses the errors made by these models to adjust and fine-tune the subsequent models, improving the overall accuracy. It’s like learning from your mistakes and continuously improving until you reach your goal.

In a nutshell, gradient boosting is like a turbocharger for models. It takes them from good to great by combining their individual strengths and learning from their mistakes. So, fasten your seatbelt and get ready to explore the fascinating world of gradient boosting!

Is Gradient Boosting Like a Turbocharger for Models?

Welcome to our in-depth exploration of gradient boosting and its impact on model performance. In this article, we will dive into the world of gradient boosting, a powerful machine learning technique that has revolutionized the field. We will explore the concept of “turbocharging” models, examining the benefits, drawbacks, and tips for harnessing the power of gradient boosting. Join us as we unravel the mysteries of this cutting-edge approach and discover its true potential.

Understanding Gradient Boosting

Before we delve into the turbocharged effects of gradient boosting, let’s first understand what it is and how it works. Gradient boosting is an ensemble learning technique that combines multiple weak models, typically shallow decision trees, to create a stronger, more accurate model. It does so by adding models to the ensemble one at a time, with each new model fit to the errors of the ensemble so far (more precisely, to the negative gradient of the loss function, which is where the name comes from). Each addition is usually scaled down by a learning rate, so the ensemble reduces the overall error in small steps and gradually improves its predictive power.
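To make the iterative process concrete, here is a minimal from-scratch sketch. It assumes squared-error loss, a single input feature, and one-split decision stumps as the weak models. The function names (`fit_stump`, `fit_gradient_boosting`) and the toy sine data are made up for illustration and are not taken from any library.

```python
import math


def fit_stump(xs, residuals):
    """Fit a one-split regression stump to the residuals by least squares."""
    best = None
    # Every distinct value except the largest is a candidate threshold,
    # so both sides of the split are always non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def fit_gradient_boosting(xs, ys, n_estimators=50, learning_rate=0.1):
    """Return a predict(x) function built from sequentially fitted stumps."""
    base = sum(ys) / len(ys)  # start from a constant prediction
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_estimators):
        # For squared error, the negative gradient is just the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each correction by the learning rate before adding it.
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    return predict


def mean_squared_error(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data (made up for illustration): a noiseless sine curve.
xs = [i / 10 for i in range(40)]
ys = [math.sin(x) for x in xs]

mean_y = sum(ys) / len(ys)
baseline_mse = mean_squared_error(lambda x: mean_y, xs, ys)
model = fit_gradient_boosting(xs, ys)
boosted_mse = mean_squared_error(model, xs, ys)
print(f"constant model MSE: {baseline_mse:.4f}")
print(f"boosted stumps MSE: {boosted_mse:.4f}")
```

Each stump on its own is a very crude model, but because every round fits what the previous rounds left over, the training error of the ensemble falls below that of the constant starting prediction. Real libraries use deeper trees, many features, and far faster split search.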

Gradient boosting has gained popularity due to its ability to handle complex datasets and deliver high accuracy even with large feature sets. By capturing the relationships between variables and optimizing the model’s parameters, gradient boosting can uncover intricate patterns and make accurate predictions. With its impressive performance, gradient boosting has become a go-to choice for many data scientists and machine learning practitioners.

However, like any powerful tool, gradient boosting comes with its own challenges. The next section will provide a comprehensive overview of the benefits, drawbacks, and considerations when utilizing gradient boosting in your models.

The Benefits of Gradient Boosting

Gradient boosting offers several advantages that make it a standout technique in the world of machine learning. Let’s explore some of the key benefits:

- Improved Prediction Accuracy: Gradient boosting excels at handling complex datasets and capturing nonlinear relationships. It can integrate information from numerous weak models to create a powerful ensemble that delivers outstanding predictive accuracy.

- Feature Importance: Gradient boosting provides valuable insights into feature importance. By monitoring the contribution of each feature in the ensemble, it helps identify the key drivers behind the predictions, giving practitioners a deeper understanding of their data.

- Robustness to Outliers: Tree-based gradient boosting is largely insensitive to extreme values in the input features, because tree splits depend only on the ordering of values. Outliers in the target are a different matter: with squared-error loss they can dominate the fit, so robust losses such as Huber or absolute error are a better choice for noisy targets.
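How well boosting tolerates outliers in the target depends largely on the loss being minimized. The sketch below compares the quantity each new weak model is trained on (the negative gradient) under squared-error loss and under Huber loss. The function names, the `delta` value, and the data are illustrative assumptions.

```python
def squared_error_negative_gradient(y, pred):
    """Negative gradient of 0.5 * (y - pred)**2: the plain residual."""
    return y - pred


def huber_negative_gradient(y, pred, delta=1.0):
    """Negative gradient of the Huber loss: the residual, clipped to +/- delta."""
    residual = y - pred
    if abs(residual) <= delta:
        return residual
    return delta if residual > 0 else -delta


# Made-up targets: three ordinary points and one extreme outlier.
targets = [1.0, 1.2, 0.9, 50.0]
current_prediction = 1.0

squared = [squared_error_negative_gradient(y, current_prediction) for y in targets]
huber = [huber_negative_gradient(y, current_prediction) for y in targets]

print("squared error:", squared)
print("huber:        ", huber)
```

Under squared error the outlier contributes a target of 49.0 for the next weak model, which swamps the other points. Under Huber loss its contribution is capped at `delta`, so the next model is pulled far less toward the extreme value.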

With these benefits, gradient boosting has proven to be a powerful tool for various applications, ranging from fraud detection to risk assessment. However, it is crucial to acknowledge the potential drawbacks and challenges that come with this technique.

The Drawbacks and Considerations

While gradient boosting offers immense benefits, it is essential to consider its limitations and potential challenges. Here are a few factors to keep in mind:

- Complexity and Overfitting: Gradient boosting is inherently complex and can be prone to overfitting. It is crucial to fine-tune the model’s hyperparameters, such as the learning rate and number of estimators, to prevent overfitting and ensure optimal performance.

- Increased Training Time: Gradient boosting builds its models one after another, so the boosting rounds cannot be trained in parallel the way the trees of a random forest can. Training time grows with the number of weak models in the ensemble and the size of the dataset.

- Data Preprocessing and Feature Engineering: Tree-based gradient boosting does not need feature scaling, but results still depend on sensible preprocessing and feature engineering. Missing values, outliers, and categorical variables need to be handled appropriately (some libraries, such as XGBoost and LightGBM, handle missing values natively), and informative features can noticeably improve the model’s performance.

Despite these considerations, with the right approach and understanding, gradient boosting can be a game-changer in the field of machine learning. The next section will provide tips and best practices for maximizing the benefits of gradient boosting.

Tips for Harnessing Gradient Boosting

Now that we’ve examined the benefits and challenges of gradient boosting, let’s dive into some practical tips for harnessing its power effectively:

- Optimize Hyperparameters: Fine-tune the hyperparameters of the gradient boosting algorithm to ensure optimal performance and prevent overfitting. Experiment with different values for parameters such as learning rate, number of estimators, and maximum depth.

- Perform Cross-Validation: Implement cross-validation techniques when training and evaluating the model. This will help assess the model’s generalization ability and ensure robust performance on unseen data.

- Feature Selection: Utilize feature selection techniques to identify the most informative variables. Removing irrelevant or redundant features can enhance the model’s performance and reduce complexity.
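The first two tips can be combined into a small grid search scored by k-fold cross-validation. The sketch below is deliberately self-contained: it assumes a tiny hand-written booster over one-split stumps with squared-error loss, and all names (`fit_boosted_stumps`, `cv_mse`, the grid values, the noisy sine data) are hypothetical. In practice you would plug in your library’s estimator and its own search utilities.

```python
import itertools
import math
import random


def fit_boosted_stumps(xs, ys, n_estimators, learning_rate):
    """Tiny squared-error gradient booster over 1-D threshold stumps."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    thresholds = sorted(set(xs))[:-1]  # keeps both split sides non-empty
    stumps = []
    for _ in range(n_estimators):
        res = [y - p for y, p in zip(ys, preds)]
        best = None
        for t in thresholds:
            left = [r for x, r in zip(xs, res) if x <= t]
            right = [r for x, r in zip(xs, res) if x > t]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((r - lm) ** 2 for r in left)
                   + sum((r - rm) ** 2 for r in right))
            if best is None or sse < best[0]:
                best = (sse, t, lm, rm)
        _, t, lm, rm = best
        stumps.append((t, lm, rm))
        preds = [p + learning_rate * (lm if x <= t else rm)
                 for p, x in zip(preds, xs)]

    def predict(x):
        return base + learning_rate * sum(
            lm if x <= t else rm for t, lm, rm in stumps)

    return predict


def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cv_mse(xs, ys, k, **params):
    """Average held-out mean squared error over k folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train_x = [x for i, x in enumerate(xs) if i not in held]
        train_y = [y for i, y in enumerate(ys) if i not in held]
        model = fit_boosted_stumps(train_x, train_y, **params)
        fold_error = sum((model(xs[i]) - ys[i]) ** 2 for i in held_out)
        scores.append(fold_error / len(held_out))
    return sum(scores) / k


# Made-up data: a sine curve with a little Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(60)]
ys = [math.sin(x) + rng.gauss(0, 0.1) for x in xs]

grid = {"n_estimators": [10, 50], "learning_rate": [0.05, 0.3]}
results = {}
for n, lr in itertools.product(grid["n_estimators"], grid["learning_rate"]):
    results[(n, lr)] = cv_mse(xs, ys, k=5, n_estimators=n, learning_rate=lr)

best_params = min(results, key=results.get)
for params, score in sorted(results.items(), key=lambda item: item[1]):
    print(params, round(score, 4))
```

Because every score comes from data the model did not see during fitting, the ranking reflects generalization rather than how closely each setting can memorize the training set. Note how the learning rate and the number of estimators interact: a small learning rate with few estimators underfits.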

By following these tips and taking a thoughtful approach, you can harness the power of gradient boosting effectively and turbocharge your models to achieve remarkable results.

Real-World Applications of Gradient Boosting

Now that we have explored the ins and outs of gradient boosting, let’s take a closer look at its applications in real-world scenarios. Whether it’s predicting customer churn, detecting anomalies, or optimizing marketing strategies, gradient boosting has proven to be a valuable tool across various industries and domains. Let’s explore some of the exciting applications:

Application 1: Fraud Detection

Gradient boosting provides excellent results in fraud detection applications. By analyzing large volumes of transactional data and identifying patterns indicative of fraudulent behavior, gradient boosting models can effectively flag potential instances of fraud, helping organizations protect themselves and their customers.

Tips for Fraud Detection using Gradient Boosting:

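- Address class imbalance, for example by resampling the data or by giving the rare fraud class a higher weight during training.

- Consider optimized gradient boosting libraries, such as XGBoost and LightGBM, which are implementations of gradient boosting designed for speed and large datasets.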

- Use feature engineering techniques, including time-based features and aggregations, to capture temporal patterns and improve fraud detection.
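One common way to handle the class imbalance mentioned in these tips is to weight the rare class by the ratio of negative to positive examples. The sketch below computes that ratio on made-up labels. The variable names are illustrative; XGBoost exposes a parameter for this purpose called `scale_pos_weight`, and other libraries accept per-sample weights instead.

```python
from collections import Counter

# Made-up labels: 980 legitimate transactions (0) and 20 fraudulent ones (1).
labels = [0] * 980 + [1] * 20

counts = Counter(labels)
# Ratio of negatives to positives, often used as the positive-class weight.
pos_weight = counts[0] / counts[1]

# Equivalent per-sample weights for libraries that take a weight per row.
sample_weights = [pos_weight if y == 1 else 1.0 for y in labels]

total_pos = sum(w for w, y in zip(sample_weights, labels) if y == 1)
total_neg = sum(w for w, y in zip(sample_weights, labels) if y == 0)
print(pos_weight, total_pos, total_neg)
```

With these weights both classes carry the same total weight in the loss, so the model is no longer rewarded for simply predicting "not fraud" everywhere. The ratio is a starting point to tune, not a guaranteed optimum.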

Application 2: Demand Forecasting

Gradient boosting is well-suited for demand forecasting, where accurate predictions of future demand can drive efficient supply chain management and inventory optimization. By taking into account historical sales data, seasonality, and other factors, gradient boosting models can deliver reliable demand forecasts.

Tips for Demand Forecasting using Gradient Boosting:

- Consider transforming the target variable (for example, with a log transform when demand is heavily skewed) so that a few very large values do not dominate the loss.

- Incorporate external factors such as weather data or promotions to capture additional influences on demand.

- Experiment with different levels of temporal aggregation to strike a balance between capturing granular details and optimizing model complexity.
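Gradient boosting has no built-in notion of time, so historical sales usually reach the model as lag and rolling-window features. Here is a minimal sketch; the function name `make_lag_features`, the chosen lags, and the sales figures are all made up for illustration.

```python
def make_lag_features(series, lags=(1, 7), window=7):
    """Turn a time series into rows of past-only features plus the target."""
    rows = []
    start = max(max(lags), window)  # first index with a full history
    for t in range(start, len(series)):
        row = {f"lag_{k}": series[t - k] for k in lags}
        # The rolling mean uses only values strictly before time t.
        row[f"rolling_mean_{window}"] = sum(series[t - window:t]) / window
        row["target"] = series[t]
        rows.append(row)
    return rows


# Made-up daily sales for two weeks, with a weekend peak.
daily_sales = [10, 12, 11, 13, 15, 20, 22, 11, 12, 12, 14, 16, 21, 23]

rows = make_lag_features(daily_sales)
for row in rows[:2]:
    print(row)
```

Every feature is computed from values before the day being predicted, which avoids leaking the target into the inputs. External factors such as weather or promotions would be added as extra keys on each row.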

Application 3: Recommender Systems

Gradient boosting techniques have also found success in recommender systems, which play a crucial role in personalized recommendations across various platforms. By leveraging user preferences, historical data, and item features, gradient boosting models can accurately predict user preferences and deliver tailored recommendations.

Tips for Recommender Systems using Gradient Boosting:

- Utilize techniques like matrix factorization and collaborative filtering in conjunction with gradient boosting to improve recommendation accuracy.

- Consider incorporating signals such as user demographics, item popularity, or previous engagement to enhance the model’s performance.

- Implement ensemble methods, such as stacking or blending, to combine the strengths of multiple gradient boosting models.

These are just a few examples of how gradient boosting can be applied to real-world problems. The versatility and power of this technique make it an essential tool for practitioners across different domains.

In Conclusion

Gradient boosting is indeed like a turbocharger for models, often lifting accuracy well beyond what a single weak model can achieve. With its ability to handle complex datasets, capture intricate patterns, and deliver robust predictions, gradient boosting has proven to be a game-changer in the field of machine learning. By understanding the benefits, drawbacks, and considerations, as well as implementing best practices and tips, you can harness the full potential of gradient boosting and take your models to new heights.

Key Takeaways

Gradient boosting acts like a turbocharger for models, making them more powerful and accurate.

It combines multiple weak models into a strong ensemble, creating a more robust prediction.

Gradient boosting is widely used in machine learning competitions and real-world applications.

It helps overcome the limitations of individual models and improves overall performance.

By iteratively adding models to correct previous mistakes, gradient boosting achieves high accuracy.

Frequently Asked Questions

When it comes to enhancing models, gradient boosting is often compared to a turbocharger. Below are some common queries related to this analogy.

How does gradient boosting improve the performance of models?

Gradient boosting is a powerful machine learning technique that improves the performance of models by combining multiple weak models. It works by sequentially training new models to correct the errors made by the ensemble built so far. This process allows the overall model to learn from its mistakes and make better predictions.

Think of it this way: each new model in the boosting process is like a turbocharger for the overall model. It adds extra power and accuracy, allowing the model to make more precise predictions.
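For readers who prefer notation, the sequential correction described in this answer is commonly written as follows. The symbols are introduced here for illustration: $L$ is the loss function, $F_m$ the ensemble after $m$ rounds, $h_m$ the weak model added in round $m$, and $\nu$ the learning rate.

```latex
\begin{aligned}
F_0(x) &= \arg\min_{c} \sum_{i=1}^{n} L(y_i, c) \\
r_i^{(m)} &= -\left.\frac{\partial L\bigl(y_i, F(x_i)\bigr)}{\partial F(x_i)}\right|_{F = F_{m-1}} \\
h_m &= \arg\min_{h} \sum_{i=1}^{n} \bigl(r_i^{(m)} - h(x_i)\bigr)^2 \\
F_m(x) &= F_{m-1}(x) + \nu \, h_m(x)
\end{aligned}
```

For squared-error loss $L(y, F) = \tfrac{1}{2}(y - F)^2$, the quantity $r_i^{(m)}$ is simply the residual $y_i - F_{m-1}(x_i)$, which is why boosting is often described as fitting each new model to the previous errors.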

What are the benefits of using gradient boosting?

Gradient boosting offers several benefits that make it a popular choice in machine learning:

1. Improved Accuracy: By combining weak models, gradient boosting can achieve higher accuracy than using a single model alone.

2. Handling Complex Relationships: Gradient boosting can effectively capture complex relationships between features, allowing it to make accurate predictions even in highly non-linear scenarios.

3. Feature Importance: Gradient boosting provides insight into which features are most important for making predictions. This information can be valuable for feature selection and understanding the underlying patterns in the data.

Does gradient boosting work well with all types of models?

Gradient boosting can use a wide range of base learners, including decision trees, linear models, and even neural networks. In practice, it is particularly effective with shallow decision trees. The boosting process allows the trees to work together and compensate for each other’s weaknesses, resulting in a more robust and accurate model.

It’s worth mentioning that gradient boosting is not suitable for all situations. For example, if you have a very small dataset or need a model that is easy to interpret, gradient boosting may not be the best choice. In such cases, simpler techniques like regularized linear models or a single shallow decision tree may be more suitable.

Can gradient boosting overfit the data?

Gradient boosting is prone to overfitting if not properly tuned. Overfitting occurs when the model becomes too complex and starts to memorize the training data instead of generalizing well to new, unseen data.

To prevent overfitting, various techniques can be applied during the gradient boosting process, such as limiting the depth of the trees, adding regularization terms, or using early stopping to stop training when the model’s performance on the validation set starts to deteriorate. These techniques help strike the right balance between model complexity and generalization.
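Early stopping is simple enough to sketch in a few lines. The example below assumes you already have the validation loss after each boosting round. The function name, the `patience` parameter, and the loss values are illustrative and not tied to any particular library.

```python
def early_stopping_round(val_losses, patience=3):
    """Return the 1-based round with the best validation loss,
    stopping once `patience` rounds pass without improvement."""
    best_loss = float("inf")
    best_round = 0
    for round_number, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_round = loss, round_number
        elif round_number - best_round >= patience:
            break  # no improvement for `patience` rounds: stop training
    return best_round


# Made-up validation losses: improvement stalls after round 4.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.40]

stop_at = early_stopping_round(val_losses, patience=3)
print(stop_at)  # 4
```

Training halts at round 7 and keeps the ensemble from round 4, so the later drop to 0.40 is never seen. That is the trade-off behind the patience setting: a larger value wastes more rounds but is less likely to stop during a temporary plateau.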

Are there any alternatives to gradient boosting?

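Yes, there are other boosting algorithms, such as AdaBoost, which also aim to improve model performance by combining weak models but reweight the training examples instead of fitting gradients. Libraries such as XGBoost, LightGBM, and CatBoost are not alternatives to gradient boosting but optimized implementations of it, each with its own characteristics and strengths. The choice depends on the specific problem and data at hand.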

Aside from boosting techniques, other ways to build stronger models include bagging (e.g., random forests), stacking, and deep learning. The key is to understand the strengths and weaknesses of each technique and choose the one that best suits the problem domain and data characteristics.

Summary

Gradient boosting is like a turbocharger for models, making them more powerful and accurate. It combines multiple weaker models to create a stronger one. This boosts the model’s ability to make better predictions and can be used in various fields like finance and healthcare. However, it’s important to be cautious as gradient boosting can also overfit, which means it may become too focused on the training data and not be as good with new data. Overall, gradient boosting is a valuable tool that can enhance the performance of models and help us solve complex problems.