Do you want to know if transfer learning is a shortcut to model greatness? Well, you’ve come to the right place! In this article, we’ll explore the exciting world of transfer learning and uncover its potential in creating top-notch models. So, fasten your seatbelts as we dive into the fascinating realm of artificial intelligence and machine learning!

Imagine this: you’re a budding data scientist with dreams of creating groundbreaking models. But wait, there’s a catch! Building a model from scratch can be time-consuming and resource-intensive. That’s where transfer learning comes into play. It gives you a head start on your journey to model greatness by reusing knowledge that existing models have already learned. Let’s unravel the mystery behind transfer learning and see if it truly lives up to its reputation!

Are you ready to embark on an adventure that could revolutionize the way we train models? Transfer learning might just be the secret sauce you’ve been looking for! Get ready to explore its capabilities, benefits, and potential limitations. Whether you’re a curious learner or an aspiring data scientist, there’s something exciting waiting for you in the realm of transfer learning. Let’s unlock the doors to model greatness together!


Is Transfer Learning a Shortcut to Model Greatness?

In the realm of machine learning and artificial intelligence, transfer learning has emerged as a powerful technique that promises to accelerate model development and deliver strong results. By reusing pre-trained models and transferring what they have learned to new tasks, transfer learning can reduce training time and improve performance. But is transfer learning truly a shortcut to model greatness? In this article, we will explore how transfer learning works, its benefits and limitations, and when and how it can be used effectively.

The Basics: What is Transfer Learning?

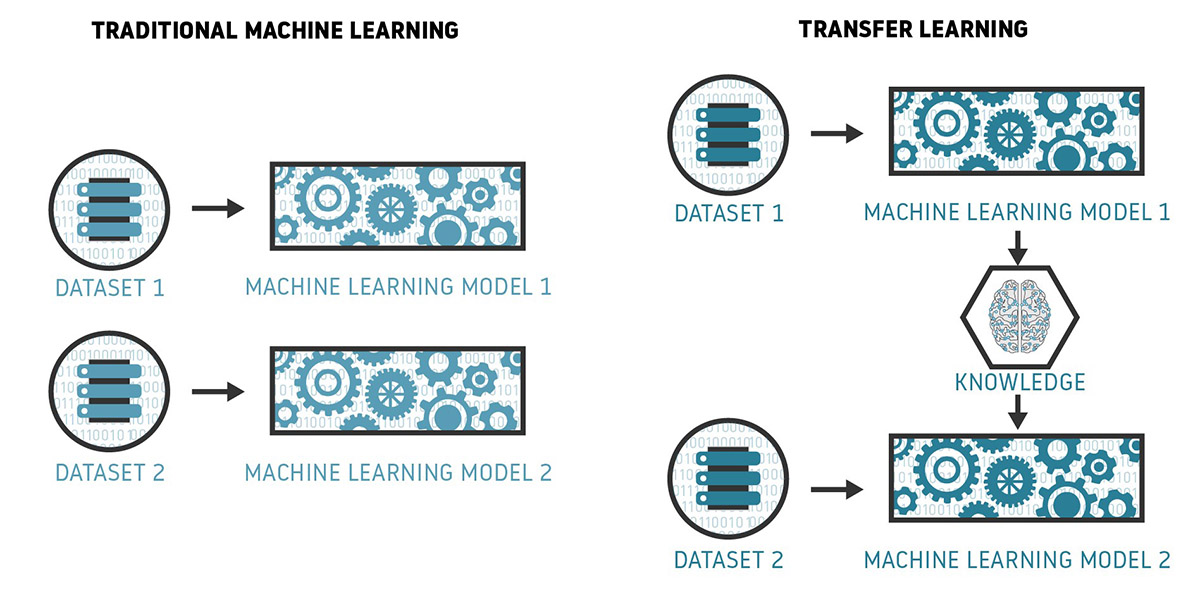

Before delving into the question of whether transfer learning is a shortcut to model greatness, it is important to understand the fundamentals of this technique. Transfer learning involves utilizing knowledge gained from solving one problem and applying it to a different but related problem. In the context of machine learning, it refers to using the knowledge acquired by a pre-trained model on a large dataset and transferring it to improve the performance of a model on a new, smaller dataset.

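Transfer learning involves two key steps. First, a pre-trained model is selected, typically one trained on a large dataset for a specific task, such as image classification or a natural language processing task like language modeling. Then, this pre-trained model is adapted to the target task, usually by replacing its output layer with one suited to the new task and fine-tuning it on a smaller dataset relevant to that task. Compared with training the same model from scratch on the small dataset, this process typically helps the model generalize better and perform more accurately on the new task.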
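The two steps can be sketched in plain Python. This is a toy illustration under stated assumptions, not how production frameworks implement it: the “pre-trained” feature extractor is a hypothetical, hard-coded weight matrix (`PRETRAINED_W`) standing in for weights loaded from a model trained on a large source dataset, and the new task-specific head is a small logistic-regression layer trained on a tiny, made-up target dataset. Only the head is updated; the extractor stays frozen.

```python
import math

# Hypothetical stand-in for pre-trained weights: each row maps a 2-value input
# to one feature. In practice these would be loaded from a source model.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def extract_features(x):
    """Frozen pre-trained layer: linear map followed by ReLU (never updated)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def head_output(x, head_w, head_b):
    """New task-specific head: logistic regression on the frozen features."""
    feats = extract_features(x)
    return sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)


def fine_tune_head(data, lr=0.1, epochs=500):
    """Step 2: train only the new head with SGD on the log-loss."""
    head_w, head_b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in data:
            err = head_output(x, head_w, head_b) - y  # d(log-loss)/d(logit)
            feats = extract_features(x)
            head_w = [w - lr * err * f for w, f in zip(head_w, feats)]
            head_b -= lr * err
    return head_w, head_b


def predict(x, head_w, head_b):
    return 1 if head_output(x, head_w, head_b) >= 0.5 else 0


# Tiny, made-up target dataset: (input, label) pairs.
target_data = [([2.0, 2.0], 1), ([1.5, 2.5], 1), ([0.1, 0.2], 0), ([0.0, 0.3], 0)]
head_w, head_b = fine_tune_head(target_data)
print([predict(x, head_w, head_b) for x, _ in target_data])
```

In a real deep learning framework, the same idea roughly amounts to loading pre-trained weights, replacing the output layer, and excluding the frozen layers from the optimizer.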

Benefits of Transfer Learning

1. Speeds up Model Training: One of the notable advantages of transfer learning is its ability to significantly reduce the time and computational resources required for training a model from scratch. By starting with a pre-trained model, which has already learned useful features from a large dataset, the model can quickly converge to a satisfactory solution on a smaller dataset.

2. Improved Generalization: Transfer learning enables a model to generalize better by leveraging the knowledge extracted from a pre-trained model. This is particularly useful when the target dataset is limited, noisy, or lacks diversity. Because the pre-trained model learned its features from a far larger and more varied dataset, the fine-tuned model is less likely to latch onto quirks of the small target dataset.

3. Effective in Low Data Regimes: In practical scenarios, obtaining a large annotated dataset can be costly and time-consuming. Transfer learning offers a solution by allowing models to perform well even with limited data. By transferring knowledge from a pre-trained model, it enhances the model’s ability to generalize and make accurate predictions with less training data.
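The speed-up from starting with pre-trained weights can be illustrated with a toy linear-regression problem. The assumption here is purely hypothetical: a related source task already produced the weights `[2.0, -1.0]`, while the target task’s true weights are `[2.2, -0.9]`. The sketch counts how many gradient-descent steps it takes to push the mean squared error below a small tolerance when starting from the source weights versus starting from zeros.

```python
# Toy target task: y = 2.2 * x0 - 0.9 * x1 (noise-free, made-up data).
TARGET_W = [2.2, -0.9]
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
data = [(x, TARGET_W[0] * x[0] + TARGET_W[1] * x[1]) for x in inputs]

# Hypothetical weights learned on a related source task.
SOURCE_W = [2.0, -1.0]


def mse(w, data):
    return sum((w[0] * x[0] + w[1] * x[1] - y) ** 2 for x, y in data) / len(data)


def grad(w, data):
    """Gradient of the mean squared error with respect to w."""
    g = [0.0, 0.0]
    for x, y in data:
        residual = w[0] * x[0] + w[1] * x[1] - y
        g[0] += 2 * residual * x[0] / len(data)
        g[1] += 2 * residual * x[1] / len(data)
    return g


def steps_to_converge(w0, data, lr=0.1, tol=1e-4, max_steps=10_000):
    """Run gradient descent from w0; return the steps needed to get MSE below tol."""
    w = list(w0)
    for step in range(max_steps):
        if mse(w, data) < tol:
            return step
        g = grad(w, data)
        w = [w[0] - lr * g[0], w[1] - lr * g[1]]
    return max_steps


warm = steps_to_converge(SOURCE_W, data)    # start from "pre-trained" weights
cold = steps_to_converge([0.0, 0.0], data)  # start from scratch
print(f"warm start: {warm} steps, cold start: {cold} steps")
```

In this toy, the advantage comes entirely from the source weights already lying close to the target solution; if they were farther away than the zero vector, the warm start would be slower, not faster.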

Pitfalls and Limitations of Transfer Learning

While transfer learning holds immense potential, it is important to acknowledge its limitations and potential pitfalls.

- Data Mismatch: The success of transfer learning depends heavily on the similarity between the source and target datasets. If their data distributions differ significantly, the transferred knowledge may provide little benefit, and in some cases it can even hurt performance (a problem known as negative transfer). Check that the pre-trained model is relevant to, and compatible with, the target task before relying on it.

- Task Dependency: Transfer learning is most effective when the source task and the target task share similar features and underlying characteristics. If the tasks are inherently different, the pre-trained model might not possess relevant knowledge for the target task.

- Overfitting: Fine-tuning a pre-trained model on a new dataset can introduce the risk of overfitting. This occurs when the model becomes too specialized to the small target dataset it is fine-tuned on and fails to generalize well to new data. Regularization techniques and data augmentation can help mitigate this issue.
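One crude way to check for the data mismatch described above is to compare simple summary statistics of the source and target data before fine-tuning. The sketch below computes a standardized mean difference per feature and flags features that appear to have shifted. The feature names, values, and the 0.5 threshold are all hypothetical, and a clean result does not rule out a mismatch, since distributions can differ in ways that means and variances do not capture.

```python
import statistics


def standardized_mean_difference(source, target):
    """|mean(source) - mean(target)| divided by the pooled standard deviation."""
    pooled_sd = ((statistics.variance(source) + statistics.variance(target)) / 2) ** 0.5
    diff = abs(statistics.mean(source) - statistics.mean(target))
    if pooled_sd == 0:
        return 0.0 if diff == 0 else float("inf")
    return diff / pooled_sd


def flag_shifted_features(source_cols, target_cols, threshold=0.5):
    """Return names of features whose standardized mean difference exceeds threshold."""
    return [
        name
        for name in source_cols
        if standardized_mean_difference(source_cols[name], target_cols[name]) > threshold
    ]


# Made-up per-image summary features for a source and a target dataset.
source = {
    "brightness": [0.4, 0.5, 0.6, 0.5, 0.45],
    "contrast": [0.2, 0.25, 0.3, 0.22],
}
target = {
    "brightness": [0.8, 0.85, 0.9, 0.82, 0.88],
    "contrast": [0.21, 0.26, 0.29, 0.24],
}
print(flag_shifted_features(source, target))
```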

Optimizing Transfer Learning for Model Greatness

While transfer learning offers the potential for accelerated model development and improved performance, it is essential to follow some best practices to harness its power effectively.

1. Choose a Suitable Pre-trained Model: Select a pre-trained model that aligns closely with the target task. Consider factors such as architecture, dataset size, and the similarity between the source and target domains.

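2. Perform Proper Pre-processing: Pre-process the fine-tuning data the same way the pre-trained model’s training data was pre-processed, so the inputs stay consistent with what the model expects. For example, use the same input size and normalize with the same statistics that were used during pre-training, then apply any transformations specific to the problem domain.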

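3. Strategically Choose Layers for Fine-tuning: Determine which layers of the pre-trained model should be fine-tuned for the target task. Generally, lower-level layers capture more generic features, while higher-level layers learn task-specific representations. A common approach is therefore to freeze the lower layers and fine-tune only the higher ones, unfreezing more layers when the target dataset is larger or differs more from the source data.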

4. Data Augmentation: Augment the target dataset with variations of the existing data to increase its diversity and size. Techniques such as rotation, scaling, and flipping can help generate additional training samples and improve model generalization.

5. Regularization Techniques: Apply regularization techniques such as dropout and weight decay to prevent overfitting during fine-tuning. These techniques help the model generalize better to unseen data by reducing the impact of noisy or irrelevant features.
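Several of the practices above can be sketched together in plain Python. Everything here is hypothetical and framework-free: the constants `SOURCE_MEAN` and `SOURCE_STD` stand in for whatever normalization statistics the pre-trained model was trained with, the model is a list of named layers with toy weights, and the gradients are made up. The sketch normalizes with the source statistics, adds a horizontally flipped copy of an image, freezes the two lower layers, and applies an SGD update with an L2 weight-decay term only to the trainable layer.

```python
# Hypothetical normalization statistics from the pre-training data. Real models
# document their own values (for example, per-channel ImageNet means and stds).
SOURCE_MEAN = 0.5
SOURCE_STD = 0.25


def preprocess(image):
    """Practice 2: normalize target images with the *source* statistics."""
    return [[(px - SOURCE_MEAN) / SOURCE_STD for px in row] for row in image]


def horizontal_flip(image):
    """Practice 4: a simple augmentation that mirrors each row of pixels."""
    return [list(reversed(row)) for row in image]


def set_trainable(layers, n_frozen):
    """Practice 3: freeze the lowest n_frozen layers, fine-tune the rest."""
    for i, layer in enumerate(layers):
        layer["trainable"] = i >= n_frozen


def sgd_step(layers, grads, lr=0.01, weight_decay=1e-4):
    """Practice 5: SGD update with an L2 weight-decay term, skipping frozen layers."""
    for layer in layers:
        if not layer["trainable"]:
            continue
        g = grads[layer["name"]]
        layer["weights"] = [
            w - lr * (gi + weight_decay * w) for w, gi in zip(layer["weights"], g)
        ]


# Toy model: named layers ordered from lowest to highest (weights are made up).
layers = [
    {"name": "conv1", "weights": [0.3, -0.2]},
    {"name": "conv2", "weights": [0.1, 0.4]},
    {"name": "head", "weights": [0.0, 0.0]},
]

image = [[0.0, 0.5], [1.0, 0.75]]  # a tiny 2x2 grayscale "image"
batch = [preprocess(image), preprocess(horizontal_flip(image))]

set_trainable(layers, n_frozen=2)  # keep conv1 and conv2 frozen
made_up_grads = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "head": [1.0, -1.0]}
sgd_step(layers, made_up_grads)
print(batch)
print([layer["weights"] for layer in layers])
```

Deep learning frameworks provide their own mechanisms for each of these steps (including dropout, which is not shown here); the plain-Python version is only meant to make the logic visible.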

By following these guidelines and adapting the transfer learning process to the specific task at hand, it is possible to unlock the true potential of transfer learning and achieve model greatness.

Key Takeaways: Is transfer learning a shortcut to model greatness?

- Transfer learning is a technique where knowledge a model has learned on one task is reused for another, related task.

- It can save time and resources by using a pre-trained model as a starting point.

- However, transfer learning is not a guaranteed shortcut to model greatness.

- It requires careful selection of the pre-trained model and adaptation to the new task.

- Careful fine-tuning and hyperparameter tuning are crucial for achieving optimal performance.

Frequently Asked Questions

When it comes to model greatness, many wonder if transfer learning is a shortcut to achieve it. Let’s explore some commonly asked questions on this topic.

1. How does transfer learning work?

Transfer learning is a technique where a pre-trained model is used as a starting point to solve a different but related problem. The pre-trained model has already learned useful features from a large dataset, making it a valuable starting point to build upon. Instead of training a model from scratch, transfer learning allows us to leverage the knowledge of the pre-trained model and apply it to a new task.

By using transfer learning, we can save significant time and computational resources. This approach is particularly useful when we have limited labeled data for our specific task, as the pre-trained model provides a head start in learning relevant patterns and features. However, it’s important to fine-tune the pre-trained model to adapt it to the new task and achieve optimal performance.

2. Can transfer learning be applied to any task?

Transfer learning can be applied to various tasks, but its effectiveness depends on how similar the pre-trained model’s original task is to the new task at hand. If both tasks work with the same kind of data, such as images or text, and share similar characteristics, transfer learning can be highly effective.

For example, a pre-trained model trained on a large dataset of images can be used as a starting point for tasks such as image classification or object detection. However, if the two tasks are vastly different, transfer learning may not yield the desired results. It’s important to consider the domain knowledge and similarities between the tasks before deciding to employ transfer learning.

3. Are there any limitations to transfer learning?

While transfer learning is a powerful technique, it does have some limitations. One limitation is that the pre-trained model’s learned features may not be completely applicable to the new task, especially if the tasks have significant differences in data distribution or required features.

Another limitation is that the pre-trained model may carry biases from its original training data and task. These biases can influence the model’s performance on the new task and may require additional fine-tuning or data augmentation to address.

4. How can transfer learning benefit deep learning models?

Transfer learning can greatly benefit deep learning models. Deep learning models often require a large amount of labeled training data to perform well. However, labeled data is not always readily available. By using transfer learning, we can leverage pre-trained models that have been trained on massive datasets and transfer their knowledge to the new task, even with limited labeled data.

This approach allows deep learning models to achieve better performance in a shorter amount of time, as the initial layers of the pre-trained model have already learned generic features that are useful for various tasks.

5. How do I choose the right pre-trained model for transfer learning?

Choosing the right pre-trained model for transfer learning depends on the specific task you’re working on. You should consider factors such as the size and diversity of the pre-trained model’s original dataset, the architecture of the model, and the similarities between its original task and your task.

It’s common to start from popular pre-trained models such as VGG or ResNet for images, or BERT for text, which are widely adopted and have performed well across many tasks. However, it’s also essential to experiment with different models and fine-tuning approaches to find the best fit for your particular task.
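Experimenting with candidate models does not always require fully fine-tuning each one. A cheap first pass, sketched below, is to freeze each candidate, extract features for a small labeled training set, fit a very simple classifier on those features, and compare accuracy on held-out validation data. Here a nearest-centroid classifier serves as that simple probe (a deliberately minimal choice), and the two candidate extractors and the data are hypothetical stand-ins for real models such as ResNet or BERT.

```python
def fit_centroids(features, labels):
    """Average feature vector per class."""
    centroids = {}
    for label in set(labels):
        points = [f for f, lab in zip(features, labels) if lab == label]
        centroids[label] = [sum(col) / len(points) for col in zip(*points)]
    return centroids


def predict_centroid(centroids, f):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(f, centroids[c])))


def probe_accuracy(extractor, train, val):
    """Fit the probe on frozen features of train; report accuracy on val."""
    centroids = fit_centroids([extractor(x) for x, _ in train], [y for _, y in train])
    correct = sum(predict_centroid(centroids, extractor(x)) == y for x, y in val)
    return correct / len(val)


# Hypothetical frozen feature extractors standing in for real pre-trained models.
candidates = {
    "extractor_a": lambda x: [x[0]],         # only sees the first input
    "extractor_b": lambda x: [x[0] + x[1]],  # combines both inputs
}

# Made-up data: the label is 1 when x[0] + x[1] is large.
train = [([0.0, 2.0], 1), ([2.0, 0.0], 1), ([0.1, 0.1], 0), ([0.2, 0.0], 0)]
val = [([0.0, 1.8], 1), ([1.9, 0.1], 1), ([0.0, 0.1], 0), ([0.3, 0.2], 0)]

scores = {name: probe_accuracy(fn, train, val) for name, fn in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

A ranking from such a quick probe may not match the ranking after full fine-tuning, so it is best treated as a way to shortlist candidates rather than a final verdict.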


Summary

Transfer learning can be a valuable shortcut to better models and faster development, though not a guaranteed one. It allows us to use knowledge learned from one task to solve another. By leveraging pre-trained models and fine-tuning them for specific tasks, we can often achieve better performance with less data, provided the source and target tasks are related and the fine-tuning is done carefully. Transfer learning is like building on what others have already learned and applying it to our own problems. It’s a powerful tool that can help us create better models. So, if you’re looking for a way to make your models great, don’t forget to give transfer learning a try!