Deep learning is revolutionizing the world of technology, enabling machines to perform tasks with human-like intelligence. But amidst the excitement, there’s a concept that can trip up even the most experienced developers: overfitting. So, what exactly is overfitting in deep learning? Let’s dive in and find out!

Imagine you have a friend who is really good at memorizing things. They can easily recall a long list of facts, but when it comes to understanding the underlying concepts, they struggle. That’s similar to what happens with deep learning models and overfitting. In simple terms, overfitting occurs when a model becomes too focused on the specific examples it was trained on, while failing to generalize and understand the larger patterns.

Think of it like this: Imagine you have a robot that only knows how to do a task in a specific house, with a specific set of objects. If you move that robot to a different house with different objects, it won’t know what to do because it was never taught to adapt. That’s overfitting in a nutshell – a model becomes so fixated on the training data that it struggles to make accurate predictions in new and unseen scenarios.

But why is overfitting a problem? Well, the whole purpose of deep learning is to build models that can generalize well and make accurate predictions on new data. Overfitting undermines that goal, leading to poor performance and unreliable results. Understanding overfitting is crucial for developers and researchers alike, as it helps them identify and mitigate potential issues in their deep learning models.

In the rest of this article, we’ll explore the causes and consequences of overfitting, as well as techniques to prevent and overcome it. So buckle up and get ready to unravel the mysteries of overfitting in the fascinating world of deep learning!

Understanding Overfitting in Deep Learning

Welcome to our in-depth exploration of overfitting in deep learning. In this article, we will delve into the concept of overfitting and its impact on the performance of deep learning models. We will discuss what overfitting is, how it occurs, and the various strategies that can be employed to mitigate its effects. Whether you are a seasoned deep learning practitioner or just starting out, this article will equip you with valuable insights to improve the robustness of your models.

The Basics of Overfitting

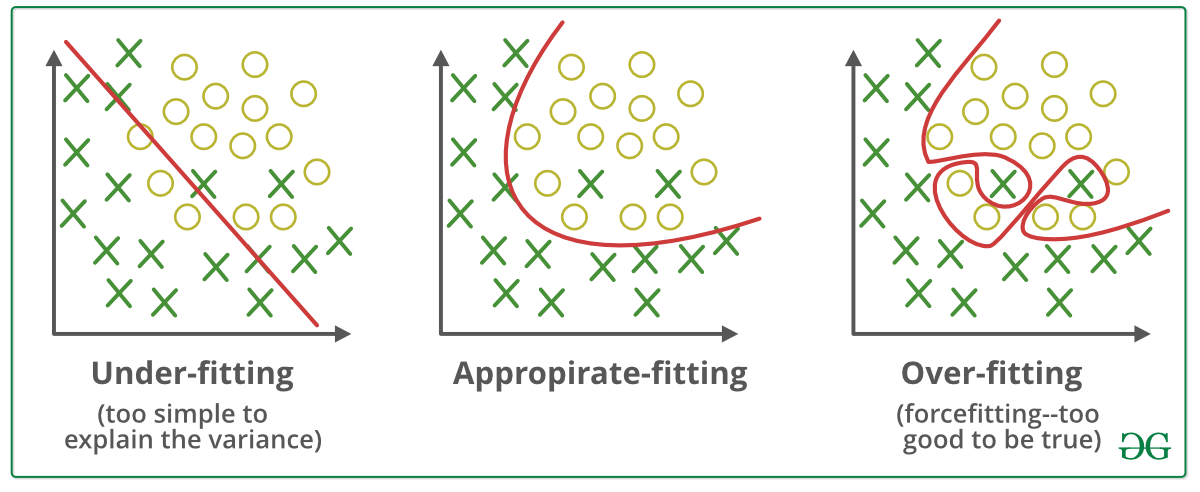

When a deep learning model is built to make predictions or classify data, one common failure mode is overfitting. Overfitting happens when a model fits its training data so closely that it picks up details specific to those examples, including noise. The model then scores well on the training data but fails to generalize when exposed to new, unseen data. Essentially, the model has “memorized” the training data, rather than learning the underlying patterns that would allow it to make accurate predictions on new data.

Overfitting can have detrimental effects on the performance of deep learning models. It leads to poor generalization, where the model performs well on the training data but fails to accurately predict or classify new data. This is a common challenge in machine learning, and especially in deep learning, because neural networks with many layers and parameters have enough capacity to fit individual training examples. Understanding the causes of overfitting and the techniques to combat it is crucial for developing robust and reliable deep learning models that can be effectively deployed in real-world scenarios.
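The difference between memorizing and generalizing can be shown with a toy sketch. This is not a neural network: the data, the rule "label is true when x is greater than 5", and the names `memorizer` and `threshold_rule` are all made up for illustration.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[int, bool]

def true_label(x: int) -> bool:
    # Hypothetical ground truth for this toy problem.
    return x > 5

train: List[Example] = [(x, true_label(x)) for x in (1, 3, 6, 8)]
val: List[Example] = [(x, true_label(x)) for x in (2, 4, 7, 9)]

# "Model" 1: a lookup table that memorizes every training example.
memory: Dict[int, bool] = dict(train)

def memorizer(x: int) -> bool:
    # Falls back to False for any input it has never seen.
    return memory.get(x, False)

# "Model" 2: a rule that captures the underlying pattern.
def threshold_rule(x: int) -> bool:
    return x > 5

def accuracy(model: Callable[[int], bool], data: List[Example]) -> float:
    return sum(model(x) == y for x, y in data) / len(data)

memorizer_train_acc = accuracy(memorizer, train)
memorizer_val_acc = accuracy(memorizer, val)
rule_val_acc = accuracy(threshold_rule, val)

print(memorizer_train_acc, memorizer_val_acc, rule_val_acc)
```

The lookup table is perfect on the examples it has seen and no better than a fixed guess on the ones it has not, which is the same pattern an overfit network shows at a larger scale.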

The Causes of Overfitting

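Several factors contribute to overfitting in deep learning models, and understanding them is essential for choosing effective mitigation strategies. The most common are a model with too many parameters relative to the available data, training data that is limited or unrepresentative of real-world data, and training for too long.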

Strategies to Mitigate Overfitting

Fortunately, there are several strategies that can be employed to mitigate the effects of overfitting in deep learning models. Here are some common techniques:

Cross-Validation and Regularization

Cross-validation is a powerful technique for detecting overfitting in deep learning models. It involves dividing the available data into multiple subsets, or folds. The model is trained several times, each time holding out a different fold for validation and training on the remaining folds. If the model scores much better on the training folds than on the held-out fold, it is overfitting, and the hyperparameters can be adjusted accordingly. Averaging the validation scores across folds also gives a more reliable estimate of performance on unseen data than a single split does.
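As a minimal sketch of the fold bookkeeping, the helper below splits sample indices into k folds. The function name `k_fold_indices` is hypothetical, and the sketch assumes the data has already been shuffled; in practice a library routine would normally handle both steps.

```python
from typing import Iterator, List, Tuple

def k_fold_indices(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, val_indices) once for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, val_idx in folds:
    # A real run would train a fresh model on train_idx here
    # and score it on val_idx.
    print(len(train_idx), len(val_idx))
```

Each sample lands in exactly one validation fold, so every example is used for validation once and for training k - 1 times.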

Regularization is another key technique used to combat overfitting. It involves adding a penalty term to the loss function during training to discourage the model from becoming too complex. Common choices are L1 regularization (Lasso), which penalizes the sum of the absolute values of the weights, L2 regularization (Ridge), which penalizes the sum of their squares, or a combination of both (Elastic Net). Regularization limits the flexibility of the model and makes it less likely to fit the noise in the training data.
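The penalty term can be sketched in a few lines. The function name `penalized_loss` and the coefficient names `l1` and `l2` are illustrative assumptions, and the weights are a flat list rather than the tensors a real framework would use.

```python
from typing import Sequence

def penalized_loss(data_loss: float, weights: Sequence[float],
                   l1: float = 0.0, l2: float = 0.0) -> float:
    """Add L1 and/or L2 penalties to an already computed data loss."""
    l1_term = l1 * sum(abs(w) for w in weights)   # Lasso-style penalty
    l2_term = l2 * sum(w * w for w in weights)    # Ridge-style penalty
    return data_loss + l1_term + l2_term

weights = [1.0, -2.0]
plain = penalized_loss(1.0, weights)
elastic = penalized_loss(1.0, weights, l1=0.1, l2=0.01)
print(plain, elastic)
```

Setting only `l1` or only `l2` gives the pure L1 or L2 case; setting both corresponds to the Elastic Net combination. Larger coefficients push the optimizer toward smaller weights at the cost of fitting the training data less closely.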

By applying cross-validation and regularization techniques, it is possible to train deep learning models that are more robust and less prone to overfitting. These techniques help in striking a balance between model complexity and generalization ability, resulting in improved performance on unseen data.

Data Augmentation

Data augmentation is a powerful technique used to increase the diversity and size of the training data, thereby reducing the risk of overfitting. It involves applying various transformations or modifications to the existing training data to create new training samples. For example, in image classification tasks, data augmentation techniques such as random rotations, translations, flips, and scaling can be used to create additional training samples with variations in position, orientation, and scale.

Data augmentation helps in exposing the model to a wider range of variations and ensures that it learns to generalize across different instances of the same class. By providing the model with more diverse examples during training, it becomes less likely to overfit to specific patterns in the original training data. Data augmentation is especially useful when the available training data is limited or imbalanced.

Overall, data augmentation is a valuable technique to enhance the generalization ability of deep learning models and reduce the risk of overfitting. It is widely used in various domains, including computer vision, speech recognition, and natural language processing.
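Two of the image transformations mentioned above, flips and translations, can be sketched on a tiny image stored as nested lists. The helper names and the 50% flip probability are assumptions made for illustration; real pipelines typically use an image library's built-in transforms.

```python
import random
from typing import List

Image = List[List[int]]  # rows of pixel values

def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def translate_right(img: Image, shift: int, fill: int = 0) -> Image:
    """Shift pixels right by `shift` columns, padding the left edge with `fill`."""
    if shift <= 0:
        return [row[:] for row in img]
    width = len(img[0])
    shift = min(shift, width)
    return [[fill] * shift + row[:width - shift] for row in img]

def augment(img: Image, rng: random.Random) -> Image:
    """Return a randomly flipped and shifted copy; the label stays the same."""
    out = horizontal_flip(img) if rng.random() < 0.5 else [row[:] for row in img]
    return translate_right(out, rng.randint(0, 1))

image = [[1, 2, 3],
         [4, 5, 6]]
rng = random.Random(0)
augmented = [augment(image, rng) for _ in range(4)]
```

Every augmented copy keeps the original shape and class label, so the model sees more variation without any new data being collected.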

Early Stopping

Early stopping is a technique used to prevent overfitting by monitoring the performance of the model on a validation set during the training process. It involves stopping the training process when the performance on the validation set starts to degrade, indicating that the model has reached a point of overfitting.

The idea behind early stopping is to halt training at the point where the model generalizes best. Monitoring the validation set identifies the point at which the model starts to overfit; stopping there avoids further fitting to the training data and saves computational resources.

Implementing early stopping requires defining a suitable metric to monitor (e.g., validation loss or accuracy), and specifying a patience parameter: the number of consecutive epochs without improvement in that metric to wait before stopping. It is common to keep the model weights from the best epoch rather than the last one. Early stopping is a simple yet effective technique that can be applied to prevent overfitting in deep learning models.
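The patience logic can be sketched as follows. The function works on a pre-recorded list of validation losses instead of a live training loop, and the names `early_stopping_epoch` and `min_delta` are assumptions for this example.

```python
from typing import Sequence, Tuple

def early_stopping_epoch(val_losses: Sequence[float], patience: int,
                         min_delta: float = 0.0) -> Tuple[int, int]:
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once `patience` consecutive epochs pass without the
    loss improving on the best value by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every epoch.
    return len(val_losses) - 1, best_epoch

val_losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 1.1]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch, best_epoch)
```

With these made-up losses, training halts two epochs after the best one, and the weights saved at `best_epoch` would be the ones to keep. A larger patience tolerates noisier validation curves at the cost of extra training time.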

The Impact of Overfitting on Model Performance

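Overfitting can have a significant impact on the performance of deep learning models. An overfit model can give misleading results: strong metrics on the training data, but incorrect predictions and unreliable insights on unseen data. This matters most in domains where accurate predictions are vital, such as healthcare or finance.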

Tips to Avoid Overfitting in Deep Learning Models

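As we have seen, overfitting is a common issue in deep learning models, but there are steps you can take to mitigate its effects. In short: apply regularization or dropout, stop training early when validation performance stops improving, use a larger and more diverse training dataset, and keep model complexity in proportion to the available data.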

Conclusion

Overfitting is a common challenge in deep learning, but with the right understanding and strategies, it can be effectively mitigated. By implementing techniques such as cross-validation, regularization, data augmentation, and early stopping, it is possible to train deep learning models that generalize well and perform reliably on unseen data. Remember to always consider the trade-off between model complexity and generalization ability, and make use of the available tools and techniques to ensure the robustness of your models. Happy training!

Key Takeaways:

- Overfitting in Deep Learning is when a model becomes too specialized to the training data, resulting in poor performance on new, unseen data.

- It occurs when the model learns to memorize specific examples instead of understanding the underlying patterns.

- To prevent overfitting, techniques like regularization, dropout, and early stopping can be used.

- Overfitting can be minimized by using a larger and more diverse training dataset.

- It is important to strike a balance between model complexity and generalization to avoid overfitting.

Frequently Asked Questions

In this section, we will answer some common questions related to the concept of overfitting in Deep Learning.

What is overfitting in Deep Learning?

Overfitting is a phenomenon in Deep Learning where a machine learning model performs well on the training data, but fails to generalize to new, unseen data. It occurs when the model learns to fit the noise or random fluctuations in the training data rather than capturing the underlying patterns or trends.

Think of it as a student who memorizes the exact answers to specific questions, but struggles to solve new problems that require a deeper understanding of the subject matter. In Deep Learning, overfitting leads to poor performance on real-world data and limits the model’s ability to learn and make accurate predictions.

What are the causes of overfitting in Deep Learning?

There are several causes of overfitting in Deep Learning. One common cause is having a complex model with too many parameters relative to the available data. When the model is too flexible, it can capture even the noise or outliers in the training data, resulting in overfitting.

Another cause is when the training data is limited or unrepresentative of the true population. If the training data doesn’t capture the full variability of the real-world data, the model may not generalize well. Additionally, overfitting can occur when the model is trained for too long, causing it to memorize the training examples instead of learning the underlying patterns.

How can overfitting be prevented in Deep Learning?

To prevent overfitting in Deep Learning, there are several strategies you can employ. One approach is to use regularization techniques such as L1 or L2 regularization. These techniques penalize complex models, encouraging them to generalize better.

Another strategy is to increase the amount of training data. By having more diverse and representative data, the model can learn general patterns instead of memorizing specific examples. You can also use techniques like dropout, which randomly switches off a portion of the neurons during training, preventing the model from relying too heavily on specific features.
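Dropout can be sketched on a plain list of activations. This version uses the common "inverted dropout" convention, where kept activations are scaled up during training so that nothing needs to change at inference time; the function name and signature are assumptions for illustration.

```python
import random
from typing import List, Sequence

def dropout(activations: Sequence[float], rate: float,
            rng: random.Random, training: bool = True) -> List[float]:
    """Zero each activation with probability `rate` during training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time every neuron stays active.
        return list(activations)
    keep = 1.0 - rate
    # Scale the survivors by 1 / keep so the expected value is unchanged.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 100
dropped = dropout(hidden, rate=0.5, rng=rng)
unchanged = dropout(hidden, rate=0.5, rng=rng, training=False)
```

Because a different random subset of neurons is silenced on every training step, no single neuron can become the only carrier of a feature, which is what discourages the over-reliance described above.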

How can we detect if a model is overfitting?

There are several ways to detect if a model is overfitting in Deep Learning. One method is to split the available data into a training set and a validation set. Train the model on the training set and evaluate its performance on the validation set. If the model performs significantly better on the training set than on the validation set, it’s a sign of overfitting.

You can also monitor the model’s performance during training by plotting the training loss and the validation loss over time. If the training loss continues to decrease while the validation loss starts to increase or stagnate, it suggests overfitting. Lastly, you can use k-fold cross-validation, which repeats the train/validation check across several different splits, to get a more robust estimate of the model’s generalization performance.
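The loss-curve check can be sketched on recorded per-epoch losses. The function names and the loss values are made up for illustration, and the epoch-to-epoch comparison is a simplification: real curves are noisy and usually need smoothing or a patience window before drawing conclusions.

```python
from typing import List, Optional, Sequence

def generalization_gap(train_losses: Sequence[float],
                       val_losses: Sequence[float]) -> List[float]:
    """Validation loss minus training loss for each epoch."""
    return [v - t for t, v in zip(train_losses, val_losses)]

def overfitting_onset(train_losses: Sequence[float],
                      val_losses: Sequence[float]) -> Optional[int]:
    """First epoch where training loss fell while validation loss rose.

    Returns None if the two curves never diverge in that way.
    """
    n = min(len(train_losses), len(val_losses))
    for epoch in range(1, n):
        train_improved = train_losses[epoch] < train_losses[epoch - 1]
        val_worsened = val_losses[epoch] > val_losses[epoch - 1]
        if train_improved and val_worsened:
            return epoch
    return None

train_losses = [1.0, 0.7, 0.5, 0.35, 0.2]
val_losses = [1.1, 0.8, 0.7, 0.75, 0.9]
gap = generalization_gap(train_losses, val_losses)
onset = overfitting_onset(train_losses, val_losses)
print(onset)
```

A gap that keeps widening after the onset epoch is the numerical counterpart of the diverging curves on the plot.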

What are the consequences of overfitting in Deep Learning?

The consequences of overfitting in Deep Learning can be severe. When a model is overfitting, it may give misleading results and fail to perform well on unseen data. This can lead to incorrect predictions, unreliable insights, and poor decision-making based on the model’s outputs.

In practical applications, overfitting can have significant consequences, especially in domains where accurate predictions are vital, such as healthcare or financial sectors. Overfit models are less robust and more prone to errors, jeopardizing the reliability and usability of the Deep Learning model.

Summary

Overfitting in Deep Learning happens when a machine learning model becomes too focused on the training data. It’s like memorizing answers instead of understanding concepts. This can lead to poor performance on new, unseen data.

To avoid overfitting, we can use techniques like regularization, which adds a penalty for complex models. We can also split our data into separate training and validation sets, to check if our model is generalizing well. It’s important to find the right balance between model complexity and generalization to make accurate predictions.