Welcome to the world of Deep Learning! Today, we’re going to explore an exciting concept called reinforcement learning. So, what is reinforcement learning in Deep Learning? Let’s dive right in and find out!

Imagine you’re playing a video game, and as you progress through the levels, your character learns to make better decisions. That’s exactly what reinforcement learning is all about. It’s a branch of machine learning, often combined with deep learning, where an algorithm learns how to perform different tasks by receiving feedback in the form of rewards or punishments.

Reinforcement learning is like training a pet or teaching a friend how to ride a bike. You reward them when they do something right and correct them when they make a mistake. Similarly, in reinforcement learning, the algorithm learns from trial and error, continuously improving its actions based on the feedback it receives.

With reinforcement learning, computers can learn to navigate complex environments, play games, control robots, and even make strategic decisions. It’s a fascinating field that has the potential to revolutionize various industries, from healthcare to transportation and beyond.

So, are you ready to delve deeper into the world of reinforcement learning and discover how it’s shaping the future of Deep Learning? Let’s embark on this exciting journey together!

Now that we have a grasp of what reinforcement learning is, it’s time to explore its inner workings and understand how it enables machines to learn and make intelligent decisions.

Reinforcement learning in Deep Learning is a technique that enables an AI system to learn and improve through trial and error. It involves an agent interacting with an environment and taking actions to maximize a reward. Unlike supervised learning, the agent doesn’t have predefined labels, but learns from the consequences of its actions. This approach has been used to train AI models to play games, control robots, and make decisions in complex scenarios. It’s a powerful tool in the field of artificial intelligence.

Contents

- Understanding Reinforcement Learning in Deep Learning

- Training Deep Reinforcement Learning Models

- Key Takeaways: What is reinforcement learning in Deep Learning?

- Frequently Asked Questions

- Q1: How does reinforcement learning differ from other types of learning techniques in deep learning?

- Q2: What are the key components of reinforcement learning in deep learning?

- Q3: What is the role of exploration and exploitation in reinforcement learning?

- Q4: What are some common algorithms used in reinforcement learning?

- Q5: What are some real-world applications of reinforcement learning in deep learning?

- Summary

Understanding Reinforcement Learning in Deep Learning

Deep learning has revolutionized the field of artificial intelligence, enabling machines to learn and make predictions like never before. One powerful technique that is often combined with deep learning is reinforcement learning. In this article, we will explore what reinforcement learning is and how it fits into the broader landscape of deep learning. Whether you are an aspiring data scientist or simply curious about the latest advancements in AI, this article will give you a solid introduction to reinforcement learning in deep learning.

What is Reinforcement Learning?

Reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or punishments based on its actions. The goal of reinforcement learning is to enable the agent to learn the optimal behavior, called a policy, that maximizes the cumulative reward over time.

In reinforcement learning, the agent takes actions and observes the resulting state of the environment and the corresponding reward. By repeatedly experiencing this interaction, the agent learns to associate actions with desirable outcomes and adjusts its behavior accordingly. Unlike supervised learning, where a model is provided with labeled examples, reinforcement learning relies on trial and error to discover the most effective strategies.
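To make this interaction loop concrete, here is a minimal sketch in Python. The `CorridorEnv` class, its reward values, and the two policies are hypothetical and invented purely for illustration; real projects usually rely on an existing environment library instead.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action: 0 = move left, 1 = move right (walls clamp the position)
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty per step, bonus at the goal
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward the agent collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                   # the agent picks an action
        state, reward, done = env.step(action)   # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    return random.choice([0, 1])


def always_right(state):
    return 1
```

Calling `run_episode(CorridorEnv(), always_right)` walks straight to the goal, while `random_policy` usually wanders and collects more step penalties along the way. No learning happens yet; this only shows the act, observe, reward cycle.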

Reinforcement learning can be applied to a wide range of problems, such as game playing, robotics, finance, and even healthcare. It allows machines to learn from experience and adapt their behavior in dynamic and uncertain environments, making it a powerful tool for creating intelligent systems.

The Components of Reinforcement Learning

Reinforcement learning consists of three key components: the agent, the environment, and the rewards. Let’s delve deeper into each of these components to understand how they work together to enable learning.

The Agent

The agent is the learner in the reinforcement learning process. It is the entity that takes actions based on its observations of the environment. The agent’s objective is to maximize the cumulative rewards it receives over time. It does this by selecting actions based on its current state and the expected future rewards associated with those actions.

The agent also has a policy, which is a set of rules or strategies that determine how it selects actions. The policy can be deterministic, meaning it always chooses the same action given a specific state, or stochastic, meaning it selects actions based on probabilities. The agent’s goal is to learn the optimal policy that maximizes its rewards.
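The difference between the two kinds of policy can be sketched in a few lines of Python. The states, actions, and probabilities below are made up for illustration only.

```python
import random

ACTIONS = ["left", "right"]


def deterministic_policy(state):
    """Always returns the same action for a given state."""
    return "right" if state < 3 else "left"


def stochastic_policy(state, rng=random):
    """Samples an action from a probability distribution that depends on the state."""
    p_right = 0.9 if state < 3 else 0.2
    return rng.choices(ACTIONS, weights=[1 - p_right, p_right])[0]
```

Calling `deterministic_policy(0)` gives the same answer every time, whereas repeated calls to `stochastic_policy(0)` return "right" most of the time and "left" occasionally.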

To compare actions and make informed decisions, the agent typically uses a value function that estimates the expected cumulative reward for each state or, in the case of an action-value function, for each state-action pair. The value function helps the agent evaluate the long-term consequences of its actions and guides it towards actions that lead to higher rewards.

The Environment

The environment represents the external world in which the agent operates. It is the entity that provides feedback to the agent in the form of rewards or punishments based on the agent’s actions. The environment can be as simple as a game board or as complex as a simulated physical environment.

The environment has its own state, and the agent interacts with the environment by taking actions. The environment then transitions to a new state based on the agent’s action, and the agent receives a reward or punishment based on the new state. This feedback informs the agent about the consequences of its actions and guides its future behavior.

The environment can be deterministic, where the next state and reward are entirely determined by the current state and action, or stochastic, where there is some randomness involved in the state transitions or reward assignments.
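As a rough sketch of that distinction, the hypothetical transition function below becomes stochastic through a single `slip_prob` parameter. The corridor layout, the reward values, and the parameter name are all assumptions made for this example.

```python
import random


def step(state, action, slip_prob=0.0, rng=random):
    """Move along positions 0..4; action is -1 (left) or +1 (right).

    With probability slip_prob the move is reversed, which makes the
    transition stochastic. slip_prob=0.0 gives a deterministic environment.
    """
    if rng.random() < slip_prob:
        action = -action
    next_state = min(max(state + action, 0), 4)
    reward = 1.0 if next_state == 4 else 0.0
    return next_state, reward
```

With `slip_prob=0.0`, the same state and action always lead to the same next state and reward. With something like `slip_prob=0.2`, the agent has to learn a strategy that works well on average.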

The Rewards

Rewards play a vital role in reinforcing the learning process. They represent the feedback the agent receives from the environment for its actions. Rewards can be positive or negative, providing the agent with an indication of whether its actions were beneficial or detrimental.

The agent’s objective is to maximize the cumulative rewards it receives over time. To achieve this, it must learn to associate actions with desirable outcomes, seeking actions that lead to higher rewards and avoiding actions that result in penalties. The rewards serve as a signal for the agent to learn the optimal behavior that maximizes its long-term performance.
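One common way to turn "cumulative rewards over time" into a single number is the discounted return, where rewards further in the future count a little less. The discount factor `gamma` is a standard ingredient that this article does not otherwise discuss, so treat the sketch below as one possible formalization rather than the only one.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each weighted by gamma raised to how many steps ahead it is."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future total
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total
```

Setting `gamma=1.0` gives a plain sum of rewards, while smaller values make the agent care more about immediate rewards than distant ones.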

Designing the reward structure is a crucial aspect of reinforcement learning. The rewards should be carefully defined to encourage desirable behaviors and discourage undesirable ones. The right balance in reward design can guide the agent towards the desired objectives.

Benefits of Reinforcement Learning in Deep Learning

Reinforcement learning offers several advantages when applied within the field of deep learning.

- Handling Complex Environments: Reinforcement learning allows deep learning models to tackle complex environments by learning through trial and error. It enables machines to navigate uncertain and dynamic environments, making it suitable for applications like robotics and autonomous systems.

- End-to-End Learning: With reinforcement learning, deep learning models can learn directly from raw sensory input, eliminating the need for manual feature engineering. This enables end-to-end learning, where the model learns to perceive the environment, make decisions, and take actions, all within a single integrated framework.

- Adaptability: Reinforcement learning is well-suited for scenarios where the optimal strategy may change over time. By continuously exploring and exploiting different actions, the agent can adapt its behavior to dynamic environments and evolving objectives.

- Generalization: Deep reinforcement learning models can, in some cases, generalize their learned policies to new, unseen environments. This can allow them to transfer knowledge across scenarios, although reliable generalization remains an open challenge and usually depends on training in sufficiently varied environments.

- Human-level Performance: In certain domains, reinforcement learning has achieved human-level or even superhuman performance. Successes in video games and board games showcase the immense potential of this approach in achieving high levels of performance.

Training Deep Reinforcement Learning Models

Training deep reinforcement learning models involves optimizing their policies and value functions through iterative learning processes. These processes often require significant computational resources and careful tuning of hyperparameters. Let’s explore some key techniques used in training deep reinforcement learning models.

1. Q-Learning

Q-Learning is a classic reinforcement learning algorithm that estimates the optimal action-value function, known as the Q-function. The Q-function represents the expected cumulative rewards for taking a specific action in a given state and following an optimal policy thereafter.

The Q-Learning algorithm iteratively updates the Q-values based on the observed rewards and state transitions. It gradually refines the estimates by using a greedy or epsilon-greedy policy to select actions and updating the Q-values by taking into account the observed rewards and the potential rewards of the next state.

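This process continues until the Q-values settle. In the simple tabular case, provided every state-action pair keeps being tried and the learning rate is reduced appropriately, the Q-values converge to the optimal values, and the agent learns the optimal policy that maximizes its rewards.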
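Here is a minimal sketch of tabular Q-learning in Python. The corridor environment, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration, not recommended settings.

```python
import random
from collections import defaultdict

N_STATES = 5      # corridor positions 0..4, with the goal at position 4
ACTIONS = [0, 1]  # 0 = left, 1 = right


def step(state, action):
    """Hypothetical deterministic corridor environment."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.1
    return next_state, reward, done


def train_q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # maps (state, action) to an estimated value, default 0.0
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy action selection
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate towards
            # reward + gamma * (best estimated value of the next state)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
            steps += 1
    return q


q = train_q_learning()
# Greedy action for every non-goal state after training
greedy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
```

After training, `greedy` holds the action the agent prefers in each non-goal state. In this tiny corridor, that should be "move right" everywhere.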

2. Deep Q-Networks (DQN)

Deep Q-Networks (DQN) is a breakthrough technique that combines deep learning with reinforcement learning. It uses deep neural networks to approximate the Q-function, enabling the agent to handle high-dimensional input spaces.

The DQN algorithm employs a technique called experience replay, where it stores the agent’s experiences (consisting of state, action, reward, and resulting state) in a replay buffer. During training, the DQN samples batches of experiences from the replay buffer to update the Q-network. This enhances the learning process by breaking the sequential correlation of experiences and increasing data efficiency.
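A replay buffer can be sketched with nothing more than the Python standard library. The class name, its methods, and the fixed-capacity behavior below are illustrative assumptions; deep learning libraries and RL frameworks ship their own variants.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity buffer of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest experiences are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        # Uniform random sampling breaks up the order in which experiences occurred
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

During training, the agent would call `add` after every step and periodically call `sample` to get a batch for updating the Q-network.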

By leveraging deep neural networks, DQN has been able to achieve remarkable performance in a wide range of game-playing tasks and other complex environments.

Key Takeaways: What is reinforcement learning in Deep Learning?

- Reinforcement learning is a type of machine learning where an agent learns to make decisions based on trial and error.

- In reinforcement learning, the agent interacts with an environment and receives feedback in the form of rewards or punishments.

- The goal of reinforcement learning is to optimize the agent’s actions to maximize long-term rewards.

- Popular algorithms used in reinforcement learning include Q-learning and Deep Q-Networks (DQN).

- Reinforcement learning has applications in various fields such as robotics, game playing, and autonomous vehicles.

Frequently Asked Questions

In the world of deep learning, reinforcement learning is a powerful technique that allows machines to learn and make decisions through interaction with their environment. It is an important concept that has gained significant attention due to its applications in areas such as robotics, game playing, and autonomous systems. Here are some commonly asked questions about reinforcement learning in deep learning:

Q1: How does reinforcement learning differ from other types of learning techniques in deep learning?

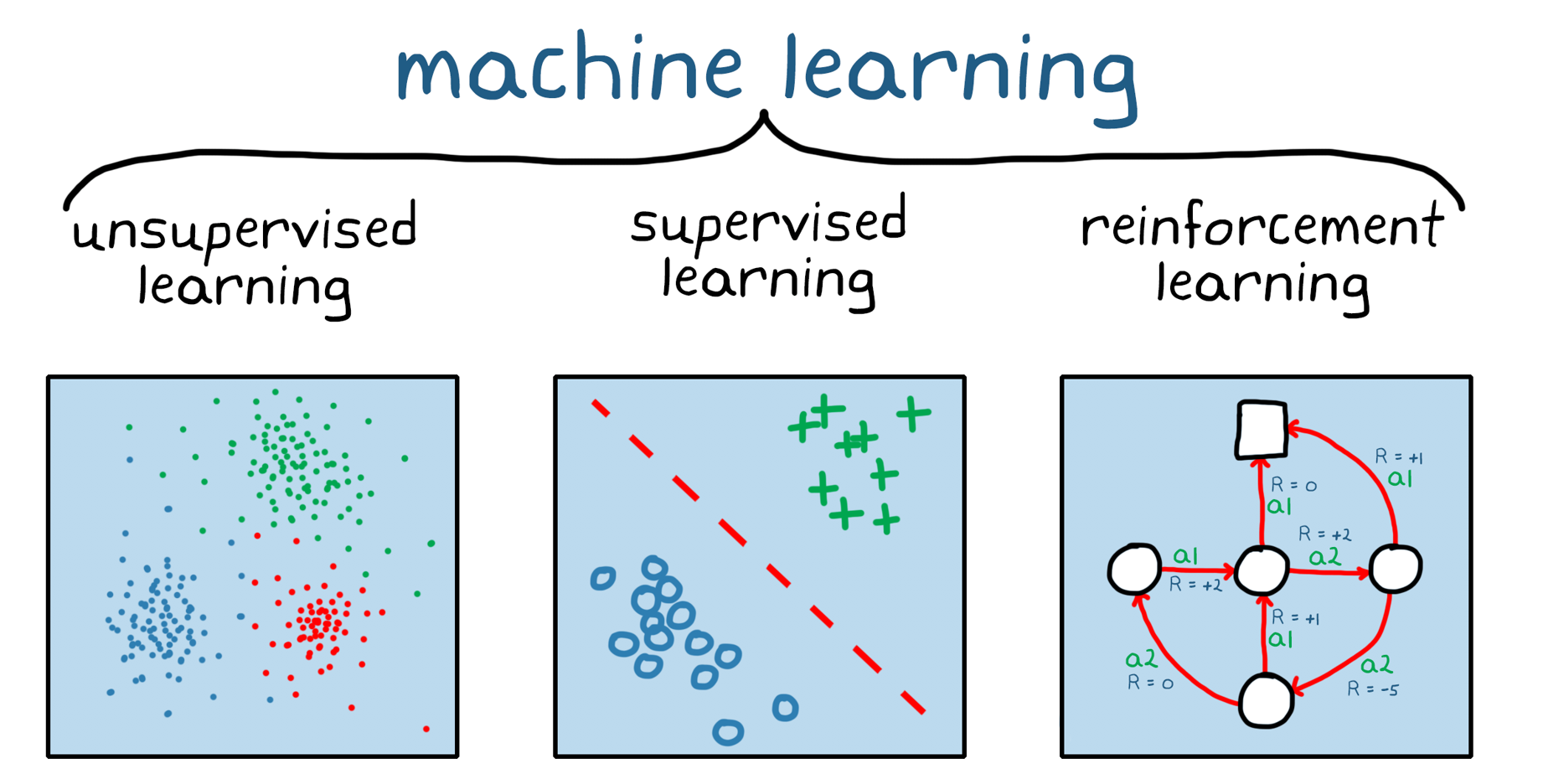

Unlike other types of learning techniques, such as supervised learning or unsupervised learning, reinforcement learning involves an agent that learns to interact with an environment and maximize its reward. The agent takes actions in the environment based on its current state and receives feedback in the form of rewards or penalties. By learning from this feedback, the agent can adjust its actions accordingly to achieve its goal.

Supervised learning, on the other hand, relies on labeled training data to learn patterns and make predictions, while unsupervised learning focuses on finding patterns and relationships in the data without any specific guidance. Reinforcement learning is unique in its ability to learn through trial and error and dynamically adapt its behavior based on the feedback from the environment.

Q2: What are the key components of reinforcement learning in deep learning?

Reinforcement learning consists of three key components: the agent, the environment, and the reward signal. The agent is the learner or decision-maker that takes actions in the environment. The environment is the external system or world in which the agent operates. The reward signal is the feedback mechanism that guides the agent’s learning process by providing positive or negative feedback based on its actions.

Together, these components form a loop. The agent interacts with the environment by taking actions, and in response, the environment provides feedback in the form of rewards or penalties. The agent’s goal is to learn an optimal policy, which is a mapping from states to actions, that maximizes its cumulative reward over time.

Q3: What is the role of exploration and exploitation in reinforcement learning?

In reinforcement learning, exploration and exploitation are two fundamental strategies that the agent employs to make decisions. Exploration means trying actions and visiting states that the agent has not yet experienced, allowing it to gather information about the environment and better understand its dynamics. Exploitation, on the other hand, means using the agent’s current knowledge to choose the actions it expects to yield the highest rewards.

Both exploration and exploitation are crucial for successful reinforcement learning. Exploration helps the agent discover new, potentially better actions and states, while exploitation lets the agent act on the best choices it currently knows. Too much exploration wastes time on poor actions, and too little can leave the agent stuck with a mediocre strategy, so striking the right balance is a challenging task in reinforcement learning.
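One simple and widely used way to balance the two is the epsilon-greedy rule: explore with a small probability, exploit otherwise. The function below is a minimal sketch, and the example Q-values passed to it are made up.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit
```

With `epsilon=0.0` the agent always exploits, so `epsilon_greedy([0.1, 0.9, 0.3], 0.0)` picks action 1. With `epsilon=1.0` it picks uniformly at random. A common practice is to start with a larger epsilon and shrink it as training progresses.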

Q4: What are some common algorithms used in reinforcement learning?

There are several well-known algorithms used in reinforcement learning, each with its own strengths and limitations. Some popular algorithms include Q-learning, policy gradients, deep Q-networks (DQNs), and actor-critic methods. These algorithms vary in their approach to modeling the learning process, handling continuous or discrete actions, and dealing with large state and action spaces.

For example, Q-learning is a model-free algorithm that learns the values of state-action pairs through iterative updates. Policy gradients, on the other hand, directly optimize the policy of the agent using gradient-based optimization methods. DQNs combine reinforcement learning with deep neural networks to handle high-dimensional state spaces, while actor-critic methods use both a policy network and a value network to estimate the value of actions and improve the policy.
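To give a feel for how policy gradients differ from Q-learning, here is a heavily simplified sketch on a hypothetical two-armed bandit, which is a problem with a single state. It uses a basic REINFORCE-style update on a softmax policy; the reward values and learning rate are assumptions, and practical implementations add refinements such as baselines and neural network policies.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


ARM_REWARDS = [0.0, 1.0]  # hypothetical bandit: arm 1 always pays more


def train_policy_gradient(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0, 0.0]  # policy parameters, one preference per action
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices([0, 1], weights=probs)[0]
        reward = ARM_REWARDS[action]
        # Gradient of log pi(action) with respect to prefs[a]
        # is (1 if a == action else 0) - probs[a]
        for a in range(2):
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * reward * grad_log
    return softmax(prefs)


final_probs = train_policy_gradient()
```

Instead of learning a value for each action and then choosing the best one, this approach nudges the action probabilities directly, so `final_probs` ends up strongly favoring the better arm.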

Q5: What are some real-world applications of reinforcement learning in deep learning?

Reinforcement learning finds applications in various domains, showcasing its versatility and potential impact. Some real-world applications of reinforcement learning in deep learning include robotics, autonomous systems, game playing, recommendation systems, and supply chain management.

In robotics, reinforcement learning can be used to teach robots to perform complex tasks by learning from trial and error. In autonomous systems, reinforcement learning can enable self-driving cars or drones to navigate challenging environments. In game playing, reinforcement learning algorithms have achieved remarkable results, beating world champions in Go and Dota 2 and outplaying the strongest conventional chess engines. In recommendation systems, reinforcement learning can personalize and optimize recommendations based on user feedback. In supply chain management, reinforcement learning can optimize inventory control and resource allocation decisions.

Summary

Reinforcement learning is like a video game for computers. They learn by taking actions, getting rewards, and adjusting strategies. It’s used in self-driving cars and teaching robots to do tasks. The computer learns through trial and error, which makes it really cool!

In reinforcement learning, the computer uses a system of rewards to learn how to make good decisions. It’s like training a dog with treats. The computer gets positive rewards for good actions and negative rewards for bad ones. This helps it improve and find the best way to solve problems. So, with reinforcement learning, computers can teach themselves and become smarter over time!