Have you ever wondered what role activation functions play in deep learning? Activation functions are crucial in determining the output of a neural network. They introduce non-linearity, enabling the network to learn complex patterns and make accurate predictions. Without activation functions, a deep learning model would collapse into a single linear function no matter how many layers it had, limiting the range of problems it could solve.

So, what exactly do activation functions do? Think of them as decision-makers within the neural network. They take in the weighted sum of inputs from the previous layer, apply a mathematical function to it, and produce an output. This output determines how strongly the neuron is activated, if at all; ReLU, for example, keeps a neuron silent until its input rises above zero. By introducing non-linear transformations, activation functions enable the network to model complex relationships between inputs and outputs.

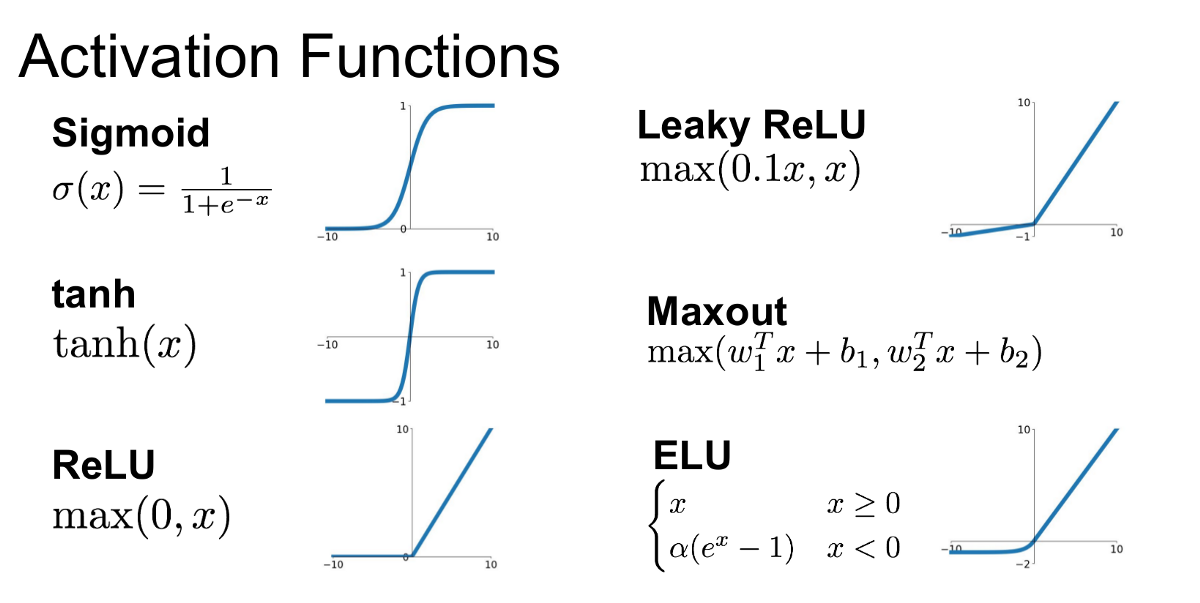

There are various types of activation functions commonly used in deep learning, such as the sigmoid, tanh, and ReLU (Rectified Linear Unit). Each activation function has its own characteristics and strengths, making them suitable for different scenarios. Understanding the role of activation functions is essential for designing effective deep learning models that can solve real-world problems. So, let’s dive deeper into the world of activation functions and uncover their fascinating role in deep learning!

Contents

- Unlocking the Power of Activation Functions in Deep Learning

- 1. Understanding Activation Functions: A Foundation for Deep Learning

- 2. The Sigmoid Function: Modeling Probability-Like Behavior

- 3. The Hyperbolic Tangent Function: Improved Version of the Sigmoid

- 4. Unleashing the Power of ReLU: Overcoming Limitations

- 5. Choosing the Right Activation Function: A Delicate Balance

- The Impact of Activation Functions on Deep Learning Performance

- Activation Function Selection Strategies and Best Practices

- Key Takeaways: What’s the role of activation functions in Deep Learning?

- Frequently Asked Questions

- 1. Why do deep learning models need activation functions?

- 2. What are some commonly used activation functions in deep learning?

- 3. Can I use any activation function in a deep learning model?

- 4. Are there any drawbacks to using activation functions in deep learning?

- 5. Can I use multiple activation functions in a single deep learning model?

- Summary

Unlocking the Power of Activation Functions in Deep Learning

In the realm of deep learning, activation functions play a critical role in shaping the behavior and performance of neural networks. These functions act as nonlinear transformation layers, introducing nonlinearity into the network and enabling it to learn complex patterns and relationships in the data. Activation functions are crucial for achieving the desired outputs and facilitating the training process by enabling gradient-based optimization algorithms to converge effectively. In this article, we will delve into the intricacies of activation functions and explore their significance in deep learning models.

1. Understanding Activation Functions: A Foundation for Deep Learning

Activation functions serve as the building blocks of deep learning models. They introduce nonlinearity into the network, allowing it to model complex, nonlinear relationships in the data. Without activation functions, neural networks would be limited to performing linear transformations, severely restricting their ability to capture and learn intricate patterns.
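To make the "linear only" limitation concrete, here is a minimal sketch in plain Python. The weight matrices `W1` and `W2`, the input `x`, and the helper names are made-up values for illustration, not taken from any real model. It suggests that two stacked layers without an activation collapse into one equivalent linear layer, while placing a ReLU between them breaks that equivalence.

```python
def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def relu_vec(v):
    """Apply ReLU element-wise to a vector."""
    return [max(0.0, vi) for vi in v]


# Hypothetical weights and input, chosen only for illustration.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]   # first layer: 2 inputs -> 3 units
W2 = [[1.0, 0.0, -2.0], [2.0, 1.0, 1.0]]     # second layer: 3 units -> 2 outputs
x = [1.0, -1.0]

# Two linear layers applied one after the other...
two_linear_layers = matvec(W2, matvec(W1, x))
# ...give the same result as a single layer whose weights are the product W2 * W1.
single_layer = matvec(matmul(W2, W1), x)
# With a ReLU in between, the output for this input is different.
with_relu = matvec(W2, relu_vec(matvec(W1, x)))

print(two_linear_layers)  # [-5.0, 1.0]
print(single_layer)       # [-5.0, 1.0]
print(with_relu)          # [-4.0, 3.0]
```

However many purely linear layers are stacked, the same collapse applies, which is why depth alone adds no expressive power without a nonlinearity.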

An activation function takes the weighted sum of the inputs to a neuron, applies a transformation to it, and outputs the result as the activation value. Commonly used activation functions include the sigmoid, tanh, and ReLU (Rectified Linear Unit) functions. Each of these functions offers unique properties that impact the behavior and training of deep learning models.
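The computation described above can be sketched for a single neuron as follows. The `neuron` helper, the bias term, and the example inputs and weights are assumptions added for illustration; the point is only that the same weighted sum produces different activation values depending on the function applied.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical values; the weighted sum works out to roughly -0.4.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

out_sigmoid = neuron(inputs, weights, bias, sigmoid)   # about 0.401
out_tanh = neuron(inputs, weights, bias, math.tanh)    # about -0.380
out_relu = neuron(inputs, weights, bias, relu)         # 0.0, since the sum is negative

print(out_sigmoid, out_tanh, out_relu)
```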

Choosing the right activation function for a specific task is crucial. Each function has characteristics that suit it to some tasks more than others, and understanding these properties and limitations is essential for achieving good performance in deep learning models.

2. The Sigmoid Function: Modeling Probability-Like Behavior

The sigmoid function, also known as the logistic function, was widely used as an activation function in early neural networks. It maps input values to the range between 0 and 1, which gives its output a probability-like interpretation. Its smooth, S-shaped curve introduces nonlinearity to the network, facilitating the modeling of complex patterns.
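As a rough illustration, the sigmoid is defined as sigmoid(z) = 1 / (1 + e^(-z)). The sketch below is one possible implementation in plain Python; splitting the computation by the sign of `z` is a choice made here to avoid floating-point overflow for very negative inputs, not something the formula itself requires.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, written to stay stable for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, exp(z) is small, so this form cannot overflow.
    e = math.exp(z)
    return e / (1.0 + e)


for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(z, round(sigmoid(z), 4))
```

The outputs rise smoothly from near 0 to near 1, pass through exactly 0.5 at `z = 0`, and satisfy `sigmoid(-z) == 1 - sigmoid(z)` up to rounding.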

Despite its advantages, the sigmoid function comes with certain limitations. One major drawback is the vanishing gradient problem, where the gradients of the sigmoid function become extremely small for very high or low input values. This, in turn, hinders the learning process and slows down convergence during training. As a result, the sigmoid function is often avoided in deeper neural networks.
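The vanishing gradient described above follows from the sigmoid's derivative, sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)). The short sketch below (helper names are illustrative) evaluates it at a few points: the derivative peaks at 0.25 when `z = 0` and shrinks toward zero as the input moves away from it in either direction.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid with respect to its input."""
    s = sigmoid(z)
    return s * (1.0 - s)


for z in (0.0, 2.0, 5.0, 10.0, -10.0):
    print(z, sigmoid_grad(z))
```

At `z = 10` the derivative is already below 0.0001, so a weight update that depends on it is correspondingly tiny.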

While the sigmoid function has fallen out of favor for hidden layers in deep learning models, it is still commonly used in the output layer for binary classification tasks, where the output needs to be interpreted as a probability.

3. The Hyperbolic Tangent Function: Improved Version of the Sigmoid

The hyperbolic tangent function, commonly known as tanh, addresses some of the limitations of the sigmoid function. The tanh function maps input values to a range between -1 and 1 and has a steeper slope around zero, so it passes back larger gradients than the sigmoid function does.

Like the sigmoid function, tanh also suffers from the vanishing gradient problem. However, the tanh function’s outputs are centered around zero, making it more effective in capturing both positive and negative aspects of the data. This property makes the tanh function more suitable for hidden layers in deep neural networks where nonlinearity is required.
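The relationship between the two functions can be checked directly: tanh is a rescaled and shifted sigmoid, tanh(z) = 2 * sigmoid(2z) - 1. The sketch below, with illustrative helper names, verifies that identity numerically and compares the derivatives at zero and in the saturated region.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def tanh_grad(z):
    """Derivative of tanh: 1 - tanh(z)^2."""
    t = math.tanh(z)
    return 1.0 - t * t


# tanh is a stretched, zero-centered version of the sigmoid.
for z in (-2.0, -0.5, 0.0, 0.5, 2.0):
    assert abs(math.tanh(z) - (2.0 * sigmoid(2.0 * z) - 1.0)) < 1e-12

print(math.tanh(0.0), sigmoid(0.0))        # 0.0 vs 0.5: only tanh is centered on zero
print(tanh_grad(0.0), sigmoid_grad(0.0))   # 1.0 vs 0.25: tanh has the steeper slope
print(tanh_grad(5.0))                      # still close to zero once saturated
```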

Although the tanh function helps alleviate the vanishing gradient problem to a certain extent, it is still not the ideal choice for all scenarios. Recent advancements in deep learning have led to the dominance of the Rectified Linear Unit (ReLU) activation function.

4. Unleashing the Power of ReLU: Overcoming Limitations

The Rectified Linear Unit (ReLU) is arguably the most popular activation function in deep learning models today. Unlike the sigmoid and tanh functions, ReLU is linear for positive values and zero for negative values. Because its slope is exactly 1 for every positive input, it does not saturate on that side, which helps address the vanishing gradient problem and allows for faster convergence and improved learning in deep neural networks.
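In code, ReLU is simply relu(z) = max(0, z). The following sketch also defines its gradient; treating the gradient at exactly `z = 0` as 0 is a convention assumed here, since the function has no derivative at that point.

```python
def relu(z):
    """Pass positive inputs through unchanged; clamp the rest to zero."""
    return max(0.0, z)


def relu_grad(z):
    """Slope of ReLU: 1 for positive inputs, 0 otherwise (0 at z == 0 by convention)."""
    return 1.0 if z > 0 else 0.0


for z in (-3.0, -0.5, 0.0, 0.5, 3.0, 100.0):
    print(z, relu(z), relu_grad(z))
```

The gradient stays at 1 even for an input of 100, where sigmoid and tanh would both be fully saturated.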

ReLU offers several benefits that contribute to its widespread adoption. It introduces sparsity in the network by selectively activating only certain neurons, resulting in more efficient utilization of computational resources. Additionally, ReLU does not suffer from the vanishing gradient problem for positive inputs, unlike sigmoid and tanh functions. However, ReLU can cause the “dying ReLU” problem, where some neurons become permanently inactive, hindering the learning process.

To mitigate the “dying ReLU” problem, variations of ReLU such as Leaky ReLU, Parametric ReLU (PReLU), and Exponential Linear Unit (ELU) have been introduced, offering improved performance and addressing the limitations of the original ReLU function. These variations provide alternative activation options for different use cases, allowing practitioners to choose the most suitable function for their specific needs.
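A minimal sketch of two of these variants is shown below. The default slopes (`alpha=0.01` for Leaky ReLU and `alpha=1.0` for ELU) are commonly used values assumed here for illustration. PReLU has the same form as Leaky ReLU, except that `alpha` is learned during training rather than fixed.

```python
import math


def leaky_relu(z, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope instead of being zeroed."""
    return z if z > 0 else alpha * z


def leaky_relu_grad(z, alpha=0.01):
    """The gradient is never exactly zero, so a unit can recover from negative inputs."""
    return 1.0 if z > 0 else alpha


def elu(z, alpha=1.0):
    """Exponential Linear Unit: smooth curve that approaches -alpha for very negative z."""
    return z if z > 0 else alpha * math.expm1(z)


for z in (-5.0, -1.0, 0.0, 2.0):
    print(z, leaky_relu(z), elu(z))
```

Because the gradient for negative inputs is small but nonzero, weight updates can still move an inactive unit back into the positive region.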

5. Choosing the Right Activation Function: A Delicate Balance

The choice of activation function significantly impacts the performance, convergence, and generalization ability of deep learning models. It is essential to consider the properties of the activation functions and select the most appropriate one for the task at hand.

While ReLU and its variations have become the go-to choice for many deep learning practitioners, there are scenarios where other activation functions might yield better results. For example, the sigmoid function is still commonly used in binary classification tasks where an interpretable probability output is desired. The tanh function might be suitable for tasks where the data has negative values and capturing their polarity is important.

Adaptability is key in determining the ideal activation function. Experimenting with different functions, monitoring model performance, and staying updated with advancements in the field are crucial to making informed decisions and harnessing the full potential of activation functions in deep learning.

The Impact of Activation Functions on Deep Learning Performance

The role of activation functions in deep learning extends beyond just nonlinearity. Their impact reaches various aspects of neural network behavior and performance, ultimately influencing the model’s accuracy, convergence speed, and ability to generalize to unseen data. In this section, we will explore the significance of activation functions in deep learning performance through the lens of various parameters.

1. Model Convergence and Gradient Flow

Activation functions play a vital role in ensuring smooth and efficient model convergence during the training phase. One crucial aspect is the behavior of gradients that flow backward through the network during the backpropagation process. Activation functions directly influence the gradient flow, and their choice can impact how gradients are propagated and updated during optimization.

In the case of sigmoid and tanh functions, the gradients can become vanishingly small for certain inputs, leading to a slow convergence rate. On the other hand, ReLU and its variants mitigate this issue, promoting better gradient flow and faster convergence. The choice of the activation function can significantly affect the optimization process, potentially reducing training time and improving the efficiency of the model.
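A simplified way to see the effect of depth: during backpropagation, the gradient reaching an early layer includes the product of the activation derivatives of every layer after it. The sketch below multiplies those derivatives along a single path through 10 layers. It deliberately ignores the weight matrices, which also scale the gradient in a real network, and the chosen pre-activation values are assumptions for illustration.

```python
import math


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


def chained_gradient(grad_fn, pre_activations):
    """Product of activation derivatives along one path through the layers."""
    product = 1.0
    for z in pre_activations:
        product *= grad_fn(z)
    return product


depth = 10
# Best case for sigmoid: every unit sits at z = 0, where its slope peaks at 0.25.
sigmoid_best_case = chained_gradient(sigmoid_grad, [0.0] * depth)
# ReLU on a path where every unit is active (z > 0): each slope is exactly 1.
relu_active_path = chained_gradient(relu_grad, [1.0] * depth)

print(sigmoid_best_case)  # 0.25 ** 10, roughly 9.5e-07
print(relu_active_path)   # 1.0
```

Even in its best case, the sigmoid shrinks the signal by about six orders of magnitude over 10 layers, while an active ReLU path passes it through unchanged.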

Understanding the gradient flow and its relationship with different activation functions is crucial when designing and training deep learning models. By selecting appropriate activation functions, practitioners can optimize convergence speed, stabilize training, and facilitate efficient gradient-based optimization.

2. Avoiding Overfitting and Enhancing Generalization

Overfitting is a common challenge in deep learning models, where the model learns to memorize the training data instead of generalizing and performing well on unseen data. Activation functions can play a role in mitigating the risk of overfitting and improving the model’s ability to generalize to new examples.

Nonlinear activation functions like ReLU and its variants introduce a form of regularization by selectively activating neurons and promoting sparsity within the network. This regularization effect helps prevent overfitting, as the model is forced to focus on the most relevant features and patterns in the data. Moreover, the ability of activation functions to introduce nonlinearity allows deep learning models to capture intricate patterns and relationships, enhancing their generalization ability.
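The sparsity mentioned above is easy to observe. The sketch below draws pre-activation values from a zero-mean Gaussian, which is an assumption made for illustration (real networks can have quite different distributions), and counts how many ReLU outputs are exactly zero.

```python
import random


def relu(z):
    return max(0.0, z)


rng = random.Random(0)  # fixed seed so the run is repeatable
pre_activations = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

activations = [relu(z) for z in pre_activations]
zero_fraction = sum(1 for a in activations if a == 0.0) / len(activations)

print(f"Fraction of inactive units: {zero_fraction:.2f}")
```

With symmetric inputs like these, roughly half of the units output zero for any given example, whereas sigmoid and tanh units almost never output an exact zero.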

By choosing suitable activation functions, deep learning practitioners can strike a balance between capturing complex patterns and avoiding overfitting, ultimately leading to models that generalize well and perform accurately on unseen data.

3. Impact on Information Flow and Representational Power

Activation functions influence the flow of information through the neural network and shape the network’s capacity to represent and learn complex patterns. The choice of activation function can impact how well the model can learn and express different levels of abstraction and granularity in the data.

Functions like sigmoid and tanh saturate for extreme input values: once a unit is pushed far into a flat region, its output barely changes with its input, which makes fine-grained distinctions harder to learn. ReLU and its variants do not saturate for positive inputs, which in practice often lets the model learn a wider range of features and relationships.

Understanding the interplay between activation functions and the expressiveness of deep learning models is crucial for achieving optimal performance. By selecting appropriate activation functions, neural networks can be empowered to comprehend and extract salient features and relationships in the data, increasing their capacity to handle complex tasks and achieve higher accuracy.

Activation Function Selection Strategies and Best Practices

Choosing the right activation function is a critical decision that impacts the performance, convergence, and capabilities of deep learning models. While there is no one-size-fits-all solution, here are some strategies and best practices to consider when selecting activation functions:

1. Task and Data Understanding

Gain a deep understanding of the task at hand and the characteristics of the data being used. Different tasks and data distributions might require different activation functions to capture the relevant patterns and relationships. Consider factors such as the data’s range, distribution, and the output requirements of the task.

2. Experiment and Compare

Conduct experiments by training the models with different activation functions and compare their performance. Monitor metrics such as accuracy, convergence speed, and generalization ability on validation and test datasets to evaluate the impact of different activation functions.

3. Consider Network Depth

For deeper networks, activation functions with better gradient flow, such as ReLU and its variants, are generally preferred. These functions help mitigate the vanishing gradient problem and facilitate training in deeper architectures. However, it is crucial to watch for the “dying ReLU” problem and switch to a variant or an alternative activation function if necessary.

4. Beware of Saturated Regions

Avoid activation functions like sigmoid and tanh in hidden layers of deep neural networks, as they saturate for extreme input values, leading to vanishing gradients and slower convergence. Reserve these functions for specific use cases, such as binary classification tasks or when modeling probability-like behavior is required.

5. Leverage Advanced Activation Functions

Explore advanced activation functions and their variants, such as Leaky ReLU, Parametric ReLU (PReLU), and Exponential Linear Unit (ELU). These functions offer improved performance and address potential limitations of the traditional activation functions, providing better alternatives for various use cases.

6. Combine Different Activation Functions

Consider using a combination of activation functions in different layers of the neural network. This can leverage the strengths of different functions and tailor them to the specific requirements of each layer, optimizing the network’s representation and learning capabilities.

7. Regularization Techniques

Incorporate regularization techniques, such as dropout or batch normalization, in conjunction with activation functions to further enhance the model’s performance and generalization ability. These techniques can help address overfitting and improve the stability of training.

8. Stay Informed and Experiment

Stay updated with the latest advancements and research in the field of activation functions. New functions are continually being proposed, each with its own set of advantages and improvements. Keep experimenting with different activation functions and adapting as the field progresses.

By following these strategies and best practices, deep learning practitioners can optimize the selection of activation functions, leading to models that perform better, generalize well, and unlock the full potential of deep learning.

Key Takeaways: What’s the role of activation functions in Deep Learning?

- Activation functions determine the output of a neural network.

- They add non-linearity to the model, allowing it to learn complex patterns and relationships.

- Common activation functions include sigmoid, tanh, and ReLU.

- The choice of activation function depends on the task and the neural network architecture.

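- Some activation functions, such as ReLU and its variants, help mitigate vanishing gradients and improve the convergence of the model, while saturating functions like sigmoid and tanh can make the problem worse.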

Frequently Asked Questions

When it comes to deep learning, activation functions play a crucial role in the overall performance of neural networks. These functions introduce non-linearity, allowing the network to learn and capture complex patterns in the data. In this Q&A, we will explore the significance of activation functions in deep learning.

1. Why do deep learning models need activation functions?

Activation functions are essential in deep learning models because they introduce non-linearity, enabling the network to learn and interpret complex patterns in the data. Without activation functions, neural networks would simply be a series of linear transformations, severely limiting their ability to model intricate relationships.

Activation functions also determine the output of a neuron, defining whether it should be activated or not based on a specific threshold. This enables the network to capture non-linear relationships between inputs, making it capable of representing complex decision boundaries and solving more complex problems.

2. What are some commonly used activation functions in deep learning?

There are several activation functions commonly used in deep learning. One of the most popular is the Rectified Linear Unit (ReLU), which replaces negative values with zero and keeps positive values unchanged. ReLU is widely adopted due to its simplicity and effectiveness in overcoming the vanishing gradient problem.

Other commonly used activation functions include the Sigmoid function, which produces a smooth S-shaped curve with outputs between 0 and 1, and the Hyperbolic Tangent (tanh) function, which is similar to the Sigmoid function but outputs values between -1 and 1. Both Sigmoid and tanh functions are useful in cases where the output must stay within a fixed, bounded range.

3. Can I use any activation function in a deep learning model?

While there is no hard and fast rule, it’s important to consider the characteristics of different activation functions before choosing one for your deep learning model. Some activation functions, like ReLU, work well in most cases and are widely used. However, certain scenarios may require different activation functions.

For example, if you want zero-centered activations bounded between -1 and 1, a function like tanh may be more appropriate. Additionally, in cases where you are dealing with a binary classification problem, the Sigmoid function can be useful in the output layer, as it squashes the output between 0 and 1, representing a probability.

4. Are there any drawbacks to using activation functions in deep learning?

While activation functions are essential for deep learning models, they do have some limitations. One common issue is the vanishing gradient problem, which occurs when gradients become too small during backpropagation due to certain activation functions. This can hinder the network’s ability to learn and update its weights effectively.

Another potential concern is a mismatch between the activation function and the problem. Certain activation functions may not be suited for specific types of data or can lead to the saturation of neurons, resulting in slower convergence. It’s crucial to experiment with different activation functions and understand their impact on your specific problem domain.

5. Can I use multiple activation functions in a single deep learning model?

Absolutely! In fact, using different activation functions in different layers is quite common in deep learning. Each layer of a multi-layer neural network can have its own activation function, so the choice can be matched to what that layer needs to do.

For instance, you could use ReLU in the hidden layers to introduce non-linearity and a sigmoid function in the output layer for binary classification. This combination of activation functions allows the model to effectively handle different aspects of the problem and optimize its performance.
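The combination described above can be sketched as a tiny forward pass: one hidden layer with ReLU followed by a single sigmoid output unit. All weights, biases, and the input are hypothetical values picked for illustration, and the `forward` helper is not from any particular library.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """Hidden layer with ReLU, then one sigmoid output unit."""
    hidden = [
        relu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(hidden_weights, hidden_biases)
    ]
    z_out = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return hidden, sigmoid(z_out)


# Hypothetical parameters for a 2-input, 2-hidden-unit, 1-output network.
hidden_weights = [[0.5, -1.0], [1.5, 0.5]]
hidden_biases = [0.0, -1.0]
output_weights = [1.0, -2.0]
output_bias = 0.5

hidden, probability = forward(
    [1.0, 2.0], hidden_weights, hidden_biases, output_weights, output_bias
)
print(hidden)       # [0.0, 1.5]: the first hidden unit is switched off by ReLU
print(probability)  # about 0.076, readable as the probability of the positive class
```

The hidden layer supplies the nonlinearity, while the sigmoid in the output layer keeps the final value between 0 and 1.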

Summary

Activation functions play a vital role in deep learning models. They help in determining the output of a neuron and introduce non-linearity to the network. Different activation functions like sigmoid, ReLU, and tanh have their own strengths and weaknesses. Sigmoid functions are good for the output layer in binary classification, ReLU is effective in avoiding the vanishing gradient problem, while tanh offers a zero-centered output range from -1 to 1.

Choosing the right activation function is crucial for the success of a deep learning model. It depends on the nature of the problem and the type of data being used. Proper selection helps in improving the model’s performance and achieving accurate predictions. So, don’t overlook the importance of activation functions when diving into the exciting world of deep learning!