What’s the scoop on ensemble learning? Well, let me tell you, it’s like a team of superheroes working together to save the day! 🦸🦸‍♀️ You see, ensemble learning is a powerful approach that combines the predictions of multiple machine learning models to make more accurate decisions. It’s like having a bunch of experts sharing their insights to solve a problem.

Ensemble learning takes advantage of the idea that different models have different strengths and weaknesses. By blending their predictions, we can often create a stronger and more robust model than any of the individual models on its own. It’s like assembling a dream team of superheroes, each with their unique superpower, to fight the bad guys!

The beauty of ensemble learning lies in its ability to reduce errors, improve generalization, and enhance predictive performance. It’s like having a safety net or a backup plan when one model falters. So, if you’re looking to boost your machine learning mojo, ensemble learning might just be the secret weapon you need! 💪 Buckle up and let’s dive deeper into the intriguing world of ensemble learning.

Contents

- What’s the Scoop on Ensemble Learning?

- The Power of Ensemble Learning

- Types of Ensemble Learning Techniques

- Benefits of Ensemble Learning

- Tips for Implementing Ensemble Learning

- Real-World Applications of Ensemble Learning

- Challenges and Limitations of Ensemble Learning

- Ensuring Success with Ensemble Learning

- Key Takeaways: What’s the scoop on ensemble learning?

- Frequently Asked Questions

- 1. What is ensemble learning?

- 2. What are the advantages of ensemble learning?

- 3. How do ensemble learning models make predictions?

- 4. What are some popular ensemble learning algorithms?

- 5. How do you train an ensemble learning model?

- Summary

What’s the Scoop on Ensemble Learning?

Ensemble learning has gained popularity in the field of machine learning due to its ability to improve prediction accuracy and generalization. It involves combining multiple individual learning models, known as base learners, to form a stronger and more robust model. By leveraging the diverse perspectives and strengths of these individual models, ensemble learning enhances the overall performance and reliability of predictions.

In this article, we will explore the concept of ensemble learning, its various techniques, and the benefits it offers. We will delve into the different types of ensemble learning algorithms, their applications, and tips for implementing ensemble learning effectively. So, sit back and get ready to uncover the scoop on ensemble learning!

The Power of Ensemble Learning

Ensemble learning taps into the wisdom of the crowd, harnessing the collective intelligence of multiple models to make better predictions. It rests on the premise that a diverse group of models can provide complementary perspectives, so that the errors individual models make partly cancel out when their predictions are combined. This approach is often compared to democracy: each model gets a vote, and the final decision is made by majority or by a weighted average.
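To see why combining votes can help, here is a small sketch (Python, standard library only) that computes how often a majority vote is right on a binary task. It rests on an idealized assumption: every model is right with the same probability and the models' errors are fully independent. The function name `majority_vote_accuracy` is illustrative, not from any library.

```python
from math import comb


def majority_vote_accuracy(n_models: int, p_correct: float) -> float:
    """Probability that a strict majority of n_models classifiers is right on a
    binary task, ASSUMING each is right with probability p_correct and their
    errors are fully independent (an idealization)."""
    majority = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(majority, n_models + 1)
    )


# Odd ensemble sizes avoid ties in a two-class vote
for n in (1, 5, 25):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

Under these assumptions, five members that are each right 70% of the time give a vote that is right about 83.7% of the time. The arithmetic cuts both ways: with members right only 40% of the time, the vote drops to about 31.7%. Real models' errors are correlated, so actual gains are smaller.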

Ensemble learning can be applied to a wide range of machine learning problems, including classification, regression, feature selection, and anomaly detection. It has delivered remarkable results in various domains, such as finance, healthcare, image recognition, and natural language processing. The robustness, accuracy, and generalization capabilities of ensemble models have made them go-to methods in many real-world applications. Now, let’s dive into the details of ensemble learning techniques and explore the secrets behind their success.

Types of Ensemble Learning Techniques

1. Bagging (Bootstrap Aggregating)

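Bagging is a popular ensemble technique that creates multiple training sets by bootstrap sampling, that is, by drawing random samples of the same size as the original training data with replacement. Each bootstrap sample is used to train a separate base learner, such as a decision tree or a neural network. The final prediction is obtained by aggregating the predictions of all base learners, typically by majority vote for classification or by averaging for regression. Because each learner sees a slightly different version of the data, bagging mainly reduces variance, which helps curb overfitting in unstable learners such as deep decision trees.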
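A minimal from-scratch sketch of bagging, assuming 1-D numeric inputs, 0/1 labels, and decision stumps (one-split trees) as the base learner. These are choices made here for illustration; libraries such as scikit-learn offer ready-made implementations. The helper names are hypothetical.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump by trying every threshold and both orientations,
    keeping the rule with the fewest training errors."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [1 if polarity * (x - t) > 0 else 0 for x in xs]
            errors = sum(p != y for p, y in zip(preds, ys))
            if best is None or errors < best[0]:
                best = (errors, t, polarity)
    _, t, polarity = best
    return lambda x: 1 if polarity * (x - t) > 0 else 0


def bagging_fit(xs, ys, n_estimators=25, seed=0):
    """Train n_estimators stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        # Bootstrap sample: n row indices drawn uniformly *with replacement*
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate by majority vote (an odd n_estimators avoids ties for 0/1 labels)."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


# Toy 1-D data whose labels overlap around x=5 and x=6
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
models = bagging_fit(xs, ys)
print([bagging_predict(models, x) for x in xs])
```

Each stump fit to one bootstrap sample reflects that sample's quirks near the fuzzy boundary; the vote smooths them out. Swapping `fit_stump` for a deeper, less stable learner is where bagging typically helps most.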

2. Boosting

Unlike bagging, which trains its base learners independently, boosting builds a strong model sequentially from a series of weak learners, each one concentrating on the examples the ensemble so far gets wrong. This iterative process helps the model learn from its mistakes and gradually improve its performance. Common boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost, each with its own approach: AdaBoost increases the weights of misclassified instances before training the next learner, while Gradient Boosting and XGBoost fit each new learner to the remaining errors (more precisely, the negative gradient of the loss) of the current ensemble.
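The reweighting idea behind AdaBoost can be sketched in a few lines. This is discrete AdaBoost with decision stumps on 1-D data and labels in {+1, -1}; it illustrates AdaBoost specifically, not gradient boosting, and the function names are hypothetical.

```python
import math


def stump_predict(t, polarity, x):
    """Predict +1/-1: `polarity` above the threshold t, `-polarity` at or below it."""
    return polarity if x > t else -polarity


def fit_weighted_stump(xs, ys, w):
    """Choose the stump (threshold, polarity) with the lowest *weighted* error."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if stump_predict(t, polarity, x) != y)
            if best is None or err < best[2]:
                best = (t, polarity, err)
    return best


def adaboost_fit(xs, ys, n_rounds):
    """Discrete AdaBoost with decision stumps; labels must be +1 or -1."""
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform instance weights
    ensemble = []
    for _ in range(n_rounds):
        t, polarity, err = fit_weighted_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # this learner's say in the final vote
        ensemble.append((alpha, t, polarity))
        # Up-weight misclassified instances (y * h(x) = -1), down-weight correct ones
        w = [wi * math.exp(-alpha * y * stump_predict(t, polarity, x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of stump votes."""
    score = sum(alpha * stump_predict(t, polarity, x) for alpha, t, polarity in ensemble)
    return 1 if score >= 0 else -1


# An "interval" concept: +1 only for 4 <= x <= 7, which no single stump can express
xs = list(range(1, 11))
ys = [-1, -1, -1, 1, 1, 1, 1, -1, -1, -1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])  # → [-1, -1, -1, 1, 1, 1, 1, -1, -1, -1]
```

The best single stump gets only 7 of the 10 points right; after three rounds the weighted vote fits all ten training points. Training accuracy says nothing about generalization, so in practice the number of rounds is tuned on held-out data.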

3. Random Forest

Random Forest is a powerful ensemble technique that combines bagging with random feature selection. It builds an ensemble of decision trees, where each tree is trained on a bootstrap sample of the data and, at each split, considers only a random subset of the features. This extra randomness makes the trees less correlated with one another. The final prediction is obtained by averaging (for regression) or voting (for classification) over the individual trees. Random Forest is fairly robust against overfitting, scales well to large datasets, and provides estimates of feature importance.

4. Stacking

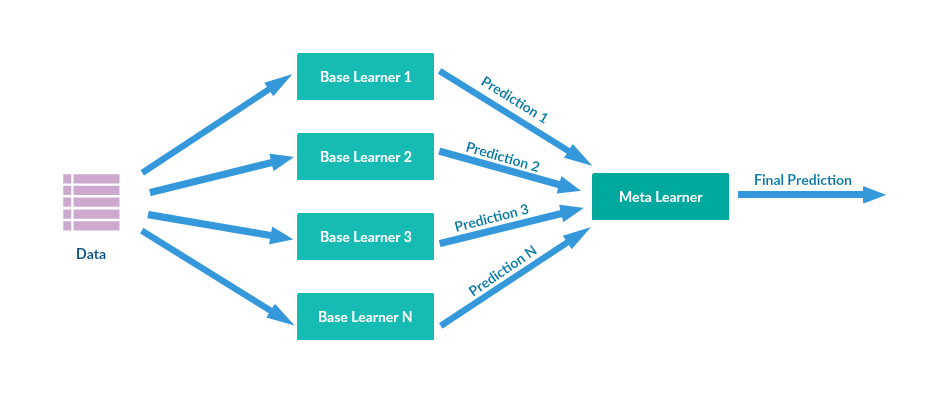

Stacking takes ensemble learning to the next level by introducing a meta-learner that combines the predictions of the base learners. Unlike bagging and boosting, stacking involves multiple layers. In the first layer, the base learners make predictions on data they were not trained on, usually out-of-fold predictions obtained with cross-validation; otherwise the meta-learner would learn to trust base learners that merely memorized the training set. These predictions become the input for the meta-learner, which then produces the final prediction. Stacking can capture more complex relationships between the individual models' outputs than a fixed vote or average, which can improve performance.
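Below is a small sketch of stacking for regression, assuming two hypothetical base learners (each a simple linear regression on a single feature) and a linear meta-learner. The essential detail is that the meta-learner is trained on out-of-fold predictions; everything else, including the tiny least-squares solver, is an illustrative choice rather than a reference implementation.

```python
def lstsq(X, y):
    """Ordinary least squares via the normal equations (adequate for tiny, well-conditioned problems)."""
    k = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    b = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    for col in range(k):  # Gaussian elimination with partial pivoting
        piv = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[piv], b[col], b[piv] = A[piv], A[col], b[piv], b[col]
        for r in range(col + 1, k):
            f = A[r][col] / A[col][col]
            for c in range(col, k):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    w = [0.0] * k
    for i in reversed(range(k)):  # back substitution
        w[i] = (b[i] - sum(A[i][j] * w[j] for j in range(i + 1, k))) / A[i][i]
    return w


def linear_on_feature(f):
    """Hypothetical base learner: simple linear regression on feature f only."""
    def fit(rows, ys):
        w = lstsq([[1.0, r[f]] for r in rows], ys)
        return lambda r: w[0] + w[1] * r[f]
    return fit


def out_of_fold(fit, rows, ys, n_folds):
    """Predict each row with a model trained on the other folds (row i is in fold i % n_folds)."""
    preds = [0.0] * len(rows)
    for k in range(n_folds):
        train = [i for i in range(len(rows)) if i % n_folds != k]
        model = fit([rows[i] for i in train], [ys[i] for i in train])
        for i in range(len(rows)):
            if i % n_folds == k:
                preds[i] = model(rows[i])
    return preds


def stacking_fit(base_fits, rows, ys, n_folds=5):
    # Level-1 inputs are out-of-fold predictions, so the meta-learner never sees a
    # base model's prediction on a row that model was trained on.
    oof = [out_of_fold(fit, rows, ys, n_folds) for fit in base_fits]
    meta_w = lstsq([[1.0] + [p[i] for p in oof] for i in range(len(rows))], ys)
    # At prediction time the base models are refit on all the training data.
    return [fit(rows, ys) for fit in base_fits], meta_w


def stacking_predict(base_models, meta_w, row):
    feats = [1.0] + [m(row) for m in base_models]
    return sum(w * v for w, v in zip(meta_w, feats))


# Toy data: y depends on both features, but each base learner sees only one of them
rows = [(float(i), float((7 * i) % 10)) for i in range(20)]
ys = [x0 + 2 * x1 + (0.5 if i % 2 else -0.5) for i, (x0, x1) in enumerate(rows)]
base_models, meta_w = stacking_fit([linear_on_feature(0), linear_on_feature(1)], rows, ys)
print(meta_w, stacking_predict(base_models, meta_w, (3.0, 4.0)))
```

Because each base learner sees only one feature, neither fits `ys` well alone; the meta-learner learns how much weight each deserves. Rows are assigned to folds by `i % n_folds` purely for simplicity; shuffled or stratified folds are more common.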

5. Voting

Voting, also known as majority voting or ensemble averaging, combines the predictions of multiple base learners using a voting strategy. The base learners can be different algorithms or variations of the same algorithm with different hyperparameters. In hard voting, each model casts a vote for a class and the class with the most votes wins; in weighted voting, each vote counts in proportion to how reliable the base learner is (for example, its validation accuracy). For classifiers that output probabilities, soft voting averages the predicted class probabilities and picks the most probable class.
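A minimal sketch of hard, weighted, and soft voting in Python. The three classifiers' outputs are hypothetical, and in the probability lists each class is identified by its index.

```python
from collections import Counter


def hard_vote(labels, weights=None):
    """Return the label with the largest (optionally weighted) vote count."""
    weights = weights or [1.0] * len(labels)
    tally = Counter()
    for label, w in zip(labels, weights):
        tally[label] += w
    return max(tally, key=tally.get)


def soft_vote(prob_rows, weights=None):
    """Average each class's predicted probability across models (optionally
    weighted) and return (winning class index, averaged probabilities)."""
    weights = weights or [1.0] * len(prob_rows)
    n_classes = len(prob_rows[0])
    avg = [sum(w * p[c] for p, w in zip(prob_rows, weights)) / sum(weights)
           for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: avg[c]), avg


# Three hypothetical classifiers; class order is ["ham", "spam"]
labels = ["spam", "spam", "ham"]
probs = [[0.45, 0.55], [0.40, 0.60], [0.95, 0.05]]
print(hard_vote(labels))                     # → spam (2 votes to 1)
print(hard_vote(labels, weights=[1, 1, 3]))  # → ham (weight 3 vs 2)
print(soft_vote(probs)[0])                   # → 0, i.e. ham (average 0.6 vs 0.4)
```

The strategies can disagree: two lukewarm spam votes win a plain majority, but the third model's confident ham prediction wins once probabilities are averaged.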

6. Cascading

Cascading, also referred to as sequentially connected classifiers, is an ensemble technique where the output of one classifier becomes the input for the next classifier in a predefined order. Each classifier focuses on a particular subset of the data or a specific aspect of the problem. Cascading allows for building a pipeline of classifiers that can handle complex tasks by breaking them down into simpler subtasks.
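One common form of cascading routes inputs by confidence: a cheap first stage decides the easy cases and passes only the uncertain ones on to a more expensive stage. The sketch below assumes each stage returns a `(label, confidence)` pair; the stages themselves are hypothetical toys, and other cascade variants (for example, feeding one stage's outputs to the next as extra features) are not shown.

```python
def cascade_predict(stages, x):
    """Run (classifier, threshold) stages in order. Each classifier returns
    (label, confidence); the first stage that is confident enough decides, and the
    last stage always decides. Returns (label, index of the deciding stage)."""
    for i, (classify, threshold) in enumerate(stages):
        label, confidence = classify(x)
        if confidence >= threshold or i == len(stages) - 1:
            return label, i


def cheap_rule(x):
    """Hypothetical fast stage: sign of x, trusted more the farther x is from 0."""
    return int(x > 0), min(1.0, abs(x) / 10)


def expensive_model(x):
    """Hypothetical slow but accurate stage (pretend the true boundary is 0.5)."""
    return int(x > 0.5), 1.0


stages = [(cheap_rule, 0.8), (expensive_model, 0.0)]
for x in (-50, 0.3, 2, 50):
    print(x, cascade_predict(stages, x))
# → -50 (0, 0), 0.3 (0, 1), 2 (1, 1), 50 (1, 0)
```

Here -50 and 50 are settled by the cheap rule (stage 0), while 0.3 and 2 are passed to stage 1. The thresholds trade accuracy against how often the expensive stage has to run.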

7. Bayesian Model Averaging (BMA)

BMA combines the predictions of multiple models by computing a weighted average of their individual predictions. The weights are the posterior probabilities of the models given the data, which combine each model’s prior probability with how well it explains the observed data (its marginal likelihood). BMA is rooted in Bayesian statistics and provides a systematic framework for model averaging that accounts for uncertainty about which model is correct. It is especially useful when that model uncertainty is high, as is often the case with small or noisy datasets.
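Formally, BMA predicts with p(y | D) = Σ_k p(y | M_k, D) p(M_k | D), where p(M_k | D) ∝ p(D | M_k) p(M_k). The marginal likelihood p(D | M_k) is often hard to compute, so the sketch below uses a common approximation, p(D | M_k) ∝ exp(-BIC_k / 2). The BIC scores and predictions are made-up numbers, and priors default to uniform.

```python
import math


def bma_weights(bics, priors=None):
    """Approximate posterior model probabilities p(M_k | D) from BIC scores,
    using p(D | M_k) ∝ exp(-BIC_k / 2); priors default to uniform."""
    priors = priors or [1.0 / len(bics)] * len(bics)
    best = min(bics)  # shift by the smallest BIC so exp() cannot underflow to all zeros
    unnorm = [p * math.exp(-(b - best) / 2) for b, p in zip(bics, priors)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def bma_predict(predictions, weights):
    """Posterior-weighted average of the individual models' predictions."""
    return sum(w * p for w, p in zip(weights, predictions))


# Made-up BIC scores and point predictions for three candidate models
weights = bma_weights([100.0, 102.0, 110.0])
print([round(w, 3) for w in weights])
print(bma_predict([3.0, 4.0, 10.0], weights))
```

With BIC scores of 100, 102 and 110, the weights come out at roughly 0.73, 0.27 and 0.005, so the poorly supported third model barely moves the averaged prediction.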

Benefits of Ensemble Learning

Ensemble learning offers several key benefits that make it a popular choice in machine learning:

- Improved Accuracy: Ensemble models often achieve higher predictive accuracy than individual models because they leverage the collective knowledge of multiple models.

- Better Generalization: Ensemble learning reduces the risk of overfitting and enhances the ability of models to generalize to unseen data.

- Robustness: Ensemble models are more robust to noise and outliers in the data due to their diverse nature and collective decision-making.

- Enhanced Stability: Combining multiple models reduces the impact of model instability, resulting in more consistent and reliable predictions.

- Insights into Uncertainty: The spread or disagreement among the members’ predictions gives a rough measure of how uncertain a prediction is, allowing for more informed decision-making.
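As a rough illustration of the uncertainty point, the sketch below assumes you already have each member's prediction for a single input: for regression it reports the mean and the standard deviation across members, and for classification the winning label with its vote share. Treating disagreement as uncertainty is a heuristic, not a calibrated probability, and the member predictions are hypothetical.

```python
from collections import Counter
from statistics import mean, stdev


def regression_spread(member_preds):
    """Ensemble prediction (mean) and disagreement (sample std. dev.) across members."""
    return mean(member_preds), stdev(member_preds)


def vote_share(member_labels):
    """Winning class label and the fraction of members that voted for it."""
    label, count = Counter(member_labels).most_common(1)[0]
    return label, count / len(member_labels)


# Hypothetical predictions from four ensemble members for two inputs
print(regression_spread([10.1, 9.8, 10.3, 10.0]))  # members agree: small spread
print(regression_spread([4.0, 12.5, 9.1, 15.2]))   # members disagree: large spread
print(vote_share(["cat", "cat", "dog", "cat"]))    # → ('cat', 0.75)
```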

Tips for Implementing Ensemble Learning

Implementing ensemble learning effectively requires careful consideration and planning. Here are some tips to help you make the most out of ensemble learning techniques:

- Diversity: Ensure that the base learners in your ensemble are diverse, either by using different algorithms or by varying the hyperparameters.

- Ensemble Size: Experiment with the number of base learners in the ensemble to find the right balance between performance and computational efficiency.

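- Quality of Base Learners: Each base learner should perform better than random guessing. The right bias/variance profile depends on the method: bagging benefits most from low-bias, high-variance learners (such as deep decision trees), while boosting works well with simple, high-bias weak learners (such as shallow trees).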

- Data Sampling: Consider using different sampling techniques, such as bootstrapping or stratified sampling, to create diverse subsets of data for training the base learners.

- Model Combination: Experiment with different combination methods, such as averaging, voting, or weighted voting, to find the most suitable approach for your problem.

- Regularization: Regularization techniques, such as dropout or L1/L2 regularization, can help prevent overfitting in ensemble models with high capacity.

- Tuning: Continuously monitor and fine-tune the hyperparameters of the individual base learners and the ensemble to optimize performance.
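One simple way to act on the diversity tip is to measure how often base learners disagree on a validation set. The sketch below computes mean pairwise disagreement, one of several diversity measures used for ensembles; the prediction lists are hypothetical.

```python
from itertools import combinations


def disagreement(preds_a, preds_b):
    """Fraction of examples on which two models predict different labels."""
    return sum(a != b for a, b in zip(preds_a, preds_b)) / len(preds_a)


def mean_pairwise_disagreement(all_preds):
    """Average disagreement over every pair of base learners (0 = identical models)."""
    pairs = list(combinations(all_preds, 2))
    return sum(disagreement(a, b) for a, b in pairs) / len(pairs)


# Hypothetical validation-set predictions from three base learners
m1 = [1, 0, 1, 1, 0]
m2 = [1, 0, 1, 1, 0]  # an exact copy of m1 adds no diversity
m3 = [0, 1, 1, 0, 0]
print(disagreement(m1, m2), disagreement(m1, m3))  # → 0.0 0.6
print(mean_pairwise_disagreement([m1, m2, m3]))
```

Disagreement is only useful alongside accuracy: a model that guesses at random disagrees a lot but adds nothing to the ensemble.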

Real-World Applications of Ensemble Learning

Ensemble learning has proven its effectiveness in various real-world applications across different industries. Here are a few examples:

- Stock Market Prediction: Ensemble models have been used to predict stock market trends by combining predictions from multiple models and incorporating various financial indicators.

- Medical Diagnosis: Ensemble learning techniques help improve the accuracy of medical diagnosis by combining models built on multiple diagnostic tests, much as a panel of doctors pools its opinions.

- Image Classification: Ensemble models have been successfully applied to image classification tasks, where different models focus on different image features and combine their predictions for accurate results.

- Customer Churn Prediction: Ensemble learning has been employed in the telecommunications industry to predict customer churn by combining predictions from different models trained on customer behavior data.

- Natural Language Processing: Ensemble models have been utilized for tasks like sentiment analysis, machine translation, and named entity recognition, where multiple models provide different perspectives on the text data.

Challenges and Limitations of Ensemble Learning

While ensemble learning offers numerous benefits and has proven to be a successful approach, it also comes with its own set of challenges and limitations:

- Computational Complexity: Ensemble models can be computationally expensive and require significant computing resources, especially when dealing with large datasets or complex models.

- Training Time: The training time for ensemble models can be longer compared to individual models, as it involves training multiple base learners and potentially performing hyperparameter tuning.

- Model Interpretability: Ensemble models can be more challenging to interpret compared to individual models, as they incorporate multiple perspectives and decision-making processes.

- Correlated Errors: Ensembles gain the most when the base learners’ errors are weakly correlated. If the base learners are trained on very similar data or share the same biases, they tend to make the same mistakes, and the ensemble may perform little better than a single model.

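- Overfitting: While ensemble learning helps reduce overfitting in most cases, ensembles can still overfit, for example when the base learners are too complex, when boosting runs for too many rounds on noisy data, or when a stacking meta-learner is trained on in-sample predictions.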
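The correlated-errors limitation can be made concrete. If each of n models has error variance σ² and every pair of errors has correlation ρ, the variance of the averaged error is ρσ² + (1 - ρ)σ²/n. The sketch below evaluates that formula and checks it with a seeded simulation that builds correlated Gaussian errors from a shared component; all numbers are illustrative.

```python
import math
import random


def averaged_error_variance(sigma2, rho, n):
    """Variance of the mean of n errors, each with variance sigma2 and
    pairwise correlation rho (equicorrelated case)."""
    return rho * sigma2 + (1 - rho) * sigma2 / n


def simulate(rho, n, trials=20000, seed=0):
    """Monte Carlo estimate of the same variance with sigma2 = 1."""
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        shared = rng.gauss(0, 1)  # error component common to all models
        errs = [math.sqrt(rho) * shared + math.sqrt(1 - rho) * rng.gauss(0, 1) for _ in range(n)]
        means.append(sum(errs) / n)
    mu = sum(means) / trials
    return sum((m - mu) ** 2 for m in means) / (trials - 1)


for rho in (0.0, 0.5, 0.9):
    print(rho, averaged_error_variance(1.0, rho, 10), round(simulate(rho, 10), 3))
```

With ten models, uncorrelated errors shrink the variance tenfold (to 0.1), but at ρ = 0.5 it stays at 0.55, and no number of extra models pushes it below ρσ². Making the members more diverse is what lowers ρ.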

Ensuring Success with Ensemble Learning

Ensemble learning is a powerful technique that can significantly enhance the performance and accuracy of machine learning models. By combining the strengths of multiple base learners, ensemble models can overcome the limitations of individual models and provide more robust predictions. It is important to carefully select the appropriate ensemble technique, ensure diversity in the base learners, and monitor the performance to achieve the desired outcomes. With the right implementation and thoughtful experimentation, ensemble learning can unlock new possibilities and deliver superior results in various domains.

Key Takeaways: What’s the scoop on ensemble learning?

- Ensemble learning is a technique used in machine learning where multiple models are combined to make more accurate predictions.

- It works by training several individual models, called base models, and then combining their predictions using various methods.

- The idea behind ensemble learning is that the collective wisdom of multiple models can outperform a single model.

- Some popular ensemble learning methods include bagging, boosting, and stacking.

- Ensemble learning is used in various applications, such as in fraud detection, recommendation systems, and medical diagnosis.

Frequently Asked Questions

Are you curious to know more about ensemble learning? Look no further! Here are some commonly asked questions and answers that will give you the scoop on this intriguing approach to machine learning.

1. What is ensemble learning?

Ensemble learning is a technique that combines the results of multiple machine learning models to make more accurate predictions. Instead of relying on a single model, ensemble learning leverages the collective wisdom of multiple models to improve overall performance. Each model in the ensemble is typically trained on a different sample or weighting of the data, or uses a different algorithm, which increases diversity and reduces the risk of overfitting.

Ensemble learning can take different forms, such as bagging, boosting, or stacking. Bagging involves training multiple models independently and aggregating their predictions, while boosting focuses on iteratively improving the performance of weak models. Stacking combines the predictions of multiple models by training a meta-model on their outputs.

2. What are the advantages of ensemble learning?

Ensemble learning offers several advantages over using a single model. First, it tends to produce more accurate predictions by combining the strengths of different models. While individual models may have limitations or biases, ensemble learning can minimize their impact by considering multiple perspectives. Additionally, averaging-based ensembles such as bagging and Random Forest tend to be more resilient to noise and outliers in the data, making them useful in challenging or uncertain environments; boosting methods such as AdaBoost, by contrast, can be sensitive to mislabeled examples because they keep increasing those examples’ weights.

Another advantage of ensemble learning is its versatility. It can be applied to a wide range of machine learning tasks, including classification, regression, and anomaly detection. Ensemble learning can also handle large and complex datasets effectively. By dividing the data into subsets or training different models with different algorithms, ensemble learning can handle high-dimensional data and capture a wider range of patterns.

3. How do ensemble learning models make predictions?

Ensemble learning models make predictions by aggregating the individual predictions of multiple models in the ensemble. There are different methods for combining these predictions, depending on the type of ensemble learning used. In bagging, the predictions are typically combined through averaging or majority voting. Each model’s prediction is given equal weight, and the final prediction is determined by the majority or average of the individual predictions.

In boosting, the models are combined using a weighted vote or weighted sum, where each model’s contribution is weighted based on its performance. During training, the instances that earlier models got wrong are given more weight (or, in gradient boosting, become the residuals the next model is fit to), allowing the ensemble to focus on the harder-to-predict instances. Stacking, on the other hand, involves training a meta-model to learn how to combine the predictions of the individual models effectively.

4. What are some popular ensemble learning algorithms?

There are various ensemble learning algorithms that have proven effective in different scenarios. Random Forest is a widely used algorithm that combines bagging with random feature selection to train an ensemble of decision trees. AdaBoost is a boosting algorithm that focuses on instances that are difficult to classify correctly. Gradient Boosting Machines (GBM) and XGBoost are other boosting algorithms known for their strong performance and their ability to handle complex datasets.

Another popular ensemble learning algorithm is the Voting Classifier, which combines the predictions of multiple models by majority voting or weighted averaging. It can handle different types of models and is particularly useful when the individual models specialize in different aspects of the data or when dealing with heterogeneous datasets.

5. How do you train an ensemble learning model?

Training an ensemble learning model involves several steps. First, you need to select the appropriate ensemble learning algorithm based on the characteristics of your data and the problem you are attempting to solve. Once you have chosen the algorithm, you need to prepare the data by splitting it into training and testing sets.

Next, you train each model in the ensemble on a subset of the training data or using a different algorithm. After training the individual models, you combine their predictions using aggregation methods such as averaging or voting. Finally, you evaluate the ensemble on the testing set to assess its accuracy. If you need to make adjustments, such as tuning hyperparameters, base them on a separate validation set or on cross-validation so that the test set still gives an unbiased estimate.

Summary

Ensemble learning is when multiple models work together to make better predictions. Instead of relying on just one model, ensemble learning combines the strengths of different models to improve accuracy. This can help us make more accurate predictions in areas like weather forecasting or diagnosing diseases.

Ensemble learning uses techniques like bagging and boosting to build its team of models. Bagging helps reduce overfitting by training models on different random samples of the data, while boosting improves performance by giving more attention to the misclassified data points. By combining the predictions of these models, ensemble learning can produce more reliable and accurate results than using just one model. So, next time you hear about ensemble learning, remember that it’s like getting a group of friends together to solve a problem and make better predictions!