Have you ever wondered what’s the secret behind Support Vector Machines? Don’t worry, I’ve got you covered! Support Vector Machines, or SVMs for short, are like superheroes of the machine learning world. They can classify data with impressive accuracy, especially on small and medium-sized datasets. So, let’s dive in and uncover the secrets behind this remarkable algorithm!

Imagine you have a bunch of data points, and your task is to decide which category each point belongs to. That’s where Support Vector Machines come to the rescue! These powerful algorithms use a clever approach to draw a line (or a hyperplane, as the experts call it) that separates different classes of data points. But wait, there’s more to it than just drawing a line!

Support Vector Machines are not just about drawing lines; they’re actually masters at finding the best line, the one that maximizes the margin between different classes. In other words, SVMs look for the line that leaves the widest possible gap between the classes, which tends to help them classify new, unseen data correctly. Pretty cool, right?

So, how do SVMs really work their magic? When the classes can’t be separated by a straight line, SVMs use a kernel function to work as if the data points had been mapped into a higher-dimensional space, where they become easier to tell apart. This way, SVMs can find a hyperplane that separates the classes effectively in that space. Whether it’s classifying emails as spam or non-spam, recognizing handwritten digits, or diagnosing diseases, Support Vector Machines can handle a wide range of problems, often with excellent accuracy.

Now that you know a little bit about what makes Support Vector Machines such powerful tools, let’s take a closer look at how they turn complex data into awesome classification models. Get ready to unlock the secrets behind SVMs and discover the fascinating world of machine learning!

Contents

- The Secret Behind Support Vector Machines: Unveiling the Power of Machine Learning

- Understanding the Foundations: What Are Support Vector Machines?

- Key Takeaways: What’s the Secret behind Support Vector Machines?

- Frequently Asked Questions

- How does a Support Vector Machine classify data?

- What is the “kernel trick” in Support Vector Machines?

- Are Support Vector Machines suitable for large datasets?

- Do Support Vector Machines have any disadvantages?

- Can Support Vector Machines handle imbalanced datasets?

- Summary

The Secret Behind Support Vector Machines: Unveiling the Power of Machine Learning

Machine learning has become a hot topic in the world of technology, with Support Vector Machines (SVMs) being one of the most popular algorithms used in this field. But what really makes SVMs stand out from the rest? What’s the secret behind their success? In this article, we’ll dive deep into the inner workings of SVMs and explore the key factors that contribute to their effectiveness. So, put on your AI goggles and let’s explore the fascinating world of Support Vector Machines.

Understanding the Foundations: What Are Support Vector Machines?

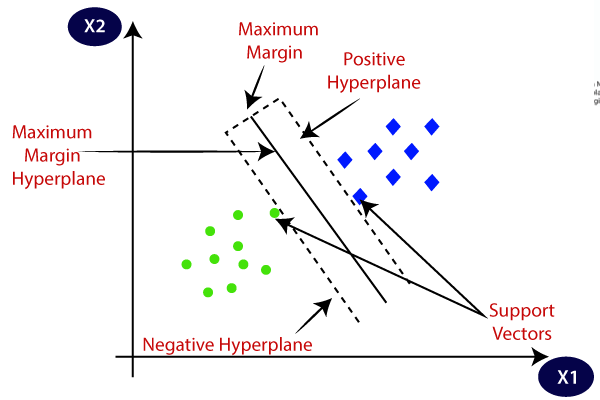

Before we unlock the secret behind SVMs, let’s first gain a solid understanding of what they actually are. Support Vector Machines are supervised machine learning models used for classification and regression problems (the regression variant is usually called support vector regression, or SVR). The main objective of an SVM classifier is to find a hyperplane that separates the data points of different classes in a (possibly high-dimensional) feature space. What sets SVMs apart from many other algorithms is that they choose the hyperplane that maximizes the margin, the distance between the hyperplane and the nearest data points of each class. A wider margin tends to improve the model’s ability to generalize to new data.
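To make the margin concrete, here is a minimal sketch for a linear classifier with weight vector `w` and bias `b`. It assumes the usual "canonical" scaling, in which the closest points satisfy |w·x + b| = 1; then a point’s distance to the hyperplane is |w·x + b| / ‖w‖ and the margin width is 2 / ‖w‖. The hyperplane and points below are made up for illustration, not learned from data.

```python
import math

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def distance_to_hyperplane(w, b, x):
    """Euclidean distance from point x to the hyperplane w.x + b = 0."""
    return abs(dot(w, x) + b) / math.sqrt(dot(w, w))

def margin_width(w):
    """Margin width for a canonical hyperplane (closest points have |w.x + b| = 1)."""
    return 2.0 / math.sqrt(dot(w, w))

# Hypothetical separating hyperplane x1 + x2 - 3 = 0, i.e. w = (1, 1), b = -3.
w, b = [1.0, 1.0], -3.0
points = [([1.0, 1.0], -1), ([2.0, 2.0], +1), ([0.0, 0.0], -1), ([3.0, 3.0], +1)]

for x, label in points:
    score = dot(w, x) + b
    print(x, label, score, round(distance_to_hyperplane(w, b, x), 3))

print("margin width:", round(margin_width(w), 3))
```

Here the two closest points, (1, 1) and (2, 2), each sit about 0.707 from the hyperplane, and the margin width 2/‖w‖ ≈ 1.414 equals the distance between them.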

Under the hood, SVMs can use a mathematical technique called the kernel trick, which lets them behave as if the data had been projected into a higher-dimensional space without ever computing that projection explicitly. This allows SVMs to tackle complex problems that are not linearly separable in the original feature space. Additionally, a trained SVM is relatively memory efficient: its decision function depends only on a subset of the training data, known as support vectors, so the remaining training points can be discarded and the cost of each prediction grows with the number of support vectors rather than with the full training set. Training itself, however, can still be expensive on large datasets. Now that we have a basic understanding of SVMs, let’s unravel the secret behind their exceptional performance.
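The following sketch illustrates why only support vectors matter at prediction time. A kernel SVM scores a point as f(x) = Σ αᵢ yᵢ K(xᵢ, x) + b, and training points with αᵢ = 0 contribute nothing. The dual coefficients `alphas`, the bias, and the choice of an RBF kernel with `gamma=0.5` are all hypothetical values chosen for illustration; in practice they would come from a training solver.

```python
import math

def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def decision_function(x, train_x, train_y, alphas, b, kernel=rbf_kernel):
    """Kernel SVM score: sum_i alpha_i * y_i * K(x_i, x) + b.

    Terms with alpha_i == 0 add nothing, so only support vectors matter.
    """
    return sum(a * y * kernel(xi, x) for xi, y, a in zip(train_x, train_y, alphas)) + b

# Hypothetical dual coefficients: only the two middle points are support vectors.
train_x = [[0.0, 0.0], [1.0, 1.0], [3.0, 3.0], [4.0, 4.0]]
train_y = [-1, -1, +1, +1]
alphas = [0.0, 0.8, 0.8, 0.0]
b = 0.0

# Keep only the support vectors; the score for any query stays the same.
sv = [(x, y, a) for x, y, a in zip(train_x, train_y, alphas) if a > 0]
sv_x, sv_y, sv_a = map(list, zip(*sv))

query = [2.5, 2.5]
full = decision_function(query, train_x, train_y, alphas, b)
reduced = decision_function(query, sv_x, sv_y, sv_a, b)
print(full, reduced, "class:", 1 if full >= 0 else -1)
```

Storing `sv_x`, `sv_y`, and `sv_a` instead of the whole training set is what keeps a trained model compact.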

The Power of Margin: Unleashing the Potential of SVMs

One of the key factors behind the success of Support Vector Machines is their focus on maximizing the margin. The margin represents the gap between the decision boundary, or hyperplane, and the nearest data points of each class. By maximizing this margin, SVMs improve their ability to correctly classify new, unseen data and tend to be more robust to small amounts of noise. The large-margin principle has its roots in statistical learning theory, where generalization bounds suggest that, roughly speaking, classifiers with larger margins (relative to the spread of the data) tend to have lower generalization error.
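For linearly separable data with labels $y_i \in \{-1, +1\}$, the textbook way to write "maximize the margin $2/\lVert w \rVert$" is as the following convex optimization problem, where the constraints require every training point to lie on the correct side of the hyperplane and outside the margin:

```latex
\min_{w,\, b} \; \frac{1}{2}\lVert w \rVert^2
\quad \text{subject to} \quad
y_i \,(w^\top x_i + b) \ge 1, \qquad i = 1, \dots, n
```

Minimizing $\tfrac{1}{2}\lVert w \rVert^2$ instead of maximizing $2/\lVert w \rVert$ yields the same hyperplane but turns the task into a quadratic program, which standard solvers handle well.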

How does maximizing the margin lead to better performance? A larger margin places the decision boundary as far as possible from the training points of both classes, so small shifts in the data, such as noise in new samples, are less likely to push a point across the boundary. In other words, SVMs keep a safe zone between the classes. In the soft-margin formulation used in practice, some training points may fall inside that zone or even on the wrong side, which prevents a few overlapping points or outliers from dragging the boundary around. This emphasis on margin helps make SVMs less prone to overfitting, although the kernel and its parameters still need to be chosen carefully.

In addition to their focus on maximizing the margin, SVMs offer great flexibility in handling different types of data. They can handle both linearly separable and non-linearly separable data through the use of different kernel functions. A kernel function measures the similarity between two data points as if they had been mapped into a higher-dimensional space, where it becomes easier to find a separating hyperplane. By using kernels such as the polynomial, radial basis function (RBF), or sigmoid kernel, SVMs can capture complex, non-linear relationships between features.
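Here is a minimal sketch of the four kernels mentioned above, written for plain Python lists. The parameter names `degree`, `gamma`, and `coef0` follow the naming used by scikit-learn, but the default values here are arbitrary choices for illustration, not any library’s defaults.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def linear_kernel(x, z):
    """K(x, z) = x . z"""
    return dot(x, z)

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    """K(x, z) = (gamma * x . z + coef0) ** degree"""
    return (gamma * dot(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=1.0):
    """K(x, z) = exp(-gamma * ||x - z||^2), also called the Gaussian kernel."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def sigmoid_kernel(x, z, gamma=1.0, coef0=0.0):
    """K(x, z) = tanh(gamma * x . z + coef0); not a valid (PSD) kernel for every parameter choice."""
    return math.tanh(gamma * dot(x, z) + coef0)

x, z = [1.0, 2.0], [2.0, 0.5]
for name, k in [("linear", linear_kernel), ("poly", polynomial_kernel),
                ("rbf", rbf_kernel), ("sigmoid", sigmoid_kernel)]:
    print(name, round(k(x, z), 4))
```

For these inputs x·z = 3, so the linear kernel gives 3.0 and the cubic polynomial kernel gives (3 + 1)³ = 64.0. Each kernel encodes a different notion of similarity, which is why the choice of kernel shapes the decision boundary.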

Regularization: Striking the Balance between Complexity and Simplicity

Another aspect that contributes to the success of Support Vector Machines is their ability to strike the right balance between complexity and simplicity, thanks to the regularization parameter, C. The regularization parameter controls the trade-off between maximizing the margin and allowing misclassifications. A high value of C puts more emphasis on classifying the training data correctly, potentially leading to a smaller margin. On the other hand, a low value of C prioritizes a larger margin, even if it means some training points are misclassified.
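To see the trade-off controlled by C, this sketch scores two hand-picked 1-D hyperplanes with the standard soft-margin objective ½‖w‖² + C Σ max(0, 1 − yᵢ(w·xᵢ + b)). It does not train an SVM; the data and both candidate boundaries are invented for illustration. One candidate has a wide margin but misclassifies an awkward positive point, while the other has a narrow margin and makes no training errors.

```python
def soft_margin_objective(w, b, xs, ys, C):
    """Soft-margin SVM primal objective for 1-D inputs:
    0.5 * w**2 + C * sum(max(0, 1 - y * (w * x + b)))."""
    hinge = sum(max(0.0, 1.0 - y * (w * x + b)) for x, y in zip(xs, ys))
    return 0.5 * w * w + C * hinge

# Toy 1-D data: the positive point at x = -1 sits close to the negatives.
xs = [-3.0, -2.0, -1.0, 2.0, 3.0]
ys = [-1, -1, +1, +1, +1]

candidates = {
    "wide (w=1, b=0)": (1.0, 0.0),    # margin width 2/|w| = 2, misclassifies x = -1
    "narrow (w=2, b=3)": (2.0, 3.0),  # margin width 2/|w| = 1, no training errors
}

for C in (0.1, 10.0):
    scores = {name: soft_margin_objective(w, b, xs, ys, C) for name, (w, b) in candidates.items()}
    best = min(scores, key=scores.get)
    print(f"C={C}: prefers {best}")
```

With C = 0.1 the wide-margin boundary has the lower objective (0.7 versus 2.0), while with C = 10 the narrow boundary wins because each training error now costs much more.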

Why is finding the right balance important? SVMs aim to generalize well to unseen data, so their performance on the training set should be a reasonable approximation of their performance on new data. If the model is too complex, for example a very high C combined with a flexible kernel, the boundary bends to fit individual training points, the margin shrinks, and the model may overfit and perform poorly on new instances. Conversely, if the model is too simple, with a very wide margin that tolerates many training errors, it may underfit and fail to capture the underlying patterns. In practice, C (together with any kernel parameters) is usually tuned with cross-validation to strike a good balance between complexity and simplicity, which leads to better generalization and improved performance.

In conclusion, the secret behind the success of Support Vector Machines lies in their ability to maximize the margin, tolerate some noise through the soft margin, and strike a balance between complexity and simplicity. These properties make SVMs a strong choice for many classification problems, particularly on small to medium-sized datasets with many features. Whether you’re a data scientist or a curious tech enthusiast, understanding SVMs and their secrets can pave the way for innovative solutions in the world of AI.

Key Takeaways: What’s the Secret behind Support Vector Machines?

- Support Vector Machines (SVMs) are powerful machine learning algorithms used for classification and regression tasks.

- The secret behind SVMs lies in finding the decision boundary that separates different classes of data with the largest possible margin.

- SVMs use a mathematical technique called the kernel trick to work implicitly in a higher-dimensional space, where a linear decision boundary can often be found even when none exists in the original space.

- By balancing a wide margin against training errors, SVMs tend to generalize well to new, unseen data.

- Their ability to handle complex datasets and their robustness make SVMs one of the most popular algorithms in the field of machine learning.

Frequently Asked Questions

Support Vector Machines (SVMs) are a powerful algorithm used in machine learning for classification and regression analysis. In this section, we will explore some common questions about the secret behind Support Vector Machines and how they work.

How does a Support Vector Machine classify data?

A Support Vector Machine (SVM) classifies data by finding the decision boundary that separates different classes with the largest margin. For data that isn’t linearly separable, it uses a kernel function to work implicitly in a higher-dimensional space. In that space, the SVM constructs the hyperplane that maximizes the margin between the data points of different classes. The training points that lie on the margin or inside it are called support vectors. A new point is then classified according to which side of the hyperplane it falls on.

The decision boundary is determined entirely by the support vectors, and the SVM algorithm looks for the hyperplane with the largest margin because it is more likely to generalize well to unseen data. Combined with kernels, this makes SVMs a good choice for both linearly and non-linearly separable datasets.
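Here is a small sketch of both ideas for a linear SVM: flagging the training points that sit on or inside the margin, that is, those with yᵢ(w·xᵢ + b) ≤ 1, and classifying a new point by its side of the hyperplane. The parameters `w` and `b` are hypothetical rather than fitted. In a trained soft-margin SVM, every point strictly inside the margin is a support vector, while points exactly on the margin usually are, so this check gives the candidate set.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def find_support_vector_candidates(w, b, X, y, tol=1e-9):
    """Indices of points on or inside the margin: y_i * (w . x_i + b) <= 1."""
    return [i for i, (x, label) in enumerate(zip(X, y))
            if label * (dot(w, x) + b) <= 1 + tol]

def predict(w, b, x):
    """Classify x by which side of the hyperplane w . x + b = 0 it falls on."""
    return 1 if dot(w, x) + b >= 0 else -1

# Hypothetical trained parameters (not fitted here): hyperplane x1 + x2 - 3 = 0.
w, b = [1.0, 1.0], -3.0
X = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [-1, -1, +1, +1]

print(find_support_vector_candidates(w, b, X, y))  # [1, 2]
print(predict(w, b, [2.5, 0.0]))                   # -1
```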

What is the “kernel trick” in Support Vector Machines?

The “kernel trick” is a concept used in Support Vector Machines (SVMs) to handle non-linearly separable data. The idea is to map the input data into a higher-dimensional space where it is more likely to become linearly separable, but without ever computing that mapping directly. Instead, a kernel function is applied to pairs of points in the original feature space.

A kernel function returns the dot product of two data points in the transformed space, computed directly from their original features. Because SVM training and prediction only need these dot products, SVMs can learn complex, non-linear decision boundaries without explicitly computing the mapping function. Some commonly used kernel functions include the linear kernel, the polynomial kernel, and the Gaussian (RBF) kernel.
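A classic small example shows the trick at work. For 2-D inputs, the degree-2 polynomial kernel K(x, z) = (x·z)² equals the ordinary dot product of the explicit 3-D feature map φ(x) = (x₁², √2·x₁x₂, x₂²). The sketch below computes the value both ways. The function names `phi` and `poly2_kernel` are illustrative and not taken from any library.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def phi(x):
    """Explicit feature map for 2-D input whose inner product equals (x . z)^2."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]

def poly2_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel, computed in the original 2-D space."""
    return dot(x, z) ** 2

x, z = [1.0, 2.0], [3.0, 4.0]
explicit = dot(phi(x), phi(z))   # map to 3-D first, then take the inner product
implicit = poly2_kernel(x, z)    # never leaves 2-D
print(explicit, implicit)
```

Both values are 121 (up to floating-point rounding in the explicit version). For higher degrees and more features, the explicit map grows combinatorially, while the kernel still costs a single dot product.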

Are Support Vector Machines suitable for large datasets?

Kernel Support Vector Machines (SVMs) can be computationally expensive on large datasets. Training time typically grows at least quadratically with the number of training samples, and the kernel matrix, which holds one value for every pair of training samples, grows quadratically in memory. The number of support vectors also often grows with the dataset size, which slows down prediction.
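A quick back-of-the-envelope calculation shows why the kernel matrix becomes a problem. This sketch assumes a dense n × n matrix of 8-byte float64 values. Practical solvers such as LIBSVM cache kernel rows instead of storing the whole matrix, so the figures describe naive precomputation, not what every solver allocates.

```python
def kernel_matrix_gib(n_samples, bytes_per_entry=8):
    """Memory for a dense n x n kernel (Gram) matrix of float64 values, in GiB."""
    return n_samples * n_samples * bytes_per_entry / 2**30

for n in (10_000, 100_000, 1_000_000):
    print(f"{n:>9,} samples -> {kernel_matrix_gib(n):,.1f} GiB")
```

Going from 10,000 to 100,000 samples multiplies the memory by 100, from under 1 GiB to roughly 75 GiB.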

However, there are several techniques to mitigate these challenges. For linear SVMs, stochastic gradient descent (SGD) optimizes the hinge-loss objective using one sample or a small subset of the training data at each iteration, so training scales to very large datasets. For kernel SVMs, kernel approximation methods such as the Nyström method or random Fourier features replace the exact kernel with an explicit low-dimensional feature map, after which a fast linear SVM can be trained. These techniques reduce memory and computational requirements and make SVMs more feasible for large datasets.
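As a concrete instance of the SGD approach, here is a minimal sketch in the style of the Pegasos algorithm for a linear SVM. It minimizes λ/2·‖w‖² plus the average hinge loss, one sample at a time, with step size 1/(λt). To keep the sketch short, it omits the bias term and Pegasos’ optional projection step, and it assumes the classes can be separated by a hyperplane through the origin. The toy data and `lam=0.01` are arbitrary. For production use, scikit-learn’s `SGDClassifier(loss="hinge")` trains a linear SVM in a similar way.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def pegasos_train(X, y, lam=0.01, epochs=20, seed=0):
    """Pegasos-style SGD for a linear SVM without a bias term.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w.x_i)) one sample at a time.
    """
    rng = random.Random(seed)
    data = list(zip(X, y))
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)                     # decreasing step size
            violated = label * dot(w, x) < 1.0        # is the hinge loss active at the current w?
            w = [(1.0 - eta * lam) * wi for wi in w]  # gradient step for the L2 penalty
            if violated:                              # gradient step for the hinge loss
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w

def predict(w, x):
    return 1 if dot(w, x) >= 0 else -1

X = [[-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0], [2.0, 2.0], [3.0, 1.0], [1.0, 3.0]]
y = [-1, -1, -1, +1, +1, +1]
w = pegasos_train(X, y)
print(w, [predict(w, x) for x in X])
```

Each update touches a single sample, so the cost per step does not depend on the dataset size. This is what lets linear SVMs scale to very large datasets.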

Do Support Vector Machines have any disadvantages?

While Support Vector Machines (SVMs) are powerful algorithms, they do have a few limitations. One drawback is that SVMs can be sensitive to the choice of hyperparameters, such as the kernel type, the regularization parameter C, and the kernel bandwidth (for example, gamma in the RBF kernel). Selecting these hyperparameters, usually via cross-validation, can significantly affect the performance of the SVM model.

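Another limitation is that SVMs are not inherently probabilistic. Unlike logistic regression, for example, an SVM outputs a decision score rather than the probability that a data point belongs to a particular class. To obtain probabilities, the scores have to be calibrated afterwards, for example with Platt scaling (fitting a sigmoid to the scores, typically on held-out data obtained through cross-validation) or isotonic regression.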
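Here is a simplified sketch of Platt scaling. It fits P(y = 1 | f) = 1 / (1 + exp(A·f + B)) to SVM decision values f by plain gradient descent on the log loss. Platt’s original method also smooths the 0/1 targets and uses a Newton-type solver. The decision values below are hypothetical held-out scores, and the learning rate and iteration count are arbitrary choices.

```python
import math

def fit_platt(scores, labels, lr=0.5, iters=2000):
    """Fit P(y=1 | f) = 1 / (1 + exp(A*f + B)) by gradient descent on log loss.

    scores: SVM decision values, ideally from held-out data.
    labels: 1 for the positive class, 0 for the negative class.
    """
    A, B = 0.0, 0.0
    n = len(scores)
    for _ in range(iters):
        grad_a = grad_b = 0.0
        for f, t in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(A * f + B))
            grad_a += (t - p) * f   # derivative of the log loss w.r.t. A for this sample
            grad_b += (t - p)       # derivative of the log loss w.r.t. B for this sample
        A -= lr * grad_a / n
        B -= lr * grad_b / n
    return A, B

def platt_probability(f, A, B):
    return 1.0 / (1.0 + math.exp(A * f + B))

# Hypothetical held-out decision values, with some overlap between the classes.
scores = [-2.0, -1.0, -0.5, 0.3, -0.2, 0.5, 1.0, 2.0]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
A, B = fit_platt(scores, labels)
print(A, B, [round(platt_probability(f, A, B), 2) for f in (-2.0, 0.0, 2.0)])
```

The fitted A comes out negative, so the predicted probability rises as the SVM score increases. The sigmoid changes the scale of the scores but never their ranking.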

Can Support Vector Machines handle imbalanced datasets?

Yes, Support Vector Machines (SVMs) can handle imbalanced datasets, although a plain SVM trained on them tends to favor the majority class. Imbalanced datasets are those where one class has significantly more instances than the other. SVMs offer a way to deal with this through class weights, which scale the misclassification penalty C separately for each class. When the minority class gets a higher weight, errors on minority examples cost more, so the SVM no longer sacrifices them simply to reduce errors on the majority class.
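This sketch shows how class weights change the penalty. It computes "balanced" weights with the heuristic weight_c = n_samples / (n_classes · count_c), the same formula scikit-learn uses for `class_weight="balanced"`. It then scales the hinge loss of each point by C times its class weight. The 90/10 label split and the helper names are hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """weight_c = n_samples / (n_classes * count_c)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_hinge_loss(w, b, X, y, C, class_weight):
    """Soft-margin penalty where each class effectively gets its own C = C * weight."""
    total = 0.0
    for x, label in zip(X, y):
        score = sum(wi * xi for wi, xi in zip(w, x)) + b
        total += C * class_weight[label] * max(0.0, 1.0 - label * score)
    return total

y = [-1] * 90 + [+1] * 10
weights = balanced_class_weights(y)
print(weights)  # -1 -> about 0.556, +1 -> 5.0

# The same violation (score 0) on one point from each class:
print(weighted_hinge_loss([0.0], 0.0, [[1.0]], [+1], 1.0, weights))  # 5.0
print(weighted_hinge_loss([0.0], 0.0, [[1.0]], [-1], 1.0, weights))  # about 0.556
```

With these weights, a mistake on a minority example costs nine times as much as the same mistake on a majority example, which mirrors the 90:10 class ratio.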

Additionally, techniques like oversampling the minority class or undersampling the majority class can be used in conjunction with SVMs to further address the class imbalance problem. These techniques help improve the performance of SVMs on imbalanced datasets.
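Random oversampling is the simplest of these techniques. The sketch below duplicates randomly chosen samples from each smaller class until every class matches the largest one. The helper name and toy data are hypothetical. Resampling should only be applied to the training split, so that duplicated points never leak into the data used for evaluation.

```python
import random

def random_oversample(X, y, seed=0):
    """Duplicate random samples of smaller classes until all classes match the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for x, label in zip(X, y):
        by_class.setdefault(label, []).append(x)
    target = max(len(v) for v in by_class.values())
    X_out, y_out = [], []
    for label, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]  # sampled with replacement
        for x in xs + extra:
            X_out.append(x)
            y_out.append(label)
    return X_out, y_out

X = [[float(i)] for i in range(12)]
y = [-1] * 9 + [+1] * 3
X_bal, y_bal = random_oversample(X, y)
print(y_bal.count(-1), y_bal.count(+1))  # 9 9
```

Undersampling works the other way round, keeping a random subset of the majority class. It is cheaper to train on but discards data.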

Summary

Support Vector Machines (SVMs) are a type of algorithm used in machine learning. They work by finding a line, plane, or hyperplane that separates different groups of data points with the widest possible margin. SVMs are powerful because, with the help of kernels, they can handle complex, non-linear data and still make accurate predictions. They are commonly used in tasks like image recognition and text classification.

SVMs have some key advantages. They work well on small and medium-sized datasets, including those with many features, and they can be scaled to large datasets with linear variants or kernel approximations. They also have good generalization abilities, which means they can make accurate predictions on new, unseen data. SVMs are versatile too, because they can use different kernel functions to find the best separating boundary.

To use SVMs effectively, it’s important to choose the right hyperparameters (such as C and the kernel parameters) and features. This usually involves searching for good values with cross-validation to fine-tune the model’s performance. Overall, SVMs are a valuable tool in machine learning because they offer a powerful and flexible approach to solving complex problems.