Sponsored Content

Google Cloud

Contents

Introduction

Enterprises manage a mix of structured data in organized tables and a growing volume of unstructured data like images, audio, and documents. Analyzing these diverse data types together is traditionally complex, as they often require separate tools. Unstructured media typically requires exports to specialized services for processing (e.g. a computer vision service for image analysis, or a speech-to-text engine for audio), which creates data silos and hinders a holistic analytical view.

Consider a fictional e-commerce support system: structured ticket details live in a BigQuery table, while corresponding support call recordings or photos of damaged products reside in cloud object stores. Without a direct link, answering a context-rich question like “identify all support tickets for a specific laptop model where call audio indicates high customer frustration and the photo shows a cracked screen“ is a cumbersome, multi-step process.

This article is a practical, technical guide to ObjectRef in BigQuery, a feature designed to unify this analysis. We will explore how to build, query, and govern multimodal datasets, enabling comprehensive insights using familiar SQL and Python interfaces.

Part 1: ObjectRef – The Key to Unifying Multimodal Data

ObjectRef Structure and Function

To address the challenge of siloed data, BigQuery introduces ObjectRef, a specialized STRUCT data type. An ObjectRef acts as a direct reference to an unstructured data object stored in Google Cloud Storage (GCS). It does not contain the unstructured data itself (e.g. a base64 encoded image in a database, or a transcribed audio); instead, it points to the location of that data, allowing BigQuery to access and incorporate it into queries for analysis.

The ObjectRef STRUCT is composed of several key fields:

- uri (STRING): a GCS path to an object

- authorizer (STRING): allows BigQuery to securely access GCS objects

- version (STRING): stores the specific Generation ID of a GCS object, locking the reference to a precise version for reproducible analysis

- details (JSON): a JSON element that often contains GCS metadata like

contentTypeorsize

Here is a JSON representation of an ObjectRef value:

JSON

{

"uri": "gs://cymbal-support/calls/ticket-83729.mp3",

"version": 1742790939895861,

"authorizer": "my-project.us-central1.conn",

"details": {

"gcs_metadata": {

"content_type": "audio/mp3",

"md5_hash": "a1b2c3d5g5f67890a1b2c3d4e5e47890",

"size": 5120000,

"updated": 1742790939903000

}

}

}

By encapsulating this information, an ObjectRef provides BigQuery with all the necessary details to locate, securely access, and understand the basic properties of an unstructured file in GCS. This forms the foundation for building multimodal tables and dataframes, allowing structured data to live side-by-side with references to unstructured content.

Create Multimodal Tables

A multimodal table is a standard BigQuery table that includes one or more ObjectRef columns. This section covers how to create these tables and populate them with SQL.

You can define ObjectRef columns when creating a new table or add them to existing tables. This flexibility allows you to adapt your current data models to take advantage of multimodal capabilities.

Creating an ObjectRef Column with Object Tables

If you have many files stored in a GCS bucket, an object table is an efficient way to generate ObjectRefs. An object table is a read-only table that displays the contents of a GCS directory and automatically includes a column named ref, of type ObjectRef.

SQL

CREATE EXTERNAL TABLE `project_id.dataset_id.my_table`

WITH CONNECTION `project_id.region.connection_id`

OPTIONS(

object_metadata="SIMPLE",

uris = ['gs://bucket-name/path/*.jpg']

);

The output is a new table containing a ref column. You can use the ref column with functions like AI.GENERATE or join it to other tables.

Programmatically Constructing ObjectRefs

For more dynamic workflows, you can create ObjectRefs programmatically using the OBJ.MAKE_REF() function. It’s common to wrap this function in OBJ.FETCH_METADATA() to populate the details element with GCS metadata. The following code also works if you replace the gs:// path with a URI field in an existing table.

SQL

SELECT

OBJ.FETCH_METADATA(OBJ.MAKE_REF('gs://my-bucket/path/image.jpg', 'us-central1.conn')) AS customer_image_ref,

OBJ.FETCH_METADATA(OBJ.MAKE_REF('gs://my-bucket/path/call.mp3', 'us-central1.conn')) AS support_call_ref

By using either Object Tables or OBJ.MAKE_REF, you can build and maintain multimodal tables, setting the stage for integrated analytics.

Part 2: Multimodal Tables with SQL

Secure and Governed Access

ObjectRef integrates with BigQuery’s native security features, enabling governance over your multimodal data. Access to underlying GCS objects is not granted to the end-user directly. Instead, it’s delegated through a BigQuery connection resource specified in the ObjectRef’s authorizer field. This model allows for multiple layers of security.

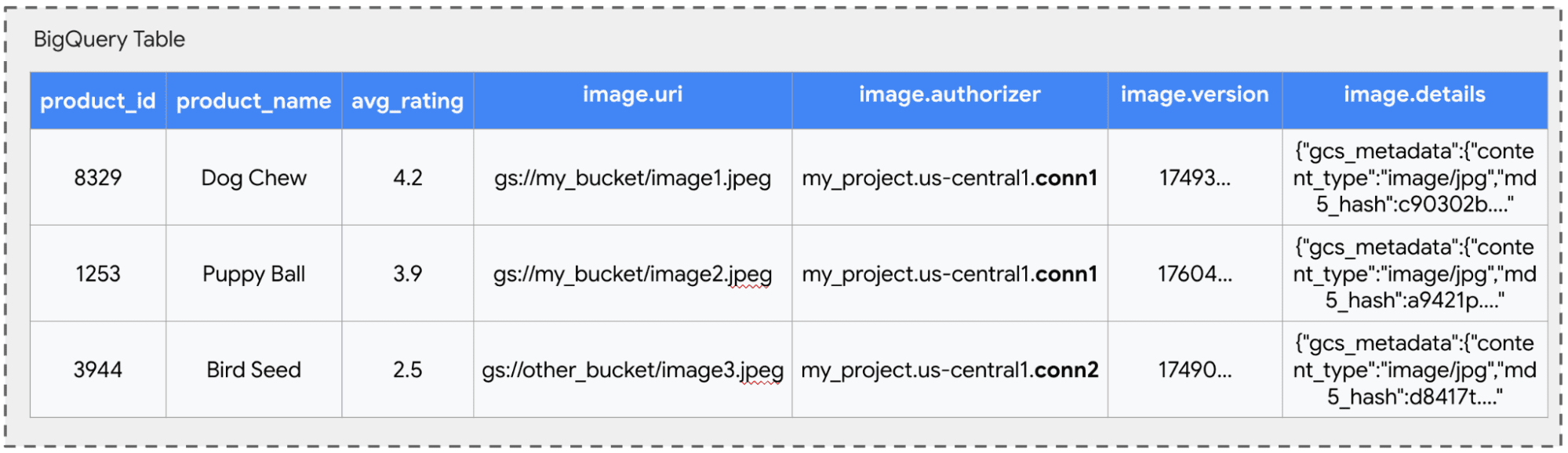

Consider the following multimodal table, which stores information about product images for our e-commerce store. The table includes an ObjectRef column named image.

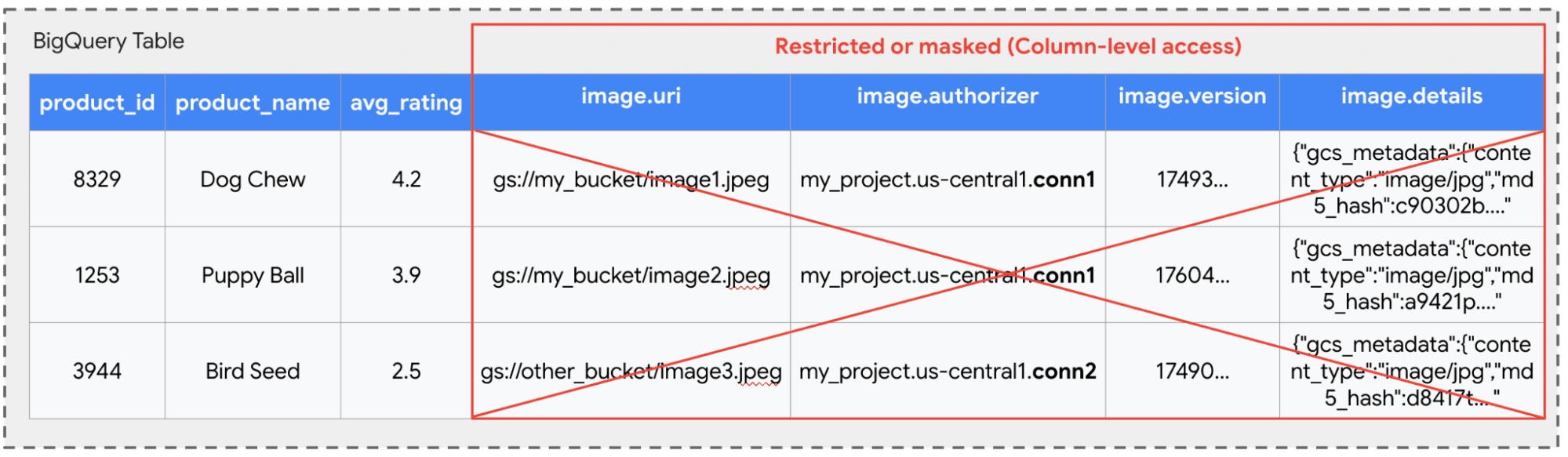

Column-level security: restrict access to entire columns. For a set of users who should only analyze product names and ratings, an administrator can apply column-level security to the image column. This disallows those analysts from selecting the image column while still allowing analysis of other structured fields.

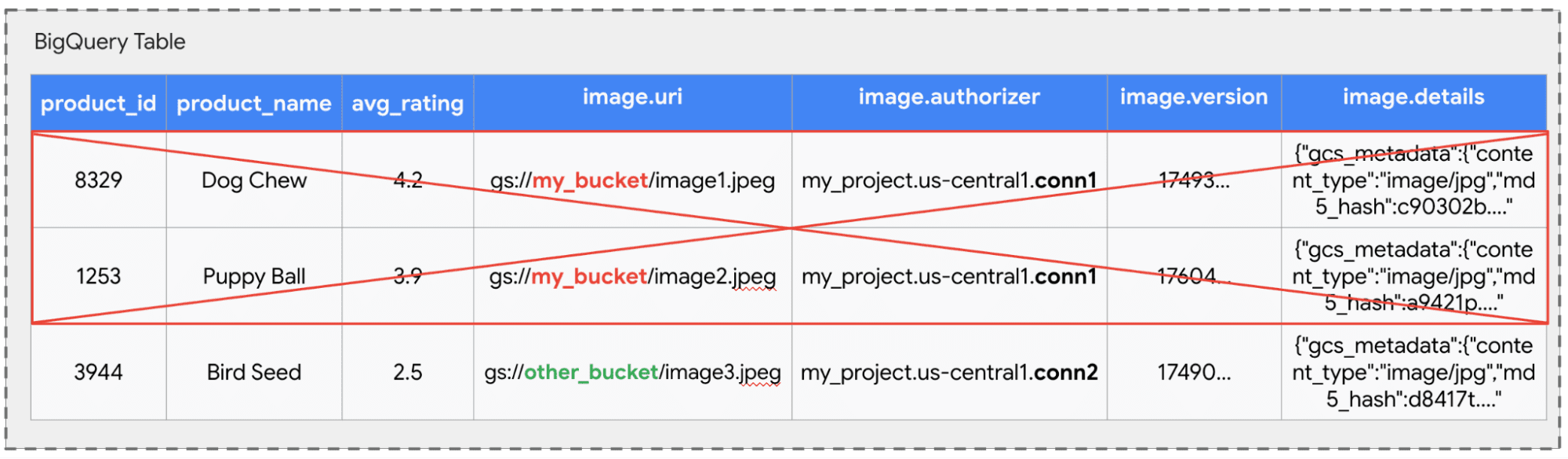

Row-level security: BigQuery allows for filtering which rows a user can see based on defined rules. A row-level policy could restrict access based on a user’s role. For example, a policy might state “Do not allow users to query products related to dogs”, which filters out these rows from query results as if they don’t exist.

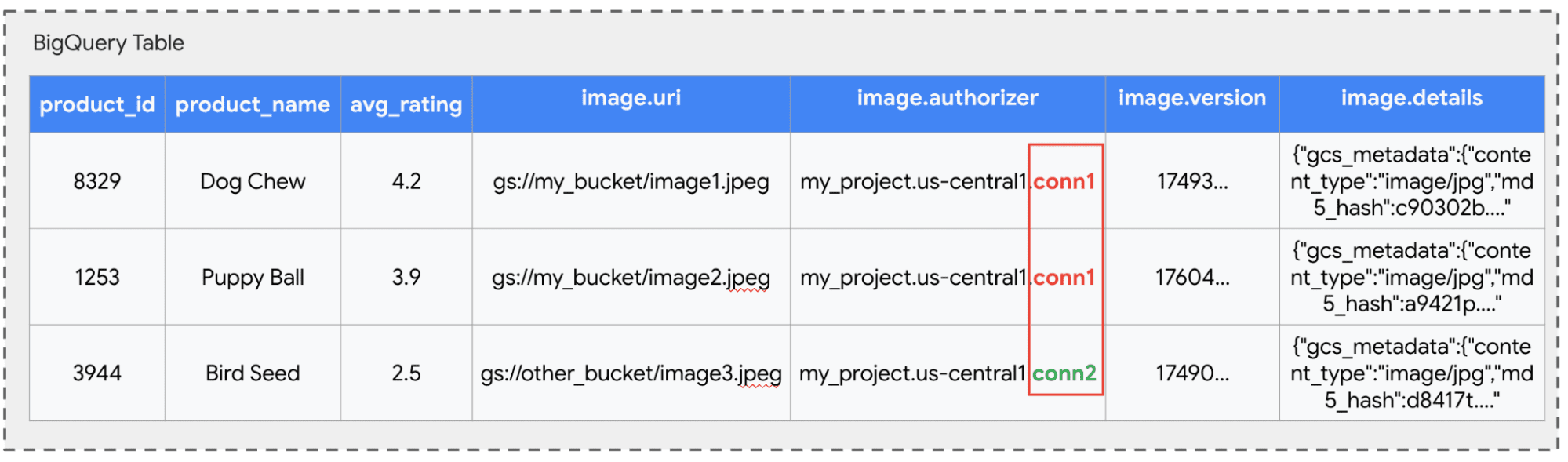

Multiple Authorizers: this table uses two different connections in the image.authorizer element (conn1 and conn2).

This allows an administrator to manage GCS permissions centrally through connections. For instance, conn1 might access a public image bucket, while conn2 accesses a restricted bucket with new product designs. Even if a user can see all rows, their ability to query the underlying file for the “Bird Seed” product depends entirely on whether they have permission to use the more privileged conn2 connection.

AI-Driven Inference with SQL

The AI.GENERATE_TABLE function creates a new, structured table by applying a generative AI model to your multimodal data. This is ideal for data enrichment tasks at scale. Let’s use our e-commerce example to create SEO keywords and a short marketing description for each product, using its name and image as source material.

The following query processes the products table, taking the product_name and image ObjectRef as inputs. It generates a new table containing the original product_id, a list of SEO keywords, and a product description.

SQL

SELECT

product_id,

seo_keywords,

product_description

FROM AI.GENERATE_TABLE(

MODEL `dataset_id.gemini`, (

SELECT (

'For the image of a pet product, generate:'

'1) 5 SEO search keywords and'

'2) A one sentence product description',

product_name, image_ref) AS prompt,

product_id

FROM `dataset_id.products_multimodal_table`

),

STRUCT(

"seo_keywords ARRAY, product_description STRING" AS output_schema

)

);

The result is a new structured table with the columns product_id, seo_keywords, and product_description. This automates a time-consuming marketing task and produces ready-to-use data that can be loaded directly into a content management system or used for further analysis.

Part 3: Multimodal DataFrames with Python

Bridging Python and BigQuery for Multimodal Inference

Python is the language of choice for many data scientists and data analysts. But practitioners commonly run into issues when their data is too large to fit into the memory of a local machine.

BigQuery DataFrames provides a solution. It offers a pandas-like API to interact with data stored in BigQuery without ever pulling it into local memory. The library translates Python code into SQL that is pushed down and executed on BigQuery’s highly scalable engine. This provides the familiar syntax of a popular Python library combined with the power of BigQuery.

This naturally extends to multimodal analytics. A BigQuery DataFrame can represent both your structured data and references to unstructured files, together in a single multimodal dataframe. This enables you to load, transform, and analyze dataframes containing both your structured metadata and pointers to unstructured files, within a single Python environment.

Create Multimodal DataFrames

Once you have the bigframes library installed, you can begin working with multimodal data. The key concept is the blob column: a special column that holds references to unstructured files in GCS. Think of a blob column as the Python representation of an ObjectRef – it doesn’t hold the file itself, but points to it and provides methods to interact with it.

There are three common ways to create or designate a blob column:

PYTHON

import bigframes

import bigframes.pandas as bpd

# 1. Create blob columns from a GCS location

df = bpd.from_glob_path( "gs://cloud-samples-data/bigquery/tutorials/cymbal-pets/images/*", name="image")

# 2. From an existing object table

df = bpd.read_gbq_object_table("", name="blob_col")

# 3. From a dataframe with a URI field

df["blob_col"] = df["uri"].str.to_blob()

To explain the approaches above:

- A GCS location: Use

from_glob_pathto scan a GCS bucket. Behind the scenes, this operation creates a temporary BigQuery object table, and presents it as a DataFrame with a ready-to-use blob column. - An existing object table: if you already have a BigQuery object table, use the

read_gbq_object_tablefunction to load it. This reads the existing table without needing to re-scan GCS. - An existing dataframe: if you have a BigQuery DataFrame that contains a column of STRING GCS URIs, simply use the

.str.to_blob()method on that column to “upgrade” it to a blob column.

AI-Driven Inference with Python

The primary benefit of creating a multimodal dataframe is to perform AI-driven analysis directly on your unstructured data at scale. BigQuery DataFrames allows you to apply large language models (LLMs) to your data, including any blob columns.

The general workflow involves three steps:

- Create a multimodal dataframe with a blob column pointing to unstructured files

- Load a pre-existing BigQuery ML model into a BigFrames model object

- Call the .predict() method on the model object, passing your multimodal dataframe as input.

Let’s continue with the e-commerce example. We’ll use the gemini-2.5-flash model to generate a brief description for each pet product image.

PYTHON

import bigframes.pandas as bpd

# 1. Create the multimodal dataframe from a GCS location

df = bpd.from_glob_path(

"gs://cloud-samples-data/bigquery/tutorials/cymbal-pets/images/*", name="image_blob")

# Limit to 2 images for simplicity

df = df.head(2)

# 2. Specify a large language model

from bigframes.ml import llm

model = llm.GeminiTextGenerator(model_name="gemini-2.5-flash-preview-05-20")

# 3. Ask the LLM to describe what's in the picture

answer = model.predict(df_image, prompt=["Write a 1 sentence product description for the image.", df_image["image"]])

answer[["ml_generate_text_llm_result", "image"]]

When you call model.predict(df_image), BigQuery DataFrames constructs and executes a SQL query using the ML.GENERATE_TEXT function, automatically passing file references from the blob column and the text prompt as inputs. The BigQuery engine processes this request, sends the data to a Gemini model, and returns the generated text descriptions to a new column in the resulting DataFrame.

This powerful integration allows you to perform multimodal analysis across thousands or millions of files using just a few lines of Python code.

Going Deeper with Multimodal DataFrames

In addition to using LLMs for generation, the bigframes library offers a growing set of tools designed to process and analyze unstructured data. Key capabilities available with the blob column and its related methods include:

- Built-in Transformations: prepare images for modeling with native transformations for common operations like blurring, normalizing, and resizing at scale.

- Embedding Generation: enable semantic search by generating embeddings from multimodal data, using Vertex AI-hosted models to convert data into embeddings in a single function call.

- PDF Chunking: streamline RAG workflows by programmatically splitting document content into smaller, meaningful segments – a common pre-processing step.

These features signal that BigQuery DataFrames is being built as an end-to-end tool for multimodal analytics and AI with Python. As development continues, you can expect to see more tools traditionally found in separate, specialized libraries directly integrated into bigframes.

Conclusion:

Multimodal tables and dataframes represent a shift in how organizations can approach data analytics. By creating a direct, secure link between tabular data and unstructured files in GCS, BigQuery dismantles the data silos that have long complicated multimodal analysis.

This guide demonstrates that whether you’re a data analyst writing SQL, or a data scientist using Python, you now have the ability to elegantly analyze arbitrary multimodal files alongside relational data with ease.

To begin building your own multimodal analytics solutions, explore the following resources:

- Official documentation: read an overview on how to analyze multimodal data in BigQuery

- Python Notebook: get hands-on with a BigQuery DataFrames example notebook

- Step-by-step tutorials:

Author: Jeff Nelson, Developer Relations Engineer