How to Build an AI Journal with LlamaIndex

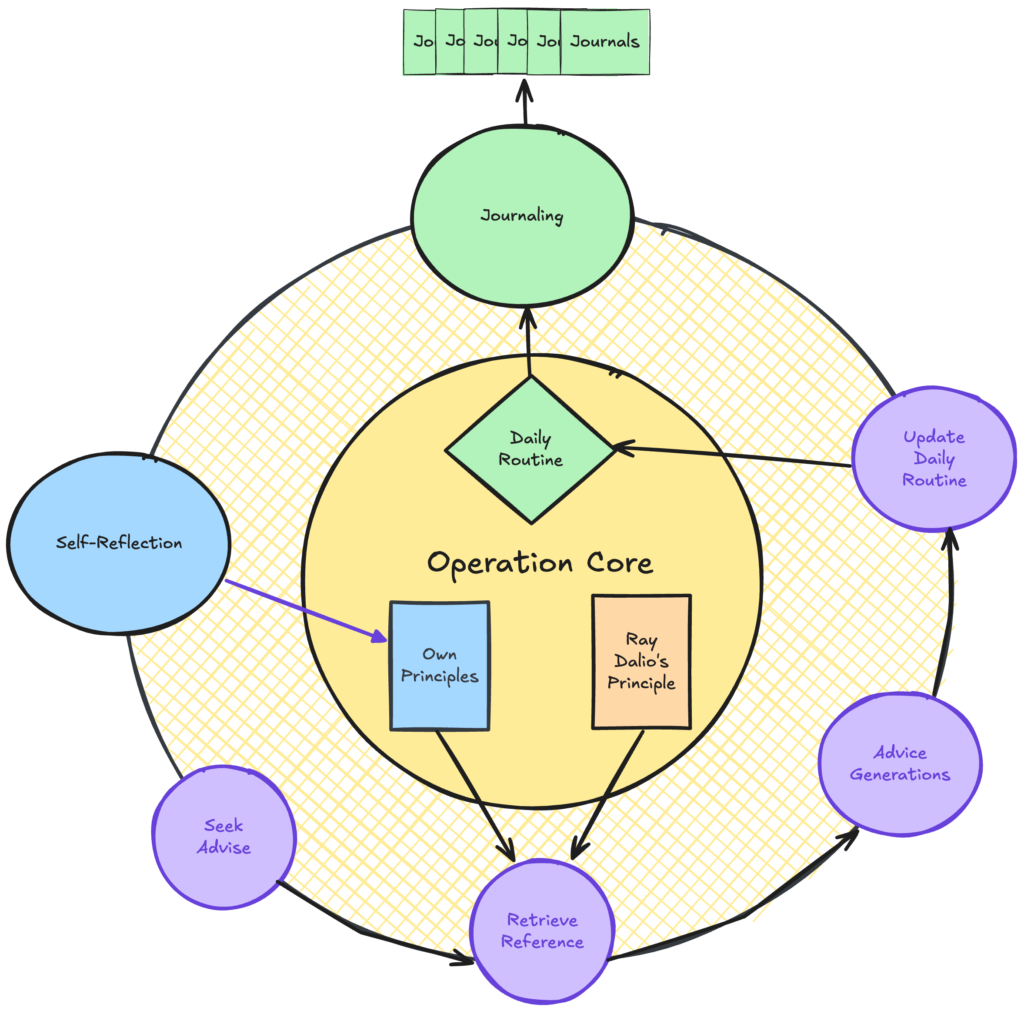

I will share how to build an AI journal with LlamaIndex. We will cover one essential function of this AI journal: asking for advice. We will start with the most basic implementation and iterate from there, and we will see this function improve significantly once we apply design patterns like agentic RAG and multi-agent workflows. …