Is the curse of dimensionality a real thing? Well, let’s dive into the world of data and explore this intriguing concept together. You might be wondering what exactly the curse of dimensionality is and why it matters. Don’t worry, I’ve got you covered!

Imagine you have a bunch of data points that you want to analyze. The curse of dimensionality refers to the challenges that arise when dealing with datasets that have a large number of features or variables. It’s like navigating a maze with too many twists and turns, making it difficult to find meaningful patterns and insights.

But why is this curse such a big deal? Well, as the number of dimensions increases, the amount of data needed to cover the space grows exponentially. It’s like trying to explore a vast universe with limited resources! This can lead to various problems, such as increased computational complexity and the dilution of meaningful patterns in the data.

So, in this article, we’ll unravel the mysteries of the curse of dimensionality, understand its implications, and discover ways to mitigate its effects. Ready to embark on this data-driven adventure? Let’s go!

Contents

- Is the Curse of Dimensionality a Real Thing?

- Understanding the Curse of Dimensionality

- Key Takeaways: Is the Curse of Dimensionality a Real Thing?

- Frequently Asked Questions

  - What is the curse of dimensionality?

  - How does the curse of dimensionality affect machine learning?

  - How does the curse of dimensionality impact data analysis?

  - What are the implications of the curse of dimensionality in optimization?

  - Can the curse of dimensionality be completely eliminated?

- Summary

Is the Curse of Dimensionality a Real Thing?

The curse of dimensionality is a concept in mathematics and statistics that refers to the challenges and limitations that arise when working with high-dimensional data. As the number of dimensions increases, the amount of data required for accurate prediction or analysis grows exponentially. This can lead to issues such as overfitting, increased computational cost, and difficulty in visualizing and interpreting the data. In this article, we will explore the curse of dimensionality in detail and discuss its impact on various fields.

Understanding the Curse of Dimensionality

The curse of dimensionality arises because the volume of a space grows exponentially with its number of dimensions, so the amount of data required to sample it adequately grows exponentially too. For example, if 10 evenly spaced points are enough to cover one feature's range, covering two features at the same resolution takes 100 points, and covering ten features takes 10 billion. For a dataset of fixed size, the available data therefore becomes sparser with every added feature, making it more difficult to generalize patterns and relationships.
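The exponential growth described above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function names `cells_in_grid` and `edge_for_volume_fraction` are made up for this example, and the data space is assumed to be a unit hypercube split into equal bins along each axis.

```python
def cells_in_grid(bins_per_axis: int, dims: int) -> int:
    """Number of equal-sized cells when each axis is split into bins_per_axis bins."""
    return bins_per_axis ** dims


def edge_for_volume_fraction(fraction: float, dims: int) -> float:
    """Edge length of a sub-cube holding `fraction` of the unit hypercube's volume."""
    return fraction ** (1.0 / dims)


# Assumed setup: 10 bins per axis, and a neighborhood holding 1% of the volume.
for d in (1, 2, 3, 10):
    print(
        f"d={d:>2}: {cells_in_grid(10, d):>14,} cells, "
        f"edge for 1% of volume = {edge_for_volume_fraction(0.01, d):.3f}"
    )
```

In ten dimensions, a cube that holds just 1% of the volume has to span roughly 63% of every axis, so a neighborhood that is "small" by volume is no longer local along any single feature.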

The curse of dimensionality affects various aspects of data analysis. One of the most significant challenges is the increased risk of overfitting. In high-dimensional spaces, models have a higher propensity to fit the noise in the data rather than the underlying true relationships. This can lead to poor generalization and inaccurate predictions when faced with new, unseen data. Additionally, the curse of dimensionality increases the computational complexity of algorithms, making it more time-consuming and resource-intensive to process and analyze data.

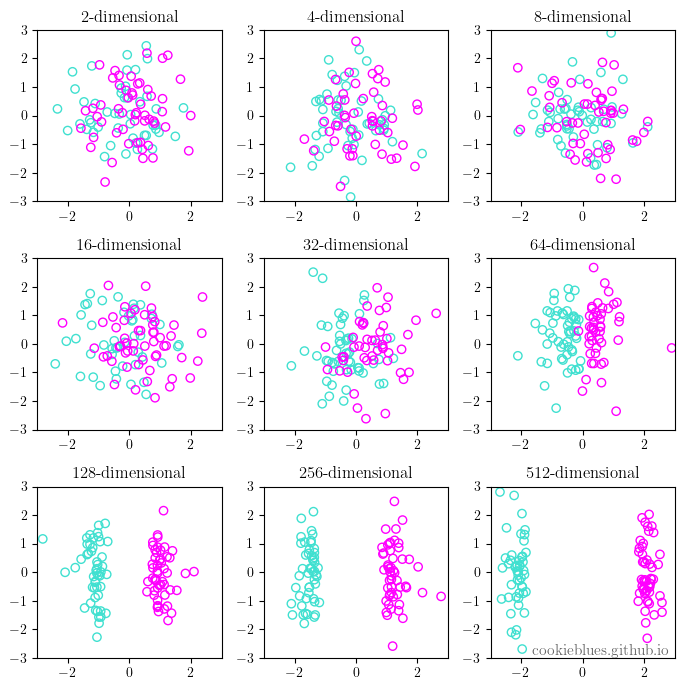

Another consequence of the curse of dimensionality is the difficulty in visualizing and interpreting the data. In low-dimensional spaces, it is relatively easy to plot and comprehend the relationships between variables. However, as the number of dimensions increases, visualization becomes challenging or impossible. Similarly, interpreting the significance and meaning of individual features becomes increasingly complex, making it harder to extract actionable insights from the data.

The Impact of the Curse of Dimensionality on Machine Learning

Machine learning algorithms often rely on large amounts of data to learn patterns and make accurate predictions. However, the curse of dimensionality poses significant challenges in this context. High-dimensional feature spaces can lead to data sparsity, making it difficult for algorithms to identify meaningful patterns. This is particularly true for algorithms that assume some form of local smoothness, such as k-nearest neighbors or kernel-based methods: in high dimensions, a point's nearest neighbors are often almost as far away as its most distant ones, so nearby examples carry little extra information.
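One way to see why neighbor-based methods struggle is to measure how much farther the farthest point is than the nearest one. The following is a minimal sketch, assuming points drawn uniformly at random from the unit hypercube; `relative_contrast` is a hypothetical helper written for this illustration, not a library function.

```python
import math
import random


def relative_contrast(n_points: int, dims: int, seed: int = 0) -> float:
    """(farthest - nearest) / nearest distance from a random query to random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dims)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dims)]
        distances.append(math.dist(query, point))
    return (max(distances) - min(distances)) / min(distances)


# The contrast shrinks as the number of dimensions grows.
for d in (2, 10, 100, 1000):
    print(f"d={d:>4}: relative contrast = {relative_contrast(200, d):.2f}")
```

When the contrast approaches zero, all points sit at nearly the same distance from the query, and ranking them by distance becomes close to arbitrary. Real datasets with strong structure may behave better than this uniform toy case.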

To mitigate the curse of dimensionality in machine learning, various techniques have been developed. One approach is feature selection, where irrelevant or redundant features are identified and removed from the dataset. This helps to reduce the dimensionality and improve the performance of algorithms. Another approach is dimensionality reduction, which involves transforming the original data into a lower-dimensional representation while preserving important information. Principal component analysis (PCA) is commonly used for this purpose, while t-distributed stochastic neighbor embedding (t-SNE) is used mainly to visualize high-dimensional data in two or three dimensions.
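To make the idea of dimensionality reduction concrete, here is a rough sketch of the first step of PCA using only the Python standard library. It finds the single direction of largest variance by power iteration on the covariance matrix, which is one simple way to do it and not how production libraries implement PCA. The helper names and the synthetic data are assumptions made for this example.

```python
import math
import random


def first_principal_component(rows, iterations=100):
    """Return (feature means, unit direction of largest variance) via power iteration."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    # Sample covariance matrix of the centered data (d x d).
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Repeatedly multiply by the covariance matrix and renormalize; the vector
    # converges to the dominant eigenvector unless the start is orthogonal to it.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v


def project(rows, means, direction):
    """Reduce each row to a single coordinate along `direction`."""
    return [
        sum((value - m) * u for value, m, u in zip(row, means, direction))
        for row in rows
    ]


# Synthetic 3-feature data that really varies along one direction, (1, 2, 0).
rng = random.Random(0)
data = []
for _ in range(300):
    t = rng.uniform(-1.0, 1.0)
    data.append([t, 2.0 * t + rng.gauss(0.0, 0.05), rng.gauss(0.0, 0.05)])

means, direction = first_principal_component(data)
reduced = project(data, means, direction)
print("direction:", [round(x, 3) for x in direction])
print("first reduced values:", [round(x, 3) for x in reduced[:3]])
```

Here three features collapse into one coordinate with little loss, because the data was built to lie near a line. On real data, how many components to keep is a judgment call that depends on how much variance each one explains.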

Despite these techniques, high-dimensional datasets remain a challenge in machine learning. Researchers continue to explore novel algorithms and methodologies to tackle the curse of dimensionality and improve the performance of models in such scenarios.

The Curse of Dimensionality in Data Science

The curse of dimensionality has a significant impact on data science, affecting data preprocessing, analysis, and interpretation. In data preprocessing, careful feature engineering becomes crucial to keep the number of features under control. Routine steps such as scaling, normalization, and handling missing values leave the dimensionality unchanged, but encoding choices matter: one-hot encoding a categorical variable with many levels adds one feature per level.

When it comes to data analysis, the curse of dimensionality necessitates careful consideration of modeling techniques. Machine learning algorithms that perform well in low-dimensional spaces may not be suitable for high-dimensional datasets. Therefore, data scientists need to be familiar with methods that tend to cope better with many features, such as regularized linear models, support vector machines, or ensemble methods like random forests, and should validate any choice on held-out data.

Lastly, the curse of dimensionality impacts the interpretation of results and the extraction of actionable insights. In high-dimensional spaces, relationships between variables may become more complex, and the importance of individual features can be difficult to assess. Data scientists must employ advanced visualization techniques, statistical tests, and domain expertise to navigate and extract meaningful insights from high-dimensional datasets.

Key Takeaways: Is the Curse of Dimensionality a Real Thing?

- The curse of dimensionality is a real phenomenon in mathematics and statistics.

- It refers to the challenges and problems that arise when dealing with high-dimensional data.

- As the number of dimensions increases, the amount of data required to represent the space accurately grows exponentially.

- The curse of dimensionality can lead to difficulties in data analysis, machine learning, and optimization.

- Techniques like dimensionality reduction and feature selection can help mitigate the effects of the curse of dimensionality.

Frequently Asked Questions

The curse of dimensionality is a concept in mathematics and computer science that refers to the challenges and limitations that arise when dealing with high-dimensional data. It is a real phenomenon that can have significant implications for various fields, such as machine learning, data analysis, and optimization. Here are some common questions about the curse of dimensionality:

What is the curse of dimensionality?

The curse of dimensionality is the term used to describe the problems that arise when working with data in high-dimensional spaces. As the number of dimensions increases, the amount of data required to maintain the same level of representation or coverage grows exponentially. This can lead to difficulties in understanding and interpreting the data, as well as in performing calculations or making accurate predictions.

For example, in a 2D space, we can visualize data points on a plane, easily identify relationships, and measure distances between points. However, as the number of dimensions increases, visualization becomes challenging, and it becomes difficult to discern meaningful patterns or make reliable predictions based on the data.

How does the curse of dimensionality affect machine learning?

The curse of dimensionality has a significant impact on machine learning algorithms. As the number of features or variables increases, the amount of training data required to build accurate and reliable models also increases. High-dimensional data sets can lead to overfitting, where the model performs well on the training data but fails to generalize to new, unseen data. This is because in high-dimensional spaces, the training data becomes more sparse, making it difficult to capture the true underlying patterns or relationships.
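A small experiment can illustrate how extra features hurt generalization when the training set stays the same size. The sketch below assumes a synthetic two-class problem with one informative feature and a varying number of pure-noise features, and uses a hand-written 1-nearest-neighbor classifier. All names and parameter values here are illustrative choices.

```python
import math
import random


def make_dataset(n_rows, n_noise, rng):
    """One informative feature (class mean -1 or +1) plus `n_noise` pure-noise features."""
    rows, labels = [], []
    for i in range(n_rows):
        label = i % 2
        signal = rng.gauss(1.0 if label else -1.0, 0.5)
        rows.append([signal] + [rng.gauss(0.0, 1.0) for _ in range(n_noise)])
        labels.append(label)
    return rows, labels


def one_nn_accuracy(n_noise, n_train=100, n_test=100, seed=0):
    """Test accuracy of a 1-nearest-neighbor classifier on the synthetic data."""
    rng = random.Random(seed)
    train_x, train_y = make_dataset(n_train, n_noise, rng)
    test_x, test_y = make_dataset(n_test, n_noise, rng)
    correct = 0
    for x, y in zip(test_x, test_y):
        # Index of the training row closest to x in Euclidean distance.
        nearest = min(range(n_train), key=lambda i: math.dist(x, train_x[i]))
        correct += train_y[nearest] == y
    return correct / n_test


for n_noise in (0, 10, 100):
    print(f"{n_noise:>3} noise features: accuracy = {one_nn_accuracy(n_noise):.2f}")
```

The informative feature never changes, yet accuracy should drop toward chance as noise features are added, because the noise dominates the distance calculation. The classifier still scores perfectly on its own training points, which is the gap between training and test performance that the text describes.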

To mitigate the curse of dimensionality in machine learning, techniques such as feature selection, dimensionality reduction, and regularization are often employed. Feature selection and dimensionality reduction lower the number of features or dimensions, while regularization penalizes model complexity; all three help the model focus on the most relevant information and improve generalization performance.
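As a rough illustration of feature selection, the sketch below ranks features by the absolute value of their Pearson correlation with the label and keeps the top few. This is only one simple filter among many, and it can miss features that matter only in combination. The function names and the synthetic data are assumptions for this example.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def select_top_features(rows, labels, k):
    """Indices of the k features most correlated (in absolute value) with the label."""
    n_features = len(rows[0])
    scores = [
        abs(pearson([row[j] for row in rows], labels)) for j in range(n_features)
    ]
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return ranked[:k]


# Synthetic data: feature 0 tracks the label, features 1..9 are pure noise.
rng = random.Random(0)
labels = [i % 2 for i in range(200)]
rows = [
    [label + rng.gauss(0.0, 0.3)] + [rng.gauss(0.0, 1.0) for _ in range(9)]
    for label in labels
]

kept = select_top_features(rows, labels, k=1)
reduced_rows = [[row[j] for j in kept] for row in rows]
print("kept feature indices:", kept)
```

To avoid an optimistic bias, the selection should be computed on the training data only and then applied unchanged to any validation or test data.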

How does the curse of dimensionality impact data analysis?

The curse of dimensionality poses challenges in data analysis, particularly in the exploration and interpretation of high-dimensional data sets. As the number of dimensions increases, it becomes more difficult to visualize the data, identify patterns, and understand the relationships between variables. Traditional data analysis techniques, such as scatter plots or correlation matrices, may not be effective in high-dimensional spaces.

To tackle the curse of dimensionality in data analysis, various visualization and dimensionality reduction techniques can be employed. Dimensionality reduction methods, such as principal component analysis (PCA) or t-distributed stochastic neighbor embedding (t-SNE), help to project the data into lower-dimensional spaces while preserving the most important structural information. This enables analysts to gain insights, identify clusters or groups, and make informed decisions based on the reduced-dimensional representation of the data.

What are the implications of the curse of dimensionality in optimization?

The curse of dimensionality can have significant implications in optimization problems, where the goal is to find the best solution among a vast number of possible options. As the number of decision variables increases, the search space grows exponentially, making it extremely challenging to explore the entire solution space. This can lead to inefficiencies, as traditional optimization algorithms may struggle to converge or find optimal solutions in high-dimensional spaces.
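The growth of the search space is easy to observe with an exhaustive grid search. This is a minimal sketch, assuming a toy objective (the sum of squares, with its minimum at the origin) and five candidate values per decision variable. `grid_search` and `sphere` are names chosen for this illustration.

```python
import itertools


def grid_search(objective, axis_values, dims):
    """Evaluate `objective` at every grid point; return (best_point, best_value, n_evals)."""
    best_point, best_value, n_evals = None, float("inf"), 0
    for point in itertools.product(axis_values, repeat=dims):
        value = objective(point)
        n_evals += 1
        if value < best_value:
            best_point, best_value = point, value
    return best_point, best_value, n_evals


def sphere(point):
    """Toy objective with its minimum, 0, at the origin."""
    return sum(x * x for x in point)


axis_values = [-2, -1, 0, 1, 2]
for d in (1, 2, 4, 6):
    point, value, n_evals = grid_search(sphere, axis_values, d)
    print(f"d={d}: {n_evals:>6} evaluations, best value {value}")
```

With five values per variable, the number of evaluations is 5 raised to the number of variables, so every added variable multiplies the work by five. At a few dozen variables, exhaustive search of even this coarse grid is out of reach.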

To address the curse of dimensionality in optimization, specialized techniques and algorithms, such as evolutionary algorithms, swarm intelligence, or surrogate modeling, can be employed. These methods aim to efficiently explore the solution space and identify promising regions or solutions by leveraging principles of adaptive search, parallelism, or approximation. Such approaches can improve optimization performance in high-dimensional problems, although they typically trade any guarantee of finding the global optimum for a good solution found in reasonable time.

Can the curse of dimensionality be completely eliminated?

The curse of dimensionality is an inherent limitation when working with high-dimensional data; however, it can be mitigated or managed through various techniques. By employing dimensionality reduction methods, such as feature selection or projection techniques, the number of dimensions can be reduced while retaining the most relevant information. Additionally, algorithmic approaches, such as regularized models or specialized optimization techniques, can help to handle high-dimensional data more effectively.

It is important to note that while these techniques can help alleviate the challenges associated with the curse of dimensionality, they may come with their own trade-offs or limitations. The choice of the appropriate technique depends on the specific problem and the nature of the data. It is crucial to carefully analyze and understand the data and select the most suitable approaches to mitigate the curse of dimensionality in each scenario.

Summary

So, is the curse of dimensionality a real thing? Well, it can make things tricky for sure. When we have a lot of features or variables to consider, it can be harder to analyze and make accurate predictions or decisions. The curse of dimensionality refers to the challenges that arise as we increase the number of dimensions in our data. It can cause problems like overfitting, computational complexity, and increased sparsity of data. But don’t worry, there are techniques like dimensionality reduction that can help us tackle these issues and make sense of high-dimensional data. Understanding the curse of dimensionality is important, and with the right tools and strategies, we can overcome its challenges.

In conclusion, while the curse of dimensionality may seem daunting, it is possible to navigate through it. By simplifying our data and employing appropriate techniques, we can unlock the insights hidden within high-dimensional datasets. So, don’t let the curse scare you away from exploring the fascinating world of data analysis and machine learning! Remember, with the right approach, the curse of dimensionality can be tamed.